Siqiang Luo

MoSE: Unveiling Structural Patterns in Graphs via Mixture of Subgraph Experts

Sep 11, 2025

Abstract:While graph neural networks (GNNs) have achieved great success in learning from graph-structured data, their reliance on local, pairwise message passing restricts their ability to capture complex, high-order subgraph patterns, leading to insufficient structural expressiveness. Recent efforts have attempted to enhance structural expressiveness by integrating random walk kernels into GNNs. However, these methods are inherently designed for graph-level tasks, which limits their applicability to other downstream tasks such as node classification. Moreover, their fixed kernel configurations hinder the model's flexibility in capturing diverse subgraph structures. To address these limitations, this paper proposes a novel Mixture of Subgraph Experts (MoSE) framework for flexible and expressive subgraph-based representation learning across diverse graph tasks. Specifically, MoSE extracts informative subgraphs via anonymous walks and dynamically routes them to specialized experts based on structural semantics, enabling the model to capture diverse subgraph patterns with improved flexibility and interpretability. We further provide a theoretical analysis of MoSE's expressivity within the Subgraph Weisfeiler-Lehman (SWL) Test, proving that it is more powerful than SWL. Extensive experiments, together with visualizations of learned subgraph experts, demonstrate that MoSE not only outperforms competitive baselines but also provides interpretable insights into structural patterns learned by the model.

When Deepfake Detection Meets Graph Neural Network: a Unified and Lightweight Learning Framework

Aug 07, 2025

Abstract:The proliferation of generative video models has made detecting AI-generated and manipulated videos an urgent challenge. Existing detection approaches often fail to generalize across diverse manipulation types due to their reliance on isolated spatial, temporal, or spectral information, and typically require large models to perform well. This paper introduces SSTGNN, a lightweight Spatial-Spectral-Temporal Graph Neural Network framework that represents videos as structured graphs, enabling joint reasoning over spatial inconsistencies, temporal artifacts, and spectral distortions. SSTGNN incorporates learnable spectral filters and temporal differential modeling into a graph-based architecture, capturing subtle manipulation traces more effectively. Extensive experiments on diverse benchmark datasets demonstrate that SSTGNN not only achieves superior performance in both in-domain and cross-domain settings, but also offers strong robustness against unseen manipulations. Remarkably, SSTGNN accomplishes these results with up to 42.4$\times$ fewer parameters than state-of-the-art models, making it highly lightweight and scalable for real-world deployment.

DHIL-GT: Scalable Graph Transformer with Decoupled Hierarchy Labeling

Dec 06, 2024

Abstract:Graph Transformer (GT) has recently emerged as a promising neural network architecture for learning graph-structured data. However, its global attention mechanism with quadratic complexity concerning the graph scale prevents wider application to large graphs. While current methods attempt to enhance GT scalability by altering model architecture or encoding hierarchical graph data, our analysis reveals that these models still suffer from the computational bottleneck related to graph-scale operations. In this work, we target the GT scalability issue and propose DHIL-GT, a scalable Graph Transformer that simplifies network learning by fully decoupling the graph computation to a separate stage in advance. DHIL-GT effectively retrieves hierarchical information by exploiting the graph labeling technique, as we show that the graph label hierarchy is more informative than plain adjacency by offering global connections while promoting locality, and is particularly suitable for handling complex graph patterns such as heterophily. We further design subgraph sampling and positional encoding schemes for precomputing model input on top of graph labels in an end-to-end manner. The training stage thus favorably removes graph-related computations, leading to ideal mini-batch capability and GPU utilization. Notably, the precomputation and training processes of DHIL-GT achieve complexities linear to the number of graph edges and nodes, respectively. Extensive experiments demonstrate that DHIL-GT is efficient in terms of computational boost and mini-batch capability over existing scalable Graph Transformer designs on large-scale benchmarks, while achieving top-tier effectiveness on both homophilous and heterophilous graphs.

Towards Graph Foundation Models: The Perspective of Zero-shot Reasoning on Knowledge Graphs

Oct 16, 2024

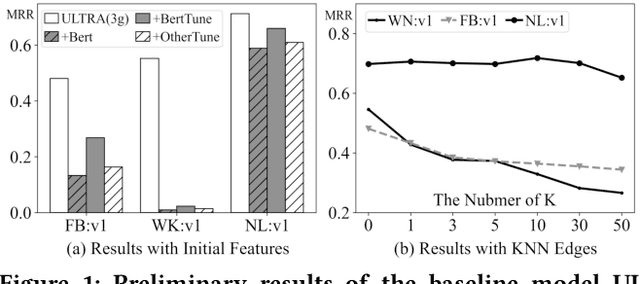

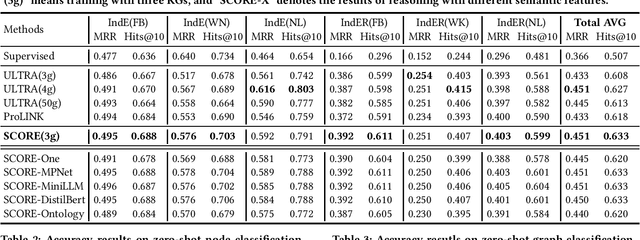

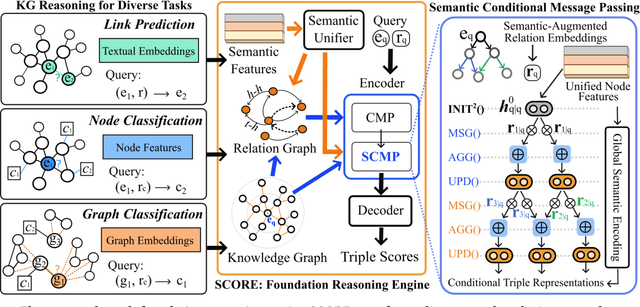

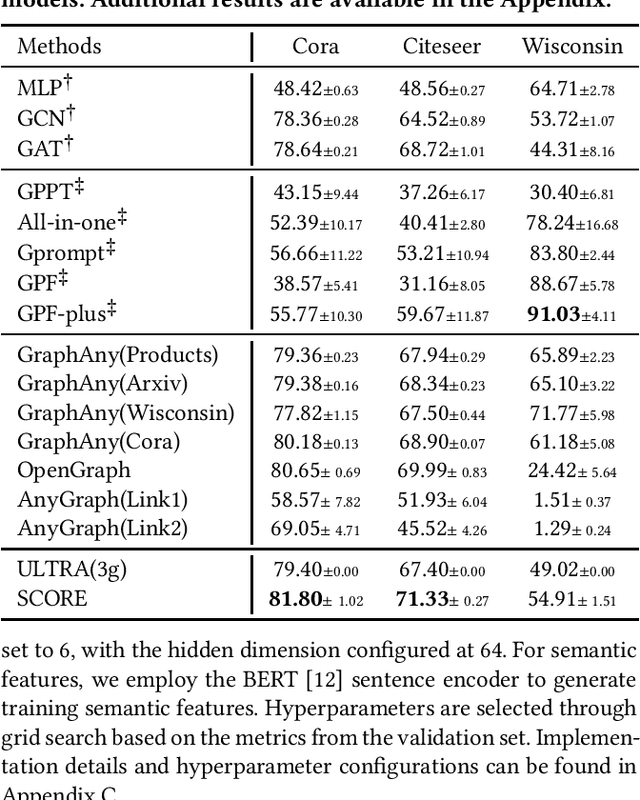

Abstract:Inspired by the success of artificial general intelligence, there is a trend towards developing Graph Foundation Models that excel in generalization across various graph tasks and domains. However, current models often require extensive training or fine-tuning to capture structural and semantic insights on new graphs, which limits their versatility. In this work, we explore graph foundation models from the perspective of zero-shot reasoning on Knowledge Graphs (KGs). Our focus is on utilizing KGs as a unified topological structure to tackle diverse tasks, while addressing semantic isolation challenges in KG reasoning to effectively integrate diverse semantic and structural features. This brings us new methodological insights into KG reasoning, as well as high generalizability towards foundation models in practice. Methodologically, we introduce SCORE, a unified graph reasoning framework that effectively generalizes diverse graph tasks using zero-shot learning. At the core of SCORE is semantic conditional message passing, a technique designed to capture both structural and semantic invariances in graphs, with theoretical backing for its expressive power. Practically, we evaluate the zero-shot reasoning capability of SCORE using 38 diverse graph datasets, covering node-level, link-level, and graph-level tasks across multiple domains. Our experiments reveal a substantial performance improvement over prior foundation models and supervised baselines, highlighting the efficacy and adaptability of our approach.

Benchmarking Spectral Graph Neural Networks: A Comprehensive Study on Effectiveness and Efficiency

Jun 14, 2024

Abstract:With the recent advancements in graph neural networks (GNNs), spectral GNNs have received increasing popularity by virtue of their specialty in capturing graph signals in the frequency domain, demonstrating promising capability in specific tasks. However, few systematic studies have been conducted on assessing their spectral characteristics. This emerging family of models also varies in terms of designs and settings, leading to difficulties in comparing their performance and deciding on the suitable model for specific scenarios, especially for large-scale tasks. In this work, we extensively benchmark spectral GNNs with a focus on the frequency perspective. We analyze and categorize over 30 GNNs with 27 corresponding filters. Then, we implement these spectral models under a unified framework with dedicated graph computations and efficient training schemes. Thorough experiments are conducted on the spectral models with inclusive metrics on effectiveness and efficiency, offering practical guidelines on evaluating and selecting spectral GNNs with desirable performance. Our implementation enables application on larger graphs with comparable performance and less overhead, which is available at: https://github.com/gdmnl/Spectral-GNN-Benchmark.

Unifews: Unified Entry-Wise Sparsification for Efficient Graph Neural Network

Mar 20, 2024

Abstract:Graph Neural Networks (GNNs) have shown promising performance in various graph learning tasks, but at the cost of resource-intensive computations. The primary overhead of GNN update stems from graph propagation and weight transformation, both involving operations on graph-scale matrices. Previous studies attempt to reduce the computational budget by leveraging graph-level or network-level sparsification techniques, resulting in downsized graph or weights. In this work, we propose Unifews, which unifies the two operations in an entry-wise manner considering individual matrix elements, and conducts joint edge-weight sparsification to enhance learning efficiency. The entry-wise design of Unifews enables adaptive compression across GNN layers with progressively increased sparsity, and is applicable to a variety of architectural designs with on-the-fly operation simplification. Theoretically, we establish a novel framework to characterize sparsified GNN learning in view of a graph optimization process, and prove that Unifews effectively approximates the learning objective with bounded error and reduced computational load. We conduct extensive experiments to evaluate the performance of our method in diverse settings. Unifews is advantageous in jointly removing more than 90% of edges and weight entries with comparable or better accuracy than baseline models. The sparsification offers remarkable efficiency improvements including 10-20x matrix operation reduction and up to 100x acceleration in graph propagation time for the largest graph at the billion-edge scale.

LLM as Prompter: Low-resource Inductive Reasoning on Arbitrary Knowledge Graphs

Feb 19, 2024

Abstract:Knowledge Graph (KG) inductive reasoning, which aims to infer missing facts from new KGs that are not seen during training, has been widely adopted in various applications. One critical challenge of KG inductive reasoning is handling low-resource scenarios with scarcity in both textual and structural aspects. In this paper, we attempt to address this challenge with Large Language Models (LLMs). Particularly, we utilize the state-of-the-art LLMs to generate a graph-structural prompt to enhance the pre-trained Graph Neural Networks (GNNs), which brings us new methodological insights into the KG inductive reasoning methods, as well as high generalizability in practice. On the methodological side, we introduce a novel pretraining and prompting framework ProLINK, designed for low-resource inductive reasoning across arbitrary KGs without requiring additional training. On the practical side, we experimentally evaluate our approach on 36 low-resource KG datasets and find that ProLINK outperforms previous methods in three-shot, one-shot, and zero-shot reasoning tasks, exhibiting average performance improvements by 20%, 45%, and 147%, respectively. Furthermore, ProLINK demonstrates strong robustness for various LLM promptings as well as full-shot scenarios.

Learning from Graphs with Heterophily: Progress and Future

Feb 01, 2024

Abstract:Graphs are structured data that model complex relations between real-world entities. Heterophilous graphs, where linked nodes are prone to be with different labels or dissimilar features, have recently attracted significant attention and found many applications. Meanwhile, increasing efforts have been made to advance learning from heterophilous graphs. Although there exist surveys on the relevant topic, they focus on heterophilous GNNs, which are only sub-topics of heterophilous graph learning. In this survey, we comprehensively overview existing works on learning from graphs with heterophily. First, we collect over 180 publications and introduce the development of this field. Then, we systematically categorize existing methods based on a hierarchical taxonomy including learning strategies, model architectures and practical applications. Finally, we discuss the primary challenges of existing studies and highlight promising avenues for future research. More publication details and corresponding open-source codes can be accessed and will be continuously updated at our repositories: https://github.com/gongchenghua/Awesome-Survey-Graphs-with-Heterophily.

Label Propagation for Graph Label Noise

Oct 25, 2023

Abstract:Label noise is a common challenge in large datasets, as it can significantly degrade the generalization ability of deep neural networks. Most existing studies focus on noisy labels in computer vision; however, graph models encompass both node features and graph topology as input, and become more susceptible to label noise through message-passing mechanisms. Recently, only a few works have been proposed to tackle the label noise on graphs. One major limitation is that they assume the graph is homophilous and the labels are smoothly distributed. Nevertheless, real-world graphs may contain varying degrees of heterophily or even be heterophily-dominated, leading to the inadequacy of current methods. In this paper, we study graph label noise in the context of arbitrary heterophily, with the aim of rectifying noisy labels and assigning labels to previously unlabeled nodes. We begin by conducting two empirical analyses to explore the impact of graph homophily on graph label noise. Following observations, we propose a simple yet efficient algorithm, denoted as LP4GLN. Specifically, LP4GLN is an iterative algorithm with three steps: (1) reconstruct the graph to recover the homophily property, (2) utilize label propagation to rectify the noisy labels, (3) select high-confidence labels to retain for the next iteration. By iterating these steps, we obtain a set of correct labels, ultimately achieving high accuracy in the node classification task. The theoretical analysis is also provided to demonstrate its remarkable denoising "effect". Finally, we conduct experiments on 10 benchmark datasets under varying graph heterophily levels and noise types, comparing the performance of LP4GLN with 7 typical baselines. Our results illustrate the superior performance of the proposed LP4GLN.

Learning to Optimize LSM-trees: Towards A Reinforcement Learning based Key-Value Store for Dynamic Workloads

Aug 14, 2023

Abstract:LSM-trees are widely adopted as the storage backend of key-value stores. However, optimizing the system performance under dynamic workloads has not been sufficiently studied or evaluated in previous work. To fill the gap, we present RusKey, a key-value store with the following new features: (1) RusKey is a first attempt to orchestrate LSM-tree structures online to enable robust performance under the context of dynamic workloads; (2) RusKey is the first study to use Reinforcement Learning (RL) to guide LSM-tree transformations; (3) RusKey includes a new LSM-tree design, named FLSM-tree, for an efficient transition between different compaction policies -- the bottleneck of dynamic key-value stores. We justify the superiority of the new design with theoretical analysis; (4) RusKey requires no prior workload knowledge for system adjustment, in contrast to state-of-the-art techniques. Experiments show that RusKey exhibits strong performance robustness in diverse workloads, achieving up to 4x better end-to-end performance than the RocksDB system under various settings.
