Shivam Chauhan

Who Gets Heard? Rethinking Fairness in AI for Music Systems

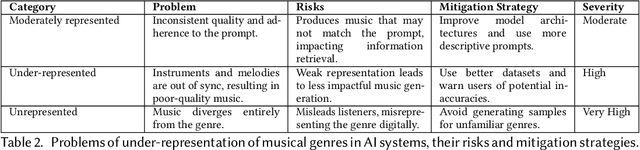

Nov 08, 2025

Abstract: In recent years, the music research community has examined the risks of AI models for music, with generative AI models in particular raising concerns about copyright, deepfakes, and transparency. In our work, we raise concerns about cultural and genre biases in AI for music systems (music-AI systems), which affect stakeholders including creators, distributors, and listeners, shaping representation in AI for music. These biases can misrepresent marginalized traditions, especially from the Global South, producing inauthentic outputs (e.g., distorted ragas) that reduce creators' trust in these systems. Such harms risk reinforcing biases, limiting creativity, and contributing to cultural erasure. To address this, we offer recommendations at the dataset, model, and interface levels in music-AI systems.

Llama-3-Nanda-10B-Chat: An Open Generative Large Language Model for Hindi

Apr 08, 2025

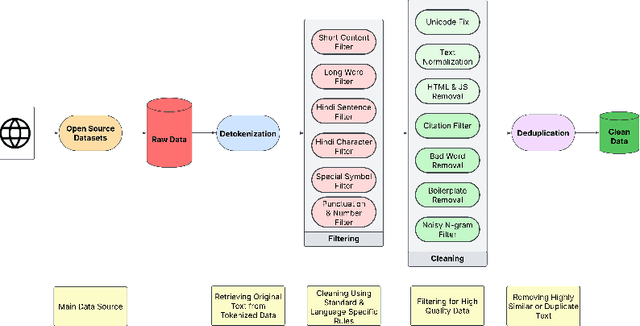

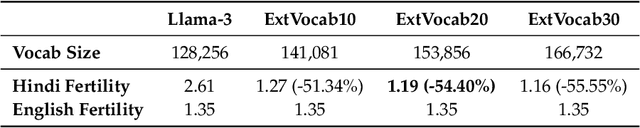

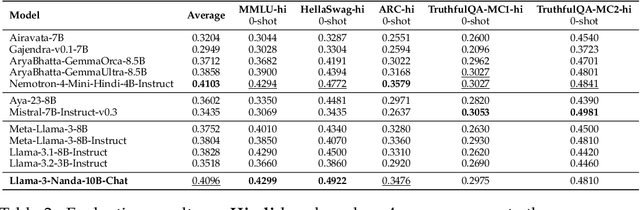

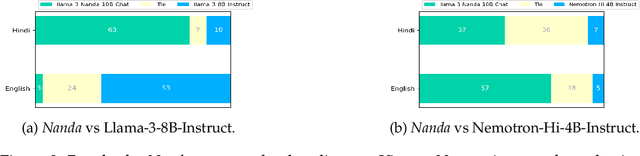

Abstract: Developing high-quality large language models (LLMs) for moderately resourced languages presents unique challenges in data availability, model adaptation, and evaluation. We introduce Llama-3-Nanda-10B-Chat, or Nanda for short, a state-of-the-art Hindi-centric instruction-tuned generative LLM, designed to push the boundaries of open-source Hindi language models. Built upon Llama-3-8B, Nanda incorporates continuous pre-training with expanded transformer blocks, leveraging the Llama Pro methodology. A key challenge was the limited availability of high-quality Hindi text data; we addressed this through rigorous data curation, augmentation, and strategic bilingual training, balancing Hindi and English corpora to optimize cross-linguistic knowledge transfer. With 10 billion parameters, Nanda stands among the top-performing open-source Hindi and multilingual models of similar scale, demonstrating significant advantages over many existing models. We provide an in-depth discussion of training strategies, fine-tuning techniques, safety alignment, and evaluation metrics, demonstrating how these approaches enabled Nanda to achieve state-of-the-art results. By open-sourcing Nanda, we aim to advance research in Hindi LLMs and support a wide range of real-world applications across academia, industry, and public services.

Music for All: Exploring Multicultural Representations in Music Generation Models

Feb 12, 2025

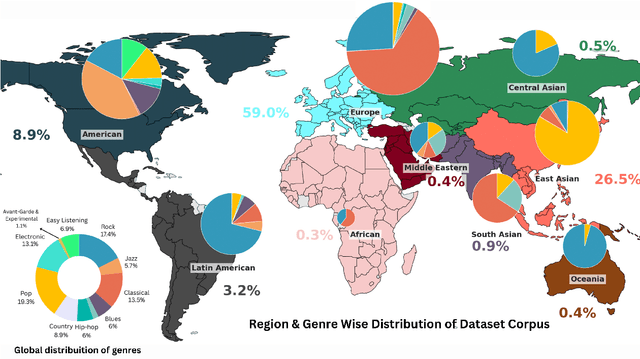

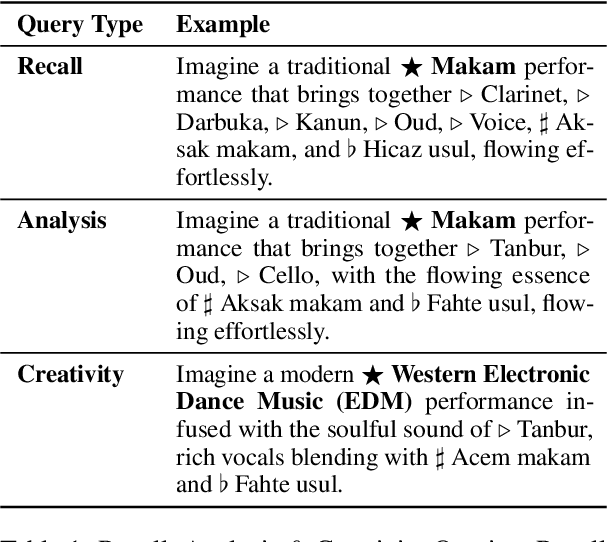

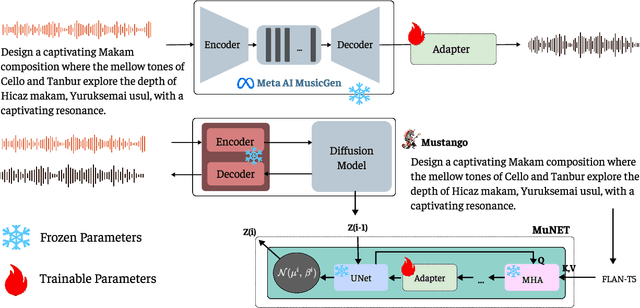

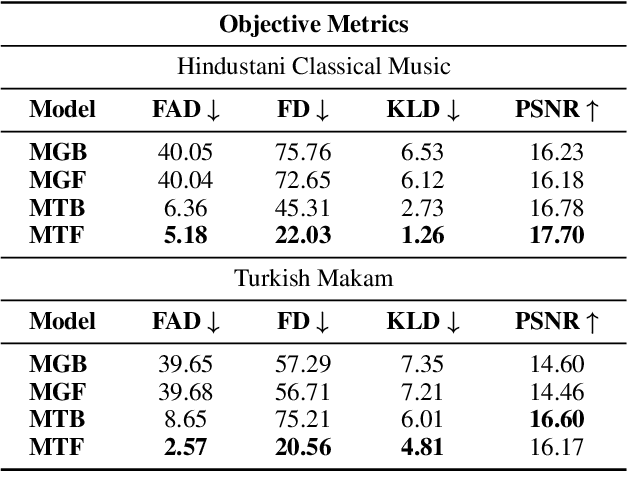

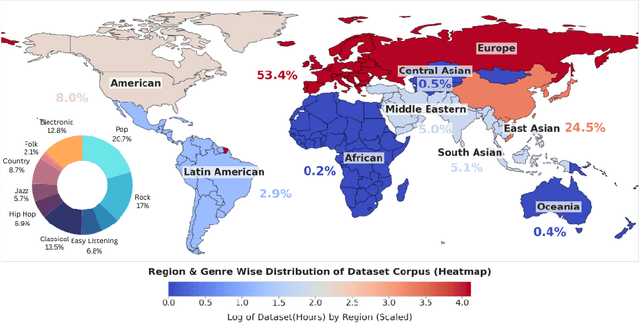

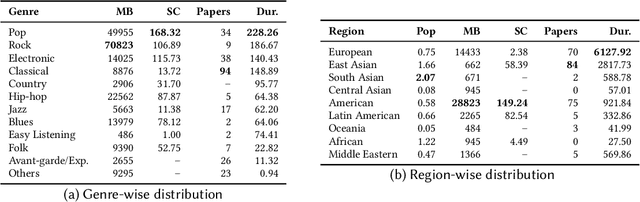

Abstract: The advent of Music-Language Models has greatly enhanced the automatic music generation capability of AI systems, but these models remain limited in their coverage of the world's musical genres and cultures. We present a study of the datasets and research papers for music generation and quantify the bias and under-representation of genres. We find that only 5.7% of the total hours of existing music datasets come from non-Western genres, which naturally leads to disparate performance of the models across genres. We then investigate the efficacy of Parameter-Efficient Fine-Tuning (PEFT) techniques in mitigating this bias. Our experiments with two popular models, MusicGen and Mustango, on two underrepresented non-Western music traditions, Hindustani Classical and Turkish Makam music, highlight the promise as well as the non-triviality of cross-genre adaptation of music through small datasets, implying the need for more equitable baseline music-language models that are designed for cross-cultural transfer learning.

Missing Melodies: AI Music Generation and its "Nearly" Complete Omission of the Global South

Dec 05, 2024

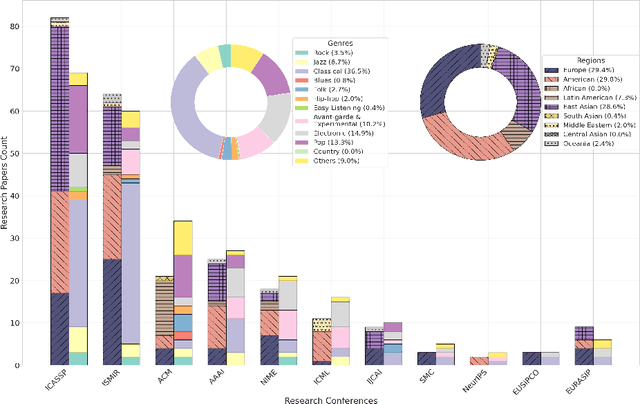

Abstract: Recent advances in generative AI have sparked renewed interest and expanded possibilities for music generation. However, the performance and versatility of these systems across musical genres are heavily influenced by the availability of training data. We conducted an extensive analysis of over one million hours of audio datasets used in AI music generation research and manually reviewed more than 200 papers from eleven prominent AI and music conferences and organizations (AAAI, ACM, EUSIPCO, EURASIP, ICASSP, ICML, IJCAI, ISMIR, NeurIPS, NIME, SMC) to identify a critical gap in the fair representation and inclusion of the musical genres of the Global South in AI research. Our findings reveal a stark imbalance: approximately 86% of the total dataset hours and over 93% of researchers focus primarily on music from the Global North. Although around 40% of these datasets include some form of non-Western music, genres from the Global South account for only 14.6% of the data. Furthermore, approximately 51% of the papers surveyed concentrate on symbolic music generation, a method that often fails to capture the cultural nuances inherent in music from regions such as South Asia, the Middle East, and Africa. As AI increasingly shapes the creation and dissemination of music, the significant underrepresentation of music genres in datasets and research presents a serious threat to global musical diversity. We also propose some important steps to mitigate these risks and foster a more inclusive future for AI-driven music generation.

Detecting key Soccer match events to create highlights using Computer Vision

Apr 06, 2022

Abstract: The research and data science community has long been interested in developing automatic systems for detecting key events in video. Sports video analytics receives special attention in this field because it could help identify key events during a match and inform strategy for future games. In this paper, we choose football (soccer) and build a computer vision model that identifies important events in a match video in order to create highlights of the match. We built models based on the Faster RCNN and YoloV5 architectures and found that, for the amount of training data we used, Faster RCNN detected match events better than YoloV5, though it was much slower. Within Faster RCNN, using ResNet50 as the base model gave a better class accuracy of 95.5%, compared to 92% with VGG16 as the base model, completely outperforming YoloV5 on our training dataset. We tested on an original 23-minute video, which our model reduced to 4 minutes 50 seconds of highlights capturing almost all important events in the match.
