Shinji Ito

Fast EXP3 Algorithms

Dec 12, 2025

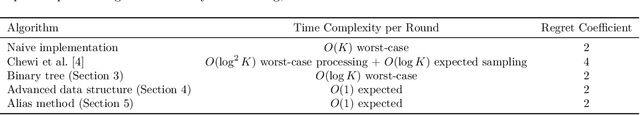

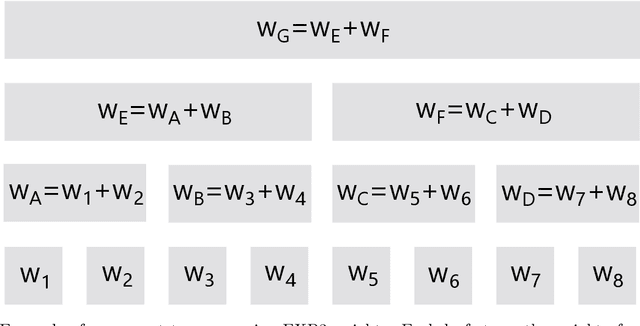

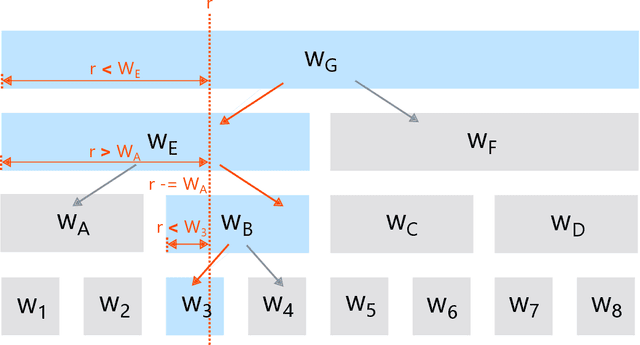

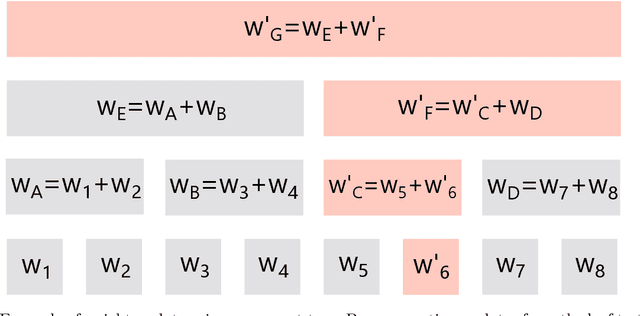

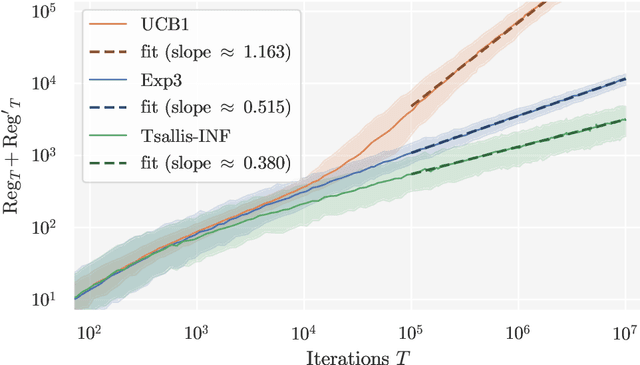

Abstract: We point out that EXP3 can be implemented in constant time per round, propose more practical algorithms, and analyze the trade-offs between the regret bounds and time complexities of these algorithms.
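
For context, the sketch below shows the textbook form of EXP3 with importance-weighted loss estimates, which recomputes all arm probabilities and therefore costs time linear in the number of arms per round. It is a baseline illustration only, not the constant-time implementation the abstract refers to. The function name `exp3`, the `loss_fn(t, arm)` callback, and the learning-rate choice are assumptions made for this example.

```python
import math
import random


def exp3(loss_fn, n_arms, n_rounds, seed=0):
    """Textbook EXP3 for losses in [0, 1]; O(n_arms) work per round."""
    rng = random.Random(seed)
    # A standard fixed learning rate (assumed here for illustration).
    eta = math.sqrt(2.0 * math.log(n_arms) / (n_rounds * n_arms))
    cum_est = [0.0] * n_arms  # importance-weighted cumulative loss estimates
    pulls = [0] * n_arms      # how often each arm was played
    for t in range(n_rounds):
        # Exponential weights; shift by the minimum for numerical stability.
        base = min(cum_est)
        weights = [math.exp(-eta * (c - base)) for c in cum_est]
        total = sum(weights)
        probs = [w / total for w in weights]
        arm = rng.choices(range(n_arms), weights=probs)[0]
        loss = loss_fn(t, arm)  # only the played arm's loss is observed
        cum_est[arm] += loss / probs[arm]  # unbiased loss estimate
        pulls[arm] += 1
    return pulls


# Hypothetical environment: arm 2 always has loss 0, all others loss 1.
pulls = exp3(lambda t, arm: 0.0 if arm == 2 else 1.0, n_arms=4, n_rounds=2000)
```

The per-round normalization over all arms is the step that makes this version linear-time.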

Reinforcement Learning from Adversarial Preferences in Tabular MDPs

Jul 15, 2025

Abstract: We introduce a new framework of episodic tabular Markov decision processes (MDPs) with adversarial preferences, which we refer to as preference-based MDPs (PbMDPs). Unlike standard episodic MDPs with adversarial losses, where the numerical value of the loss is directly observed, in PbMDPs the learner instead observes preferences between two candidate arms, which represent the choices being compared. In this work, we focus specifically on the setting where the reward functions are determined by Borda scores. We begin by establishing a regret lower bound for PbMDPs with Borda scores. As a preliminary step, we present a simple instance to prove a lower bound of $\Omega(\sqrt{HSAT})$ for episodic MDPs with adversarial losses, where $H$ is the number of steps per episode, $S$ is the number of states, $A$ is the number of actions, and $T$ is the number of episodes. Leveraging this construction, we then derive a regret lower bound of $\Omega( (H^2 S K)^{1/3} T^{2/3} )$ for PbMDPs with Borda scores, where $K$ is the number of arms. Next, we develop algorithms that achieve a regret bound of order $T^{2/3}$. We first propose a global optimization approach based on online linear optimization over the set of all occupancy measures, achieving a regret bound of $\tilde{O}((H^2 S^2 K)^{1/3} T^{2/3} )$ under known transitions. However, this approach suffers from suboptimal dependence on the potentially large number of states $S$ and computational inefficiency. To address this, we propose a policy optimization algorithm whose regret is roughly bounded by $\tilde{O}( (H^6 S K^5)^{1/3} T^{2/3} )$ under known transitions, and further extend the result to the unknown-transition setting.
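
As a small illustration of the Borda scores mentioned above, the sketch below uses a definition common in the dueling-bandit literature: the Borda score of an arm is its average probability of being preferred over each other arm. The paper's exact definition in the PbMDP setting may differ (for example, it may depend on the state and step), and the matrix `pref` is made-up example data.

```python
def borda_scores(pref):
    """Average win probability of each arm against every other arm.

    pref[i][j] is the probability that arm i is preferred to arm j.
    """
    k = len(pref)
    return [
        sum(pref[i][j] for j in range(k) if j != i) / (k - 1)
        for i in range(k)
    ]


# Hypothetical 3-arm preference matrix (pref[i][j] + pref[j][i] = 1).
pref = [
    [0.5, 0.6, 0.9],
    [0.4, 0.5, 0.7],
    [0.1, 0.3, 0.5],
]
scores = borda_scores(pref)  # approximately [0.75, 0.55, 0.2]
```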

Bandit Max-Min Fair Allocation

May 08, 2025

Abstract: In this paper, we study a new decision-making problem called the bandit max-min fair allocation (BMMFA) problem. The goal of this problem is to maximize the minimum utility among agents with additive valuations by repeatedly assigning indivisible goods to them. One key feature of this problem is that each agent's valuation for each item can only be observed through semi-bandit feedback, while existing work assumes that the item values are provided at the beginning of each round. Another key feature is that the algorithm's reward function is not additive with respect to rounds, unlike most bandit-setting problems. Our first contribution is to propose an algorithm that has an asymptotic regret bound of $O(m\sqrt{T}\ln T/n + m\sqrt{T \ln(mnT)})$, where $n$ is the number of agents, $m$ is the number of items, and $T$ is the time horizon. This is based on a novel combination of bandit techniques and a resource allocation algorithm studied in the literature on competitive analysis. Our second contribution is to provide a regret lower bound of $\Omega(m\sqrt{T}/n)$. When $T$ is sufficiently large compared to $n$, the gap between the upper and lower bounds is a logarithmic factor of $T$.

Optimal Regret of Bernoulli Bandits under Global Differential Privacy

May 08, 2025

Abstract: As sequential learning algorithms are increasingly applied to real life, ensuring data privacy while maintaining their utilities emerges as a timely question. In this context, regret minimisation in stochastic bandits under $\epsilon$-global Differential Privacy (DP) has been widely studied. Unlike bandits without DP, there is a significant gap between the best-known regret lower and upper bounds in this setting, though they "match" in order. Thus, we revisit the regret lower and upper bounds of $\epsilon$-global DP algorithms for Bernoulli bandits and improve both. First, we prove a tighter regret lower bound involving a novel information-theoretic quantity characterising the hardness of $\epsilon$-global DP in stochastic bandits. Our lower bound strictly improves on the existing ones across all $\epsilon$ values. Then, we choose two asymptotically optimal bandit algorithms, i.e., KL-UCB and IMED, and propose their DP versions, DP-KLUCB and DP-IMED, using a unified blueprint, i.e., (a) running in arm-dependent phases, and (b) adding Laplace noise to achieve privacy. For Bernoulli bandits, we analyse the regrets of these algorithms and show that their regrets asymptotically match our lower bound up to a constant arbitrarily close to 1. This refutes the conjecture that forgetting past rewards is necessary to design optimal bandit algorithms under global DP. At the core of our algorithms lies a new concentration inequality for sums of Bernoulli variables under the Laplace mechanism, which is a new DP version of the Chernoff bound. This result is universally useful as the DP literature commonly treats the concentrations of Laplace noise and random variables separately, while we couple them to yield a tighter bound.
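
The noise-adding step (b) can be illustrated with the standard Laplace mechanism: a sum of 0/1 rewards changes by at most 1 when one reward changes, so adding Laplace noise with scale $1/\epsilon$ makes the released sum $\epsilon$-DP. The sketch below shows only this generic step, not the phase structure or index computations of the algorithms in the abstract; the function name `private_sum` and the example data are assumptions.

```python
import random


def private_sum(rewards, epsilon, rng):
    """Release the sum of 0/1 rewards with Laplace(1/epsilon) noise.

    The sum has sensitivity 1, so noise of scale 1/epsilon suffices.
    """
    # The difference of two i.i.d. exponentials with rate epsilon is
    # Laplace-distributed with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return sum(rewards) + noise


rng = random.Random(0)
rewards = [1, 0, 1, 1]  # hypothetical Bernoulli rewards of one arm
noisy = private_sum(rewards, epsilon=1.0, rng=rng)
```

The noisy sum is unbiased, so averaging many independent releases concentrates around the true sum, here 3.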

Influential Bandits: Pulling an Arm May Change the Environment

Apr 11, 2025

Abstract: While classical formulations of multi-armed bandit problems assume that each arm's reward is independent and stationary, real-world applications often involve non-stationary environments and interdependencies between arms. In particular, selecting one arm may influence the future rewards of other arms, a scenario not adequately captured by existing models such as rotting bandits or restless bandits. To address this limitation, we propose the influential bandit problem, which models inter-arm interactions through an unknown, symmetric, positive semi-definite interaction matrix that governs the dynamics of arm losses. We formally define this problem and establish two regret lower bounds, including a superlinear $\Omega(T^2 / \log^2 T)$ bound for the standard UCB algorithm and an algorithm-independent $\Omega(T)$ bound, which highlight the inherent difficulty of the setting. We then introduce a new algorithm based on a lower confidence bound (LCB) estimator tailored to the structure of the loss dynamics. Under mild assumptions, our algorithm achieves a regret of $O(KT \log T)$, which is nearly optimal in terms of its dependence on the time horizon. The algorithm is simple to implement and computationally efficient. Empirical evaluations on both synthetic and real-world datasets demonstrate the presence of inter-arm influence and confirm the superior performance of our method compared to conventional bandit algorithms.

Instance-Dependent Regret Bounds for Learning Two-Player Zero-Sum Games with Bandit Feedback

Feb 24, 2025

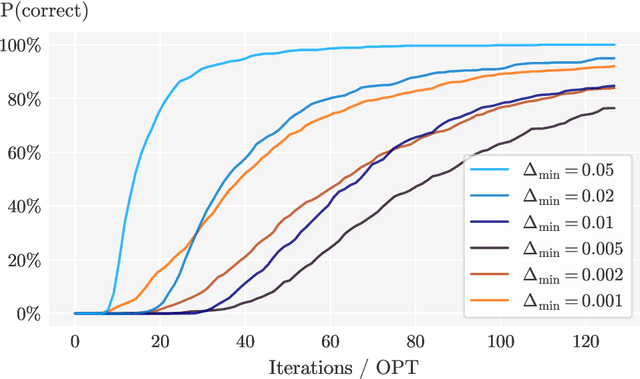

Abstract: No-regret self-play learning dynamics have become one of the premier ways to solve large-scale games in practice. Accelerating their convergence via improving the regret of the players over the naive $O(\sqrt{T})$ bound after $T$ rounds has been extensively studied in recent years, but almost all studies assume access to exact gradient feedback. We address the question of whether acceleration is possible under bandit feedback only and provide an affirmative answer for two-player zero-sum normal-form games. Specifically, we show that if both players apply the Tsallis-INF algorithm of Zimmert and Seldin (2018, arXiv:1807.07623), then their regret is at most $O(c_1 \log T + \sqrt{c_2 T})$, where $c_1$ and $c_2$ are game-dependent constants that characterize the difficulty of learning -- $c_1$ resembles the complexity of learning a stochastic multi-armed bandit instance and depends inversely on some gap measures, while $c_2$ can be much smaller than the number of actions when the Nash equilibria have a small support or are close to the boundary. In particular, for the case when a pure strategy Nash equilibrium exists, $c_2$ becomes zero, leading to an optimal instance-dependent regret bound as we show. We additionally prove that in this case, our algorithm also enjoys last-iterate convergence and can identify the pure strategy Nash equilibrium with near-optimal sample complexity.

Data-dependent Bounds with $T$-Optimal Best-of-Both-Worlds Guarantees in Multi-Armed Bandits using Stability-Penalty Matching

Feb 12, 2025

Abstract: Existing data-dependent and best-of-both-worlds regret bounds for multi-armed bandit problems have limited adaptivity, as they are either data-dependent but not best-of-both-worlds (BOBW), BOBW but not data-dependent, or have a sub-optimal $O(\sqrt{T\ln{T}})$ worst-case guarantee in the adversarial regime. To overcome these limitations, we propose real-time stability-penalty matching (SPM), a new method for obtaining regret bounds that are simultaneously data-dependent, best-of-both-worlds and $T$-optimal for multi-armed bandit problems. In particular, we show that real-time SPM obtains bounds with worst-case guarantees of order $O(\sqrt{T})$ in the adversarial regime and $O(\ln{T})$ in the stochastic regime while simultaneously being adaptive to data-dependent quantities such as sparsity, variations, and small losses. Our results are obtained by extending the SPM technique for tuning the learning rates in the follow-the-regularized-leader (FTRL) framework, which further indicates that the combination of SPM and FTRL is a promising approach for proving new adaptive bounds in online learning problems.

Corrupted Learning Dynamics in Games

Dec 10, 2024

Abstract: Learning in games is the problem where multiple players interact in a shared environment, each aiming to minimize their own regret, and it is known that an approximate equilibrium can be obtained when all players employ no-regret algorithms. Notably, by adopting optimistic follow-the-regularized-leader (OFTRL), the regret of each player after $T$ rounds is constant in two-player zero-sum games, implying that an equilibrium can be computed at a faster rate of $O(1/T)$. However, this acceleration is limited to the honest regime, where all players fully adhere to the given algorithms. To address this limitation, this paper presents corrupted learning dynamics that adaptively find an equilibrium at a rate dependent on the degree of deviation by each player from the given algorithm's output. First, in two-player zero-sum games, we provide learning dynamics where the external regret of the x-player (and similarly for the y-player) in the corrupted regime is roughly bounded by $O(\log (m_\mathrm{x} m_\mathrm{y}) + \sqrt{C_\mathrm{y}} + C_\mathrm{x})$, which implies a convergence rate of $\tilde{O}((\sqrt{C_\mathrm{y}} + C_\mathrm{x})/T)$ to a Nash equilibrium. Here, $m_\mathrm{x}$ and $m_\mathrm{y}$ are the numbers of actions of the x- and y-players, respectively, and $C_\mathrm{x}$ and $C_\mathrm{y}$ are the cumulative deviations of the x- and y-players from their given algorithms. Furthermore, we extend our approach to multi-player general-sum games, showing that the swap regret of player $i$ in the corrupted regime is bounded by $O(\log T + \sqrt{\sum_j C_j \log T} + C_i)$, where $C_i$ is the cumulative deviation of player $i$ from the given algorithm. This implies a convergence rate of $O((\log T + \sqrt{\sum_j C_j \log T} + C_i)/T)$ to a correlated equilibrium. Our learning dynamics are agnostic to the corruption levels and are based on OFTRL with new adaptive learning rates.

A Simple and Adaptive Learning Rate for FTRL in Online Learning with Minimax Regret of $\Theta(T^{2/3})$ and its Application to Best-of-Both-Worlds

May 30, 2024

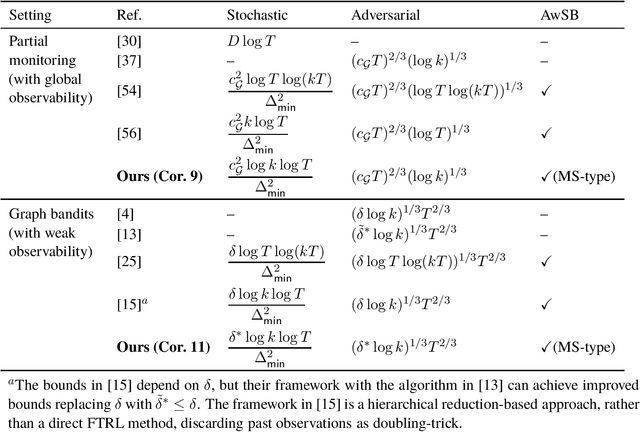

Abstract: Follow-the-Regularized-Leader (FTRL) is a powerful framework for various online learning problems. By designing its regularizer and learning rate to be adaptive to past observations, FTRL is known to work adaptively to various properties of an underlying environment. However, most existing adaptive learning rates are for online learning problems with a minimax regret of $\Theta(\sqrt{T})$ for the number of rounds $T$, and there are only a few studies on adaptive learning rates for problems with a minimax regret of $\Theta(T^{2/3})$, which include several important problems dealing with indirect feedback. To address this limitation, we establish a new adaptive learning rate framework for problems with a minimax regret of $\Theta(T^{2/3})$. Our learning rate is designed by matching the stability, penalty, and bias terms that naturally appear in regret upper bounds for problems with a minimax regret of $\Theta(T^{2/3})$. As applications of this framework, we consider two major problems dealing with indirect feedback: partial monitoring and graph bandits. We show that FTRL with our learning rate and the Tsallis entropy regularizer improves existing Best-of-Both-Worlds (BOBW) regret upper bounds, which achieve simultaneous optimality in the stochastic and adversarial regimes. The resulting learning rate is surprisingly simple compared to the existing learning rates for BOBW algorithms for problems with a minimax regret of $\Theta(T^{2/3})$.

Learning with Posterior Sampling for Revenue Management under Time-varying Demand

May 08, 2024

Abstract: This paper discusses the revenue management (RM) problem of maximizing revenue by pricing items or services. One challenge in this problem is that the demand distribution is unknown and varies over time in real applications such as the airline and retail industries. In particular, time-varying demand has not been well studied under scenarios of unknown demand due to the difficulty of jointly managing the remaining inventory and estimating the demand. To tackle this challenge, we first introduce an episodic generalization of the RM problem motivated by typical application scenarios. We then propose a computationally efficient algorithm based on posterior sampling, which effectively optimizes prices by solving linear programming. We derive a Bayesian regret upper bound for this algorithm for general models where demand parameters can be correlated between time periods, while also deriving a regret lower bound for generic algorithms. Our empirical study shows that the proposed algorithm performs better than other benchmark algorithms and comparably to the optimal policy in hindsight. We also propose a heuristic modification of the proposed algorithm, which learns the pricing policy even more efficiently in the experiments.
