Shengjie Zheng

The Brain-Inspired Cooperative Shared Control for Brain-Machine Interface

Oct 18, 2022

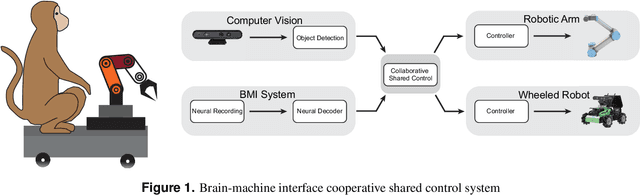

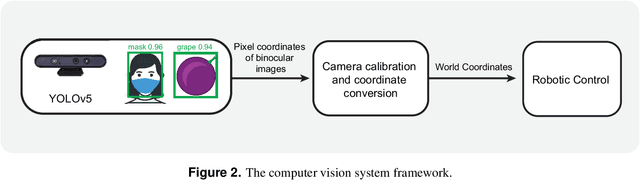

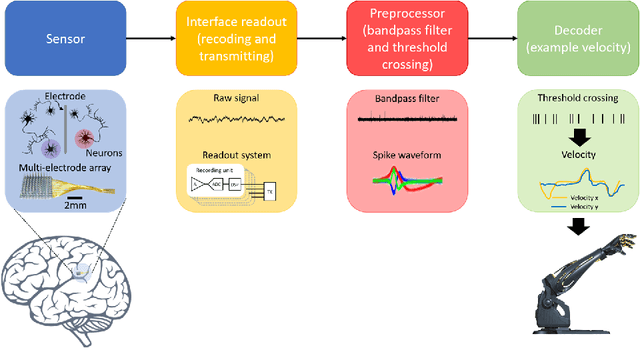

Abstract: In practical applications of brain-machine interface technology, the neural signals collected by the electrodes often have low information content and high noise and are difficult to decode, which makes it hard for the robot to obtain stable instructions to complete a task. Under the principle of cooperative shared control, general motor commands are extracted from brain activity while the fine details of the movement are delegated to the robot, or the brain retains complete control. This study proposes a brain-machine interface shared control system based on spiking neural networks for two settings: robotic arm movement control, and wheel speed and steering control of wheeled robots. The former reliably controls the robotic arm to move to the destination position, while the latter controls the wheeled robot for object tracking and map generation. The results show that shared control based on brain-inspired intelligence can perform some typical tasks in complex environments and improves the fluency and ease of use of brain-machine interaction. They also demonstrate the potential of this control method in clinical applications of brain-machine interfaces.
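As a rough illustration of the shared-control idea described in this abstract, the sketch below blends a decoded user command with an autonomous robot command using a single arbitration weight. This linear blending is a common textbook scheme and is an assumption here; it is not the spiking-neural-network controller proposed in the paper. The names `blend_commands` and `alpha` are hypothetical.

```python
from typing import List, Sequence


def blend_commands(user_cmd: Sequence[float],
                   robot_cmd: Sequence[float],
                   alpha: float) -> List[float]:
    """Linearly blend a decoded user command with a robot command.

    alpha = 1.0 gives the brain complete control; alpha = 0.0 hands the
    movement entirely to the robot. (Illustrative assumption, not the
    paper's method.)
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(user_cmd) != len(robot_cmd):
        raise ValueError("commands must have the same dimension")
    return [alpha * u + (1.0 - alpha) * r for u, r in zip(user_cmd, robot_cmd)]


if __name__ == "__main__":
    # Coarse decoded direction from the user, fine correction from the robot.
    print(blend_commands([1.0, 0.0], [0.0, 1.0], alpha=0.5))
```

Setting `alpha` close to 1 corresponds to the "complete control" case mentioned in the abstract, while smaller values delegate more of the fine movement to the robot.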

The Brain-Inspired Decoder for Natural Visual Image Reconstruction

Jul 18, 2022

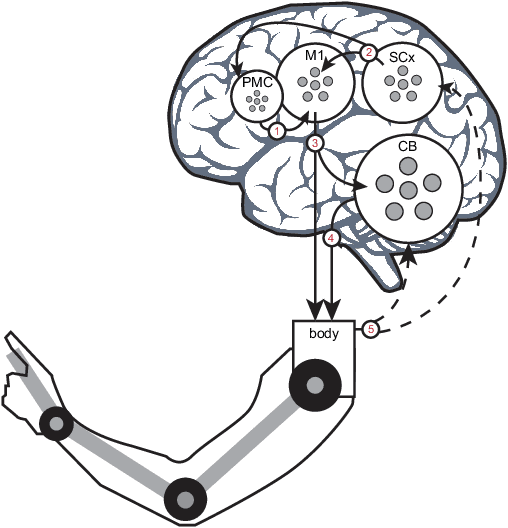

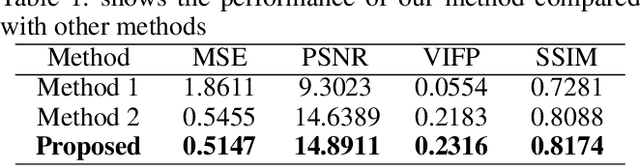

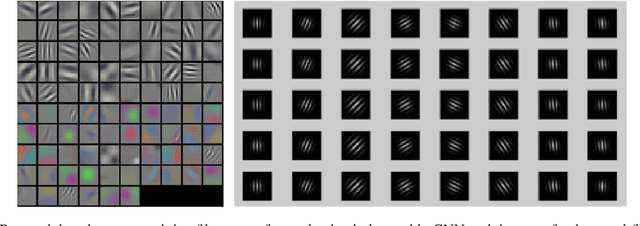

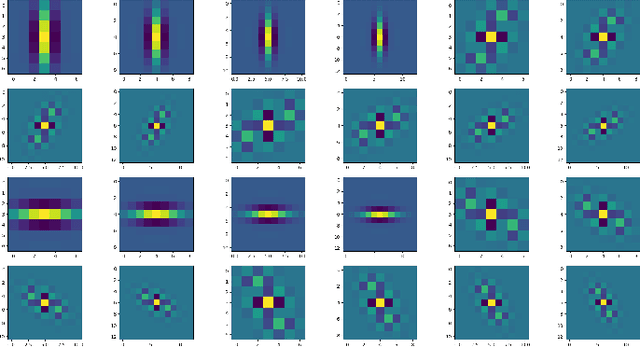

Abstract: Decoding images from brain activity has long been a challenge. Owing to the development of deep learning, tools are now available to address this problem. Image decoding aims to map neural spike trains to a space of low-level visual features and high-level semantic information. A few recent studies decode from spike trains; however, they pay little attention to the foundations of neuroscience, and few incorporate receptive fields into visual image reconstruction. In this paper, we propose a deep learning neural network architecture with biological properties to reconstruct visual images from spike trains. To the best of our knowledge, this is the first method to integrate a receptive field property matrix into the loss function. Our model is an end-to-end decoder from neural spike trains to images. We not only merge Gabor filters into the auto-encoder used to generate images but also propose a loss function with receptive field properties. We evaluate our decoder on two datasets containing spikes from macaque primary visual cortex neurons and from salamander retinal ganglion cells (RGCs). Our results show that our method can effectively combine receptive field features to reconstruct images, providing a new approach to visual reconstruction based on neural information.
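For readers unfamiliar with the Gabor filter mentioned in this abstract, the following minimal sketch builds a real-valued 2D Gabor kernel from the standard formula (a Gaussian envelope multiplied by a cosine carrier). The function name `gabor_kernel` and its parameter defaults are hypothetical, and the sketch does not show how the paper wires such filters into its auto-encoder or loss function.

```python
import math
from typing import List


def gabor_kernel(size: int, sigma: float, theta: float, wavelength: float,
                 gamma: float = 1.0, psi: float = 0.0) -> List[List[float]]:
    """Return a size x size real Gabor kernel as nested lists.

    sigma: width of the Gaussian envelope, theta: orientation in radians,
    wavelength: period of the cosine carrier, gamma: spatial aspect ratio,
    psi: phase offset of the carrier.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates into the filter's preferred orientation.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


if __name__ == "__main__":
    k = gabor_kernel(size=5, sigma=2.0, theta=0.0, wavelength=4.0)
    print(k[2][2])  # value at the kernel center
```

Kernels of this kind resemble the orientation-tuned receptive fields of primary visual cortex neurons, which is the usual motivation for using them in biologically inspired vision models.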

An Adaptive Contrastive Learning Model for Spike Sorting

May 24, 2022

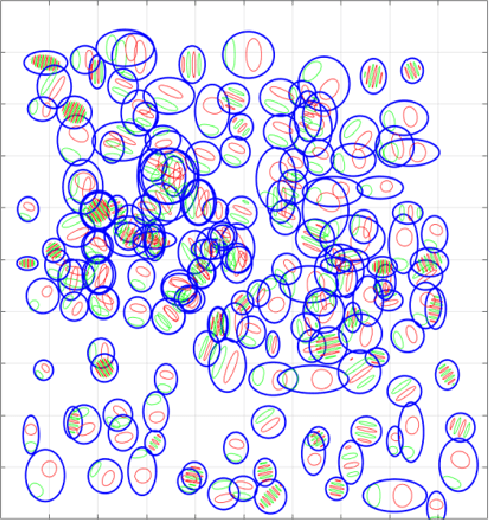

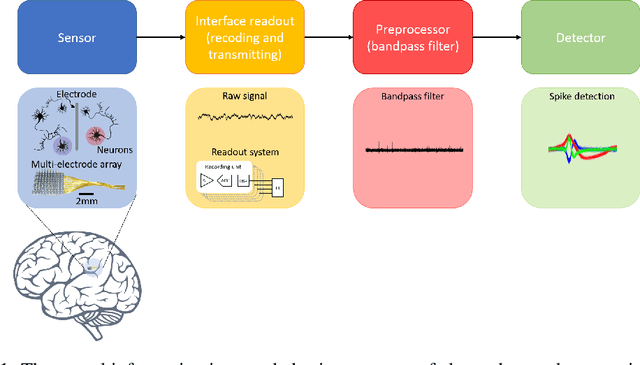

Abstract: Brain-computer interfaces (BCIs) are ways for electronic devices to communicate directly with the brain. For most medical BCI tasks, the activity of multiple units of neurons or local field potentials is sufficient for decoding. For BCIs used in neuroscience research, however, it is important to separate out the activity of individual neurons. With the development of large-scale silicon technology and the increasing number of probe channels, manually interpreting and labeling spikes is becoming increasingly impractical. In this paper, we propose a novel modeling framework, the Adaptive Contrastive Learning Model, which learns representations from spikes through contrastive learning, with a mutual-information-maximizing loss function as its theoretical basis. Because data with similar features share the same labels whether they are classified in a multi-class or a binary setting, we simplify the multi-class classification problem into multiple binary classification problems, improving both accuracy and runtime efficiency. Moreover, we introduce a series of enhancements for the spikes and address the degradation in classification caused by overlapping spikes.
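The reduction of a multi-class problem to several binary ones, mentioned in this abstract, can be illustrated with a generic one-vs-rest relabeling. This is only a sketch of the general decomposition under that assumption; the abstract does not specify the exact scheme used, and `one_vs_rest_targets` is a hypothetical helper name.

```python
from typing import Dict, Hashable, List, Sequence


def one_vs_rest_targets(labels: Sequence[Hashable]) -> Dict[Hashable, List[int]]:
    """Turn multi-class labels into one binary target vector per class.

    For each class c, the target is 1 where the label equals c and 0
    elsewhere, so each binary problem asks "is this spike from unit c?".
    """
    classes = sorted(set(labels))
    return {c: [1 if label == c else 0 for label in labels] for c in classes}


if __name__ == "__main__":
    # Four spikes assigned to three putative units.
    for unit, target in one_vs_rest_targets([0, 1, 2, 1]).items():
        print(unit, target)
```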

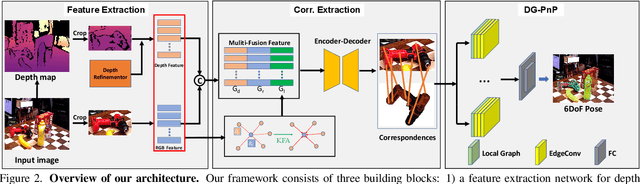

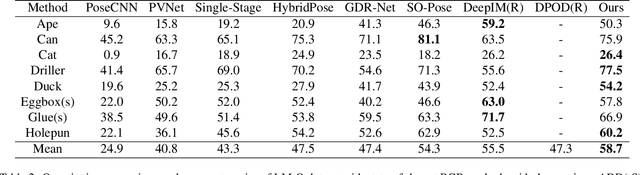

DGECN: A Depth-Guided Edge Convolutional Network for End-to-End 6D Pose Estimation

Apr 21, 2022

Abstract: Monocular 6D pose estimation is a fundamental task in computer vision. Existing works often adopt a two-stage pipeline that establishes correspondences and then uses a RANSAC algorithm to calculate the 6 degrees-of-freedom (6DoF) pose. Recent works try to integrate differentiable RANSAC algorithms to achieve end-to-end 6D pose estimation. However, most of them hardly consider the geometric features in 3D space and ignore the topology cues when performing differentiable RANSAC algorithms. To this end, we propose a Depth-Guided Edge Convolutional Network (DGECN) for the 6D pose estimation task. We have made efforts in the following three aspects: 1) We take advantage of estimated depth information to guide both the correspondence-extraction process and the cascaded differentiable RANSAC algorithm with geometric information. 2) We leverage the uncertainty of the estimated depth map to improve the accuracy and robustness of the output 6D pose. 3) We propose a differentiable Perspective-n-Point (PnP) algorithm via edge convolution to explore the topology relations between 2D-3D correspondences. Experiments demonstrate that our proposed network outperforms current works in both effectiveness and efficiency.

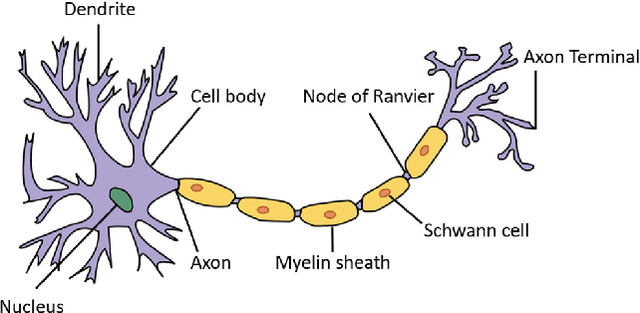

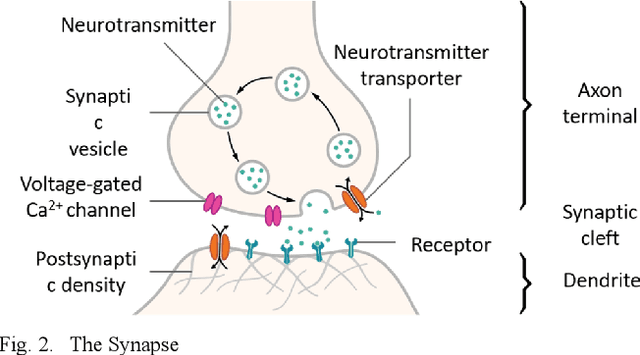

An Introductory Review of Spiking Neural Network and Artificial Neural Network: From Biological Intelligence to Artificial Intelligence

Apr 09, 2022

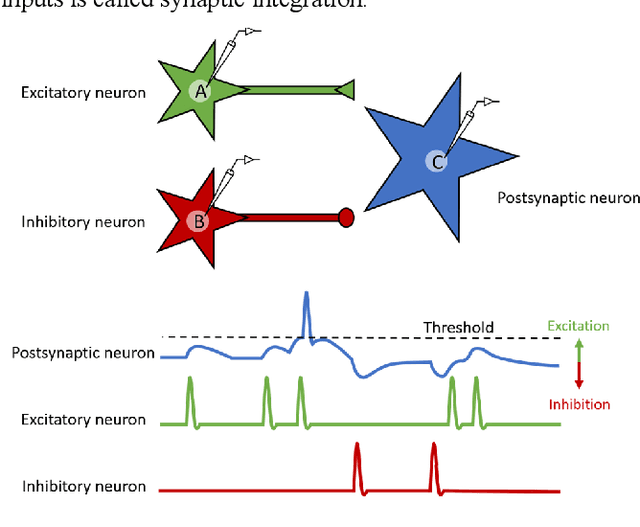

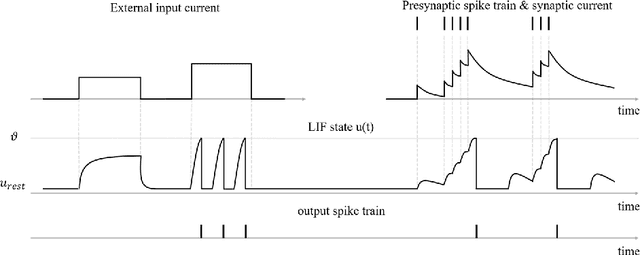

Abstract: Recently, driven by the rapid development of artificial intelligence, which has achieved broad success in pattern recognition, robotics, and bioinformatics, neuroscience has also made tremendous progress. Spiking neural networks, which offer biological interpretability, are gradually receiving wide attention and are regarded as one of the directions toward general artificial intelligence. This review covers the biological background and theoretical basis of spiking neurons, different neuronal models, the connectivity of neural circuits, and the mainstream neural network learning mechanisms and network architectures. This review hopes to attract different researchers and advance the development of brain-inspired intelligence and artificial intelligence.
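As one concrete example of the neuronal models such a review typically discusses, the sketch below simulates a leaky integrate-and-fire (LIF) neuron with forward-Euler integration. The choice of the LIF model, the function name `simulate_lif`, and all parameter values are illustrative assumptions; the abstract itself does not name specific models.

```python
from typing import List, Sequence


def simulate_lif(input_current: Sequence[float], dt: float = 1.0,
                 tau: float = 10.0, v_rest: float = 0.0, v_reset: float = 0.0,
                 v_thresh: float = 1.0, resistance: float = 1.0) -> List[int]:
    """Simulate a leaky integrate-and-fire neuron and return spike step indices.

    Membrane dynamics: tau * dv/dt = -(v - v_rest) + resistance * I(t).
    When v reaches v_thresh the neuron emits a spike and v is set to v_reset.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # Forward-Euler update of the membrane potential.
        v += (-(v - v_rest) + resistance * current) * dt / tau
        if v >= v_thresh:
            spikes.append(step)
            v = v_reset
    return spikes


if __name__ == "__main__":
    # A constant suprathreshold input produces a regular spike train.
    print(simulate_lif([2.0] * 50))
```

With a constant input whose steady-state potential `resistance * I` stays below `v_thresh`, the membrane potential saturates and the neuron never fires, which is the "leaky" behavior that distinguishes this model from a perfect integrator.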

A Spiking Neural Network based on Neural Manifold for Augmenting Intracortical Brain-Computer Interface Data

Mar 26, 2022

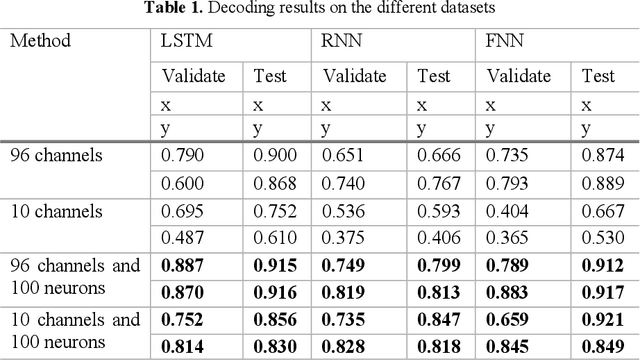

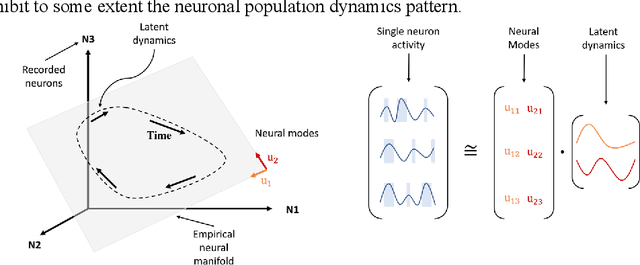

Abstract: Brain-computer interfaces (BCIs) transform neural signals in the brain into instructions to control external devices. However, sufficient training data is difficult to obtain. With the advent of advanced machine learning methods, the capability of brain-computer interfaces has been enhanced like never before; however, these methods require a large amount of training data and thus call for data augmentation of the limited data available. Here, we use spiking neural networks (SNNs) as data generators. The SNN is touted as the next-generation neural network and is considered one of the algorithms oriented toward general artificial intelligence because it borrows its neural information processing from biological neurons. We use the SNN to generate neural spike information that is bio-interpretable and conforms to the intrinsic patterns in the original neural data. Experiments show that the model can directly synthesize new spike trains, which in turn improves the generalization ability of the BCI decoder. Both the input and the output of the spiking neural model are spike information, making it a brain-inspired intelligence approach that can be better integrated with BCIs in the future.
