An Adaptive Contrastive Learning Model for Spike Sorting


May 24, 2022

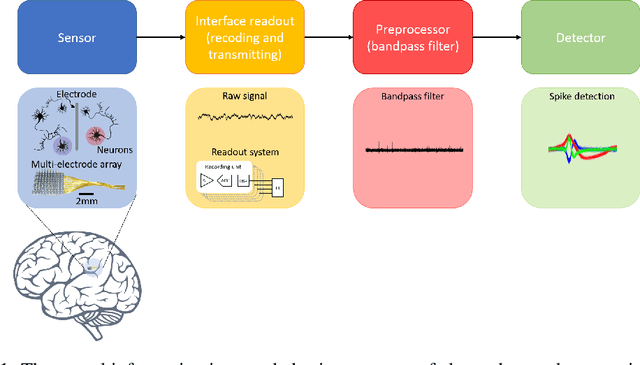

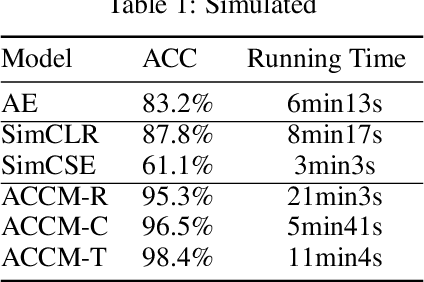

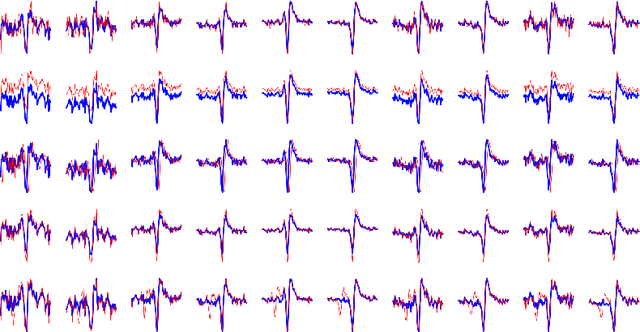

Brain-computer interfaces (BCIs) are ways for electronic devices to communicate directly with the brain. For most medical BCI tasks, the activity of multiple units of neurons or local field potentials is sufficient for decoding. For BCIs used in neuroscience research, however, it is important to separate out the activity of individual neurons. With the development of large-scale silicon technology and the increasing number of probe channels, manually interpreting and labeling spikes is becoming increasingly impractical. In this paper, we propose a novel modeling framework, the Adaptive Contrastive Learning Model, which learns representations from spikes through contrastive learning, using a loss function that maximizes mutual information as its theoretical basis. Because data with similar features share the same labels whether the task is posed as multi-class or binary classification, we simplify the multi-class classification problem into multiple binary classification problems, improving both accuracy and runtime efficiency. We also introduce a series of enhancements for the spikes and address the degradation in classification performance caused by overlapping spikes.
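The abstract does not spell out the loss function. One widely used contrastive objective that maximizes a lower bound on mutual information is InfoNCE, and the sketch below illustrates it in NumPy under that assumption. The names `info_nce_loss` and `augment`, the temperature value, and the choice of amplitude scaling plus additive noise as spike "enhancements" are all hypothetical and are not taken from the paper.

```python
import numpy as np


def info_nce_loss(z_a, z_b, temperature=0.1):
    """InfoNCE loss for two batches of embeddings.

    Row i of z_a and row i of z_b are treated as a positive pair
    (two views of the same spike); all other rows act as negatives.
    """
    # L2-normalise so that dot products are cosine similarities.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)

    logits = z_a @ z_b.T / temperature  # shape (N, N)

    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # Positive pairs sit on the diagonal.
    return -np.mean(np.diag(log_probs))


def augment(spikes, rng, noise_std=0.05, scale_range=(0.9, 1.1)):
    """Hypothetical spike augmentation: random amplitude scaling plus Gaussian noise."""
    scale = rng.uniform(*scale_range, size=(spikes.shape[0], 1))
    noise = rng.normal(0.0, noise_std, size=spikes.shape)
    return spikes * scale + noise
```

In a training loop, two augmented views of each spike waveform would be passed through an encoder and the resulting embeddings fed to `info_nce_loss`; minimizing the loss pulls views of the same spike together and pushes different spikes apart.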
