Shaohua Gao

One-Step Event-Driven High-Speed Autofocus

Mar 03, 2025

Abstract: High-speed autofocus in extreme scenes remains a significant challenge. Traditional methods rely on repeated sampling around the focus position, resulting in "focus hunting". Event-driven methods have advanced focusing speed and improved performance in low-light conditions; however, current approaches still require at least one lengthy round of "focus hunting", involving the collection of a complete focus stack. We introduce the Event Laplacian Product (ELP) focus detection function, which combines event data with grayscale Laplacian information, redefining focus search as a detection task. This innovation enables the first one-step event-driven autofocus, cutting focusing time by up to two-thirds and reducing focusing error by 24 times on the DAVIS346 dataset and 22 times on the EVK4 dataset. Additionally, we present an autofocus pipeline tailored for event-only cameras, achieving accurate results across a range of challenging motion and lighting conditions. All datasets and code will be made publicly available.

Towards Single-Lens Controllable Depth-of-Field Imaging via All-in-Focus Aberration Correction and Monocular Depth Estimation

Sep 15, 2024

Abstract: Controllable Depth-of-Field (DoF) imaging commonly produces amazing visual effects based on heavy and expensive high-end lenses. However, confronted with the increasing demand for mobile scenarios, it is desirable to achieve a lightweight solution with Minimalist Optical Systems (MOS). This work centers around two major limitations of MOS, i.e., the severe optical aberrations and uncontrollable DoF, for achieving single-lens controllable DoF imaging via computational methods. A Depth-aware Controllable DoF Imaging (DCDI) framework is proposed equipped with All-in-Focus (AiF) aberration correction and monocular depth estimation, where the recovered image and corresponding depth map are utilized to produce imaging results under diverse DoFs of any high-end lens via patch-wise convolution. To address the depth-varying optical degradation, we introduce a Depth-aware Degradation-adaptive Training (DA2T) scheme. At the dataset level, a Depth-aware Aberration MOS (DAMOS) dataset is established based on the simulation of Point Spread Functions (PSFs) under different object distances. Additionally, we design two plug-and-play depth-aware mechanisms to embed depth information into the aberration image recovery for better tackling depth-aware degradation. Furthermore, we propose a storage-efficient Omni-Lens-Field model to represent the 4D PSF library of various lenses. With the predicted depth map, recovered image, and depth-aware PSF map inferred by Omni-Lens-Field, single-lens controllable DoF imaging is achieved. Comprehensive experimental results demonstrate that the proposed framework enhances the recovery performance, and attains impressive single-lens controllable DoF imaging results, providing a seminal baseline for this field. The source code and the established dataset will be publicly available at https://github.com/XiaolongQian/DCDI.

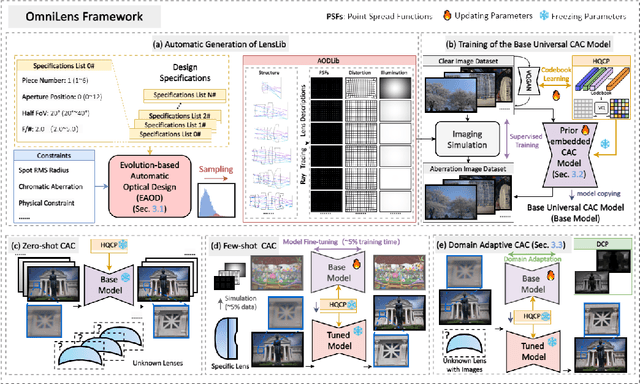

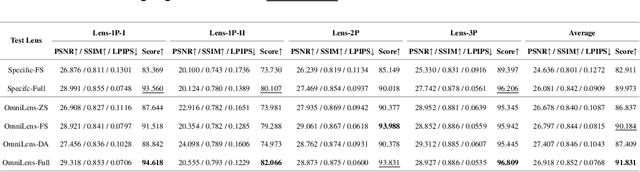

A Flexible Framework for Universal Computational Aberration Correction via Automatic Lens Library Generation and Domain Adaptation

Sep 09, 2024

Abstract: Emerging universal Computational Aberration Correction (CAC) paradigms provide an inspiring solution to light-weight and high-quality imaging without repeated data preparation and model training to accommodate new lens designs. However, the training databases in these approaches, i.e., the lens libraries (LensLibs), suffer from their limited coverage of real-world aberration behaviors. In this work, we set up an OmniLens framework for universal CAC, considering both the generalization ability and flexibility. OmniLens extends the idea of universal CAC to a broader concept, where a base model is trained for three cases, including zero-shot CAC with the pre-trained model, few-shot CAC with a little lens-specific data for fine-tuning, and domain adaptive CAC using domain adaptation for lens-descriptions-unknown lens. In terms of OmniLens's data foundation, we first propose an Evolution-based Automatic Optical Design (EAOD) pipeline to construct LensLib automatically, coined AODLib, whose diversity is enriched by an evolution framework, with comprehensive constraints and a hybrid optimization strategy for achieving realistic aberration behaviors. For network design, we introduce the guidance of high-quality codebook priors to facilitate zero-shot CAC and few-shot CAC, which enhances the model's generalization ability, while also boosting its convergence in a few-shot case. Furthermore, based on the statistical observation of dark channel priors in optical degradation, we design an unsupervised regularization term to adapt the base model to the target descriptions-unknown lens using its aberration images without ground truth. We validate OmniLens on 4 manually designed low-end lenses with various structures and aberration behaviors. Remarkably, the base model trained on AODLib exhibits strong generalization capabilities, achieving 97% of the lens-specific performance in a zero-shot setting.

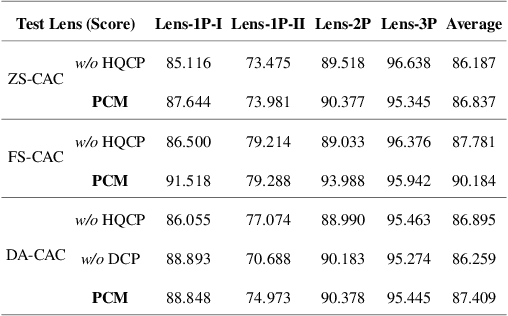

Design, analysis, and manufacturing of a glass-plastic hybrid minimalist aspheric panoramic annular lens

May 05, 2024

Abstract: We propose a high-performance glass-plastic hybrid minimalist aspheric panoramic annular lens (ASPAL) to solve several major limitations of the traditional panoramic annular lens (PAL), such as large size, high weight, and complex system. The field of view (FoV) of the ASPAL is 360°×(35°~110°) and the imaging quality is close to the diffraction limit. This large FoV ASPAL is composed of only 4 lenses. Moreover, we establish a physical structure model of PAL using the ray tracing method and study the influence of its physical parameters on compactness ratio. In addition, for the evaluation of local tolerances of annular surfaces, we propose a tolerance analysis method suitable for ASPAL. This analytical method can effectively analyze surface irregularities on annular surfaces and provide clear guidance on manufacturing tolerances for ASPAL. Benefiting from high-precision glass molding and injection molding aspheric lens manufacturing techniques, we finally manufactured 20 ASPALs in small batches. The weight of an ASPAL prototype is only 8.5 g. Our framework provides promising insights for the application of panoramic systems in space- and weight-constrained environmental sensing scenarios such as intelligent security, micro-UAVs, and micro-robots.

Global Search Optics: Automatically Exploring Optimal Solutions to Compact Computational Imaging Systems

Apr 30, 2024

Abstract: The popularity of mobile vision creates a demand for advanced compact computational imaging systems, which call for the development of both a lightweight optical system and an effective image reconstruction model. Recently, joint design pipelines have come to the research forefront, where the two significant components are simultaneously optimized via data-driven learning to realize the optimal system design. However, the effectiveness of these designs largely depends on the initial setup of the optical system, complicated by a non-convex solution space that impedes reaching a globally optimal solution. In this work, we present Global Search Optics (GSO) to automatically design compact computational imaging systems through two parts: (i) Fused Optimization Method for Automatic Optical Design (OptiFusion), which searches for diverse initial optical systems under certain design specifications; and (ii) Efficient Physic-aware Joint Optimization (EPJO), which conducts parallel joint optimization of initial optical systems and image reconstruction networks with the consideration of physical constraints, culminating in the selection of the optimal solution. Extensive experimental results on the design of three-piece (3P) sphere computational imaging systems illustrate that the GSO serves as a transformative end-to-end lens design paradigm for superior global optimal structure searching ability, which provides compact computational imaging systems with higher imaging quality compared to traditional methods. The source code will be made publicly available at https://github.com/wumengshenyou/GSO.

Real-World Computational Aberration Correction via Quantized Domain-Mixing Representation

Mar 15, 2024

Abstract: Relying on paired synthetic data, existing learning-based Computational Aberration Correction (CAC) methods are confronted with the intricate and multifaceted synthetic-to-real domain gap, which leads to suboptimal performance in real-world applications. In this paper, in contrast to improving the simulation pipeline, we deliver a novel insight into real-world CAC from the perspective of Unsupervised Domain Adaptation (UDA). By incorporating readily accessible unpaired real-world data into training, we formalize the Domain Adaptive CAC (DACAC) task, and then introduce a comprehensive Real-world aberrated images (Realab) dataset to benchmark it. The setup task presents a formidable challenge due to the intricacy of understanding the target aberration domain. To this end, we propose a novel Quantized Domain-Mixing Representation (QDMR) framework as a potent solution to the issue. QDMR adapts the CAC model to the target domain from three key aspects: (1) reconstructing aberrated images of both domains by a VQGAN to learn a Domain-Mixing Codebook (DMC) which characterizes the degradation-aware priors; (2) modulating the deep features in CAC model with DMC to transfer the target domain knowledge; and (3) leveraging the trained VQGAN to generate pseudo target aberrated images from the source ones for convincing target domain supervision. Extensive experiments on both synthetic and real-world benchmarks reveal that the models with QDMR consistently surpass the competitive methods in mitigating the synthetic-to-real gap, which produces visually pleasant real-world CAC results with fewer artifacts. Codes and datasets will be made publicly available.

Minimalist and High-Quality Panoramic Imaging with PSF-aware Transformers

Jun 22, 2023

Abstract: High-quality panoramic images with a 360-degree Field of View (FoV) are essential for contemporary panoramic computer vision tasks. However, conventional imaging systems come with sophisticated lens designs and heavy optical components. This disqualifies their usage in many mobile and wearable applications where thin and portable, minimalist imaging systems are desired. In this paper, we propose a Panoramic Computational Imaging Engine (PCIE) to address minimalist and high-quality panoramic imaging. With fewer than three spherical lenses, a Minimalist Panoramic Imaging Prototype (MPIP) is constructed based on the design of the Panoramic Annular Lens (PAL), but with low-quality imaging results due to aberrations and small image plane size. We propose two pipelines, i.e. Aberration Correction (AC) and Super-Resolution and Aberration Correction (SR&AC), to solve the image quality problems of MPIP, with imaging sensors of small and large pixel size, respectively. To provide a universal network for the two pipelines, we leverage the information from the Point Spread Function (PSF) of the optical system and design a PSF-aware Aberration-image Recovery Transformer (PART), in which the self-attention calculation and feature extraction are guided via PSF-aware mechanisms. We train PART on synthetic image pairs from simulation and put forward the PALHQ dataset to fill the gap of real-world high-quality PAL images for low-level vision. A comprehensive variety of experiments on synthetic and real-world benchmarks demonstrates the impressive imaging results of PCIE and the effectiveness of plug-and-play PSF-aware mechanisms. We further deliver heuristic experimental findings for minimalist and high-quality panoramic imaging. Our dataset and code will be available at https://github.com/zju-jiangqi/PCIE-PART.

Computational Optics Meet Domain Adaptation: Transferring Semantic Segmentation Beyond Aberrations

Nov 21, 2022

Abstract: Semantic scene understanding with Minimalist Optical Systems (MOS) in mobile and wearable applications remains a challenge due to the corrupted imaging quality induced by optical aberrations. However, previous works only focus on improving the subjective imaging quality through computational optics, i.e. Computational Imaging (CI) technique, ignoring the feasibility in semantic segmentation. In this paper, we pioneer the investigation of Semantic Segmentation under Optical Aberrations (SSOA) of MOS. To benchmark SSOA, we construct Virtual Prototype Lens (VPL) groups through optical simulation, generating Cityscapes-ab and KITTI-360-ab datasets under different behaviors and levels of aberrations. We look into SSOA via an unsupervised domain adaptation perspective to address the scarcity of labeled aberration data in real-world scenarios. Further, we propose Computational Imaging Assisted Domain Adaptation (CIADA) to leverage prior knowledge of CI for robust performance in SSOA. Based on our benchmark, we conduct experiments on the robustness of state-of-the-art segmenters against aberrations. In addition, extensive evaluations of possible solutions to SSOA reveal that CIADA achieves superior performance under all aberration distributions, paving the way for the applications of MOS in semantic scene understanding. Code and dataset will be made publicly available at https://github.com/zju-jiangqi/CIADA.

Annular Computational Imaging: Capture Clear Panoramic Images through Simple Lens

Jun 13, 2022

Abstract: Panoramic Annular Lens (PAL), composed of few lenses, has great potential in panoramic surrounding sensing tasks for mobile and wearable devices because of its tiny size and large Field of View (FoV). However, the image quality of tiny-volume PAL is confined to the optical limit due to the lack of lenses for aberration correction. In this paper, we propose an Annular Computational Imaging (ACI) framework to break the optical limit of light-weight PAL design. To facilitate learning-based image restoration, we introduce a wave-based simulation pipeline for panoramic imaging and tackle the synthetic-to-real gap through multiple data distributions. The proposed pipeline can be easily adapted to any PAL with design parameters and is suitable for loose-tolerance designs. Furthermore, we design the Physics Informed Image Restoration Network (PI2RNet), considering the physical priors of panoramic imaging and physics-informed learning. At the dataset level, we create the DIVPano dataset, and extensive experiments on it illustrate that our proposed network sets a new state of the art in panoramic image restoration under spatially-variant degradation. In addition, the evaluation of the proposed ACI on a simple PAL with only 3 spherical lenses reveals the delicate balance between high-quality panoramic imaging and compact design. To the best of our knowledge, we are the first to explore Computational Imaging (CI) in PAL. Code and datasets will be made publicly available at https://github.com/zju-jiangqi/ACI-PI2RNet.

Review on Panoramic Imaging and Its Applications in Scene Understanding

May 11, 2022

Abstract: With the rapid development of high-speed communication and artificial intelligence technologies, human perception of real-world scenes is no longer limited to the use of small Field of View (FoV) and low-dimensional scene detection devices. Panoramic imaging emerges as the next generation of innovative intelligent instruments for environmental perception and measurement. However, while satisfying the need for large-FoV photographic imaging, panoramic imaging instruments are expected to have high resolution, no blind area, miniaturization, and multi-dimensional intelligent perception, and can be combined with artificial intelligence methods towards the next generation of intelligent instruments, enabling deeper understanding and more holistic perception of 360-degree real-world surrounding environments. Fortunately, recent advances in freeform surfaces, thin-plate optics, and metasurfaces provide innovative approaches to address human perception of the environment, offering promising ideas beyond conventional optical imaging. In this review, we begin by introducing the basic principles of panoramic imaging systems, and then describe the architectures, features, and functions of various panoramic imaging systems. Afterwards, we discuss in detail the broad application prospects and great design potential of freeform surfaces, thin-plate optics, and metasurfaces in panoramic imaging. We then provide a detailed analysis of how these techniques can help enhance the performance of panoramic imaging systems. We further offer a detailed analysis of applications of panoramic imaging in scene understanding for autonomous driving and robotics, spanning panoramic semantic image segmentation, panoramic depth estimation, panoramic visual localization, and so on. Finally, we cast a perspective on future potential and research directions for panoramic imaging instruments.
