Shangyu Xie

Bidirectional Image-Event Guided Low-Light Image Enhancement

Jun 06, 2025

Abstract:Under extreme low-light conditions, traditional frame-based cameras, due to their limited dynamic range and temporal resolution, face detail loss and motion blur in captured images. To overcome this bottleneck, researchers have introduced event cameras and proposed event-guided low-light image enhancement algorithms. However, these methods neglect the influence of global low-frequency noise caused by dynamic lighting conditions and local structural discontinuities in sparse event data. To address these issues, we propose an innovative Bidirectional guided Low-light Image Enhancement framework (BiLIE). Specifically, to mitigate the significant low-frequency noise introduced by global illumination step changes, we introduce the frequency high-pass filtering-based Event Feature Enhancement (EFE) module at the event representation level to suppress the interference of low-frequency information, and preserve and highlight the high-frequency edges. Furthermore, we design a Bidirectional Cross Attention Fusion (BCAF) mechanism to acquire high-frequency structures and edges while suppressing structural discontinuities and local noise introduced by sparse event guidance, thereby generating smoother fused representations. Additionally, considering the poor visual quality and color bias in existing datasets, we provide a new dataset (RELIE), with high-quality ground truth through a reliable enhancement scheme. Extensive experimental results demonstrate that our proposed BiLIE outperforms state-of-the-art methods by 0.96dB in PSNR and 0.03 in LPIPS.
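
The frequency high-pass filtering idea mentioned in the abstract can be illustrated with a toy 1D sketch. This is not the EFE module itself: the abstract gives no implementation details, so the function names (`dft`, `idft`, `high_pass`), the 1D signal, and the hard cutoff are all assumptions made for illustration. The sketch removes a constant offset (standing in for a global illumination change) while keeping a rapidly alternating pattern (standing in for edges).

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform; fine for short illustrative signals."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]

def idft(spectrum):
    """Inverse of dft(); returns the real part of each reconstructed sample."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real / n
        for t in range(n)
    ]

def high_pass(signal, cutoff):
    """Zero every frequency bin whose index (distance from DC) is below `cutoff`.

    Hypothetical helper: a hard cutoff is the simplest possible high-pass filter,
    chosen here only to keep the example short.
    """
    n = len(signal)
    spectrum = dft(signal)
    for k in range(n):
        freq = min(k, n - k)  # bins k and n - k carry the same absolute frequency
        if freq < cutoff:
            spectrum[k] = 0
    return idft(spectrum)

# Constant offset of 10 (low frequency) plus an alternating +1/-1 pattern (high frequency).
signal = [10 + (-1) ** t for t in range(8)]
filtered = high_pass(signal, cutoff=1)  # cutoff=1 removes only the DC component
print([round(x, 6) for x in filtered])
```

With `cutoff=1` only the constant component is removed, so the alternating pattern survives unchanged; larger cutoffs would also suppress slowly varying structure.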

Label Inference Attack against Split Learning under Regression Setting

Jan 18, 2023

Abstract:As a crucial building block in vertical Federated Learning (vFL), Split Learning (SL) has proven practical for two-party collaborative model training, where one party holds the features of data samples and another party holds the corresponding labels. Such a method is claimed to be private because only embedding vectors and gradients are shared, rather than private raw data and labels. However, some recent works have shown that the private labels could be leaked by the gradients. These existing attacks work only under the classification setting, where the private labels are discrete. In this work, we go a step further and study the leakage in the regression setting, where the private labels are continuous numbers (instead of discrete labels in classification). This makes it harder for previous attacks to infer the continuous labels, because the output range is unbounded. To address this limitation, we propose a novel learning-based attack that integrates gradient information with extra learning regularization objectives derived from model training properties, and which can infer the labels under regression settings effectively. Comprehensive experiments on various datasets and models demonstrate the effectiveness of our proposed attack. We hope our work can pave the way for future analyses that make the vFL framework more secure.
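
As a toy illustration of why gradients can carry information about continuous labels (this is not the learning-based attack proposed in the paper), consider a squared-error loss. Its gradient with respect to the prediction is 2(pred - label), so anyone who observed both the prediction and that gradient could solve for the label exactly. In split learning the feature party typically sees only gradients with respect to its embedding, passed back through a top model it does not know, which is one reason the real problem is harder. The function names below are hypothetical.

```python
def squared_error_grad(pred, label):
    """Gradient of (pred - label) ** 2 with respect to pred."""
    return 2.0 * (pred - label)

def recover_label(pred, grad):
    """Invert the gradient formula above: label = pred - grad / 2.

    Assumes the observer knows the prediction and the exact loss function,
    which is a much stronger position than a real split-learning attacker has.
    """
    return pred - grad / 2.0

pred, label = 3.5, 1.25
grad = squared_error_grad(pred, label)
print(recover_label(pred, grad))  # prints 1.25
```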

On Fair Classification with Mostly Private Sensitive Attributes

Jul 18, 2022

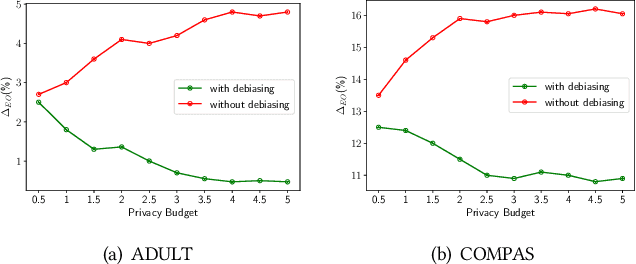

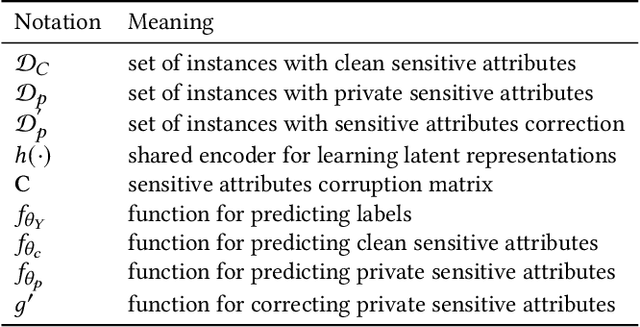

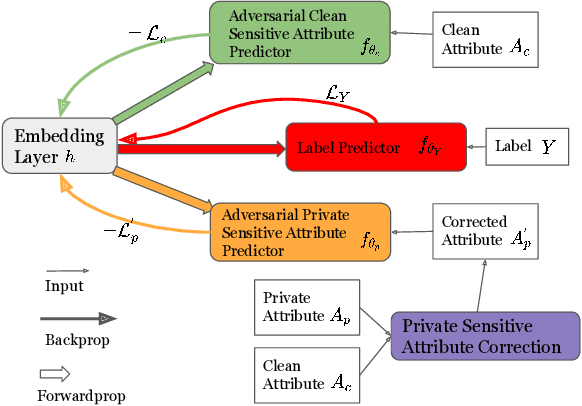

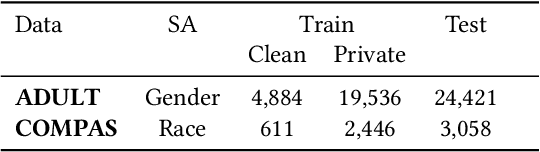

Abstract:Machine learning models have demonstrated promising performance in many areas. However, concerns that they can be biased against specific groups hinder their adoption in high-stakes applications. Thus it is essential to ensure fairness in machine learning models. Most previous efforts require access to sensitive attributes to mitigate bias. Nonetheless, it is often infeasible to obtain large-scale data with sensitive attributes, due to people's increasing awareness of privacy and to legal compliance requirements. Therefore, an important research question is how to make fair predictions under privacy. In this paper, we study a novel problem of fair classification in a semi-private setting, where most of the sensitive attributes are private and only a small amount of clean sensitive attributes are available. To this end, we propose a novel framework, FairSP, that first learns to correct the noisy sensitive attributes under a privacy guarantee by exploiting the limited clean sensitive attributes. Then, it jointly models the corrected and clean data in an adversarial way for debiasing and prediction. Theoretical analysis shows that the proposed model can ensure fairness when most of the sensitive attributes are private. Experimental results on real-world datasets demonstrate the effectiveness of the proposed model for making fair predictions under privacy while maintaining high accuracy.

VideoDP: A Universal Platform for Video Analytics with Differential Privacy

Sep 20, 2019

Abstract:Massive amounts of video data are ubiquitously generated in personal devices and dedicated video recording facilities. Analyzing such data would be extremely beneficial in real-world applications (e.g., urban traffic analysis, pedestrian behavior analysis, video surveillance). However, videos contain considerable sensitive information, such as human faces, identities and activities. Most existing video sanitization techniques simply obfuscate the video by detecting and blurring regions of interest (e.g., faces, vehicle plates, locations and timestamps) without quantifying and bounding the privacy leakage in the sanitization. In this paper, we propose what is, to the best of our knowledge, the first differentially private video analytics platform (VideoDP), which flexibly supports different video analyses with a rigorous privacy guarantee. Different from traditional noise-injection based differentially private mechanisms, given the input video, VideoDP randomly generates a utility-driven private video in which adding or removing any sensitive visual element (e.g., human, object) does not significantly affect the output video. Then, different video analyses requested by untrusted video analysts can be flexibly performed over the utility-driven video while ensuring differential privacy. Finally, we conduct experiments on real videos, and the experimental results demonstrate that VideoDP effectively supports video analytics with good utility.
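
For context, the "traditional noise-injection" approach that the abstract contrasts VideoDP with can be sketched using the standard Laplace mechanism. This is not VideoDP: it is a minimal illustration of the baseline idea, applied to a hypothetical counting query (for example, the number of pedestrians detected in a frame). Adding Laplace noise with scale sensitivity / epsilon to a query of the given sensitivity yields epsilon-differential privacy; the function names are assumptions.

```python
import random

def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count, epsilon, sensitivity=1.0, rng=random):
    """Release a count with Laplace noise calibrated to sensitivity / epsilon.

    A counting query changes by at most 1 when one individual is added or
    removed, hence the default sensitivity of 1.
    """
    scale = sensitivity / epsilon
    return true_count + laplace_noise(scale, rng)

rng = random.Random(0)  # seeded only to make the demo repeatable
print(private_count(100, epsilon=1.0, rng=rng))
```

Smaller values of `epsilon` give stronger privacy but noisier answers, which is the privacy-utility trade-off that any differentially private analysis has to manage.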
