Shang-Ling Hsu

Mobility-Embedded POIs: Learning What A Place Is and How It Is Used from Human Movement

Jan 29, 2026

Abstract:Recent progress in geospatial foundation models highlights the importance of learning general-purpose representations for real-world locations, particularly points-of-interest (POIs) where human activity concentrates. Existing approaches, however, focus primarily on place identity derived from static textual metadata, or learn representations tied to trajectory context, which capture movement regularities rather than how places are actually used (i.e., a POI's function). We argue that POI function is a missing but essential signal for general POI representations. We introduce Mobility-Embedded POIs (ME-POIs), a framework that augments POI embeddings derived from language models with large-scale human mobility data to learn POI-centric, context-independent representations grounded in real-world usage. ME-POIs encodes individual visits as temporally contextualized embeddings and aligns them with learnable POI representations via contrastive learning to capture usage patterns across users and time. To address long-tail sparsity, we propose a novel mechanism that propagates temporal visit patterns from nearby, frequently visited POIs across multiple spatial scales. We evaluate ME-POIs on five newly proposed map enrichment tasks, testing its ability to capture both the identity and function of POIs. Across all tasks, augmenting text-based embeddings with ME-POIs consistently outperforms both text-only and mobility-only baselines. Notably, ME-POIs trained on mobility data alone can surpass text-only models on certain tasks, highlighting that POI function is a critical component of accurate and generalizable POI representations.

Forecasting Unseen Points of Interest Visits Using Context and Proximity Priors

Nov 22, 2024

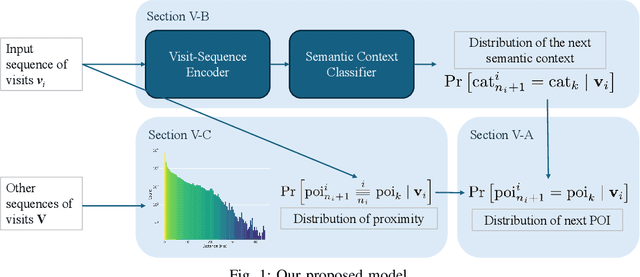

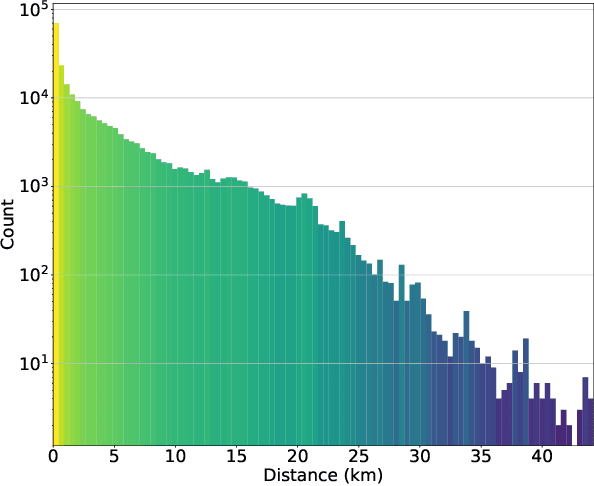

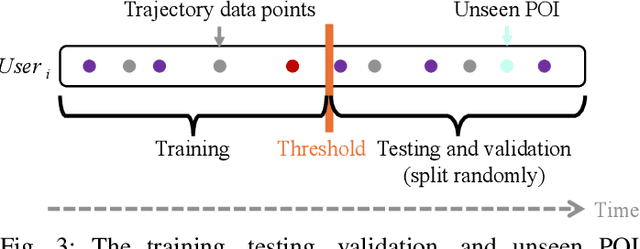

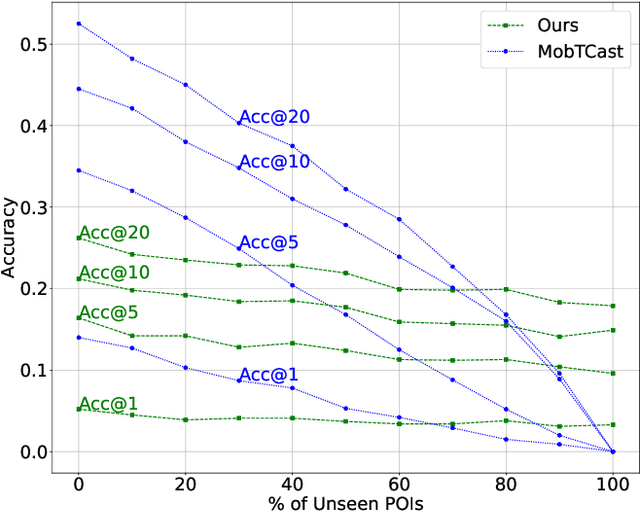

Abstract:Understanding human mobility behavior is crucial for numerous applications, including crowd management, location-based recommendations, and the estimation of pandemic spread. Machine learning models can predict the Points of Interest (POIs) that individuals are likely to visit in the future by analyzing their historical visit patterns. Previous studies address this problem by learning a POI classifier, where each class corresponds to a POI. However, this prevents them from predicting a new POI that was not in the training data, such as a newly opened restaurant. To address this challenge, we propose a model designed to predict a new POI outside the training data as long as its context is aligned with the user's interests. Unlike existing approaches that directly predict specific POIs, our model first forecasts the semantic context of potential future POIs, then combines this with a proximity-based prior probability distribution to determine the exact POI. Experimental results on real-world visit data demonstrate that our model outperforms baseline methods that do not account for semantic contexts, achieving a 17% improvement in accuracy. Notably, as new POIs are introduced over time, our model remains robust, exhibiting a lower decline rate in prediction accuracy compared to existing methods.
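
The two-stage idea in this abstract (forecast a semantic context, then combine it with a proximity-based prior) can be illustrated with a small sketch. This is not the paper's model: the category-probability dictionary standing in for the context forecast, the exponential distance decay, the `scale_km` parameter, and the function names `haversine_km` and `rank_pois` are all assumptions made for illustration.

```python
import math

def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) pairs given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def rank_pois(context_probs, pois, user_loc, scale_km=2.0):
    """Rank candidate POIs by (predicted context probability) x (proximity prior).

    context_probs: hypothetical output of a context forecaster, category -> probability.
    pois: list of dicts with keys "name", "category", "loc" (lat, lon).
    The exponential decay is an assumed prior, not the one used in the paper.
    """
    scores = {}
    for poi in pois:
        p_context = context_probs.get(poi["category"], 0.0)
        prior = math.exp(-haversine_km(user_loc, poi["loc"]) / scale_km)
        scores[poi["name"]] = p_context * prior
    total = sum(scores.values())
    if total == 0:
        return []
    # Normalize so the scores form a distribution over the candidates.
    return sorted(((name, s / total) for name, s in scores.items()),
                  key=lambda item: -item[1])
```

Because candidates are scored through their category and location rather than through a per-POI class, a POI that never appeared in any training data can still be ranked, which is the property the abstract emphasizes.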

TrajGPT: Controlled Synthetic Trajectory Generation Using a Multitask Transformer-Based Spatiotemporal Model

Nov 07, 2024

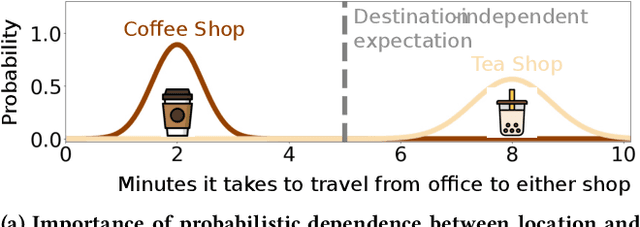

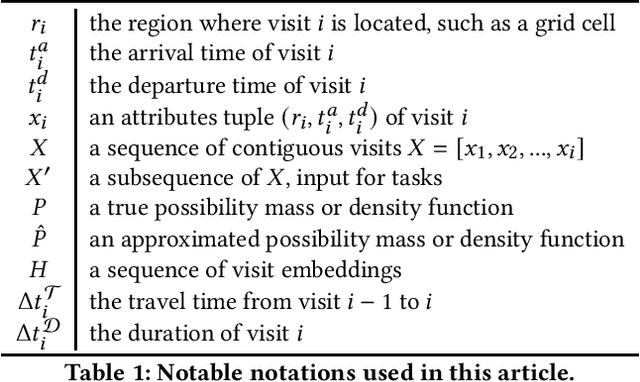

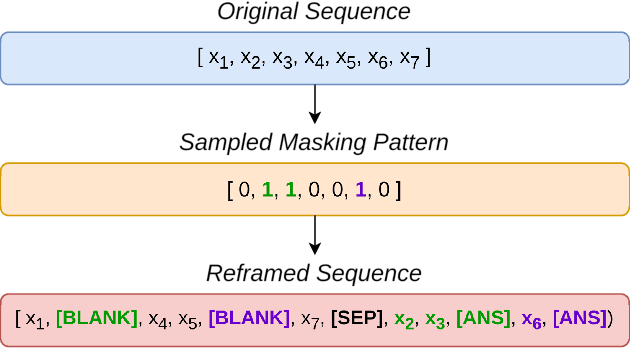

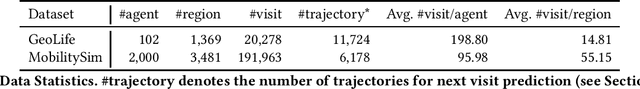

Abstract:Human mobility modeling from GPS trajectories and synthetic trajectory generation are crucial for various applications, such as urban planning, disaster management, and epidemiology. Both of these tasks often require filling gaps in a partially specified sequence of visits, a new problem that we call "controlled" synthetic trajectory generation. Existing methods for next-location prediction or synthetic trajectory generation cannot solve this problem as they lack the mechanisms needed to constrain the generated sequences of visits. Moreover, existing approaches (1) frequently treat space and time as independent factors, an assumption that fails to hold true in real-world scenarios, and (2) suffer from challenges in accuracy of temporal prediction as they fail to deal with mixed distributions and the inter-relationships of different modes with latent variables (e.g., day-of-the-week). These limitations become even more pronounced when the task involves filling gaps within sequences instead of solely predicting the next visit. We introduce TrajGPT, a transformer-based, multi-task, joint spatiotemporal generative model to address these issues. Taking inspiration from large language models, TrajGPT poses the problem of controlled trajectory generation as that of text infilling in natural language. TrajGPT integrates the spatial and temporal models in a transformer architecture through a Bayesian probability model that ensures that the gaps in a visit sequence are filled in a spatiotemporally consistent manner. Our experiments on public and private datasets demonstrate that TrajGPT not only excels in controlled synthetic visit generation but also outperforms competing models in next-location prediction tasks. In relative terms, TrajGPT achieves a 26-fold improvement in temporal accuracy while retaining more than 98% of spatial accuracy on average.

Helping the Helper: Supporting Peer Counselors via AI-Empowered Practice and Feedback

May 15, 2023

Abstract:Millions of users come to online peer counseling platforms to seek support on diverse topics ranging from relationship stress to anxiety. However, studies show that online peer support groups are not always as effective as expected, largely due to users' negative experiences with unhelpful counselors. Peer counselors are key to the success of online peer counseling platforms, but most of them do not have systematic ways to receive guidelines or supervision. In this work, we introduce CARE: an interactive AI-based tool to empower peer counselors through automatic suggestion generation. During the practical training stage, CARE helps diagnose which specific counseling strategies are most suitable in the given context and provides tailored example responses as suggestions. Counselors can choose to select, modify, or ignore any suggestion before replying to the support seeker. Building upon the Motivational Interviewing framework, CARE utilizes large-scale counseling conversation data together with advanced natural language generation techniques to achieve these functionalities. We demonstrate the efficacy of CARE by performing both quantitative evaluations and qualitative user studies through simulated chats and semi-structured interviews. We also find that CARE especially helps novice counselors respond better in challenging situations.

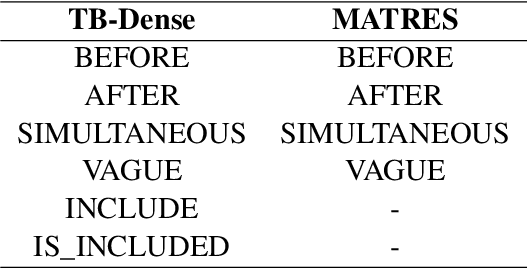

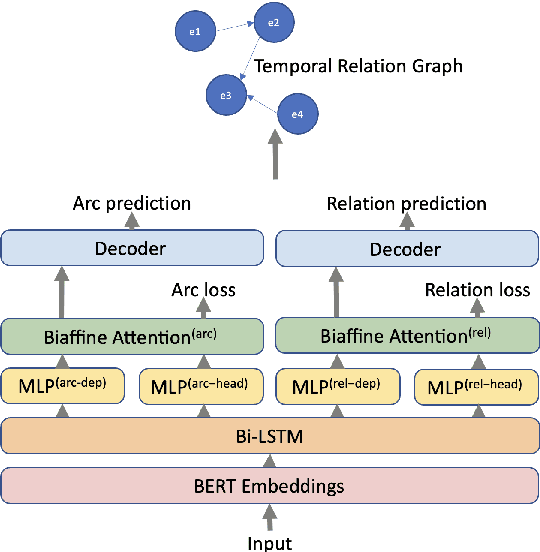

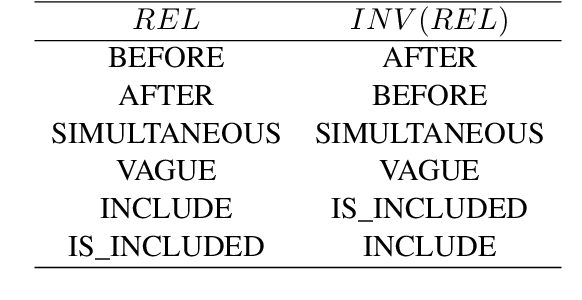

Temporal Relation Extraction with a Graph-Based Deep Biaffine Attention Model

Jan 16, 2022

Abstract:Temporal information extraction plays a critical role in natural language understanding. Previous systems have incorporated advanced neural language models and have successfully enhanced the accuracy of temporal information extraction tasks. However, these systems have two major shortcomings. First, they fail to make use of the two-sided nature of temporal relations in prediction. Second, they involve non-parallelizable pipelines in the inference process that bring little performance gain. To this end, we propose a novel temporal information extraction model based on deep biaffine attention to extract temporal relationships between events in unstructured text efficiently and accurately. Our model is performant because we perform relation extraction tasks directly instead of considering event annotation as a prerequisite of relation extraction. Moreover, our architecture uses Multilayer Perceptrons (MLP) with biaffine attention to predict arcs and relation labels separately, improving relation detection accuracy by exploiting the two-sided nature of temporal relationships. We experimentally demonstrate that our model achieves state-of-the-art performance in temporal relation extraction.
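
The abstract does not spell out the scoring function, so the sketch below only shows the general biaffine form known from the dependency-parsing literature: a bilinear term plus a linear term over the concatenated pair, plus a bias. The function name `biaffine_score`, the plain-list vectors, and the toy weights are assumptions for illustration; the paper's MLP projections and separate label scorer are omitted.

```python
def biaffine_score(h_src, h_tgt, U, w, b):
    """Biaffine score for an ordered pair of event vectors.

    score = h_src^T U h_tgt + w^T [h_src ; h_tgt] + b
    h_src, h_tgt: lists of floats of length d (illustrative event representations).
    U: d x d matrix as a list of rows; w: list of length 2d; b: float.
    """
    bilinear = sum(h_src[r] * U[r][c] * h_tgt[c]
                   for r in range(len(h_src))
                   for c in range(len(h_tgt)))
    linear = sum(wi * xi for wi, xi in zip(w, h_src + h_tgt))
    return bilinear + linear + b

def score_all_pairs(vectors, U, w, b):
    """Score every ordered pair (i, j) with i != j; pairs are independent of each other."""
    n = len(vectors)
    return {(i, j): biaffine_score(vectors[i], vectors[j], U, w, b)
            for i in range(n) for j in range(n) if i != j}
```

With a non-symmetric `U`, the score for (i, j) generally differs from the score for (j, i), so the two directions of a pair are scored separately. Since each pair is scored independently, all pairs can in principle be computed in parallel.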

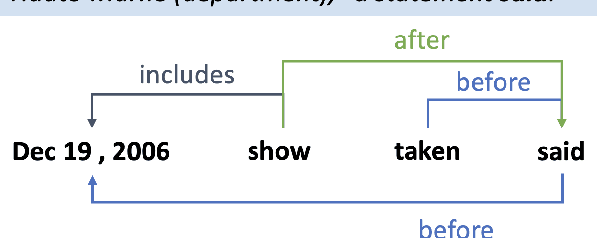

Context-Dependent Semantic Parsing for Temporal Relation Extraction

Dec 02, 2021

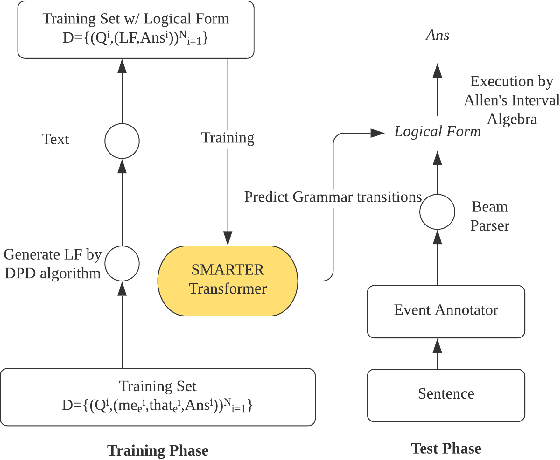

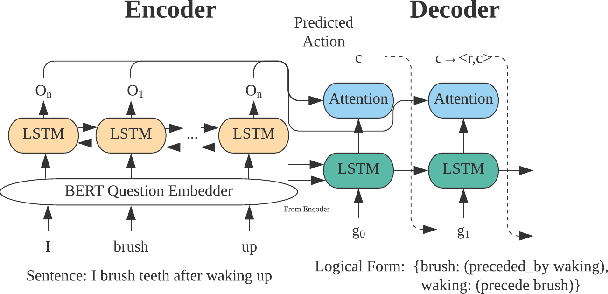

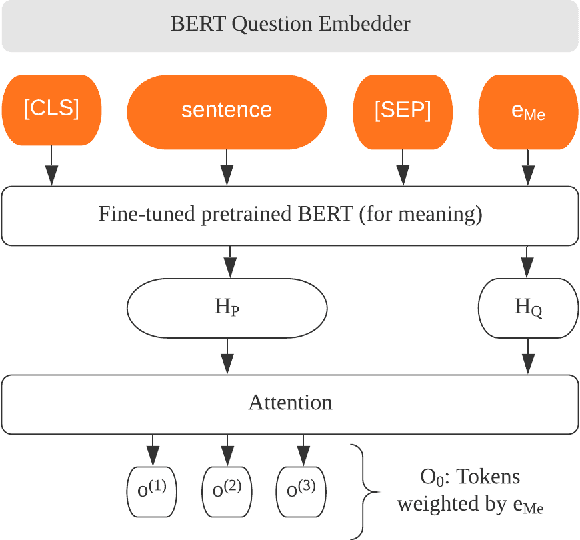

Abstract:Extracting temporal relations among events from unstructured text has extensive applications, such as temporal reasoning and question answering. Although the task is difficult, recent developments in neural-symbolic methods have shown promising results on similar tasks. Current temporal relation extraction methods usually suffer from limited expressivity and inconsistent relation inference. For example, in TimeML annotations, the concept of intersection is absent. Additionally, current methods do not guarantee consistency among the predicted annotations. In this work, we propose SMARTER, a neural semantic parser, to extract temporal information in text effectively. SMARTER parses natural language to an executable logical form representation, based on a custom typed lambda calculus. In the training phase, the dynamic programming on denotations (DPD) technique is used to provide weak supervision on logical forms. In the inference phase, SMARTER generates a temporal relation graph by executing the logical form. As a result, our neural semantic parser produces logical forms capturing the temporal information of text precisely. The accurate logical form representations of an event given the context ensure the correctness of the extracted relations.
