Shahine Bouabid

Score-based generative emulation of impact-relevant Earth system model outputs

Oct 05, 2025

Abstract:Policy targets evolve faster than the Coupled Model Intercomparison Project cycles, complicating adaptation and mitigation planning that must often contend with outdated projections. Climate model output emulators address this gap by offering inexpensive surrogates that can rapidly explore alternative futures while staying close to Earth System Model (ESM) behavior. We focus on emulators designed to provide inputs to impact models. Using monthly ESM fields of near-surface temperature, precipitation, relative humidity, and wind speed, we show that deep generative models have the potential to jointly model the distribution of variables relevant for impacts. The specific model we propose uses score-based diffusion on a spherical mesh and runs on a single mid-range graphics processing unit. We introduce a thorough suite of diagnostics to compare emulator outputs with their parent ESMs, including their probability densities, cross-variable correlations, time of emergence, and tail behavior. We evaluate performance across three distinct ESMs in both pre-industrial and forced regimes. The results show that the emulator produces distributions that closely match the ESM outputs and captures key forced responses. They also reveal important failure cases, notably for variables with a strong regime shift in the seasonal cycle. Although the emulator is not a perfect match to the ESM, its inaccuracies are small relative to the scale of internal variability in ESM projections. We therefore argue that it shows potential to be useful in supporting impact assessment. We discuss priorities for future development toward daily resolution, finer spatial scales, and bias-aware training. Code is made available at https://github.com/shahineb/climemu.

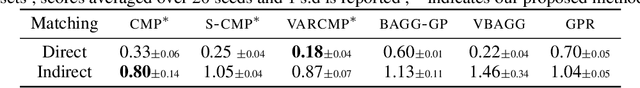

Indirect Query Bayesian Optimization with Integrated Feedback

Dec 18, 2024

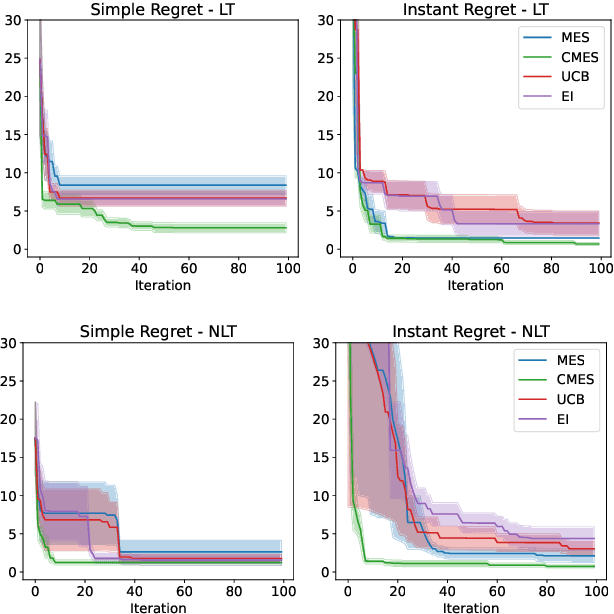

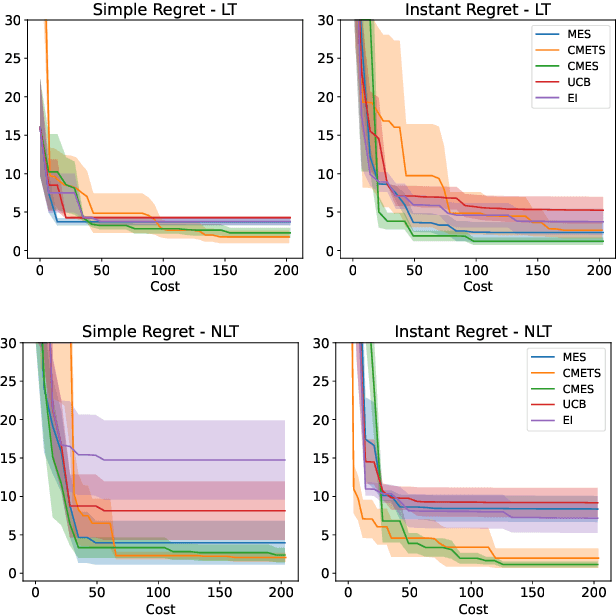

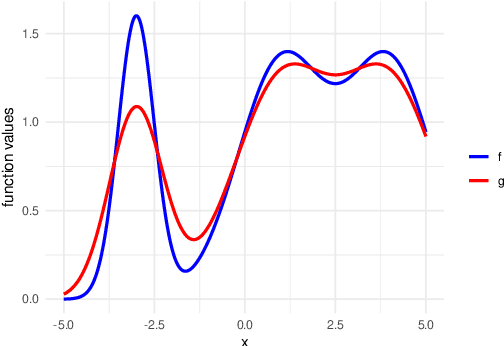

Abstract:We develop the framework of Indirect Query Bayesian Optimization (IQBO), a new class of Bayesian optimization problems where the integrated feedback is given via a conditional expectation of the unknown function $f$ to be optimized. The underlying conditional distribution can be unknown and learned from data. The goal is to find the global optimum of $f$ by adaptively querying and observing in the space transformed by the conditional distribution. This is motivated by real-world applications where one cannot access direct feedback due to privacy, hardware or computational constraints. We propose the Conditional Max-Value Entropy Search (CMES) acquisition function to address this novel setting, and propose a hierarchical search algorithm to address the multi-resolution setting and improve the computational efficiency. We show regret bounds for our proposed methods and demonstrate the effectiveness of our approaches on simulated optimization tasks.

Domain Generalisation via Imprecise Learning

Apr 06, 2024

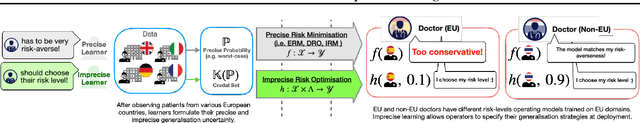

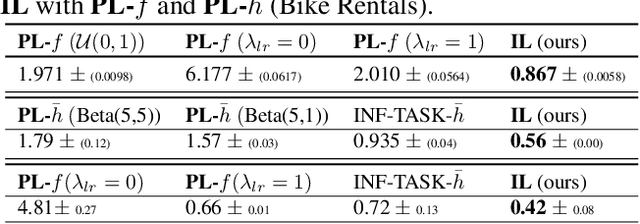

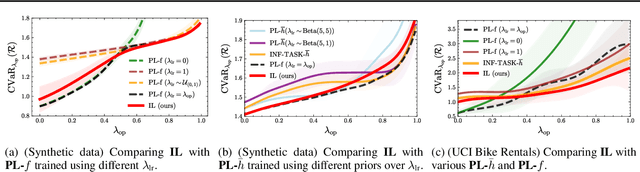

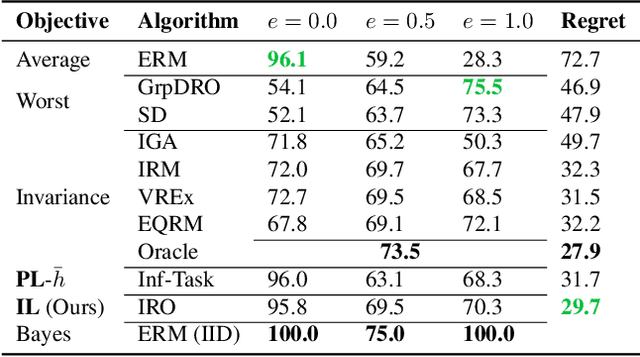

Abstract:Out-of-distribution (OOD) generalisation is challenging because it involves not only learning from empirical data, but also deciding among various notions of generalisation, e.g., optimising the average-case risk, worst-case risk, or interpolations thereof. While this choice should in principle be made by the model operators, such as medical doctors, this information might not always be available at training time. Because of these deployment uncertainties, the institutional separation between machine learners and model operators leads machine learners to commit arbitrarily to specific generalisation strategies. We introduce the Imprecise Domain Generalisation framework to mitigate this, featuring an imprecise risk optimisation that allows learners to stay imprecise by optimising against a continuous spectrum of generalisation strategies during training, and a model framework that allows operators to specify their generalisation preference at deployment. Supported by both theoretical and empirical evidence, our work showcases the benefits of integrating imprecision into domain generalisation.

FaIRGP: A Bayesian Energy Balance Model for Surface Temperatures Emulation

Jul 14, 2023

Abstract:Emulators, or reduced complexity climate models, are surrogate Earth system models that produce projections of key climate quantities with minimal computational resources. Using time-series modeling or more advanced machine learning techniques, data-driven emulators have emerged as a promising avenue of research, producing spatially resolved climate responses that are visually indistinguishable from state-of-the-art Earth system models. Yet, their lack of physical interpretability limits their wider adoption. In this work, we introduce FaIRGP, a data-driven emulator that satisfies the physical temperature response equations of an energy balance model. The result is an emulator that (i) enjoys the flexibility of statistical machine learning models and can learn from observations, and (ii) has a robust physical grounding with interpretable parameters that can be used to make inference about the climate system. Further, our Bayesian approach allows a principled and mathematically tractable uncertainty quantification. Our model demonstrates skillful emulation of global mean surface temperature and spatial surface temperatures across realistic future scenarios. Its ability to learn from data allows it to outperform energy balance models, while its robust physical foundation safeguards against the pitfalls of purely data-driven models. We also illustrate how FaIRGP can be used to obtain estimates of top-of-atmosphere radiative forcing and discuss the benefits of its mathematical tractability for applications such as detection and attribution or precipitation emulation. We hope that this work will contribute to widening the adoption of data-driven methods in climate emulation.

Returning The Favour: When Regression Benefits From Probabilistic Causal Knowledge

Jan 26, 2023

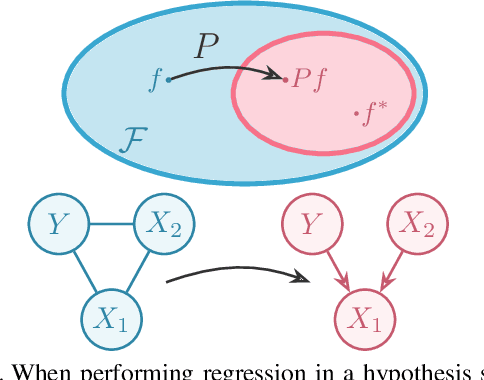

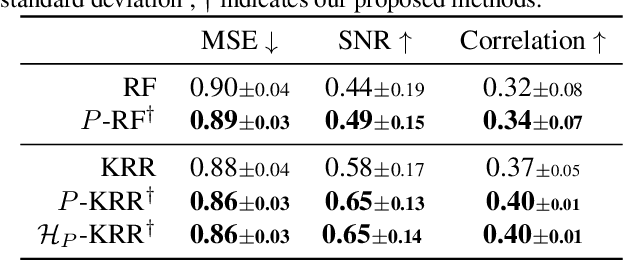

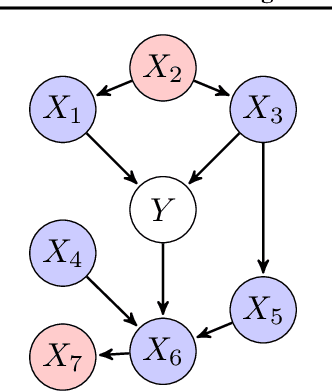

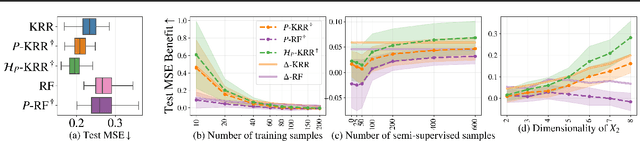

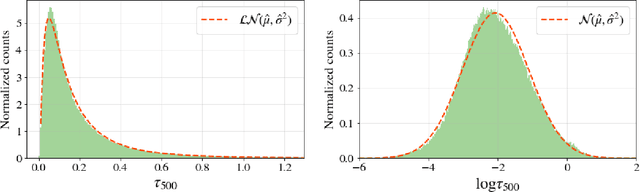

Abstract:A directed acyclic graph (DAG) provides valuable prior knowledge that is often discarded in regression tasks in machine learning. We show that the independences arising from the presence of collider structures in DAGs provide meaningful inductive biases, which constrain the regression hypothesis space and improve predictive performance. We introduce collider regression, a framework to incorporate probabilistic causal knowledge from a collider in a regression problem. When the hypothesis space is a reproducing kernel Hilbert space, we prove a strictly positive generalisation benefit under mild assumptions and provide closed-form estimators of the empirical risk minimiser. Experiments on synthetic and climate model data demonstrate performance gains of the proposed methodology.

AODisaggregation: toward global aerosol vertical profiles

May 06, 2022

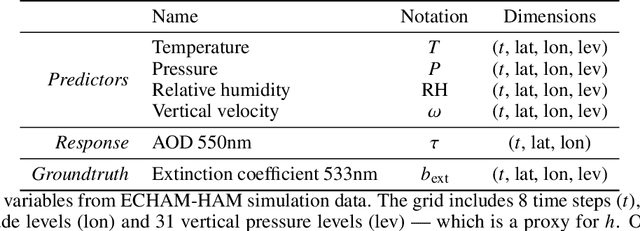

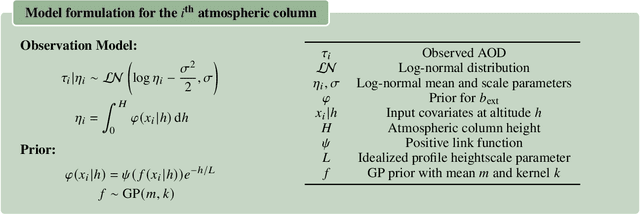

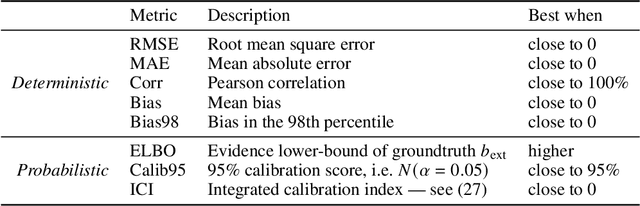

Abstract:Aerosol-cloud interactions constitute the largest source of uncertainty in assessments of anthropogenic climate change. This uncertainty arises in part from the difficulty in measuring the vertical distributions of aerosols, and only sporadic vertically resolved observations are available. We often have to settle for less informative vertically aggregated proxies such as aerosol optical depth (AOD). In this work, we develop a framework for the vertical disaggregation of AOD into extinction profiles, i.e. the measure of light extinction throughout an atmospheric column, using readily available vertically resolved meteorological predictors such as temperature, pressure or relative humidity. Using Bayesian nonparametric modelling, we devise a simple Gaussian process prior over aerosol vertical profiles and update it with AOD observations to infer a distribution over vertical extinction profiles. To validate our approach, we use ECHAM-HAM aerosol-climate model data, which offers self-consistent simulations of meteorological covariates, AOD and extinction profiles. Our results show that, while very simple, our model is able to reconstruct realistic extinction profiles with well-calibrated uncertainty, outperforming by an order of magnitude the idealized baseline which is typically used in satellite AOD retrieval algorithms. In particular, the model demonstrates a faithful reconstruction of extinction patterns arising from aerosol water uptake in the boundary layer. Observations, however, suggest that other extinction patterns, due to aerosol mass concentration, particle size and radiative properties, might be more challenging to capture and require additional vertically resolved predictors.
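
The idea of updating a Gaussian process prior over a vertical profile with a single column-aggregated observation can be illustrated with a small linear-Gaussian sketch. This is not the paper's model: it assumes a plain RBF kernel over height, a trapezoidal approximation of the column integral, and Gaussian observation noise, and it ignores the meteorological predictors. The function names and parameters (`rbf_kernel`, `condition_on_column_integral`, `lengthscale`, `noise_var`) are hypothetical, chosen for illustration only.

```python
import numpy as np

def rbf_kernel(z1, z2, lengthscale=1.0, variance=1.0):
    # Squared-exponential covariance between two sets of heights.
    d = z1[:, None] - z2[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)

def condition_on_column_integral(z, prior_mean, y_obs, noise_var=1e-4, lengthscale=1.0):
    """Update a GP prior over a profile f(z) with one aggregate observation
    y = integral of f over z + Gaussian noise (illustrative assumption)."""
    K = rbf_kernel(z, z, lengthscale)
    # Trapezoidal quadrature weights so that w @ f approximates the integral of f.
    dz = np.diff(z)
    w = np.zeros_like(z)
    w[:-1] += dz / 2
    w[1:] += dz / 2
    Kw = K @ w                      # covariance between the profile and the aggregate
    s = w @ Kw + noise_var          # variance of the aggregate observation
    gain = Kw / s
    post_mean = prior_mean + gain * (y_obs - w @ prior_mean)
    post_cov = K - np.outer(gain, Kw)
    return post_mean, post_cov, w

z = np.linspace(0.0, 10.0, 50)      # heights (arbitrary units)
prior_mean = np.exp(-z / 2.0)       # assumed decaying prior profile
post_mean, post_cov, w = condition_on_column_integral(z, prior_mean, y_obs=3.0)
```

After the update, the posterior mean integrates to approximately the observed aggregate, while the posterior covariance still leaves the shape of the profile uncertain. A Gaussian posterior of this kind can take negative values, so a model of a positive quantity such as extinction would need an additional positivity constraint or transformation.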

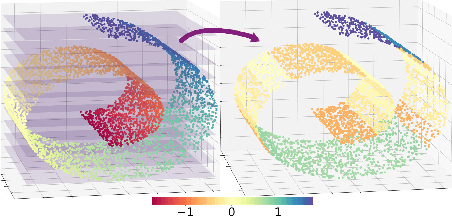

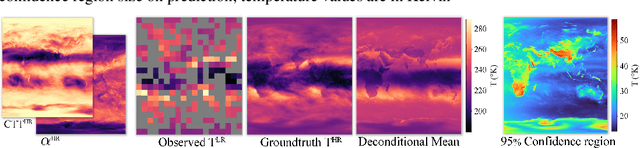

Deconditional Downscaling with Gaussian Processes

Jun 05, 2021

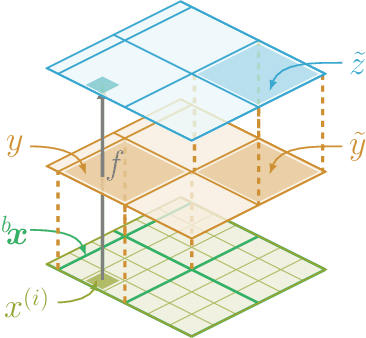

Abstract:Refining low-resolution (LR) spatial fields with high-resolution (HR) information is challenging as the diversity of spatial datasets often prevents direct matching of observations. Yet, when LR samples are modeled as aggregate conditional means of HR samples with respect to a mediating variable that is globally observed, the recovery of the underlying fine-grained field can be framed as taking an "inverse" of the conditional expectation, namely a deconditioning problem. In this work, we introduce conditional mean processes (CMP), a new class of Gaussian Processes describing conditional means. By treating CMPs as inter-domain features of the underlying field, a posterior for the latent field can be established as a solution to the deconditioning problem. Furthermore, we show that this solution can be viewed as a two-staged vector-valued kernel ridge regressor and show that it has a minimax optimal convergence rate under mild assumptions. Lastly, we demonstrate its proficiency in a synthetic and a real-world atmospheric field downscaling problem, showing substantial improvements over existing methods.

NightVision: Generating Nighttime Satellite Imagery from Infra-Red Observations

Dec 08, 2020

Abstract:Recent applications of machine learning to satellite imagery often rely on visible images and therefore suffer from a lack of data during the night. The gap can be filled by employing available infra-red observations to generate visible images. This work presents how deep learning can be applied successfully to create those images by using U-Net based architectures. The proposed methods show promising results, achieving a structural similarity index (SSIM) up to 86% on an independent test set and providing visually convincing output images, generated from infra-red observations.

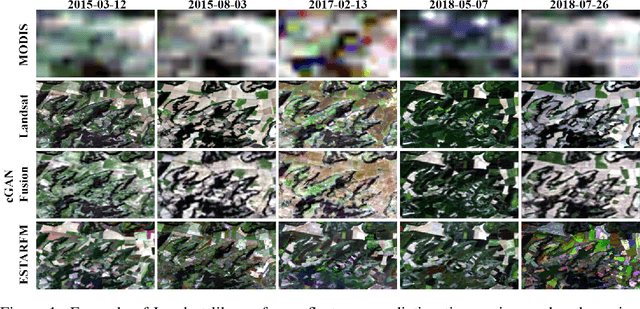

Predicting Landsat Reflectance with Deep Generative Fusion

Nov 09, 2020

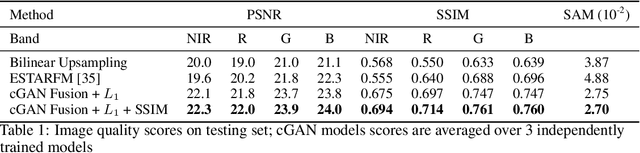

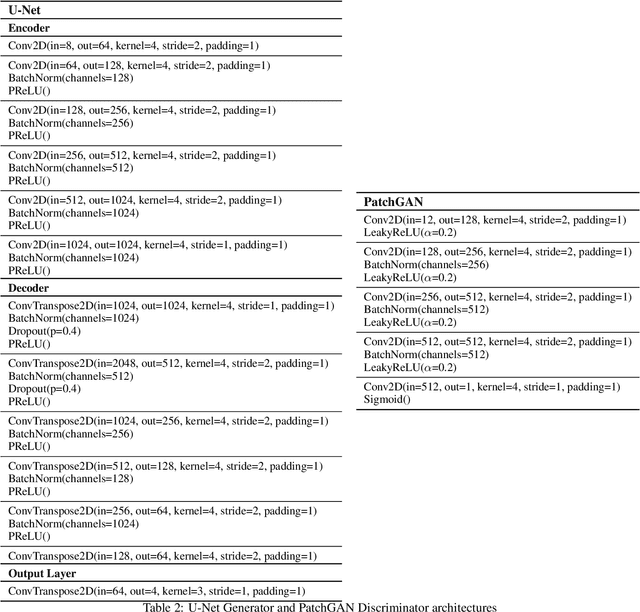

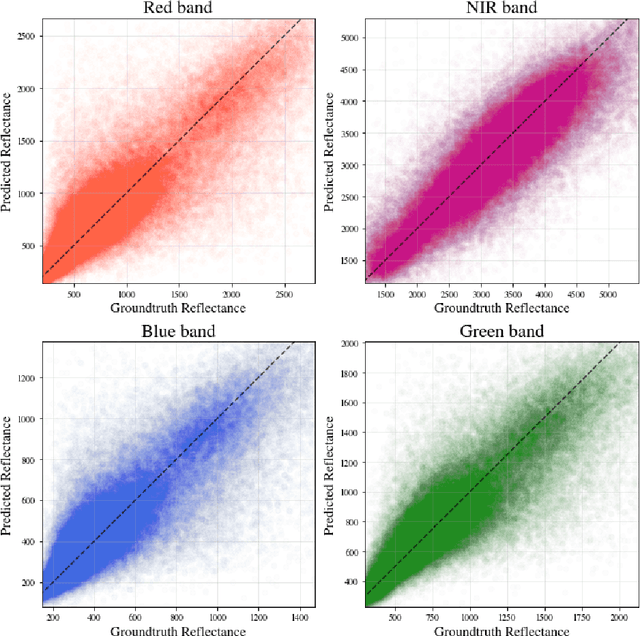

Abstract:Public satellite missions are commonly bound to a trade-off between spatial and temporal resolution as no single sensor provides fine-grained acquisitions with frequent coverage. This hinders their potential to assist vegetation monitoring or humanitarian actions, which require detecting rapid and detailed terrestrial surface changes. In this work, we probe the potential of deep generative models to produce high-resolution optical imagery by fusing products with different spatial and temporal characteristics. We introduce a dataset of co-registered Moderate Resolution Imaging Spectroradiometer (MODIS) and Landsat surface reflectance time series and demonstrate the ability of our generative model to blend coarse daily reflectance information into low-paced finer acquisitions. We benchmark our proposed model against state-of-the-art reflectance fusion algorithms.

Mixup Regularization for Region Proposal based Object Detectors

Mar 04, 2020

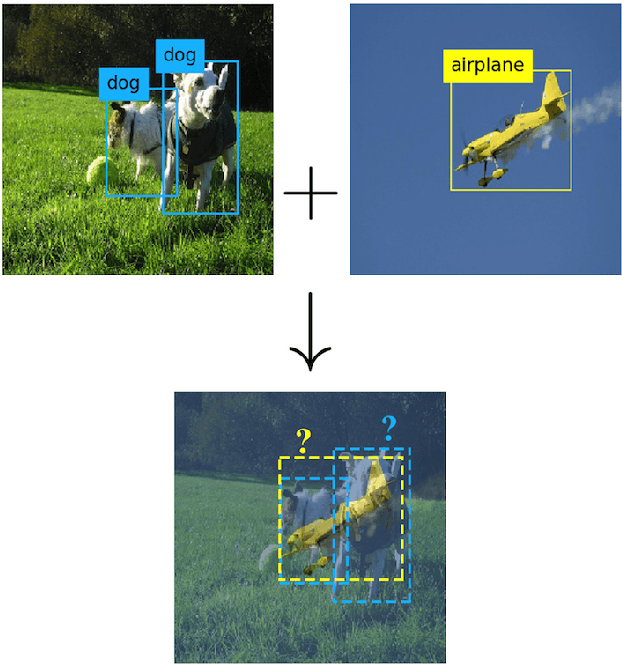

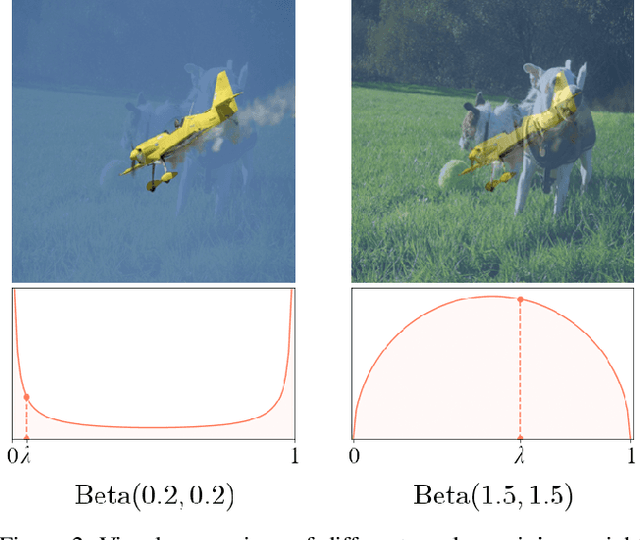

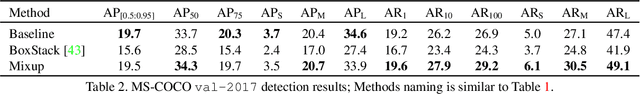

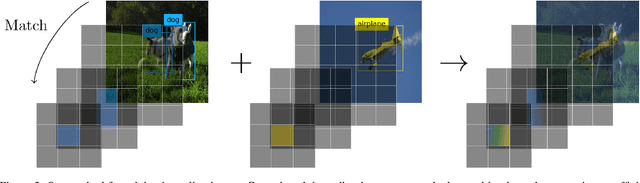

Abstract:Mixup - a neural network regularization technique based on linear interpolation of labeled sample pairs - has stood out for its capacity to improve a model's robustness and generalizability through a surprisingly simple formalism. However, its extension to the field of object detection remains unclear as the interpolation of bounding boxes cannot be naively defined. In this paper, we propose to leverage the inherent region mapping structure of anchors to introduce a mixup-driven training regularization for region proposal based object detectors. The proposed method is benchmarked on standard datasets with challenging detection settings. Our experiments show an enhanced robustness to image alterations along with an ability to decontextualize detections, resulting in an improved generalization power.
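
For reference, the standard classification form of mixup that the abstract builds on (linear interpolation of labeled sample pairs) can be sketched as follows. This is only the basic formulation, not the anchor-based extension to object detectors proposed in the paper; the function name `mixup_batch` and the default `alpha` are assumptions made for illustration.

```python
import numpy as np

def mixup_batch(x, y_onehot, alpha=0.2, rng=None):
    """Mix each sample with a randomly chosen partner from the same batch."""
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)          # interpolation coefficient in [0, 1]
    perm = rng.permutation(len(x))        # random pairing of samples
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix, lam

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))               # toy batch of 4 samples with 3 features
y = np.eye(2)[[0, 1, 0, 1]]               # one-hot labels for 2 classes
x_mix, y_mix, lam = mixup_batch(x, y, rng=rng)
```

The mixed labels remain valid probability vectors because they are convex combinations of one-hot vectors. The same interpolation has no obvious meaning for bounding boxes, which is the difficulty the abstract points to.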
