Satoshi Koide

LaViC: Adapting Large Vision-Language Models to Visually-Aware Conversational Recommendation

Mar 30, 2025

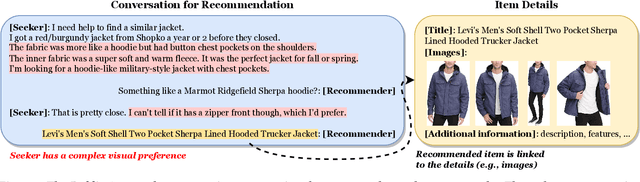

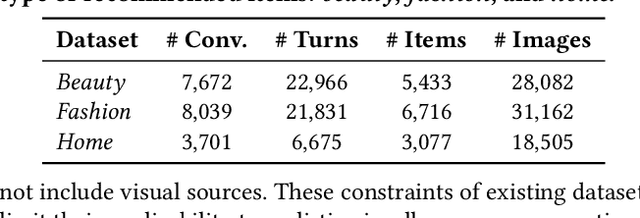

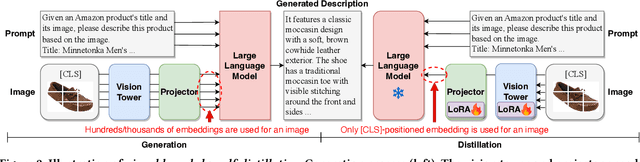

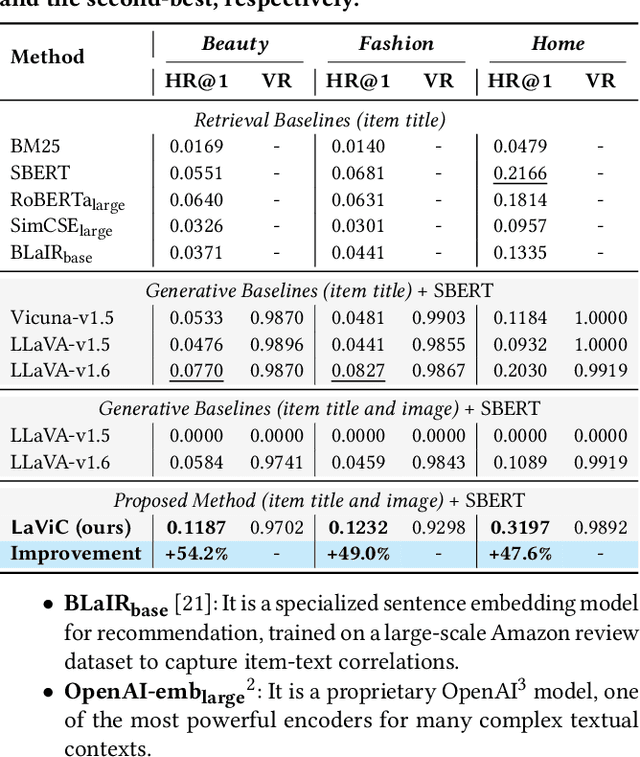

Abstract:Conversational recommender systems engage users in dialogues to refine their needs and provide more personalized suggestions. Although textual information suffices for many domains, visually driven categories such as fashion or home decor potentially require detailed visual information related to color, style, or design. To address this challenge, we propose LaViC (Large Vision-Language Conversational Recommendation Framework), a novel approach that integrates compact image representations into dialogue-based recommendation systems. LaViC leverages a large vision-language model in a two-stage process: (1) visual knowledge self-distillation, which condenses product images from hundreds of tokens into a small set of visual tokens in a self-distillation manner, significantly reducing computational overhead, and (2) recommendation prompt tuning, which enables the model to incorporate both dialogue context and distilled visual tokens, providing a unified mechanism for capturing textual and visual features. To support rigorous evaluation of visually-aware conversational recommendation, we construct a new dataset by aligning Reddit conversations with Amazon product listings across multiple visually oriented categories (e.g., fashion, beauty, and home). This dataset covers realistic user queries and product appearances in domains where visual details are crucial. Extensive experiments demonstrate that LaViC significantly outperforms text-only conversational recommendation methods and open-source vision-language baselines. Moreover, LaViC achieves competitive or superior accuracy compared to prominent proprietary baselines (e.g., GPT-3.5-turbo, GPT-4o-mini, and GPT-4o), demonstrating the necessity of explicitly using visual data for capturing product attributes and showing the effectiveness of our vision-language integration. Our code and dataset are available at https://github.com/jeon185/LaViC.

Impact of Tone-Aware Explanations in Recommender Systems

May 08, 2024

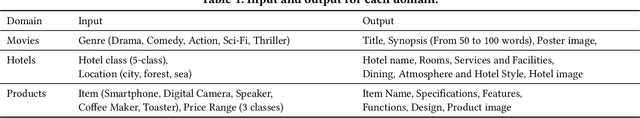

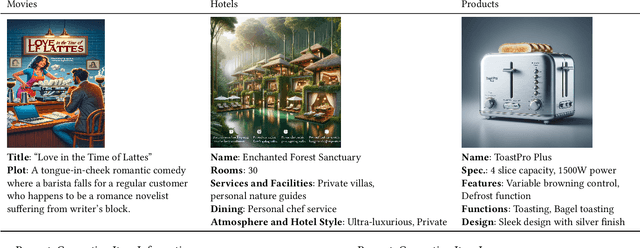

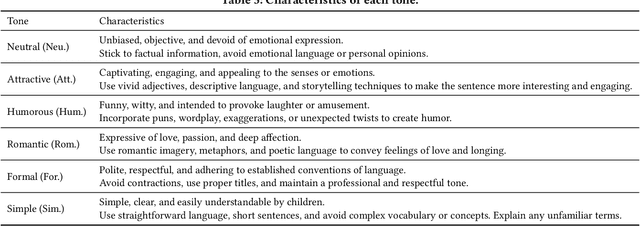

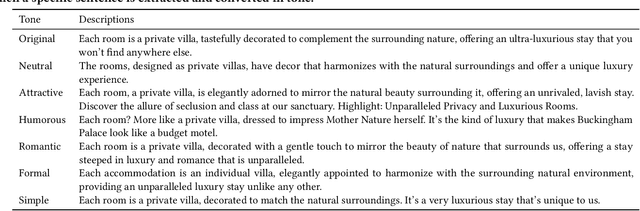

Abstract:In recommender systems, the presentation of explanations plays a crucial role in supporting users' decision-making processes. Although numerous existing studies have focused on the effects (transparency or persuasiveness) of explanation content, explanation expression has been largely overlooked. Tone, such as formal or humorous, is directly linked to expressiveness and is an important element in human communication. However, studies on the impact of tone on explanations within the context of recommender systems are insufficient. Therefore, this study investigates the effect of explanation tones through an online user study from three aspects: perceived effects, domain differences, and user attributes. We create a dataset using a large language model to generate fictional items and explanations with various tones in the domains of movies, hotels, and home products. Analysis of the collected data reveals that the perceived effects of tones differ depending on the domain. Moreover, user attributes such as age and personality traits are found to influence the impact of tone. This research underscores the critical role of tones in explanations within recommender systems, suggesting that attention to tone can enhance user experience.

One-Shot Domain Incremental Learning

Mar 25, 2024

Abstract:Domain incremental learning (DIL) has been discussed in previous studies on deep neural network models for classification. In DIL, we assume that samples on new domains are observed over time. The models must classify inputs on all domains. In practice, however, we may encounter a situation where we need to perform DIL under the constraint that the samples on the new domain are observed only infrequently. Therefore, in this study, we consider the extreme case where we have only one sample from the new domain, which we call one-shot DIL. We first empirically show that existing DIL methods do not work well in one-shot DIL. We have analyzed the reason for this failure through various investigations. According to our analysis, we clarify that the difficulty of one-shot DIL is caused by the statistics in the batch normalization layers. Therefore, we propose a technique regarding these statistics and demonstrate the effectiveness of our technique through experiments on open datasets.

Deep generative model super-resolves spatially correlated multiregional climate data

Sep 26, 2022

Abstract:Super-resolving the coarse outputs of global climate simulations, termed downscaling, is crucial in making political and social decisions on systems requiring long-term climate change projections. Existing fast super-resolution techniques, however, have yet to preserve the spatially correlated nature of climatological data, which is particularly important when we address systems with spatial expanse, such as the development of transportation infrastructure. Herein, we show that adversarial network-based machine learning enables us to correctly reconstruct the inter-regional spatial correlations in downscaling with high magnification up to fifty, while maintaining the pixel-wise statistical consistency. Direct comparison with the measured meteorological data of temperature and precipitation distributions reveals that integrating climatologically important physical information is essential for accurate downscaling, which prompts us to call our approach $\pi$SRGAN (Physics Informed Super-Resolution Generative Adversarial Network). The present method has a potential application to the inter-regionally consistent assessment of the climate change impact.

Partial Wasserstein Covering

Jun 02, 2021

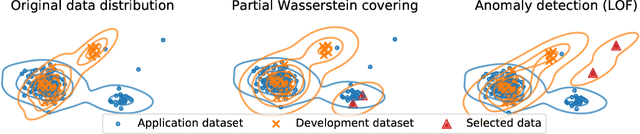

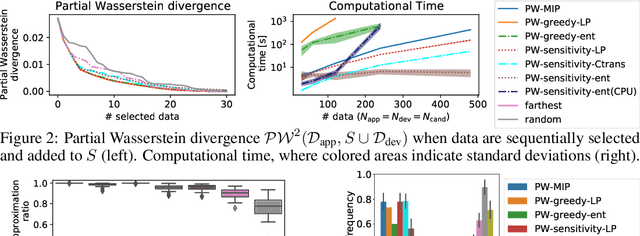

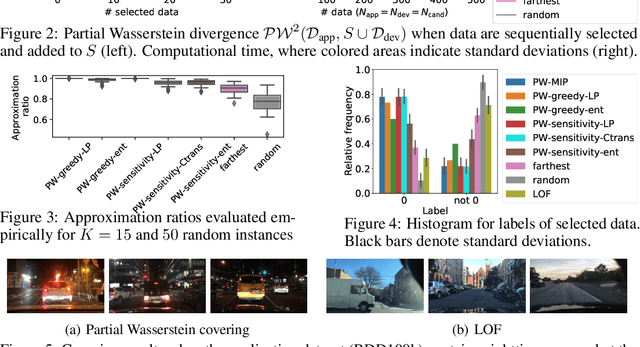

Abstract:We consider a general task called partial Wasserstein covering with the goal of emulating a large dataset (e.g., application dataset) using a small dataset (e.g., development dataset) in terms of the empirical distribution by selecting a small subset from a candidate dataset and adding it to the small dataset. We model this task as a discrete optimization problem with partial Wasserstein divergence as an objective function. Although this problem is NP-hard, we prove that it has the submodular property, allowing us to use a greedy algorithm with a 0.63 approximation. However, the greedy algorithm is still inefficient because it requires linear programming for each objective function evaluation. To overcome this difficulty, we propose quasi-greedy algorithms for acceleration, which consist of a series of techniques such as sensitivity analysis based on strong duality and the so-called $C$-transform in the optimal transport field. Experimentally, we demonstrate that we can efficiently make two datasets similar in terms of partial Wasserstein divergence, including driving scene datasets.
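The 0.63 factor mentioned in the abstract matches the classical $1 - 1/e$ guarantee of greedy selection for monotone submodular maximization under a cardinality constraint. The following is a minimal sketch of that generic greedy loop, not the paper's quasi-greedy algorithm. The toy coverage objective, the `REGIONS` table, and all function names are assumptions for illustration; in the paper's setting each objective evaluation would instead involve the partial Wasserstein divergence.

```python
from typing import Callable, Hashable, Iterable, List

# Hypothetical toy data: each candidate "covers" a set of integer points.
REGIONS = {
    "a": {1, 2, 3},
    "b": {3, 4},
    "c": {4, 5, 6, 7},
    "d": {1, 7},
}


def coverage(chosen: Iterable[str]) -> float:
    """Toy monotone submodular objective: number of distinct points covered."""
    covered = set()
    for name in chosen:
        covered |= REGIONS[name]
    return float(len(covered))


def greedy_maximize(
    candidates: Iterable[Hashable],
    objective: Callable[[Iterable[Hashable]], float],
    budget: int,
) -> List[Hashable]:
    """Pick up to `budget` items, each time adding the largest marginal gain."""
    selected: List[Hashable] = []
    remaining = list(candidates)
    for _ in range(budget):
        base = objective(selected)
        best_gain, best_item = 0.0, None
        for item in remaining:
            # One full objective evaluation per candidate per round.
            gain = objective(selected + [item]) - base
            if gain > best_gain:
                best_gain, best_item = gain, item
        if best_item is None:  # no candidate improves the objective
            break
        selected.append(best_item)
        remaining.remove(best_item)
    return selected


picked = greedy_maximize(REGIONS.keys(), coverage, budget=2)
print(picked, coverage(picked))
```

The inner loop makes the cost visible: every round evaluates the objective once per remaining candidate, which is why an objective that needs a linear program per evaluation becomes the bottleneck.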

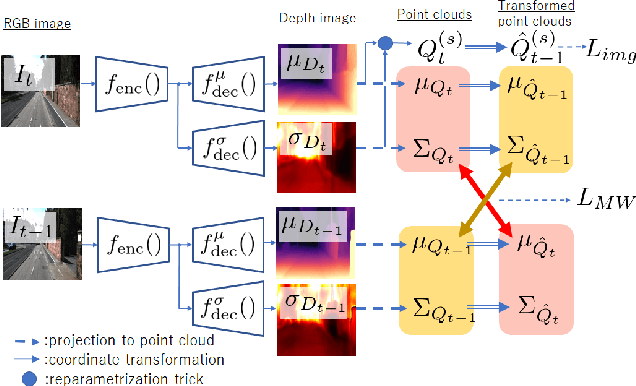

Variational Monocular Depth Estimation for Reliability Prediction

Nov 24, 2020

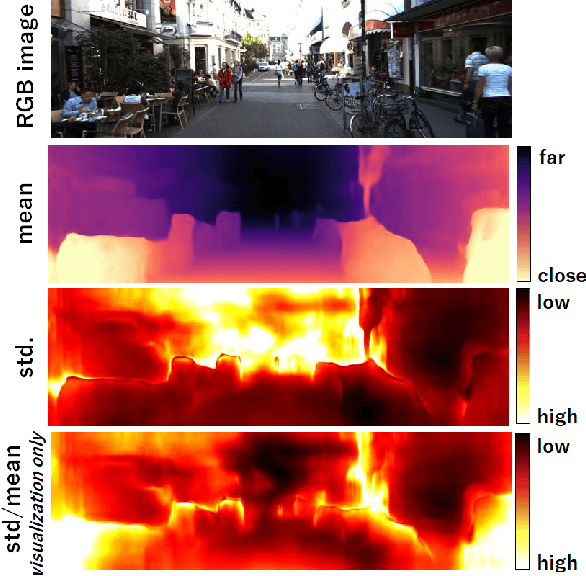

Abstract:Self-supervised learning for monocular depth estimation is widely investigated as an alternative to supervised learning approaches, which require large amounts of ground-truth data. Previous works have successfully improved the accuracy of depth estimation by modifying the model structure, adding objectives, and masking dynamic objects and occluded areas. However, when using such estimated depth images in applications such as autonomous vehicles and robots, we have to trust the estimated depth uniformly at every pixel position. This could lead to fatal errors in performing the tasks, because the estimated depth at some pixels may have larger errors than at others. In this paper, we theoretically formulate a variational model for monocular depth estimation to predict the reliability of the estimated depth image. Based on the results, we can exclude the estimated depths with low reliability or refine them for actual use. The effectiveness of the proposed method is quantitatively and qualitatively demonstrated using the KITTI benchmark and the Make3D dataset.

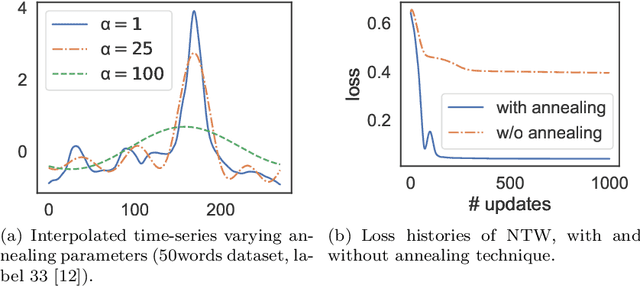

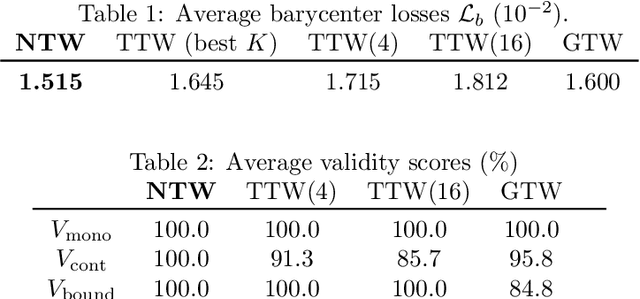

Neural Time Warping For Multiple Sequence Alignment

Jun 29, 2020

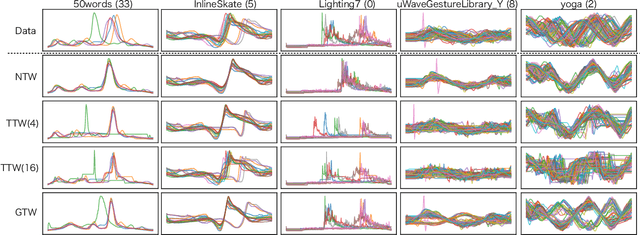

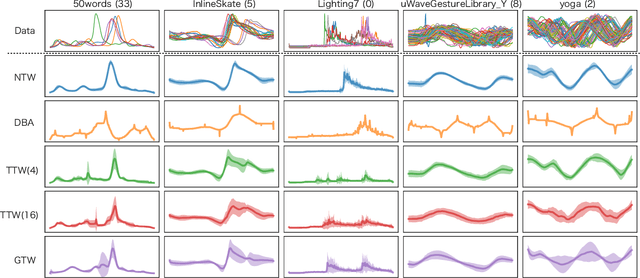

Abstract:Multiple sequence alignment (MSA) is a traditional and challenging task in time-series analysis. The MSA problem is formulated as a discrete optimization problem and is typically solved by dynamic programming. However, the computational complexity increases exponentially with respect to the number of input sequences. In this paper, we propose neural time warping (NTW), which relaxes the original MSA to a continuous optimization and obtains the alignments using a neural network. The solution obtained by NTW is guaranteed to be a feasible solution for the original discrete optimization problem under mild conditions. Our experimental results show that NTW successfully aligns a hundred time series and significantly outperforms existing methods for solving the MSA problem. In addition, we show a method for obtaining average time-series data as one application of NTW. Compared to the existing barycenters, the mean time-series data retains the features of the input time-series data.
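For context on the dynamic-programming baseline the abstract refers to, here is a minimal sketch of classical dynamic time warping for the two-sequence case. It is not NTW itself. The function name `dtw` and the absolute-difference local cost are assumptions for illustration. For two sequences the table has one cell per pair of positions; with more sequences it needs one cell per tuple of positions, which is where the exponential growth comes from.

```python
import math
from typing import List, Sequence, Tuple


def dtw(x: Sequence[float], y: Sequence[float]) -> Tuple[float, List[Tuple[int, int]]]:
    """Return the DTW distance and one optimal alignment path between x and y."""
    n, m = len(x), len(y)
    # cost[i][j] = best cumulative cost aligning x[:i] with y[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(x[i - 1] - y[j - 1])  # assumed local cost
            cost[i][j] = local + min(
                cost[i - 1][j - 1],  # advance both sequences
                cost[i - 1][j],      # advance x only
                cost[i][j - 1],      # advance y only
            )

    # Backtrack from the final cell to recover the index pairs.
    path: List[Tuple[int, int]] = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


distance, path = dtw([0.0, 1.0, 2.0], [0.0, 1.0, 1.0, 2.0])
print(distance, path)
```

In the usage line, the repeated `1.0` in the second sequence is absorbed by matching it twice against the single `1.0` in the first, so the two series align at zero cost.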

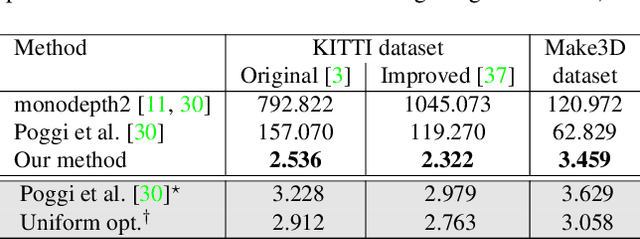

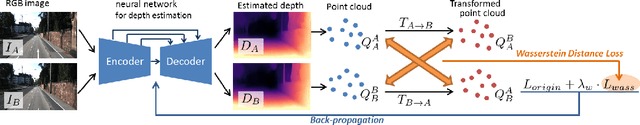

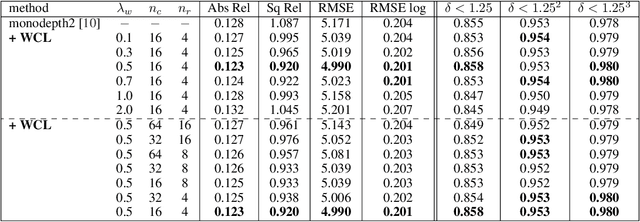

PLG-IN: Pluggable Geometric Consistency Loss with Wasserstein Distance in Monocular Depth Estimation

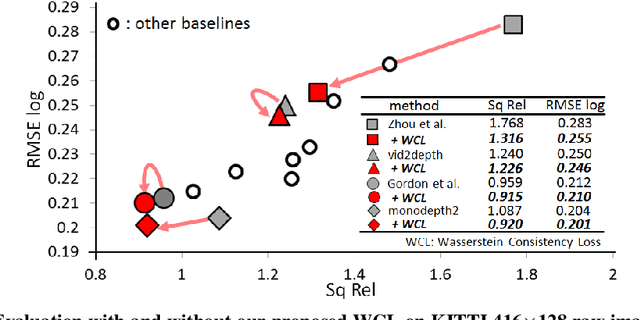

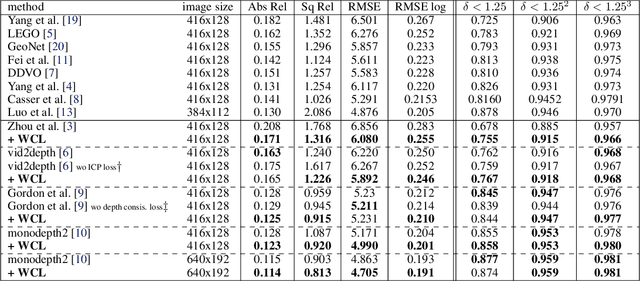

Jun 03, 2020

Abstract:We propose a novel objective that penalizes geometric inconsistencies to improve the performance of depth estimation from monocular camera images. Our objective is designed with the Wasserstein distance between two point clouds estimated from images with different camera poses. The Wasserstein distance can impose a soft and symmetric coupling between two point clouds, which preserves geometric constraints and leads to a differentiable objective. By adding our objective to the original ones of other state-of-the-art methods, we can effectively penalize geometric inconsistencies and obtain highly accurate depth estimation. Our proposed method is evaluated on the Eigen split of the KITTI raw dataset.
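To illustrate what a soft coupling between two point clouds can look like, the sketch below computes an entropy-regularized transport plan with Sinkhorn iterations, one common differentiable approximation of the Wasserstein distance. The abstract does not say which solver the paper uses, so this choice is an assumption, as are the function names, the squared Euclidean cost, the uniform point weights, and the values of `eps` and `iters`.

```python
import math
from typing import List, Sequence, Tuple

Point = Sequence[float]


def sq_dist(p: Point, q: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def sinkhorn_cost(
    xs: Sequence[Point], ys: Sequence[Point], eps: float = 1.0, iters: int = 200
) -> Tuple[float, List[List[float]]]:
    """Entropy-regularized transport between uniformly weighted point clouds."""
    n, m = len(xs), len(ys)
    a = [1.0 / n] * n  # uniform mass on the first cloud
    b = [1.0 / m] * m  # uniform mass on the second cloud
    cost = [[sq_dist(p, q) for q in ys] for p in xs]
    kernel = [[math.exp(-c / eps) for c in row] for row in cost]

    u = [1.0] * n
    v = [1.0] * m
    for _ in range(iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]

    # plan[i][j] is the (soft) amount of mass moved from xs[i] to ys[j].
    plan = [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]
    total = sum(plan[i][j] * cost[i][j] for i in range(n) for j in range(m))
    return total, plan


cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
shifted = [(x, y, z + 5.0) for x, y, z in cloud]

same_cost, _ = sinkhorn_cost(cloud, cloud)
far_cost, plan = sinkhorn_cost(cloud, shifted)
print(same_cost, far_cost)
```

Every entry of the plan is strictly positive, so each point is coupled to all points in the other cloud with varying weight rather than to a single nearest neighbor, and the plan for swapped inputs is simply the transpose.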
