Hiroaki Yoshida

Predicting unobserved climate time series data at distant areas via spatial correlation using reservoir computing

Jun 05, 2024

Abstract: Collecting time series data spatially distributed across many locations is often important for analyzing climate change and its impacts on ecosystems. However, comprehensive spatial data collection is not always feasible, so climate variables must be predicted at some locations. This study focuses on predicting climatic elements, specifically near-surface temperature and pressure, at a target location away from a data observation point. Our approach uses two prediction methods: reservoir computing (RC), a machine learning framework with low computational requirements, and vector autoregression (VAR), a statistical method for analyzing time series data. Our results show that prediction accuracy degrades with the distance between the observation and target locations. We quantitatively estimate the distance within which effective predictions are possible. We also find that, for climate data, geographical distance is associated with data correlation, and a strong data correlation significantly improves the prediction accuracy of RC. In particular, RC outperforms VAR in predicting highly correlated data within the predictive range. These findings suggest that machine learning-based methods can be used more effectively to predict climatic elements at remote locations by assessing their distance from the data observation point in advance. Our study on low-cost and accurate prediction of climate variables has significant value for climate change strategies.
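To make the RC idea concrete, here is a minimal echo state network sketch, assuming a single scalar series at the observation point is used to predict a single scalar series at the target location. Only the linear readout is trained (by ridge regression); the random reservoir stays fixed. The reservoir size, spectral radius, leak rate, ridge parameter, function names and the synthetic sine data are all hypothetical choices, not the configuration used in the study, and the VAR baseline is not shown.

```python
import numpy as np

def make_reservoir(n_res=100, spectral_radius=0.9, input_scale=0.5, seed=0):
    """Random recurrent and input weights; the recurrent matrix is rescaled to a chosen spectral radius."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(-1.0, 1.0, size=(n_res, n_res))
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-input_scale, input_scale, size=n_res)
    return w, w_in

def reservoir_states(u, w, w_in, leak=0.3):
    """Drive the fixed reservoir with the observed series u and record its state at every step."""
    x = np.zeros(w.shape[0])
    states = np.empty((len(u), w.shape[0]))
    for t, u_t in enumerate(u):
        x = (1.0 - leak) * x + leak * np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states

def with_bias(states):
    """Append a constant column so the readout can learn an offset."""
    return np.hstack([states, np.ones((len(states), 1))])

def fit_readout(states, y, ridge=1e-4):
    """Ridge-regression readout: the only trained part of the model."""
    X = with_bias(states)
    return np.linalg.solve(X.T @ X + ridge * np.eye(X.shape[1]), X.T @ y)

def run_demo(n=2000, washout=100, n_train=1500, seed=0):
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    u = np.sin(0.1 * t) + 0.05 * rng.standard_normal(n)        # observation point (synthetic)
    y = np.sin(0.1 * t - 0.5) + 0.05 * rng.standard_normal(n)  # target location (synthetic, correlated)
    w, w_in = make_reservoir(seed=seed)
    s = reservoir_states(u, w, w_in)
    w_out = fit_readout(s[washout:n_train], y[washout:n_train])  # skip the initial transient
    y_hat = with_bias(s[n_train:]) @ w_out                       # predict the held-out target series
    rmse = float(np.sqrt(np.mean((y_hat - y[n_train:]) ** 2)))
    corr = float(np.corrcoef(u, y)[0, 1])
    return corr, rmse, float(np.std(y[n_train:]))

corr, rmse, sd = run_demo()
print(f"observation/target correlation = {corr:.3f}, test RMSE = {rmse:.3f}, target std = {sd:.3f}")
```

Lowering the correlation between `u` and `y` in the synthetic data (for example, by adding more independent noise to `y`) is one way to probe, in this toy setting, the link between data correlation and prediction accuracy that the abstract reports.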

Deep generative model super-resolves spatially correlated multiregional climate data

Sep 26, 2022

Abstract: Super-resolving the coarse outputs of global climate simulations, termed downscaling, is crucial for political and social decisions about systems that require long-term climate change projections. Existing fast super-resolution techniques, however, have yet to preserve the spatially correlated nature of climatological data, which is particularly important when we address systems with spatial expanse, such as the development of transportation infrastructure. Herein, we show that adversarial network-based machine learning enables us to correctly reconstruct the inter-regional spatial correlations in downscaling at magnifications of up to fifty, while maintaining pixel-wise statistical consistency. Direct comparison with measured meteorological data of temperature and precipitation distributions reveals that integrating climatologically important physical information is essential for accurate downscaling, which prompts us to call our approach $\pi$SRGAN (Physics Informed Super-Resolution Generative Adversarial Network). The present method has potential application to inter-regionally consistent assessment of climate change impacts.
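The abstract distinguishes pixel-wise statistical consistency from inter-regional spatial correlation. The sketch below does not implement the GAN; it only illustrates, on hypothetical synthetic data, why the two checks differ: a candidate field can match every region's mean and standard deviation exactly while losing the correlation between regions. The function names and data layout (time along axis 0, regions along axis 1) are assumptions.

```python
import numpy as np

def interregional_corr(series):
    """Pearson correlation matrix between regions; `series` has shape (time, regions)."""
    return np.corrcoef(series, rowvar=False)

def corr_error(reference, candidate):
    """Largest absolute gap between the two inter-regional correlation matrices."""
    return float(np.max(np.abs(interregional_corr(reference) - interregional_corr(candidate))))

def pixelwise_error(reference, candidate):
    """Largest absolute gap in per-region mean or standard deviation."""
    dm = np.abs(reference.mean(axis=0) - candidate.mean(axis=0))
    ds = np.abs(reference.std(axis=0) - candidate.std(axis=0))
    return float(max(dm.max(), ds.max()))

rng = np.random.default_rng(0)
shared = rng.standard_normal((1000, 1))
reference = shared + 0.1 * rng.standard_normal((1000, 4))  # synthetic, strongly correlated regions
z = rng.standard_normal((1000, 4))                         # independent regions
# Rescale each independent column to the reference's per-region mean and std.
candidate = (z - z.mean(axis=0)) / z.std(axis=0) * reference.std(axis=0) + reference.mean(axis=0)
print(pixelwise_error(reference, candidate))  # near zero: per-region statistics match
print(corr_error(reference, candidate))       # large: spatial correlation is lost
```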

SapientML: Synthesizing Machine Learning Pipelines by Learning from Human-Written Solutions

Feb 18, 2022

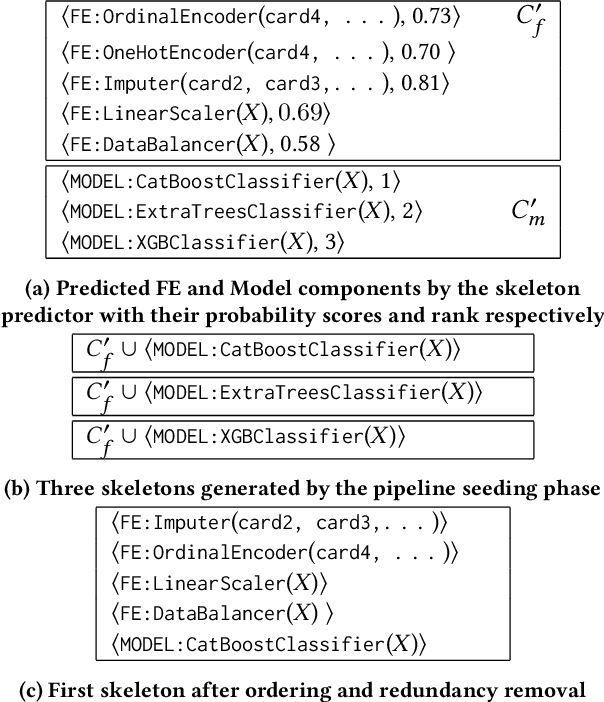

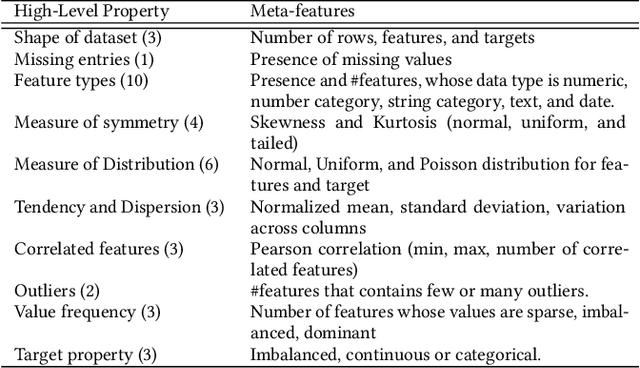

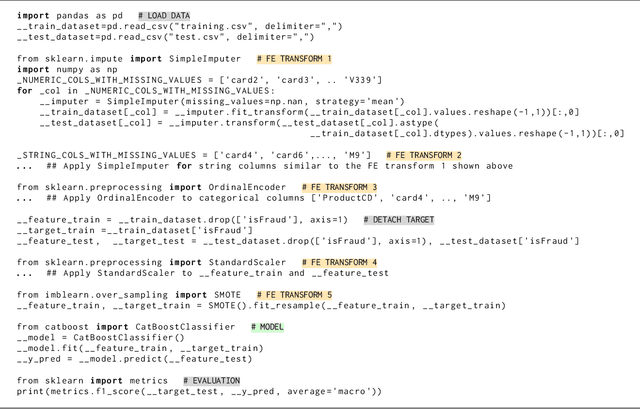

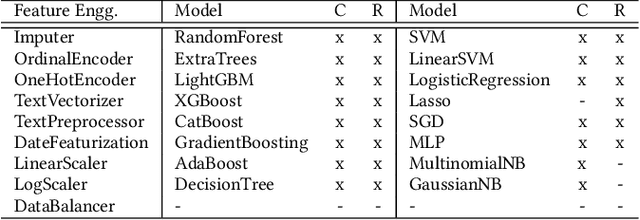

Abstract: Automatic machine learning, or AutoML, holds the promise of truly democratizing the use of machine learning (ML) by substantially automating the work of data scientists. However, the huge combinatorial search space of candidate pipelines means that current AutoML techniques generate sub-optimal pipelines, or none at all, especially on large, complex datasets. In this work we propose an AutoML technique, SapientML, that can learn from a corpus of existing datasets and their human-written pipelines, and efficiently generate a high-quality pipeline for a predictive task on a new dataset. To combat the search-space explosion of AutoML, SapientML employs a novel divide-and-conquer strategy realized as a three-stage program synthesis approach that reasons on successively smaller search spaces. The first stage uses a machine-learned model to predict a set of plausible ML components to constitute a pipeline. In the second stage, this set is refined into a small pool of viable concrete pipelines using syntactic constraints derived from the corpus and the machine-learned model. In the third stage, dynamically evaluating these few pipelines yields the best solution. We instantiate SapientML as part of a fully automated tool-chain that creates a cleaned, labeled learning corpus by mining Kaggle, learns from it, and uses the learned models to then synthesize pipelines for new predictive tasks. We have created a training corpus of 1094 pipelines spanning 170 datasets, and evaluated SapientML on a set of 41 benchmark datasets, including 10 new, large, real-world datasets from Kaggle, against 3 state-of-the-art AutoML tools and 2 baselines. Our evaluation shows that SapientML produces the best or comparable accuracy on 27 of the benchmarks, while the second-best tool fails to even produce a pipeline on 9 of the instances.
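The three-stage divide-and-conquer search can be illustrated with a toy sketch. Here a fixed table of hypothetical component scores stands in for the machine-learned model of stage 1, a single hand-written rule stands in for the corpus-derived syntactic constraints of stage 2, and a lookup table of hypothetical validation accuracies stands in for the dynamic evaluation of stage 3. None of these names, scores or rules come from SapientML itself; the point is only how each stage shrinks the search space (9 full combinations, 4 after stage 1, 3 after stage 2).

```python
from itertools import product

def stage1_plausible_components(scores, top_k):
    """Stage 1 (stand-in for the learned model): keep the top_k highest-scoring candidates per slot."""
    return {slot: sorted(cands, key=cands.get, reverse=True)[:top_k]
            for slot, cands in scores.items()}

def stage2_concrete_pipelines(selected, constraints):
    """Stage 2: enumerate combinations of selected components, dropping any that violate a constraint."""
    slots = list(selected)
    pipelines = []
    for combo in product(*(selected[s] for s in slots)):
        pipeline = dict(zip(slots, combo))
        if all(ok(pipeline) for ok in constraints):
            pipelines.append(pipeline)
    return pipelines

def stage3_best_pipeline(pipelines, evaluate):
    """Stage 3: evaluate the few remaining pipelines and return the best one."""
    return max(pipelines, key=evaluate)

# Hypothetical scores, constraint and validation accuracies, for illustration only.
SCORES = {
    "scaler": {"none": 0.6, "standard": 0.8, "minmax": 0.3},
    "model": {"random_forest": 0.9, "logistic_regression": 0.7, "svm": 0.2},
}
CONSTRAINTS = [lambda p: not (p["model"] == "random_forest" and p["scaler"] != "none")]
ACCURACY = {("standard", "logistic_regression"): 0.84,
            ("none", "random_forest"): 0.88,
            ("none", "logistic_regression"): 0.79}

selected = stage1_plausible_components(SCORES, top_k=2)
pipelines = stage2_concrete_pipelines(selected, CONSTRAINTS)
best = stage3_best_pipeline(pipelines, lambda p: ACCURACY[(p["scaler"], p["model"])])
print(len(pipelines), best)
```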

Reservoir Computing with Diverse Timescales for Prediction of Multiscale Dynamics

Aug 21, 2021

Abstract: Machine learning approaches have recently been leveraged as a substitute for, or an aid to, physical/mathematical modeling approaches to dynamical systems. To develop an efficient machine learning method dedicated to modeling and predicting multiscale dynamics, we propose a reservoir computing model with diverse timescales that uses a recurrent network of heterogeneous leaky integrator neurons. In prediction tasks with fast-slow chaotic dynamical systems that include a large gap between the timescales of their subsystems' dynamics, we demonstrate that the proposed model has a higher potential than the existing standard model and yields a performance comparable to the best performance of the standard model, even without optimizing the leak rate parameter. Our analysis reveals that model training appropriately and flexibly selects, from the reservoir dynamics, the timescales required to produce each component of the target dynamics.
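The core change relative to a standard reservoir can be sketched as replacing the single scalar leak rate with a per-neuron vector, so that neuron i integrates on a timescale of roughly 1/a_i. In the sketch below the log-uniform distribution of leak rates, its bounds and the function names are assumptions for illustration, not the distribution used in the paper. Passing a scalar `leak` recovers the standard model.

```python
import numpy as np

def heterogeneous_leak_rates(n, a_min=0.01, a_max=1.0, seed=0):
    """Log-uniform leak rates spanning slow (near a_min) to fast (near a_max) neurons; distribution assumed."""
    rng = np.random.default_rng(seed)
    return np.exp(rng.uniform(np.log(a_min), np.log(a_max), size=n))

def leaky_reservoir_states(u, w, w_in, leak):
    """Leaky-integrator update with a per-neuron leak-rate vector (a scalar leak gives the standard model)."""
    x = np.zeros(w.shape[0])
    states = np.empty((len(u), w.shape[0]))
    for t, u_t in enumerate(u):
        x = (1.0 - leak) * x + leak * np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states

rates = heterogeneous_leak_rates(200)

# Impulse response without recurrence: neuron i decays like (1 - a_i)^t, i.e. timescale ~ 1 / a_i.
leak = np.array([0.01, 0.5])
u = np.zeros(50)
u[0] = 1.0
states = leaky_reservoir_states(u, np.zeros((2, 2)), np.ones(2), leak)
print(states[-1])  # the slow neuron (a = 0.01) retains far more of the impulse than the fast one
```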

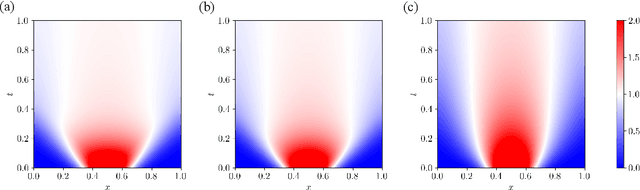

Optimal Transport-based Coverage Control for Swarm Robot Systems: Generalization of the Voronoi Tessellation-based Method

Nov 16, 2020

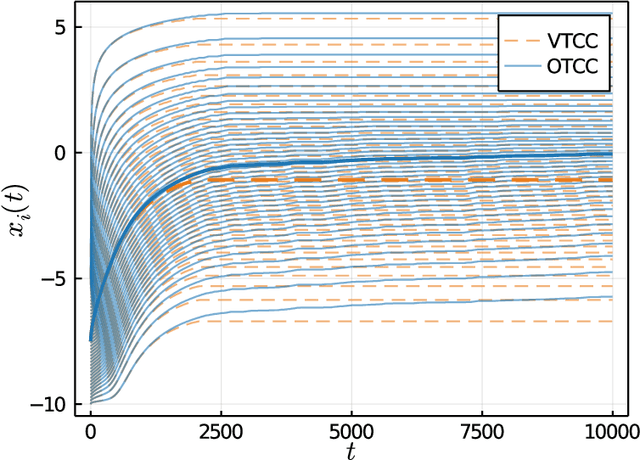

Abstract: Swarm robot systems, which consist of many cooperating mobile robots, have attracted attention for their environmental adaptability and fault tolerance. One of the most important tasks for such systems is coverage control, in which robots autonomously deploy to approximate a given spatial distribution. In this study, we formulate a coverage control paradigm using the concept of optimal transport and propose a novel control technique, which we term the optimal transport-based coverage control (OTCC) method. The proposed OTCC, derived via the gradient flow of the cost function in the Kantorovich dual problem, is shown to cover a widely used existing control method as a special case. We also perform a Lyapunov stability analysis of the controlled system, and provide numerical calculations showing that the OTCC reproduces target distributions with better performance than the existing control method.
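One way to see how an optimal-transport formulation generalizes Voronoi-based coverage is through semi-discrete optimal transport with squared-distance cost. Each robot i gets a dual weight psi_i, and each point x of the target distribution is assigned to the robot minimizing |x - p_i|^2 - psi_i (a power, or Laguerre, cell). With all psi_i = 0 these cells are exactly the Voronoi cells; gradient ascent on the Kantorovich dual adjusts psi until each cell holds its target share of mass. The sketch below is an illustration of that idea under stated assumptions (a sampled uniform target on the unit square, equal shares, a centroid-seeking motion rule, hypothetical function names and step sizes); it is not the OTCC control law derived in the paper.

```python
import numpy as np

def assign_cells(samples, robots, psi):
    """Assign each sample to argmin_i |x - p_i|^2 - psi_i (power cells); psi = 0 gives Voronoi cells."""
    d2 = ((samples[:, None, :] - robots[None, :, :]) ** 2).sum(axis=2)
    return np.argmin(d2 - psi[None, :], axis=1)

def cell_masses(samples, weights, robots, psi):
    """Target-distribution mass captured by each robot's cell."""
    labels = assign_cells(samples, robots, psi)
    return np.bincount(labels, weights=weights, minlength=len(robots))

def solve_dual(samples, weights, robots, target_mass, step=0.05, iters=500):
    """Gradient ascent on the semi-discrete Kantorovich dual; its gradient is target_mass - cell mass."""
    psi = np.zeros(len(robots))
    for _ in range(iters):
        psi += step * (target_mass - cell_masses(samples, weights, robots, psi))
    return psi

def coverage_step(samples, weights, robots, psi, gain=0.5):
    """Move each robot a fraction `gain` of the way toward the weighted centroid of its cell."""
    labels = assign_cells(samples, robots, psi)
    new = robots.copy()
    for i in range(len(robots)):
        m = labels == i
        if weights[m].sum() > 0:
            centroid = weights[m] @ samples[m] / weights[m].sum()
            new[i] += gain * (centroid - robots[i])
    return new

g = (np.arange(30) + 0.5) / 30
samples = np.array([(a, b) for a in g for b in g])    # grid over the unit square
weights = np.full(len(samples), 1.0 / len(samples))  # uniform target distribution (assumed)
robots = np.array([[0.1, 0.1], [0.2, 0.1], [0.9, 0.9]])
target = np.full(len(robots), 1.0 / len(robots))     # equal share per robot (assumed)
psi = solve_dual(samples, weights, robots, target)
mass_voronoi = cell_masses(samples, weights, robots, np.zeros(len(robots)))
mass_power = cell_masses(samples, weights, robots, psi)
print(mass_voronoi)  # Voronoi cells: unequal shares
print(mass_power)    # power cells: close to 1/3 each
robots_next = coverage_step(samples, weights, robots, psi)
```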

Optimal Coverage Control for Swarm Robot Systems using a Mean Field Game

Apr 16, 2020

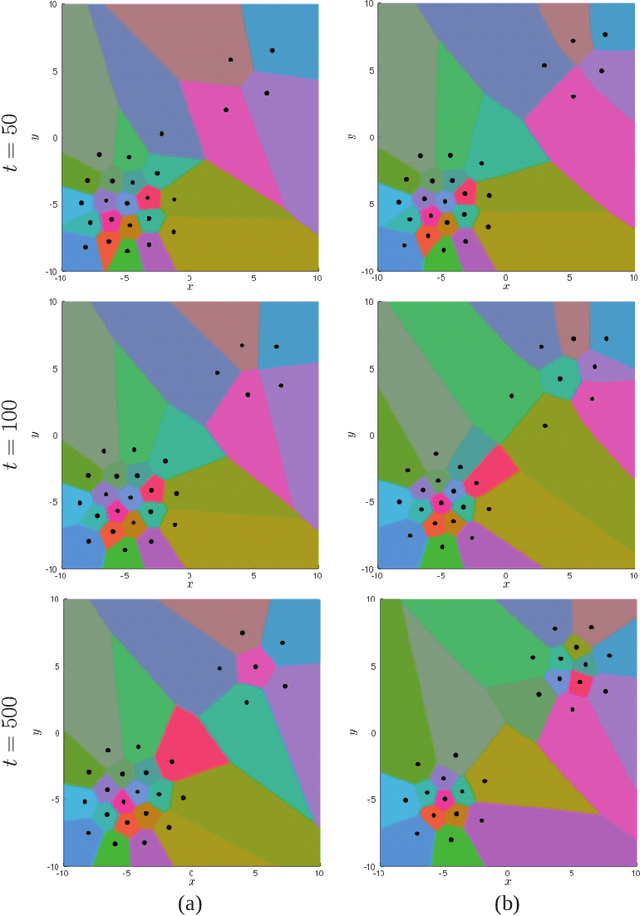

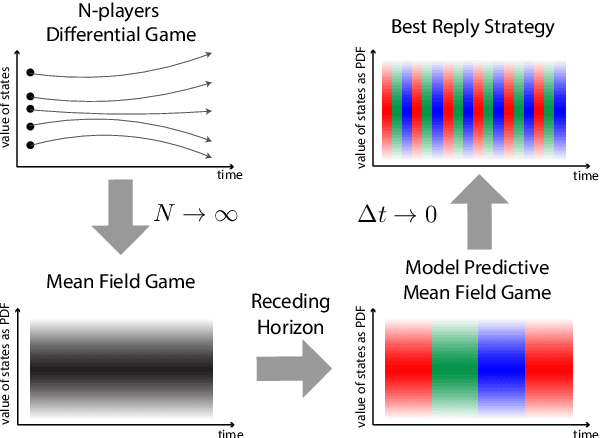

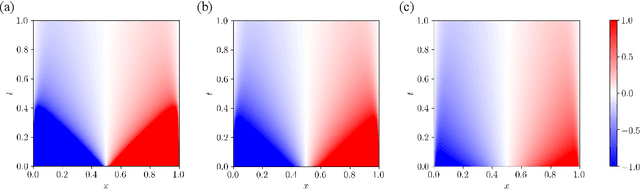

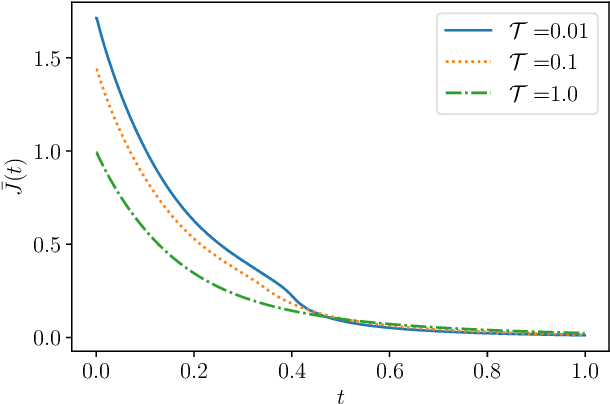

Abstract: Swarm robot systems, in which many robots cooperate to perform one task, have attracted a great deal of attention in recent years. In controlling these systems, the trade-off between global optimality and scalability is a major challenge. In the present paper, we focus on the mean field game (MFG) as a possible control method for swarm robot systems. The MFG is a framework for deducing, from the microscopic robot dynamics, a macroscopic model that describes robot density profiles. For a coverage control problem aimed at uniformly distributing robots over space, we extend the original MFG to present two methods for optimally controlling swarm robots: the model predictive mean field game (MP-MFG) and the best reply strategy (BRS). Importantly, the MP-MFG converges to the BRS in the limit as the prediction time goes to zero, which our numerical experiments also confirm. In addition, we show numerically that the optimal input is obtained with both the MP-MFG and the BRS, and that widening the prediction time of the MP-MFG improves control performance.
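At the macroscopic level, robot density rho evolves by the continuity equation d(rho)/dt + d(rho v)/dx = 0. As a toy illustration of a best-reply-type feedback (no prediction horizon), assume each robot moves down the gradient of an instantaneous cost that penalizes the squared deviation of rho from the uniform density, which gives v = -kappa d(rho)/dx. This cost, the 1D periodic domain, the gain kappa and the function name are assumptions; the sketch is not the MP-MFG or BRS formulation of the paper. A conservative finite-volume step keeps total mass fixed while the density flattens.

```python
import numpy as np

def best_reply_step(rho, dx, dt, kappa=1.0):
    """Explicit finite-volume step of d(rho)/dt + d(rho*v)/dx = 0 with v = -kappa * d(rho)/dx (periodic)."""
    rho_right = np.roll(rho, -1)                      # rho_{i+1}
    v_face = -kappa * (rho_right - rho) / dx          # velocity at interface i+1/2
    flux = 0.5 * (rho + rho_right) * v_face           # mass flux at interface i+1/2
    return rho - dt / dx * (flux - np.roll(flux, 1))  # flux(i+1/2) - flux(i-1/2)

n = 100
dx = 1.0 / n
x = (np.arange(n) + 0.5) * dx
rho0 = 1.0 + 0.5 * np.sin(2 * np.pi * x)  # non-uniform initial robot density
dt = 0.2 * dx**2 / rho0.max()             # conservative explicit-step bound for kappa = 1
rho = rho0.copy()
for _ in range(2000):
    rho = best_reply_step(rho, dx, dt)
print(rho0.sum() * dx, rho.sum() * dx)  # total mass is conserved
print(rho0.std(), rho.std())            # deviation from the uniform density shrinks
```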
