Sarvapali D. Ramchurn

Distributed Nash Equilibrium Seeking Algorithm in Aggregative Games for Heterogeneous Multi-Robot Systems

Sep 19, 2025

Abstract:This paper develops a distributed Nash equilibrium seeking algorithm for heterogeneous multi-robot systems. The algorithm utilises distributed optimisation and output control to achieve the Nash equilibrium by leveraging information shared among neighbouring robots. Specifically, we propose a distributed optimisation algorithm that calculates the Nash equilibrium as a tailored reference for each robot, and we design output control laws that allow heterogeneous multi-robot systems to track it in an aggregative game. We prove that our algorithm is guaranteed to converge and result in efficient outcomes. The effectiveness of our approach is demonstrated through numerical simulations and empirical testing with physical robots.

VLM-Guided Visual Place Recognition for Planet-Scale Geo-Localization

Jul 23, 2025

Abstract:Geo-localization from a single image at planet scale (essentially an advanced or extreme version of the kidnapped robot problem) is a fundamental and challenging task in applications such as navigation, autonomous driving and disaster response due to the vast diversity of locations, environmental conditions, and scene variations. Traditional retrieval-based methods for geo-localization struggle with scalability and perceptual aliasing, while classification-based approaches lack generalization and require extensive training data. Recent advances in vision-language models (VLMs) offer a promising alternative by leveraging contextual understanding and reasoning. However, while VLMs achieve high accuracy, they are often prone to hallucinations and lack interpretability, making them unreliable as standalone solutions. In this work, we propose a novel hybrid geo-localization framework that combines the strengths of VLMs with retrieval-based visual place recognition (VPR) methods. Our approach first leverages a VLM to generate a prior, effectively guiding and constraining the retrieval search space. We then employ a retrieval step, followed by a re-ranking mechanism that selects the most geographically plausible matches based on feature similarity and proximity to the initially estimated coordinates. We evaluate our approach on multiple geo-localization benchmarks and show that it consistently outperforms prior state-of-the-art methods, particularly at street (up to 4.51%) and city level (up to 13.52%). Our results demonstrate that VLM-generated geographic priors in combination with VPR lead to scalable, robust, and accurate geo-localization systems.
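The re-ranking step described in this abstract, which combines feature similarity with proximity to the VLM-estimated coordinates, could be sketched roughly as follows. This is only an illustration under stated assumptions: the function names `haversine_km` and `rerank`, the exponential proximity term, and the parameters `radius_km` and `alpha` are hypothetical choices made here, not the scoring rule used in the paper.

```python
import math


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def rerank(candidates, prior, radius_km=50.0, alpha=0.5):
    """Re-rank retrieved candidates around a coarse location prior.

    candidates: list of (ref_id, similarity in [0, 1], (lat, lon)).
    prior: (lat, lon) estimated beforehand, e.g. by a VLM.
    radius_km and alpha are hypothetical tuning knobs (assumptions).
    """
    def score(candidate):
        _, similarity, coords = candidate
        # Proximity decays smoothly from 1 (at the prior) towards 0 (far away).
        proximity = math.exp(-haversine_km(coords, prior) / radius_km)
        return alpha * similarity + (1 - alpha) * proximity

    return sorted(candidates, key=score, reverse=True)


# Usage: a slightly weaker visual match near the prior outranks a stronger one far away.
prior = (48.8566, 2.3522)  # illustrative prior near Paris
candidates = [
    ("london_ref", 0.85, (51.5074, -0.1278)),
    ("paris_ref", 0.80, (48.8600, 2.3500)),
]
best = rerank(candidates, prior)[0]
```

A weighted sum keeps the two signals easy to inspect separately; a real system might instead filter candidates by distance first and only then compare descriptors.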

Safe and Socially Aware Multi-Robot Coordination in Multi-Human Social Care Settings

Jul 03, 2025

Abstract:This research investigates strategies for multi-robot coordination in multi-human environments. It proposes a multi-objective, learning-based coordination approach to address the problems of path planning, navigation, task scheduling, task allocation, and human-robot interaction in multi-human multi-robot (MHMR) settings.

TAT-VPR: Ternary Adaptive Transformer for Dynamic and Efficient Visual Place Recognition

May 22, 2025

Abstract:TAT-VPR is a ternary-quantized transformer that brings dynamic accuracy-efficiency trade-offs to visual SLAM loop-closure. By fusing ternary weights with a learned activation-sparsity gate, the model can reduce computation by up to 40% at run-time without degrading performance (Recall@1). The proposed two-stage distillation pipeline preserves descriptor quality, letting it run on micro-UAV and embedded SLAM stacks while matching state-of-the-art localization accuracy.

TeTRA-VPR: A Ternary Transformer Approach for Compact Visual Place Recognition

Mar 04, 2025

Abstract:Visual Place Recognition (VPR) localizes a query image by matching it against a database of geo-tagged reference images, making it essential for navigation and mapping in robotics. Although Vision Transformer (ViT) solutions deliver high accuracy, their large models often exceed the memory and compute budgets of resource-constrained platforms such as drones and mobile robots. To address this issue, we propose TeTRA, a ternary transformer approach that progressively quantizes the ViT backbone to 2-bit precision and binarizes its final embedding layer, offering substantial reductions in model size and latency. A carefully designed progressive distillation strategy preserves the representational power of a full-precision teacher, allowing TeTRA to retain or even surpass the accuracy of uncompressed convolutional counterparts, despite using fewer resources. Experiments on standard VPR benchmarks demonstrate that TeTRA reduces memory consumption by up to 69% compared to efficient baselines, while lowering inference latency by 35%, with either no loss or a slight improvement in recall@1. These gains enable high-accuracy VPR on power-constrained, memory-limited robotic platforms, making TeTRA an appealing solution for real-world deployment.
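To make the idea of ternary weights concrete, the sketch below quantizes a list of weights to the values {-1, 0, +1} plus one shared scale. It uses a generic threshold heuristic (a fixed fraction of the mean absolute weight), which is an assumption made here for illustration; it is not TeTRA's progressive quantization or distillation procedure, and the name `ternarize` and the parameter `threshold_ratio` are hypothetical.

```python
def ternarize(weights, threshold_ratio=0.7):
    """Map full-precision weights to codes in {-1, 0, +1} and one shared scale.

    threshold_ratio is a hypothetical heuristic (an assumption): weights whose
    magnitude is at most threshold_ratio * mean(|w|) are set to zero.
    """
    if not weights:
        raise ValueError("weights must be non-empty")
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    delta = threshold_ratio * mean_abs
    codes = [0 if abs(w) <= delta else (1 if w > 0 else -1) for w in weights]
    # The scale is the mean magnitude of the weights that survived the threshold.
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    scale = sum(kept) / len(kept) if kept else 0.0
    return codes, scale


# Usage: each code needs at most 2 bits; the approximation is scale * code.
codes, scale = ternarize([0.9, -0.8, 0.05, -0.1, 0.6])
approx = [scale * c for c in codes]
```

Storing only the codes and a single scale per tensor is what makes the memory savings possible; how much accuracy survives depends on the training procedure, which this sketch does not cover.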

Position: Towards a Responsible LLM-empowered Multi-Agent Systems

Feb 03, 2025

Abstract:The rise of Agent AI and Large Language Model-powered Multi-Agent Systems (LLM-MAS) has underscored the need for responsible and dependable system operation. Tools like LangChain and Retrieval-Augmented Generation have expanded LLM capabilities, enabling deeper integration into MAS through enhanced knowledge retrieval and reasoning. However, these advancements introduce critical challenges: LLM agents exhibit inherent unpredictability, and uncertainties in their outputs can compound across interactions, threatening system stability. To address these risks, a human-centered design approach with active dynamic moderation is essential. Such an approach enhances traditional passive oversight by facilitating coherent inter-agent communication and effective system governance, allowing MAS to achieve desired outcomes more efficiently.

Image-based Geo-localization for Robotics: Are Black-box Vision-Language Models there yet?

Jan 28, 2025

Abstract:The advances in Vision-Language models (VLMs) offer exciting opportunities for robotic applications involving image geo-localization, the problem of identifying the geo-coordinates of a place based on visual data only. Recent research has focused on using a VLM as an embedding extractor for geo-localization; however, the most sophisticated VLMs may only be available as black boxes that are accessible through an API, and come with a number of limitations: there is no access to training data, model features and gradients; retraining is not possible; the number of predictions may be limited by the API; training on model outputs is often prohibited; and queries are open-ended. The utilization of a VLM as a stand-alone, zero-shot geo-localization system using a single text-based prompt is largely unexplored. To bridge this gap, this paper undertakes the first systematic study, to the best of our knowledge, to investigate the potential of some of the state-of-the-art VLMs as stand-alone, zero-shot geo-localization systems in a black-box setting with realistic constraints. We consider three main scenarios for this thorough investigation: a) fixed text-based prompt; b) semantically-equivalent text-based prompts; and c) semantically-equivalent query images. We also take into account the auto-regressive and probabilistic generation process of the VLMs when investigating their utility for the geo-localization task by using model consistency as a metric in addition to traditional accuracy. Our work provides new insights into the capabilities of different VLMs for the above-mentioned scenarios.

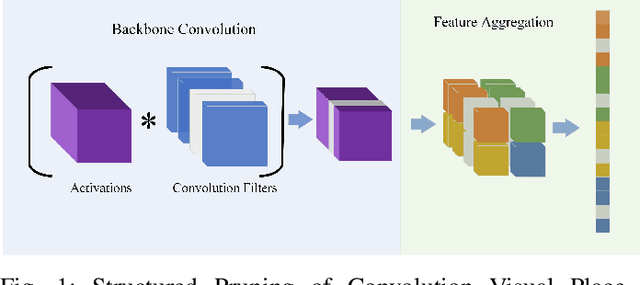

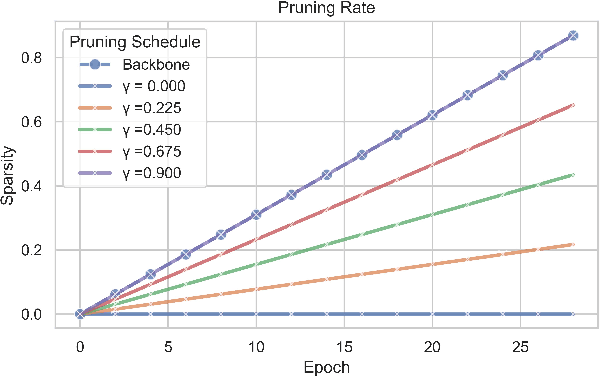

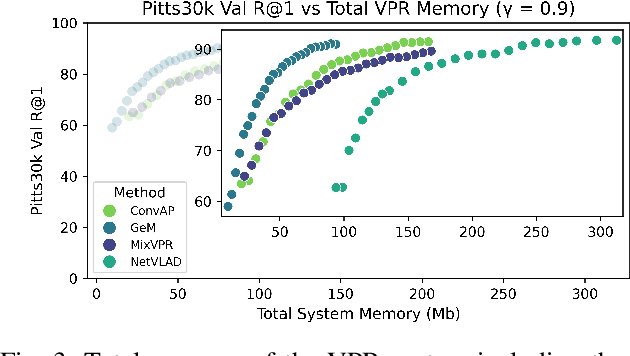

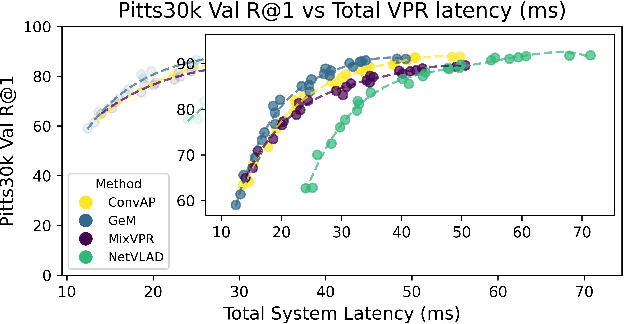

Structured Pruning for Efficient Visual Place Recognition

Sep 12, 2024

Abstract:Visual Place Recognition (VPR) is fundamental for the global re-localization of robots and devices, enabling them to recognize previously visited locations based on visual inputs. This capability is crucial for maintaining accurate mapping and localization over large areas. Given that VPR methods need to operate in real-time on embedded systems, it is critical to optimize these systems for minimal resource consumption. While the most efficient VPR approaches employ standard convolutional backbones with fixed descriptor dimensions, these often lead to redundancy in the embedding space as well as in the network architecture. Our work introduces a novel structured pruning method that not only streamlines common VPR architectures but also strategically removes redundancies within the feature embedding space. This dual focus significantly enhances the efficiency of the system, reducing both map and model memory requirements and decreasing feature extraction and retrieval latencies. Our approach reduces memory usage and latency by 21% and 16%, respectively, across models, while lowering recall@1 accuracy by less than 1%. This significant improvement enhances real-time applications on edge devices with negligible accuracy loss.
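Structured pruning removes whole units (for example, convolutional channels or descriptor dimensions) rather than individual weights. The sketch below shows one common, generic criterion: ranking channels by the L1 norm of their weights and keeping the largest. The abstract does not state which criterion the paper uses, so the magnitude-based ranking, the function name `prune_channels`, and the parameter `keep_ratio` are all assumptions made for illustration.

```python
def prune_channels(filters, keep_ratio=0.75):
    """Keep the channels with the largest L1 norm (a generic criterion, assumed here).

    filters: list of per-channel weight lists.
    Returns (kept_indices, pruned_filters), with indices in original order.
    """
    if not filters:
        raise ValueError("filters must be non-empty")
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(filters) * keep_ratio))
    norms = [sum(abs(w) for w in channel) for channel in filters]
    # Rank channel indices from most to least important by L1 norm.
    ranked = sorted(range(len(filters)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:n_keep])
    return kept, [filters[i] for i in kept]


# Usage: drop the two weakest of four channels.
filters = [[0.5, -0.5], [0.01, 0.02], [1.0, 1.0], [0.1, -0.2]]
kept, pruned = prune_channels(filters, keep_ratio=0.5)
```

Because entire channels disappear, the resulting layer is simply smaller and needs no sparse-matrix support at inference time, which is what makes this style of pruning attractive for embedded hardware.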

Mapping Safe Zones for Co-located Human-UAV Interaction

Sep 03, 2024

Abstract:Recent advances in robotics bring us closer to the reality of living, co-habiting, and sharing personal spaces with robots. However, it is not clear how close a co-located robot can be to a human in a shared environment without making the human uncomfortable or anxious. This research aims to map safe and comfortable zones for co-located aerial robots. The objective is to identify the distances at which a drone causes discomfort to a co-located human and to create a map showing no-fly, moderate-fly, and safe-fly zones. We recruited a total of 18 participants and conducted two indoor laboratory experiments, one with a single drone and the other with two drones. Our results show that multiple drones cause more discomfort when close to a co-located human than a single drone. In the single-drone experiments, we observed that distances below 200 cm caused discomfort, the moderate-fly zone was 200-300 cm, and the safe-fly zone was any distance greater than 300 cm. The safe zones were pushed further away by 100 cm for the multiple-drone experiments. In this paper, we present the preliminary findings on safe-fly zones for multiple drones. Further work would investigate the impact of a higher number of aerial robots, the speed of approach, direction of travel, and noise level on co-located humans, and autonomously develop 3D models of trust zones and safe zones for co-located aerial swarms.
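The zone boundaries reported in this abstract can be written down as a small lookup, shown below as a minimal sketch. The thresholds (200 cm, 300 cm, and the 100 cm shift) come from the abstract; the function name `classify_zone`, the treatment of exact boundary values, and applying the 100 cm shift to any count above one drone (the study itself tested two) are assumptions made here.

```python
def classify_zone(distance_cm, num_drones=1):
    """Classify a human-drone distance into the zones reported in the abstract.

    Assumption: the 100 cm shift reported for the two-drone experiment is
    applied whenever num_drones > 1. Boundary handling is also an assumption.
    """
    if distance_cm < 0:
        raise ValueError("distance_cm must be non-negative")
    offset = 100 if num_drones > 1 else 0
    if distance_cm < 200 + offset:
        return "no-fly"
    if distance_cm <= 300 + offset:
        return "moderate-fly"
    return "safe-fly"


# Usage: the same distance falls into a stricter zone when two drones are present.
single = classify_zone(250)               # moderate-fly
double = classify_zone(250, num_drones=2)  # no-fly
```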

Trade-offs of Dynamic Control Structure in Human-swarm Systems

Aug 05, 2024

Abstract:Swarm robotics is the study of simple robots that exhibit complex behaviour only by interacting locally with other robots and their environment. Control in swarm robotics is mainly distributed, whereas centralised control is widely used in other fields of robotics. Centralised and decentralised control strategies each pose a unique set of benefits and drawbacks for the control of multi-robot systems. While decentralised systems are more scalable and resilient, they are less efficient than centralised systems and they lead to excessive data transmissions to human operators, causing cognitive overload. We examine the trade-offs of each of these approaches in a human-swarm system performing an environmental monitoring task and propose a flexible hybrid approach, which combines elements of hierarchical and decentralised systems. We find that a flexible hybrid system can outperform a centralised system (in our environmental monitoring task by 19.2%) while reducing the number of messages sent to a human operator (here by 23.1%). We conclude that establishing centralisation for a system is not always optimal for performance and that utilising aspects of centralised and decentralised systems can keep the swarm from hindering its performance.
