Sarthak Arora

Examining the Mental Health Impact of Misinformation on Social Media Using a Hybrid Transformer-Based Approach

Mar 04, 2025

Abstract: Social media has significantly reshaped interpersonal communication, fostering connectivity while also enabling the proliferation of misinformation. The unchecked spread of false narratives has profound effects on mental health, contributing to increased stress, anxiety, and misinformation-driven paranoia. This study presents a hybrid transformer-based approach using a RoBERTa-LSTM classifier to detect misinformation, assess its impact on mental health, and classify disorders linked to misinformation exposure. The proposed models achieve accuracies of 98.4%, 87.8%, and 77.3% on misinformation detection, mental-health impact assessment, and disorder classification, respectively. Furthermore, Pearson's Chi-Squared Test for Independence (p-value = 0.003871) indicates a statistically significant association between misinformation and deteriorating mental well-being. This study underscores the urgent need for better misinformation management strategies to mitigate its psychological repercussions. Future research could explore broader datasets incorporating linguistic, demographic, and cultural variables to deepen the understanding of misinformation-induced mental health distress.
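
The abstract reports a p-value from Pearson's Chi-Squared Test for Independence. As a rough illustration of how that statistic is formed, the sketch below computes it for a 2 x 2 contingency table in plain Python. The counts and the exposed/distressed categories are hypothetical and are not the study's data, and the p-value helper covers only the one-degree-of-freedom case, where a chi-squared variable is the square of a standard normal.

```python
import math

def chi_squared_independence(table):
    """Pearson's chi-squared statistic for an r x c table of observed counts.

    Under the null hypothesis of independence, the expected count in cell (i, j)
    is row_total[i] * col_total[j] / grand_total.
    Returns (statistic, degrees_of_freedom).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

def p_value_df1(stat):
    """Upper-tail p-value for a chi-squared statistic with 1 degree of freedom.

    Chi-squared(1) is the square of a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2.0))

# Hypothetical counts for illustration only (not the study's data):
# rows = exposed / not exposed to misinformation,
# columns = distress indicated / no distress indicated.
table = [[30, 20],
         [15, 35]]
stat, dof = chi_squared_independence(table)
print(f"chi2 = {stat:.3f}, dof = {dof}, p = {p_value_df1(stat):.5f}")
```

This version applies no continuity correction. `scipy.stats.chi2_contingency`, for example, applies Yates' correction to 2 x 2 tables by default, so its statistic would come out smaller on the same counts.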

Was that Sarcasm?: A Literature Survey on Sarcasm Detection

Nov 30, 2024

Abstract: Sarcasm is hard for human beings to interpret. Given its complex nature, the ability to interpret sarcasm is often considered a sign of intelligence. Hence, sarcasm detection remains a difficult problem for computers within Natural Language Processing. This literature survey examines different aspects of sarcasm detection to build an understanding of the underlying problems faced during detection, the approaches used to solve them, and the different forms of datasets available for sarcasm detection.

Using 3-D LiDAR Data for Safe Physical Human-Robot Interaction

Jun 02, 2024

Abstract: This paper explores the use of 3-D lidar in a physical Human-Robot Interaction (pHRI) scenario. To this end, experiments were conducted to mimic a modern shop-floor environment. Data were collected from seventeen participants while they performed predetermined tasks in a workspace shared with the robot. To demonstrate an end-to-end case, a perception pipeline was developed that leverages reflectivity, signal, near-infrared, and point-cloud data from a 3-D lidar. These data are then used to perform safety-based control while satisfying the speed and separation monitoring (SSM) criteria. To support the perception pipeline, a state-of-the-art object detection network was fine-tuned by transfer learning. Results and an analysis of both the perception pipeline and the safety-based controller are provided. Additionally, the system is compared with previous work.
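
SSM criteria are commonly expressed through the protective separation distance of ISO/TS 15066. The sketch below uses its constant-speed simplification, S_p = v_h(T_r + T_s) + v_r T_r + S_s + C + Z_d + Z_r, and checks a measured minimum human-robot distance against it. The class, function names, and numeric values are illustrative assumptions rather than the paper's configuration, and the robot stopping distance S_s is held constant even though in practice it depends on robot speed.

```python
from dataclasses import dataclass

@dataclass
class SsmParams:
    """Hypothetical SSM parameters; the values used below are illustrative only."""
    v_human: float      # human speed toward the robot, v_h [m/s]
    t_react: float      # system reaction time, T_r [s]
    t_stop: float       # robot stopping time, T_s [s]
    s_stop: float       # robot stopping distance, S_s [m] (held constant here)
    c_intrusion: float  # intrusion distance, C [m]
    z_human: float      # human position uncertainty, Z_d [m]
    z_robot: float      # robot position uncertainty, Z_r [m]

def protective_separation_distance(v_robot: float, p: SsmParams) -> float:
    """Constant-speed form of the ISO/TS 15066 protective separation distance S_p."""
    s_h = p.v_human * (p.t_react + p.t_stop)  # distance the human covers until the robot stops
    s_r = v_robot * p.t_react                 # distance the robot covers before it reacts
    return s_h + s_r + p.s_stop + p.c_intrusion + p.z_human + p.z_robot

def ssm_satisfied(min_distance: float, v_robot: float, p: SsmParams) -> bool:
    """True when the measured minimum human-robot distance is at least S_p."""
    return min_distance >= protective_separation_distance(v_robot, p)

params = SsmParams(v_human=1.6, t_react=0.1, t_stop=0.3, s_stop=0.15,
                   c_intrusion=0.1, z_human=0.05, z_robot=0.02)
print(protective_separation_distance(0.5, params))
print(ssm_satisfied(1.2, 0.5, params), ssm_satisfied(0.9, 0.5, params))
```

A safety controller built on this check would typically slow or stop the robot when it fails; that speed-modulation policy is not shown here.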

Queer In AI: A Case Study in Community-Led Participatory AI

Apr 10, 2023

Abstract: We present Queer in AI as a case study for community-led participatory design in AI. We examine how participatory design and intersectional tenets started and shaped this community's programs over the years. We discuss different challenges that emerged in the process, look at ways this organization has fallen short of operationalizing participatory and intersectional principles, and then assess the organization's impact. Queer in AI provides important lessons and insights for practitioners and theorists of participatory methods broadly through its rejection of hierarchy in favor of decentralization, success at building aid and programs by and for the queer community, and effort to change actors and institutions outside of the queer community. Finally, we theorize how communities like Queer in AI contribute to the participatory design in AI more broadly by fostering cultures of participation in AI, welcoming and empowering marginalized participants, critiquing poor or exploitative participatory practices, and bringing participation to institutions outside of individual research projects. Queer in AI's work serves as a case study of grassroots activism and participatory methods within AI, demonstrating the potential of community-led participatory methods and intersectional praxis, while also providing challenges, case studies, and nuanced insights to researchers developing and using participatory methods.

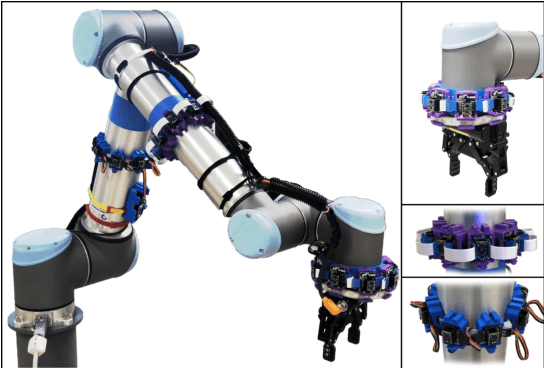

Human Position Detection & Tracking with On-robot Time-of-Flight Laser Ranging Sensors

Sep 21, 2019

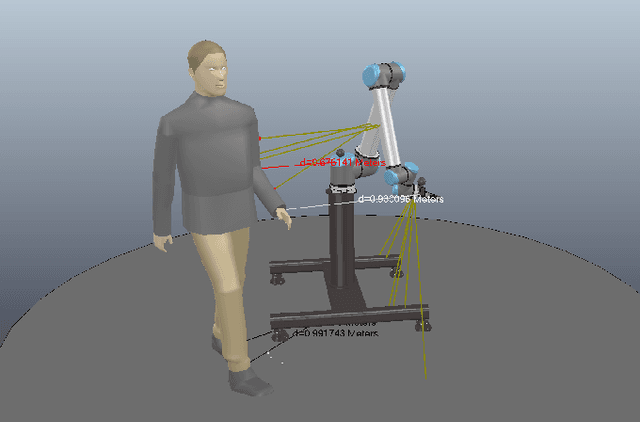

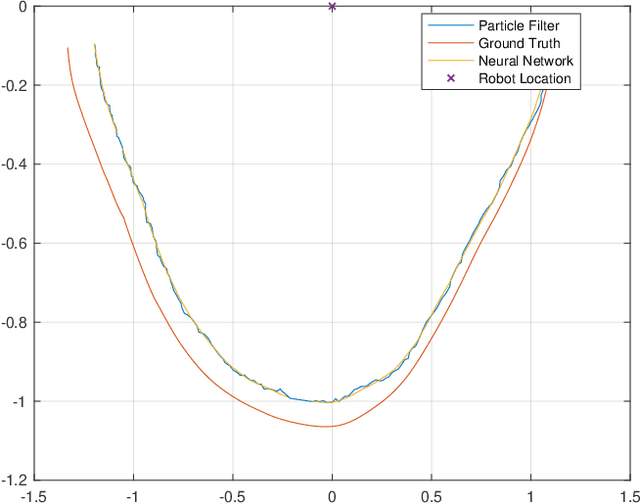

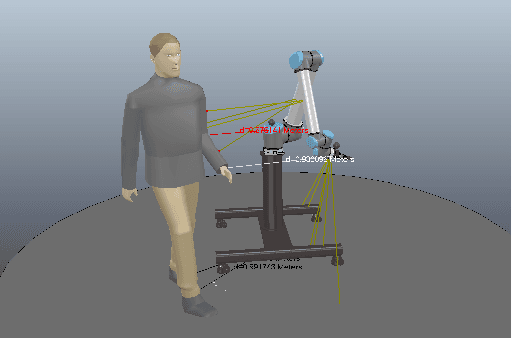

Abstract: In this paper, we propose a simple methodology to detect the partial pose of a human occupying the manipulator workspace using only on-robot time-of-flight laser ranging sensors. The sensors are affixed to each link of the robot in circular arrays, each containing sixteen single-unit laser ranging lidars. Detection is performed by an artificial neural network that takes a highly sparse 3-D point cloud as input and estimates the partial pose, defined as the ground-projection frame of the human footprint. We also present a particle-filter-based approach to tracking when the input data are unreliable. Finally, simulation results are presented and analyzed.
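
The abstract does not specify the filter design. A minimal bootstrap particle filter for a 2-D ground position is sketched below, assuming a random-walk motion model, a Gaussian measurement likelihood, and that unreliable readings arrive as `None`, in which case only the prediction step runs. The function name and all noise parameters are hypothetical.

```python
import math
import random

def particle_filter_track(measurements, n_particles=500, motion_std=0.05,
                          meas_std=0.10, init_box=(-2.0, 2.0), seed=0):
    """Bootstrap particle filter for a human's 2-D ground position (hypothetical helper).

    measurements: sequence of (x, y) tuples in metres, or None when a reading is
    missing or unreliable; for None the update step is skipped.
    Returns one (x, y) estimate per time step.
    """
    rng = random.Random(seed)
    lo, hi = init_box
    # Initialise particles uniformly over a square region of the floor.
    particles = [(rng.uniform(lo, hi), rng.uniform(lo, hi)) for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        # Predict: random-walk motion model (an assumption of this sketch).
        particles = [(x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
                     for x, y in particles]
        if z is not None:
            zx, zy = z
            # Update: Gaussian likelihood of the measurement for each particle.
            weights = [math.exp(-((x - zx) ** 2 + (y - zy) ** 2) / (2.0 * meas_std ** 2))
                       for x, y in particles]
            if sum(weights) > 0.0:
                # Multinomial resampling in proportion to the weights.
                particles = rng.choices(particles, weights=weights, k=n_particles)
        # Estimate: mean of the particle cloud.
        ex = sum(x for x, _ in particles) / n_particles
        ey = sum(y for _, y in particles) / n_particles
        estimates.append((ex, ey))
    return estimates

# Usage: a stationary person with noisy readings and a simulated dropout every fifth step.
noise = random.Random(1)
truth = (0.5, -0.3)
zs = [None if k % 5 == 4 else (truth[0] + noise.gauss(0.0, 0.1), truth[1] + noise.gauss(0.0, 0.1))
      for k in range(30)]
est = particle_filter_track(zs)
print(est[-1])
```

Skipping the update on a dropout lets the particle cloud spread under the motion model, so the estimate's uncertainty grows until a reliable reading arrives.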

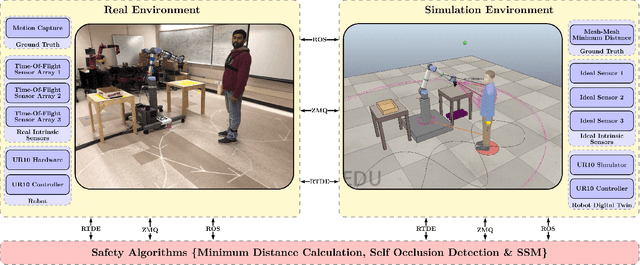

HRC-SoS: Human Robot Collaboration Experimentation Platform as System of Systems

May 03, 2019

Abstract: This paper presents an experimentation platform for human-robot collaboration structured as a system of systems, and proposes a conceptual framework describing the aspects of Human Robot Collaboration. These aspects are the Awareness, Intelligence, and Compliance of the system. Based on this framework, case studies describing experimental setups built on this platform are discussed. Each experiment highlights the use of subsystems such as the digital twin, motion capture system, human-physiological monitoring system, data collection system, and robot control and interface systems. A highlight of this paper is a subsystem that can monitor human physiological feedback during a human-robot collaboration task.

Speed and Separation Monitoring using on-robot Time-of-Flight laser-ranging sensor arrays

Apr 16, 2019

Abstract: In this paper, a speed and separation monitoring (SSM) based safety controller using three time-of-flight ranging sensor arrays fastened to the robot links is implemented. Based on the minimum human-robot distance and their relative velocity, the controller outputs a modulated robot operating speed. To prevent the robot from detecting itself as an obstacle, a self-occlusion detection method based on ray casting is implemented to filter out distance values associated with the robot itself and with the restricted robot workspace. For validation, the robot workspace is monitored using a motion capture setup to create a digital twin of the human and robot. This setup is used to compare the safety, performance, and productivity of several SSM safety configurations, based on the minimum human-robot distance calculated using on-robot time-of-flight sensors, motion capture, and a 2D scanning lidar.
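
The ray-casting self-occlusion step can be sketched as follows, under the assumption (not stated in the abstract) that the robot body is approximated by spheres expressed in the sensor's frame. A reading is attributed to the robot itself when the sensor ray would hit one of those spheres and nothing closer than that hit was measured. The function names, the sphere model, and the tolerance are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def ray_sphere_distance(origin: Vec3, direction: Vec3,
                        center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to its first hit on a sphere, or None on a miss.

    Solves |origin + t * direction - center|^2 = radius^2 for the smallest t >= 0.
    """
    oc = _sub(origin, center)
    b = _dot(direction, oc)
    c = _dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t >= 0.0:
            return t
    return None

def is_self_reading(origin: Vec3, direction: Vec3, measured_range: float,
                    robot_spheres: Sequence[Tuple[Vec3, float]], tol: float = 0.02) -> bool:
    """True if the measured range is explained by the robot's own body.

    If the ray hits a robot sphere at distance t and the sensor reported nothing
    closer than t - tol, no obstacle lies between the sensor and the robot body,
    so the reading is treated as the robot seeing itself and is discarded.
    """
    hits = [ray_sphere_distance(origin, direction, c, r) for c, r in robot_spheres]
    hits = [t for t in hits if t is not None]
    if not hits:
        return False
    return measured_range >= min(hits) - tol

# Usage: one hypothetical link sphere 1 m ahead of a sensor looking along +x.
spheres = [((1.0, 0.0, 0.0), 0.2)]
sensor = (0.0, 0.0, 0.0)
print(is_self_reading(sensor, (1.0, 0.0, 0.0), 0.8, spheres))  # reading lands on the link
print(is_self_reading(sensor, (1.0, 0.0, 0.0), 0.5, spheres))  # something sits in between
print(is_self_reading(sensor, (0.0, 1.0, 0.0), 0.8, spheres))  # ray misses the robot
```

Spheres that enclose the sensor origin itself (for example, a bounding sphere of the link carrying the sensor) should be left out, since every ray starting inside a sphere registers a hit and would be filtered.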
