Shitij Kumar

Human Position Detection & Tracking with On-robot Time-of-Flight Laser Ranging Sensors

Sep 21, 2019

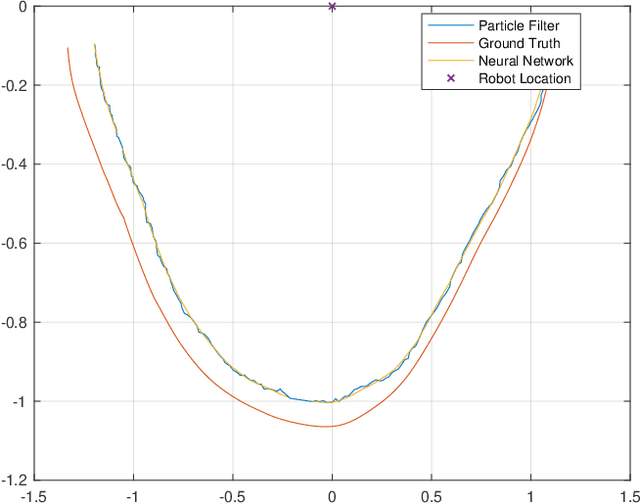

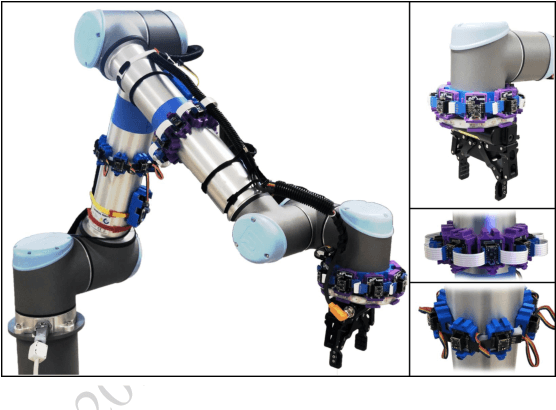

Abstract: In this paper, we propose a simple methodology to detect the partial pose of a human occupying the manipulator workspace using only on-robot time-of-flight laser-ranging sensors. The sensors are affixed to each link of the robot in a circular array, and each array holds sixteen single-unit laser-ranging lidars. Detection is performed by an artificial neural network that takes a highly sparse 3-D point cloud as input and estimates the partial pose, which is the ground-projection frame of the human footprint. We also present a particle-filter-based approach to tracking when the input data is unreliable. Finally, simulation results are presented and analyzed.
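The abstract does not spell out the filter's models. As a minimal sketch of how a particle filter can keep a ground-plane estimate alive when detections are unreliable, the code below simply skips the measurement update whenever a frame yields no usable fix. The random-walk motion model, the Gaussian likelihood, systematic resampling, the class name `GroundPlaneParticleFilter` and all noise values are assumptions for illustration, not the paper's method.

```python
import math
import random


class GroundPlaneParticleFilter:
    """Track a person's ground-plane position (x, y) from noisy, intermittent fixes.

    Hypothetical sketch: the random-walk motion model, isotropic Gaussian
    likelihood and systematic resampling are all assumptions.
    """

    def __init__(self, n=500, init=(0.0, 0.0), init_std=0.5,
                 motion_std=0.05, meas_std=0.15, seed=0):
        self.rng = random.Random(seed)
        self.motion_std = motion_std
        self.meas_std = meas_std
        self.particles = [(init[0] + self.rng.gauss(0.0, init_std),
                           init[1] + self.rng.gauss(0.0, init_std))
                          for _ in range(n)]
        self.weights = [1.0 / n] * n

    def predict(self):
        """Diffuse every particle with zero-mean Gaussian motion noise."""
        s = self.motion_std
        self.particles = [(x + self.rng.gauss(0.0, s), y + self.rng.gauss(0.0, s))
                          for x, y in self.particles]

    def update(self, z):
        """Reweight by a detection z = (x, y); z is None when the frame is unusable."""
        if z is None:
            return  # no reliable detection: keep the predicted belief as-is
        k = 1.0 / (2.0 * self.meas_std ** 2)
        w = [wi * math.exp(-((x - z[0]) ** 2 + (y - z[1]) ** 2) * k)
             for wi, (x, y) in zip(self.weights, self.particles)]
        total = sum(w)
        if total == 0.0:
            return  # detection inconsistent with every particle: treat as outlier
        self.weights = [wi / total for wi in w]
        self._resample()

    def _resample(self):
        """Systematic resampling: one random offset, n evenly spaced pointers."""
        n = len(self.particles)
        step = 1.0 / n
        u = self.rng.random() * step
        new, i, cumulative = [], 0, self.weights[0]
        for j in range(n):
            pointer = u + j * step
            while pointer > cumulative and i < n - 1:
                i += 1
                cumulative += self.weights[i]
            new.append(self.particles[i])
        self.particles = new
        self.weights = [step] * n

    def estimate(self):
        """Weighted mean of the particles."""
        x = sum(w * p[0] for w, p in zip(self.weights, self.particles))
        y = sum(w * p[1] for w, p in zip(self.weights, self.particles))
        return x, y


if __name__ == "__main__":
    pf = GroundPlaneParticleFilter(init=(0.8, 1.8))
    noise = random.Random(1)
    # Simulated detections of a person standing at (1.0, 2.0); None = dropped frame.
    for step in range(60):
        pf.predict()
        z = None if step % 4 == 3 else (1.0 + noise.gauss(0.0, 0.1),
                                        2.0 + noise.gauss(0.0, 0.1))
        pf.update(z)
    print(pf.estimate())
```

Skipping the update on a missing fix lets the motion model widen the belief until a trustworthy detection arrives; a real tracker would likely also gate outliers by distance rather than only on an all-zero weight sum.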

A Framework for Monitoring Human Physiological Response during Human Robot Collaborative Task

Jul 26, 2019

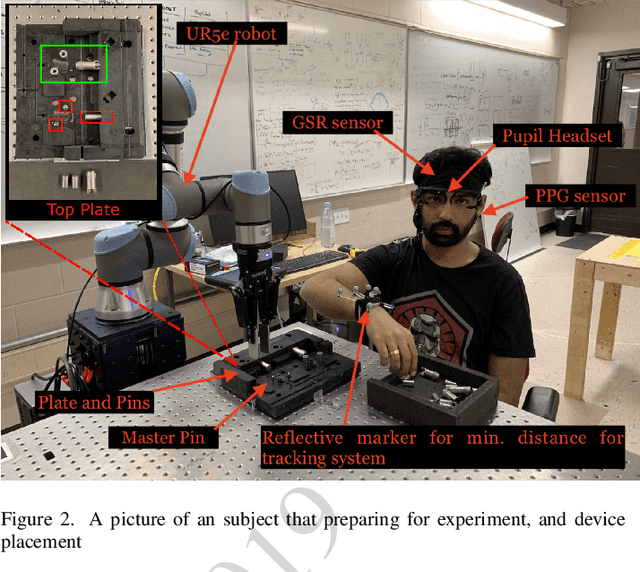

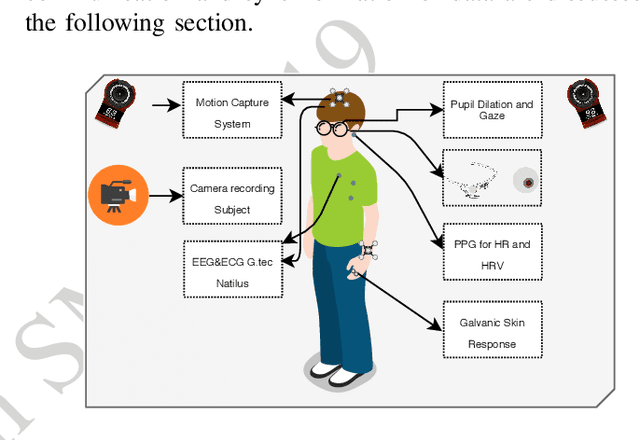

Abstract: In this paper, a framework for monitoring human physiological response during a Human-Robot Collaborative (HRC) task is presented. The framework highlights the importance of generating event markers for both the human and the robot and of synchronizing the collected data. It enables continuous data collection during an HRC task in which changes in robot movement serve as stimuli to invoke a human physiological response. The paper also presents two case studies based on this framework and a data visualization tool for representing and easily analyzing the data collected during an HRC experiment.
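The abstract stresses event markers and data synchronization without giving a procedure. As a minimal sketch, assuming timestamped sample streams and a constant, already-estimated offset between the robot's event clock and the physiological sensor's clock, the code below maps each robot event marker onto the sensor timeline and cuts a fixed window of samples around it. The function names, window lengths and constant-offset model are hypothetical.

```python
from bisect import bisect_left, bisect_right


def nearest_sample(times, t):
    """Index of the timestamp in sorted `times` closest to t."""
    i = bisect_left(times, t)
    if i == 0:
        return 0
    if i == len(times):
        return len(times) - 1
    return i if times[i] - t < t - times[i - 1] else i - 1


def epochs_around_markers(times, samples, markers, clock_offset=0.0,
                          before=1.0, after=3.0):
    """Cut a window of physiological samples around each event marker.

    markers: (label, t) pairs stamped on the robot/event clock.
    clock_offset: seconds added to map that clock onto the sensor clock
    (assumed constant, e.g. estimated once at start-up).
    Returns (label, index of nearest sample, samples in [t - before, t + after]).
    """
    out = []
    for label, t_event in markers:
        t = t_event + clock_offset
        lo = bisect_left(times, t - before)
        hi = bisect_right(times, t + after)
        out.append((label, nearest_sample(times, t), samples[lo:hi]))
    return out


if __name__ == "__main__":
    times = [0.5 * i for i in range(11)]   # 2 Hz sensor clock, 0 to 5 s
    signal = list(range(11))                # stand-in sample values
    print(epochs_around_markers(times, signal, [("robot_speed_change", 2.1)]))
```

Binary search keeps each lookup logarithmic in the stream length; if the two clocks drift, the constant offset would need to be replaced by a fitted time mapping.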

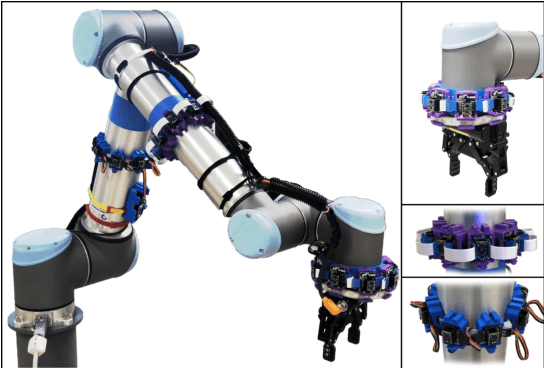

Sensing Volume Coverage of Robot Workspace using On-Robot Time-of-Flight Sensor Arrays for Safe Human Robot Interaction

Jul 03, 2019

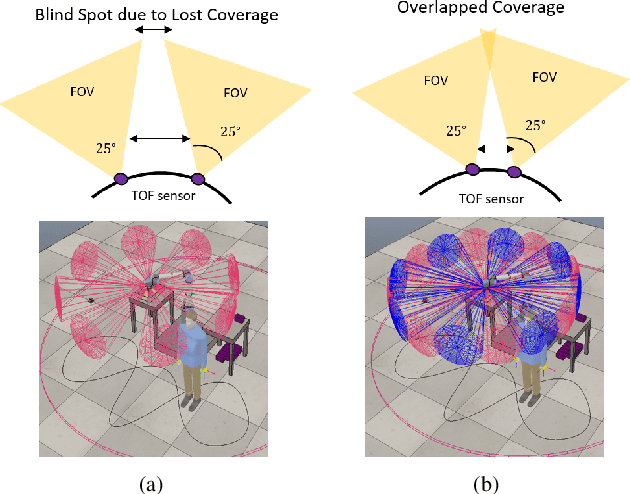

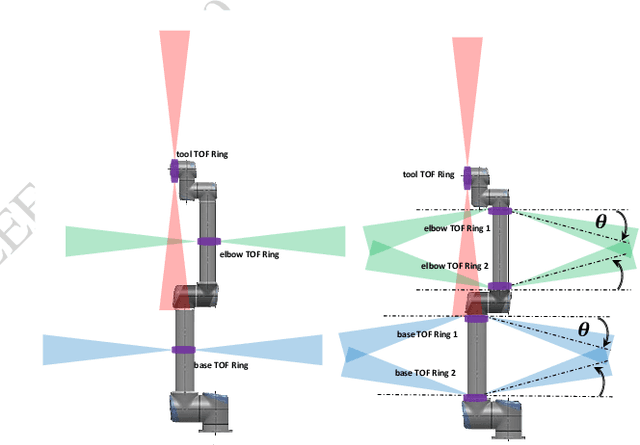

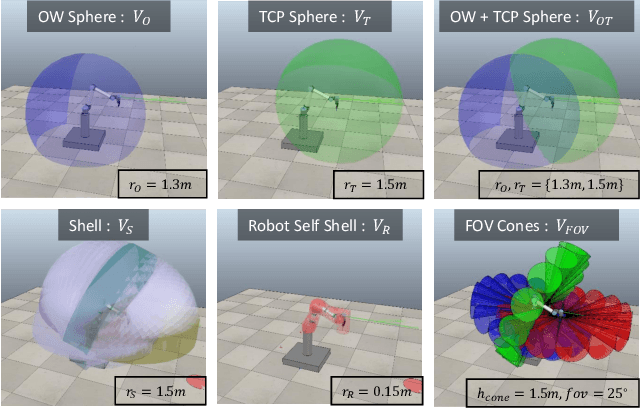

Abstract: In this paper, an analysis of the sensing volume coverage of the robot workspace, as well as of the shared human-robot collaborative workspace, is presented for various configurations of on-robot Time-of-Flight (ToF) sensor array rings. A volumetry methodology using octrees is proposed to quantify the detection/sensing volume of the sensors. The change in sensing volume coverage as the number of sensors per ToF sensor array ring increases, and as the number of rings mounted on a robot link increases, is also studied. Considerations are presented for the maximum ideal volume around the robot workspace that a given ToF sensor array ring placement and orientation setup should cover for safe human-robot interaction. The sensing volume coverage measurements within this maximum ideal volume are tabulated, and observations are made on the coverage of the various ToF configurations in the close and far zones of the robot.
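The abstract names octree-based volumetry without details. The sketch below illustrates the general idea under stated assumptions: each sensor's field of view is modelled as a cone truncated at its maximum range (a convex region for half-angles up to 90°), and a cube around the workspace is recursively split into eight octants. A cell is counted whole once all eight of its corners lie inside a single convex cone, which places the whole cell inside the union; cells still undecided at the depth limit are classified by their centre. The cone model, function names and all numbers are assumptions, not the paper's implementation.

```python
import math
from functools import partial


def in_tof_cone(p, apex, axis, half_angle, max_range):
    """True if point p lies in a cone (unit `axis`, `half_angle` in radians)
    truncated by a sphere of radius `max_range` centred on the apex."""
    d = [p[i] - apex[i] for i in range(3)]
    r = math.sqrt(sum(c * c for c in d))
    if r > max_range:
        return False
    if r == 0.0:
        return True  # the apex itself
    along = sum(d[i] * axis[i] for i in range(3))
    return along >= r * math.cos(half_angle)


def _offsets(center, h):
    """The 8 points center + (±h, ±h, ±h): cell corners when h is the
    half-edge, octant centres when h is half of the half-edge."""
    return [(center[0] + sx * h, center[1] + sy * h, center[2] + sz * h)
            for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]


def octree_volume(regions, center, half, depth):
    """Estimate the volume of the union of convex `regions` (point predicates)
    inside the cube with centre `center` and half-edge `half`."""
    cell = (2.0 * half) ** 3
    corners = _offsets(center, half)
    # All corners inside one convex region => the whole cell is inside it.
    if any(all(inside(c) for c in corners) for inside in regions):
        return cell
    if depth == 0:
        return cell if any(inside(center) for inside in regions) else 0.0
    return sum(octree_volume(regions, child, half / 2.0, depth - 1)
               for child in _offsets(center, half / 2.0))


if __name__ == "__main__":
    # Illustrative single sensor: 12.5 deg half-angle, 1 m range, looking along +x.
    sensor = partial(in_tof_cone, apex=(0.0, 0.0, 0.0), axis=(1.0, 0.0, 0.0),
                     half_angle=math.radians(12.5), max_range=1.0)
    estimate = octree_volume([sensor], (0.0, 0.0, 0.0), 1.0, 5)
    # Exact spherical-sector volume: (2*pi/3) * R^3 * (1 - cos(theta)).
    exact = 2.0 * math.pi / 3.0 * (1.0 - math.cos(math.radians(12.5)))
    print(f"octree {estimate:.4f} m^3 vs exact {exact:.4f} m^3")
```

Passing several cone predicates in `regions` gives the union covered by a whole ring; coverage of a target volume could then be taken as the ratio of this estimate to the target's own volume.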

Cyber-Physical Testbed for Human-Robot Collaborative Task Planning and Execution

May 30, 2019

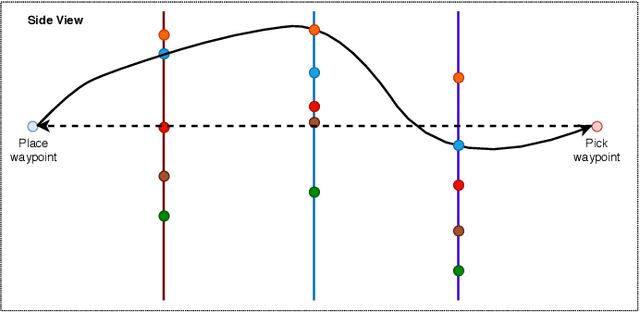

Abstract: In this paper, we present a cyber-physical testbed created to enable a human-robot team to perform a shared task in a shared workspace. The testbed is suited to implementing a tabletop manipulation task, a common human-robot collaboration scenario, and it integrates elements from both the physical and the virtual world. In this work, we report the insights we gathered while exploring how to understand and implement task planning and execution for a human-robot team.

HRC-SoS: Human Robot Collaboration Experimentation Platform as System of Systems

May 03, 2019

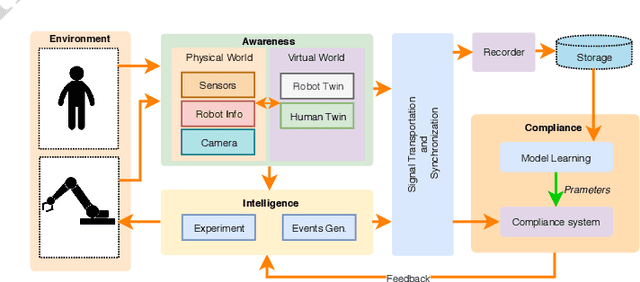

Abstract: This paper presents an experimentation platform for human-robot collaboration as a system of systems and proposes a conceptual framework describing three aspects of Human Robot Collaboration: the Awareness, Intelligence and Compliance of the system. Based on this framework, case studies describing experiment setups performed using this platform are discussed. Each experiment highlights the use of subsystems such as the digital twin, motion capture system, human physiological monitoring system, data collection system, and robot control and interface systems. A highlight of this paper is a subsystem that can monitor human physiological feedback during a human-robot collaboration task.

Speed and Separation Monitoring using on-robot Time-of-Flight laser-ranging sensor arrays

Apr 16, 2019

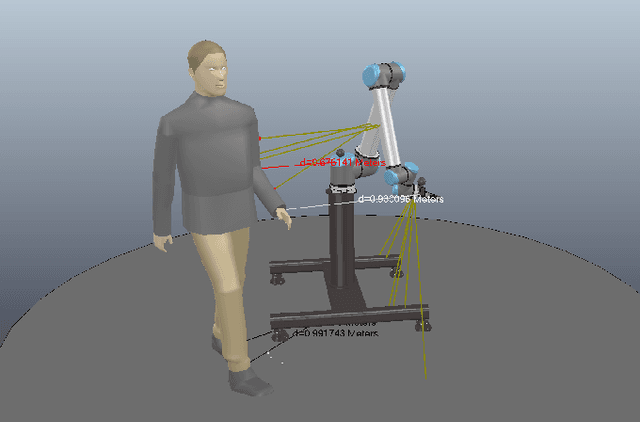

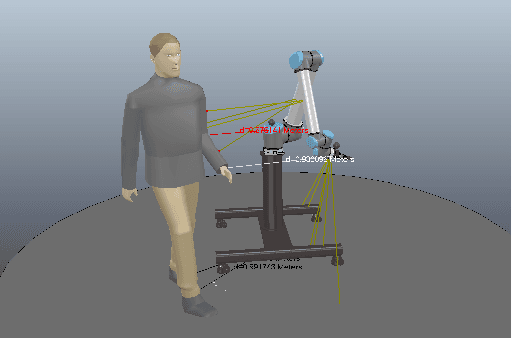

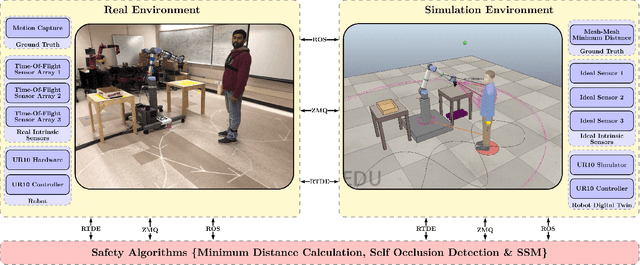

Abstract: In this paper, a speed and separation monitoring (SSM) based safety controller using three time-of-flight ranging sensor arrays fastened to the robot links is implemented. Based on the human-robot minimum distance and their relative velocities, the controller outputs a modulated robot operating speed. To avert self-avoidance (the robot reacting to detections of its own body), a self-occlusion detection method is implemented using a ray-casting technique to filter out the distance values associated with the robot itself and the restricted robot workspace. For validation, the robot workspace is monitored using a motion capture setup to create a digital twin of the human and robot. This setup is used to compare the safety, performance and productivity of various SSM safety configurations based on the human-robot minimum distance calculated using on-robot Time-of-Flight sensors, motion capture and a 2D scanning lidar.
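As a rough sketch of the two steps this abstract describes, assuming each ToF reading comes with its sensor's world-frame origin and unit beam direction (for example from forward kinematics), the code below (1) casts that beam against a crude model of the robot's own links (spheres) and the table plane bounding the restricted workspace, discarding readings that match the expected self or table hit, and (2) maps the smallest remaining range to a speed factor. The sphere link model, the simplified protective-distance form (loosely modelled on the ISO/TS 15066 separation-distance terms) and every parameter value are assumptions, not the paper's controller.

```python
import math


def ray_sphere(origin, direction, center, radius):
    """Range along a unit ray to its first intersection with a sphere, or None."""
    oc = [origin[i] - center[i] for i in range(3)]
    b = sum(oc[i] * direction[i] for i in range(3))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t >= 0.0:
            return t
    return None


def expected_self_range(origin, direction, link_spheres, table_z):
    """Range at which the beam would hit the robot itself or the table plane."""
    hits = [t for t in (ray_sphere(origin, direction, c, r) for c, r in link_spheres)
            if t is not None]
    if direction[2] != 0.0:
        t = (table_z - origin[2]) / direction[2]
        if t >= 0.0:
            hits.append(t)
    return min(hits, default=math.inf)


def filter_self_hits(readings, link_spheres, table_z, tol=0.03):
    """Keep (origin, direction, range) readings clearly shorter than the self/table hit."""
    return [(o, d, rng) for o, d, rng in readings
            if rng < expected_self_range(o, d, link_spheres, table_z) - tol]


def speed_scale(d_min, v_human, v_robot, t_react=0.1, t_stop=0.3,
                brake_dist=0.1, intrusion=0.05, band=0.5):
    """Map a minimum separation (m) to a speed factor in [0, 1] (assumed values)."""
    # Simplified protective separation distance (assumed form).
    s_p = v_human * (t_react + t_stop) + v_robot * t_react + brake_dist + intrusion
    if d_min <= s_p:
        return 0.0  # protective stop
    return min(1.0, (d_min - s_p) / band)  # linear slow-down over `band` metres


if __name__ == "__main__":
    links = [((1.0, 0.0, 1.0), 0.2)]  # one link approximated by a sphere
    readings = [((0.0, 0.0, 1.0), (0.0, 0.0, -1.0), 0.99),  # sees the table: dropped
                ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), 0.80),   # sees its own link: dropped
                ((0.0, 0.0, 1.0), (0.0, 1.0, 0.0), 1.20)]   # a person: kept
    kept = filter_self_hits(readings, links, table_z=0.0)
    # The smallest kept beam range serves as a proxy for human-robot minimum distance.
    d_min = min((rng for _, _, rng in kept), default=math.inf)
    print(d_min, speed_scale(d_min, v_human=1.6, v_robot=0.5))
```

The tolerance `tol` absorbs sensor noise near the expected self-hit range; choosing it trades missed self-occlusions against discarding a person standing right next to a link.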
