Sang-Woon Jeon

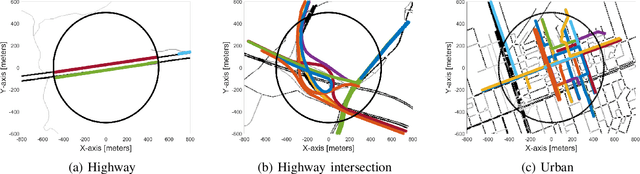

3D UAV Trajectory Planning for IoT Data Collection via Matrix-Based Evolutionary Computation

Oct 08, 2024

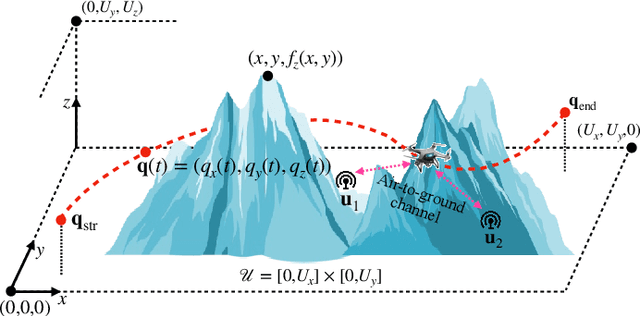

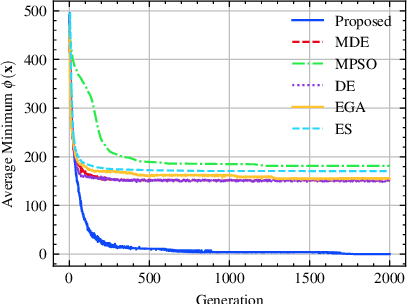

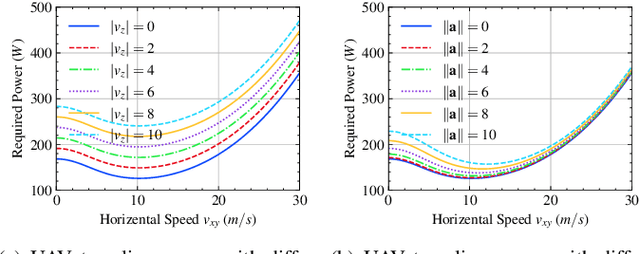

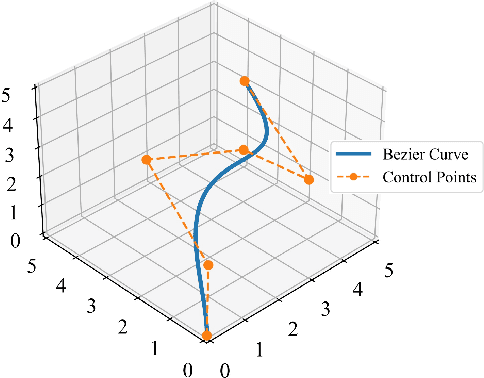

Abstract: Unmanned aerial vehicles (UAVs) are increasingly becoming vital tools in various wireless communication applications, including the internet of things (IoT) and sensor networks, thanks to their rapid and agile non-terrestrial mobility. Despite recent research, planning three-dimensional (3D) UAV trajectories over a continuous temporal-spatial domain remains challenging because it requires solving computationally intensive optimization problems. In this paper, we study UAV-assisted IoT data collection aimed at minimizing total energy consumption while accounting for the UAV's physical capabilities, the heterogeneous data demands of IoT nodes, and 3D terrain. We propose a matrix-based differential evolution with constraint handling (MDE-CH), a computationally efficient evolutionary algorithm designed to address non-convex constrained optimization problems with several different types of constraints. Numerical evaluations demonstrate that the proposed MDE-CH algorithm provides a continuous 3D temporal-spatial UAV trajectory that efficiently minimizes energy consumption under various practical constraints and outperforms the conventional fly-hover-fly model for both two-dimensional (2D) and 3D trajectory planning.
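For readers unfamiliar with differential evolution, the following sketch shows the classic DE/rand/1/bin loop combined with Deb's feasibility rules, one widely used constraint-handling technique. It is an illustration under stated assumptions, not the MDE-CH algorithm. The paper's matrix-based formulation, trajectory encoding, and constraint handling are not reproduced here. The function name, the toy problem, and the parameters (`F`, `CR`, population size, generations) are hypothetical choices.

```python
import random


def de_with_feasibility(objective, violation, bounds, pop_size=30, gens=200,
                        F=0.5, CR=0.9, seed=0):
    """Minimize objective(x) subject to violation(x) == 0 with DE/rand/1/bin.

    Generic sketch using Deb's feasibility rules; NOT the MDE-CH algorithm.
    violation(x) must return 0 for feasible points and a positive amount otherwise.
    """
    rng = random.Random(seed)
    dim = len(bounds)
    # Population: pop_size rows, each a candidate vector of length dim.
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]

    def better(a, b):
        # Deb's rules: smaller constraint violation wins (so feasible beats
        # infeasible); with equal violation (e.g., both feasible) compare objectives.
        va, vb = violation(a), violation(b)
        if va != vb:
            return va < vb
        return objective(a) <= objective(b)

    for _ in range(gens):
        for i in range(pop_size):
            # Three distinct donors, all different from the target i.
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)  # at least one coordinate comes from the mutant
            trial = []
            for d, (lo, hi) in enumerate(bounds):
                if d == j_rand or rng.random() < CR:
                    # Mutation: base vector plus scaled difference, clipped to the box.
                    v = pop[r1][d] + F * (pop[r2][d] - pop[r3][d])
                    trial.append(min(max(v, lo), hi))
                else:
                    trial.append(pop[i][d])  # binomial crossover keeps the parent gene
            # One-to-one selection under Deb's rules, replacing in place
            # (an asynchronous DE variant).
            if better(trial, pop[i]):
                pop[i] = trial
    # Best member: feasibility first, then objective value.
    return min(pop, key=lambda x: (violation(x), objective(x)))
```

Under Deb's rules, an infeasible trial never replaces a feasible parent. Once a feasible point exists, the population therefore keeps it. On a toy problem such as "minimize the squared distance to (2, 2) subject to x + y <= 2", the constrained optimum is (1, 1) with objective value 2.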

Optimal Batch Allocation for Wireless Federated Learning

Apr 03, 2024

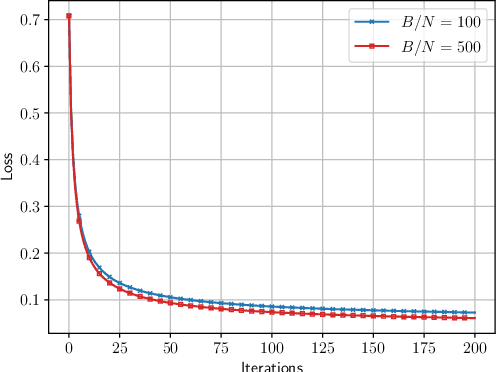

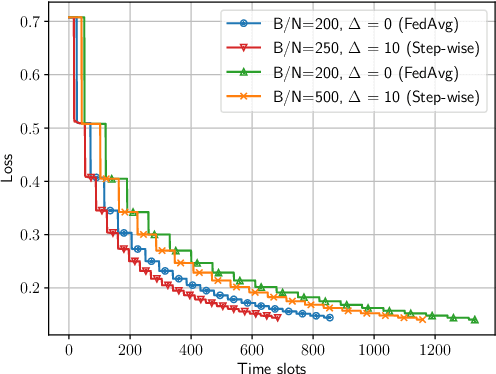

Abstract: Federated learning aims to construct a global model that fits a dataset distributed across local devices without direct access to private data, leveraging communication between a server and the local devices. Under a practical communication scheme, we study the completion time required to achieve a target performance. Specifically, we analyze the number of iterations required for federated learning to reach a specific optimality gap from the minimum global loss. Subsequently, we characterize the time required for each iteration under two fundamental multiple access schemes: time-division multiple access (TDMA) and random access (RA). We propose a step-wise batch allocation and show that it is optimal for TDMA-based federated learning systems. Additionally, we show that the non-zero batch gap between devices provided by the proposed step-wise batch allocation significantly reduces the completion time for RA-based learning systems. Numerical evaluations on real data validate these analytical results and show that the completion time can be reduced substantially.

Hybrid Online-Offline Learning for Task Offloading in Mobile Edge Computing Systems

Feb 27, 2024

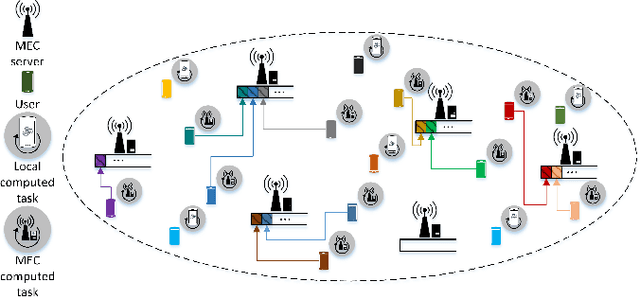

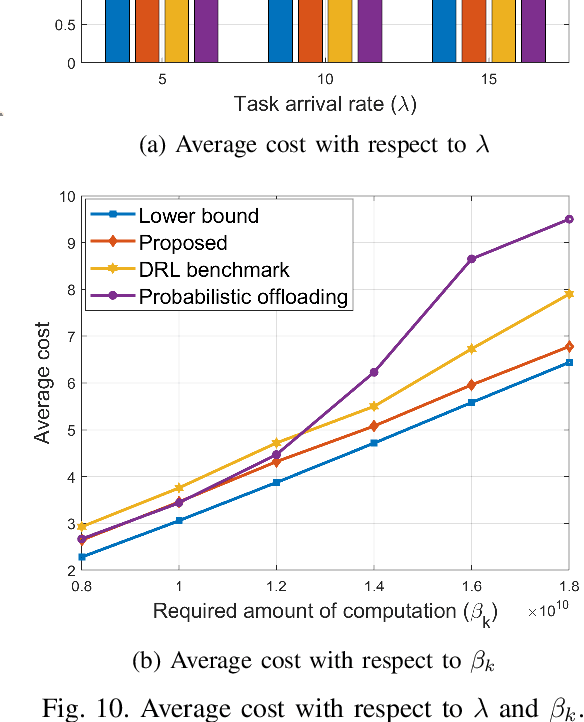

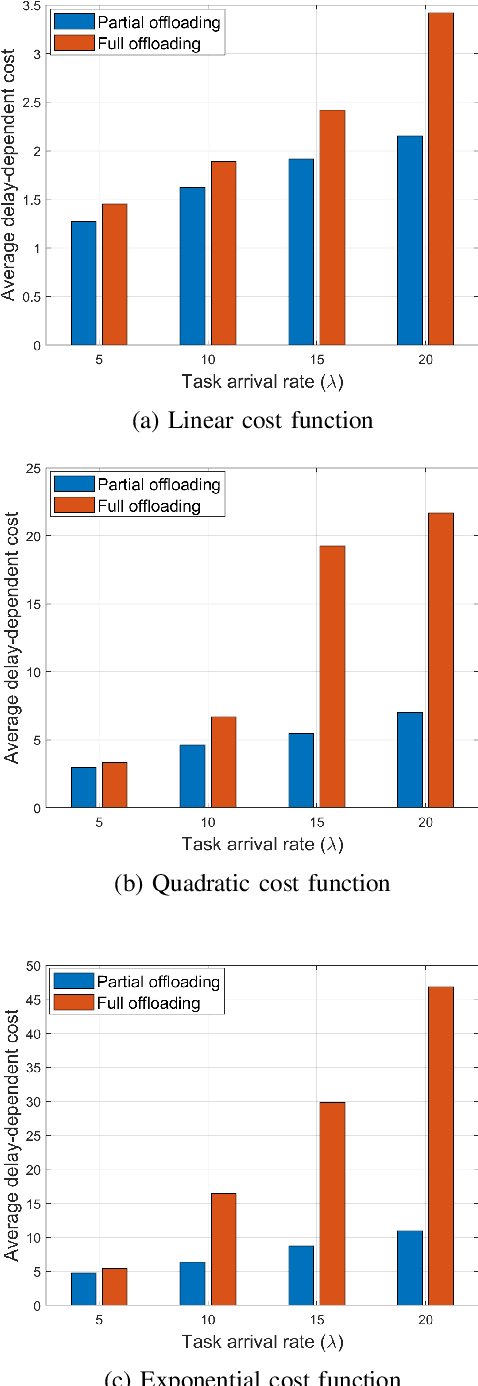

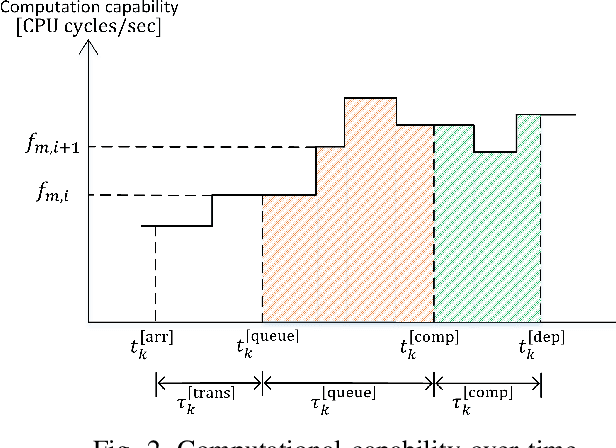

Abstract: We consider a multi-user multi-server mobile edge computing (MEC) system in which users arrive at the network randomly over time and generate computation tasks that are either computed locally on their own devices or offloaded to one of the MEC servers. Under such a dynamic network environment, we propose a novel task offloading policy based on hybrid online-offline learning, which can efficiently reduce the overall computation delay and energy consumption using only the information available at the MEC server nearest to each user. We provide a practical signaling and learning framework that can train deep neural networks for both online and offline learning and can adjust its offloading policy based on the queuing status of each MEC server and the network dynamics. Numerical results demonstrate that the proposed scheme significantly reduces the average computation delay compared to conventional offloading methods across a broad class of network environments. It is further shown that the proposed hybrid online-offline learning framework can be extended to a general cost function reflecting both delay- and energy-dependent metrics.

* accepted by IEEE Transactions on Wireless Communications

Rate-Splitting Multiple Access for 6G Networks: Ten Promising Scenarios and Applications

Jun 22, 2023

Abstract: In the upcoming 6G era, multiple access (MA) will play an essential role in achieving the high throughput required by a wide range of wireless applications. Since MA and interference management are closely related, conventional MA techniques are limited in that they cannot provide near-optimal performance across all interference regimes. Recently, rate-splitting multiple access (RSMA) has been gaining much attention. RSMA splits each user's message into two parts: a common part, decodable by every user, and a private part, decodable only by the intended user. Each user first decodes the common message, removes it using successive interference cancellation (SIC), and then decodes its private message. By doing so, RSMA not only embraces existing MA techniques as special cases but also provides significant performance gains by efficiently mitigating inter-user interference over a broad range of interference regimes. In this article, we first present the theoretical foundation of RSMA. Subsequently, we put forth four key benefits of RSMA: spectral efficiency, robustness, scalability, and flexibility. Building on these, we describe how RSMA can enable ten promising scenarios and applications, along with future research directions, to pave the way for 6G.
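The common/private split and SIC decoding order described above can be made concrete with a minimal achievable-rate computation. This sketch assumes the simplest setting for illustration: a single-antenna transmitter, two single-antenna users with scalar channel power gains, one common stream, Gaussian signaling, and unit noise power. The function name and the power values are hypothetical and are not taken from the article.

```python
import math


def rsma_two_user_rates(g, p_common, p_private, noise=1.0):
    """Achievable rates (bits/s/Hz) of 1-layer rate splitting for two users.

    g: channel power gains (g1, g2); p_private: private-stream powers (P1, P2).
    Illustrative single-antenna model, not the article's system model.
    """
    total_private = sum(p_private)
    # Step 1: every user decodes the common stream while treating both private
    # streams as noise, so its rate is limited by the weaker user.
    common_rate = min(
        math.log2(1 + gk * p_common / (gk * total_private + noise)) for gk in g
    )
    # Step 2: after SIC removes the common stream, user k decodes its private
    # stream while treating the other user's private stream as noise.
    private_rates = [
        math.log2(1 + g[k] * p_private[k] / (g[k] * p_private[1 - k] + noise))
        for k in range(2)
    ]
    # The common stream is shared once, so it is counted once in the sum rate.
    return {"common": common_rate, "private": private_rates,
            "sum": common_rate + sum(private_rates)}
```

Setting `p_common = 0` recovers the familiar approach of treating interference as noise. Putting all power into the common stream turns the scheme into a single stream that both users decode. Intermediate power splits trade off between these two extremes, which gives a small-scale view of how RSMA contains other MA schemes as special cases.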

CEC: Crowdsourcing-based Evolutionary Computation for Distributed Optimization

Apr 12, 2023

Abstract: Crowdsourcing is an emerging computing paradigm that takes advantage of the intelligence of a crowd to solve complex problems effectively. Besides collecting and processing data, there is also great demand for the crowd to conduct optimization. Inspired by this, this paper introduces crowdsourcing into evolutionary computation (EC) and proposes a crowdsourcing-based evolutionary computation (CEC) paradigm for distributed optimization. EC is helpful for the optimization tasks of crowdsourcing, and, in turn, crowdsourcing can break the spatial limitation of EC for large-scale distributed optimization. Therefore, this paper first introduces the paradigm of crowdsourcing-based distributed optimization and then elaborates on CEC. CEC performs optimization based on a server and a group of workers, in which the server dispatches a large task to the workers. Workers search for promising solutions through EC optimizers and cooperate with connected neighbors. To eliminate the uncertainties brought by the heterogeneity of worker behaviors and devices, the server adopts a competitive ranking and uncertainty detection strategy to guide the cooperation of workers. To illustrate the satisfactory performance of CEC, a crowdsourcing-based swarm optimizer is implemented as an example for extensive experiments. Comparison results on benchmark functions and a distributed clustering optimization problem demonstrate the potential applications of CEC.

Reconfigurable Intelligent Surface Aided Hybrid Beamforming: Optimal Placement and Beamforming Design

Mar 21, 2023

Abstract: We consider reconfigurable intelligent surface (RIS) aided sixth-generation (6G) terahertz (THz) communications for indoor environments, in which a base station (BS) wishes to send independent messages to its serving users with the help of multiple RISs. In indoor environments, various obstacles such as pillars, walls, and other objects can block the line-of-sight path between the BS and a user, which can significantly degrade performance. To overcome this limitation of indoor THz communication, we first optimize the placement of RISs to maximize the coverage area. Under the optimized RIS placement, we propose 3D hybrid beamforming at the BS and phase adjustment at the RISs, which are jointly performed at the BS and RISs via low-complexity codebook-based 3D beam scanning. Numerical simulations demonstrate that the proposed scheme significantly improves the average sum rate compared to the cases of no RIS and randomly deployed RISs. It is further shown that the proposed codebook-based 3D beam scanning efficiently aligns analog beams over BS-user links or BS-RIS-user links and, as a consequence, achieves an average sum rate close to that of coherent beam alignment, which requires global channel state information.
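Codebook-based beam scanning avoids explicit channel estimation. The transmitter tries each codeword in a predefined set and keeps the one with the strongest received power. The sketch below shows that idea in its simplest 1D form, assuming a half-wavelength uniform linear array and a DFT codebook. The proposed 3D scheme, the RIS phase adjustment, and the hybrid analog/digital split are not modeled, and all names are hypothetical.

```python
import cmath
import math


def steering_vector(n_ant, sin_theta):
    """Unit-norm response of a half-wavelength ULA toward a direction with sine sin_theta."""
    return [cmath.exp(1j * math.pi * n * sin_theta) / math.sqrt(n_ant)
            for n in range(n_ant)]


def dft_codebook(n_ant):
    """n_ant beams whose sin(angle) values are uniformly spaced on [-1, 1)."""
    return [steering_vector(n_ant, -1 + 2 * k / n_ant) for k in range(n_ant)]


def beam_sweep(channel, codebook):
    """Try every codeword and return the index maximizing |h^H w|^2."""
    def gain(w):
        return abs(sum(h.conjugate() * wk for h, wk in zip(channel, w))) ** 2
    return max(range(len(codebook)), key=lambda k: gain(codebook[k]))
```

The sweep costs one received-power measurement per codeword. This is why codebook scanning has low complexity compared with coherent alignment based on full channel state information. The price is a small beamforming loss when the true direction falls between codewords.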

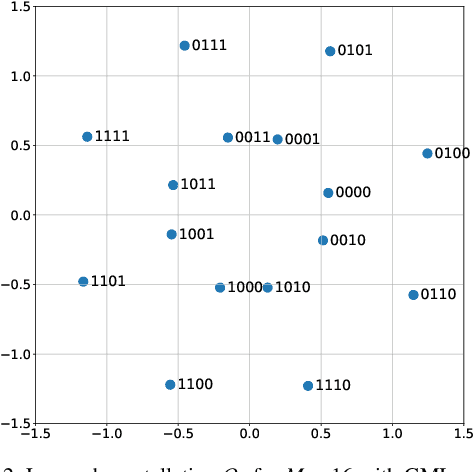

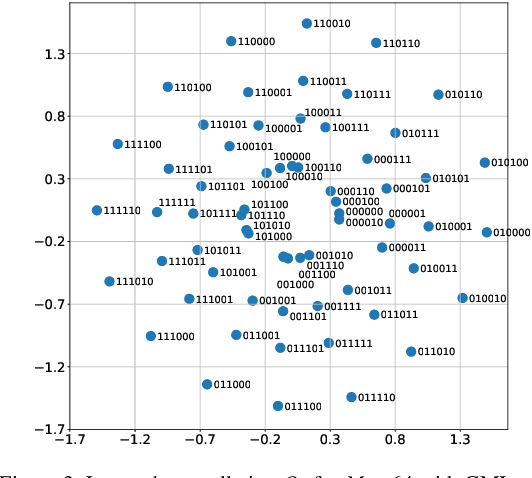

Hybrid Neural Coded Modulation: Design and Training Methods

Feb 04, 2022

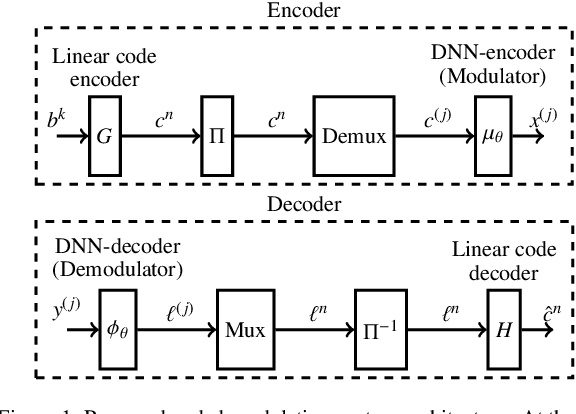

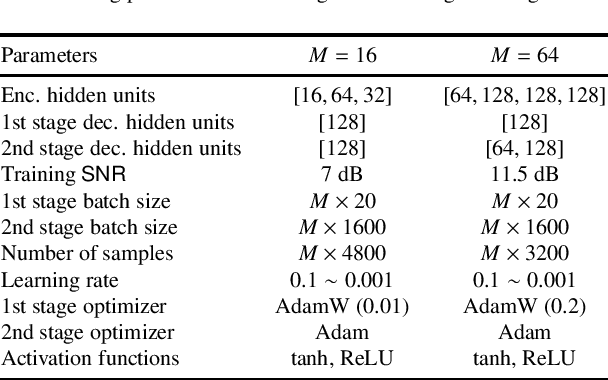

Abstract: We propose a hybrid coded modulation scheme consisting of inner and outer codes. The outer code can be any standard binary linear code with efficient soft-decoding capability (e.g., low-density parity-check (LDPC) codes). The inner code is designed using a deep neural network (DNN) that takes the channel-coded bits and outputs modulated symbols. For training the DNN, we propose a loss function inspired by the generalized mutual information (GMI). The resulting constellations are shown to outperform the conventional quadrature amplitude modulation (QAM)-based coding scheme for modulation orders 16 and 64 with 5G standard LDPC codes.
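For bit-interleaved coded modulation with a soft-decoding outer code, a standard Monte Carlo estimate of the GMI is computed from bit LLRs as m minus the average per-bit binary cross-entropy (in bits). This relation is one reason a GMI-inspired training loss is natural. The sketch below implements that textbook estimator. It is not necessarily the loss used in the paper. The LLR convention log(P(b=0|y)/P(b=1|y)) and the function names are assumptions.

```python
import math


def softplus_bits(x):
    """Numerically stable log2(1 + exp(x))."""
    return (max(x, 0.0) + math.log1p(math.exp(-abs(x)))) / math.log(2)


def gmi_estimate(bits, llrs):
    """Monte Carlo GMI estimate in bits per symbol for bit-metric decoding.

    bits[n][i] is the i-th transmitted bit of symbol n and llrs[n][i] is the
    demapper LLR log(P(b=0|y) / P(b=1|y)) for that bit.
    """
    m = len(bits[0])  # bits per symbol, e.g. 4 for 16-ary modulation
    total = 0.0
    for b_row, l_row in zip(bits, llrs):
        for b, llr in zip(b_row, l_row):
            sign = 1.0 - 2.0 * b  # +1 for bit 0, -1 for bit 1
            # -log2 P(true bit | y): the per-bit binary cross-entropy in bits
            total += softplus_bits(-sign * llr)
    return m - total / len(bits)
```

Uninformative LLRs (all zero) give a GMI of 0. Confident, correct LLRs push it toward m. Maximizing this estimate is therefore the same as minimizing the summed per-bit cross-entropy.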

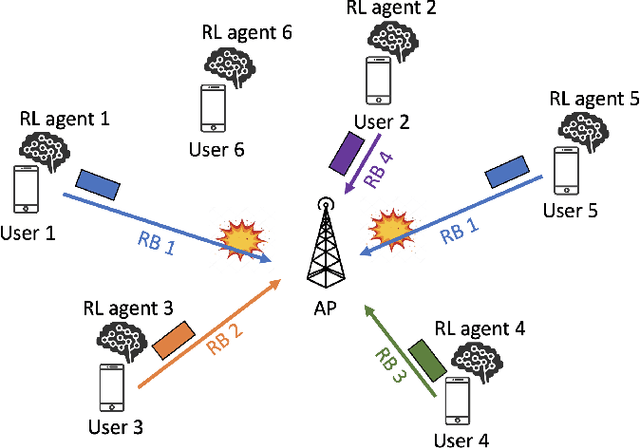

Dynamic Multichannel Access via Multi-agent Reinforcement Learning: Throughput and Fairness Guarantees

May 10, 2021

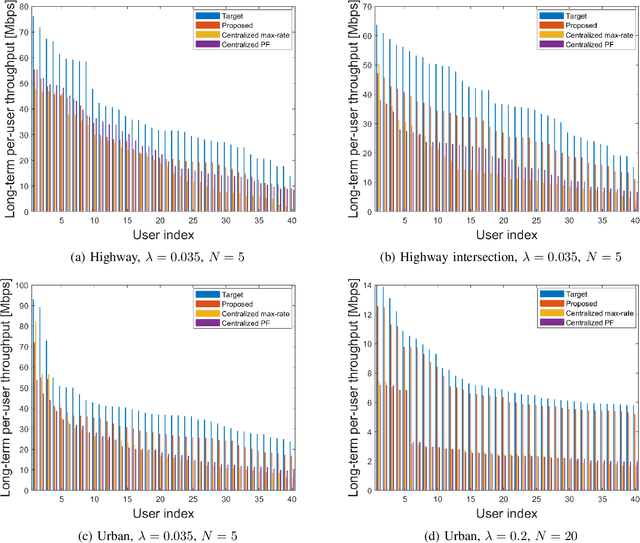

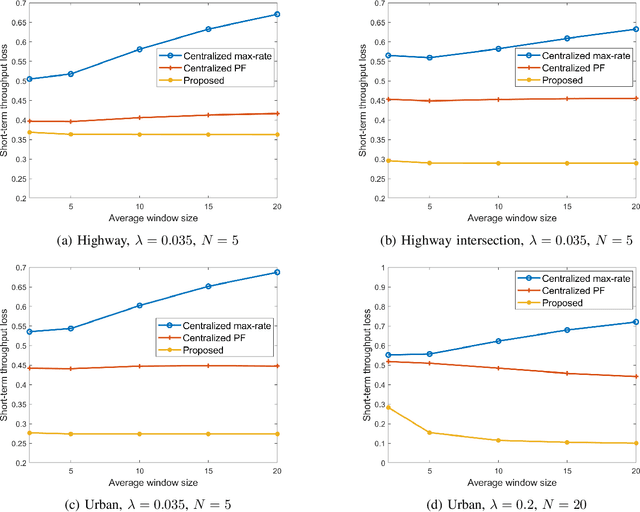

Abstract: We consider a multichannel random access system in which each user accesses a single channel at each time slot to communicate with an access point (AP). Users arrive at the system at random, remain active for a certain number of time slots, and then leave the system. Under such a dynamic network environment, we propose a distributed multichannel access protocol based on multi-agent reinforcement learning (RL) to improve both throughput and fairness among active users. Unlike previous approaches that adjust channel access probabilities at each time slot, the proposed RL algorithm deterministically selects a set of channel access policies for several consecutive time slots. To effectively reduce the complexity of the proposed RL algorithm, we adopt a branching dueling Q-network architecture and propose an efficient training methodology for producing proper Q-values over time-varying user sets. We perform extensive simulations in realistic traffic environments and demonstrate that the proposed online learning improves both throughput and fairness compared to conventional RL approaches and centralized scheduling policies.
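A branching dueling Q-network keeps one output head per action dimension instead of one output per joint action. The sketch below shows only the standard aggregation used in action-branching architectures: a shared state value plus mean-centred per-branch advantages, followed by greedy selection in each branch. It assumes, purely for illustration, that each branch corresponds to one of the consecutive time slots and each sub-action to a channel. That mapping is not necessarily the paper's design, and the function names and numbers are hypothetical.

```python
def branching_dueling_q(value, advantages):
    """Per-branch Q-values: Q_d(s, a) = V(s) + A_d(s, a) - mean_a' A_d(s, a')."""
    q = []
    for branch in advantages:
        mean_adv = sum(branch) / len(branch)  # centring keeps V and A identifiable
        q.append([value + a - mean_adv for a in branch])
    return q


def greedy_actions(q_branches):
    """Pick the argmax sub-action independently in each branch."""
    return [max(range(len(qs)), key=qs.__getitem__) for qs in q_branches]
```

With K branches of C sub-actions each, the heads output K·C values rather than the C^K joint Q-values a flat Q-network would need. This is the complexity reduction that motivates the branching architecture.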
