Jinseok Choi

Power-Constrained and Quantized MIMO-RSMA Systems with Imperfect CSIT: Joint Precoding, Antenna Selection, and Power Control

Aug 07, 2025

Abstract: To utilize the full potential of the available power at a base station (BS), we propose a joint precoding, antenna selection, and transmit power control algorithm under a total power budget at the BS. We formulate a sum spectral efficiency (SE) maximization problem for downlink multi-user multiple-input multiple-output (MIMO) rate-splitting multiple access (RSMA) systems with arbitrary-resolution digital-to-analog converters (DACs). We reformulate the problem by defining the ergodic sum SE using the conditional average rate approach to handle imperfect channel state information at the transmitter (CSIT), and by using approximation techniques to make the problem more tractable. Then, we decompose the problem into precoding direction and power control subproblems. We solve the precoding direction subproblem by identifying a superior Lagrangian stationary point, and the power control subproblem using gradient descent. We also propose a complexity-reduction approach that is more suitable for massive MIMO systems. Simulation results not only validate the proposed algorithm but also reveal that, when the full potential of the power budget at the BS is utilized, medium-resolution DACs with 8-11 bits may actually be more power-efficient than low-resolution DACs.
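The abstract states that the power control subproblem is solved by gradient descent. As a rough illustration of that idea only, the sketch below runs projected gradient ascent on a simplified, interference-free sum rate `sum_k log2(1 + g_k p_k)` under a total power budget. The channel gains, step size, and helper names are assumptions, and the toy model omits the DAC quantization, antenna selection, and RSMA structure of the actual problem; a bisection water-filling solution is included only as a sanity check.

```python
import numpy as np

def project_to_budget(u, total_power):
    """Euclidean projection of u onto {p >= 0, sum(p) = total_power}."""
    s = np.sort(u)[::-1]                          # sort in descending order
    cssv = np.cumsum(s) - total_power             # cumulative sums minus the budget
    idx = np.arange(1, len(u) + 1)
    rho = np.nonzero(s - cssv / idx > 0)[0][-1]   # last index that stays positive
    theta = cssv[rho] / (rho + 1)                 # common shift applied to every entry
    return np.maximum(u - theta, 0.0)

def power_control_pga(gains, total_power, step=0.1, iters=3000):
    """Projected gradient ascent on sum_k log2(1 + g_k p_k) (hypothetical toy model)."""
    p = np.full(len(gains), total_power / len(gains))       # start from equal power
    for _ in range(iters):
        grad = gains / ((1.0 + gains * p) * np.log(2.0))    # partial derivatives of the sum rate
        p = project_to_budget(p + step * grad, total_power)
    return p

def water_filling(gains, total_power, iters=100):
    """Reference solution: bisection on the water level mu."""
    lo, hi = 0.0, total_power + np.max(1.0 / gains)
    for _ in range(iters):
        mu = 0.5 * (lo + hi)
        if np.sum(np.maximum(mu - 1.0 / gains, 0.0)) > total_power:
            hi = mu
        else:
            lo = mu
    return np.maximum(0.5 * (lo + hi) - 1.0 / gains, 0.0)

gains = np.array([2.0, 1.0, 0.25])                # hypothetical effective channel gains
p_gd = power_control_pga(gains, total_power=2.0)
print(np.round(p_gd, 4), np.round(water_filling(gains, 2.0), 4))  # both about [1.25, 0.75, 0]
```

The projection step is what enforces the total power budget after each gradient step; the weakest channel ends up with zero power, which is the same qualitative behavior water-filling predicts.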

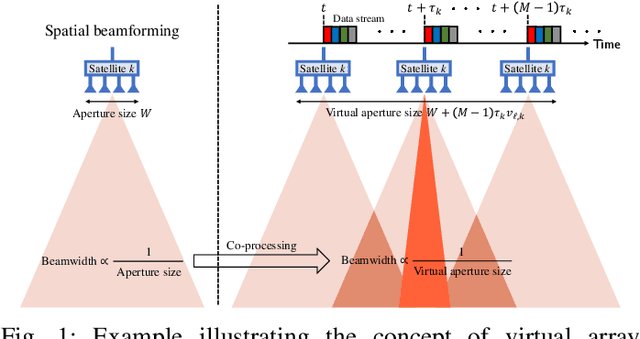

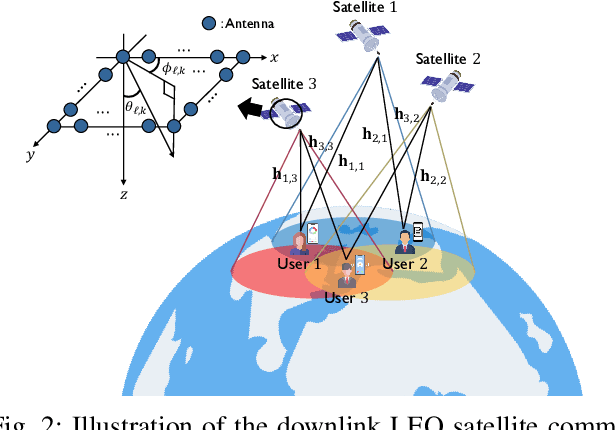

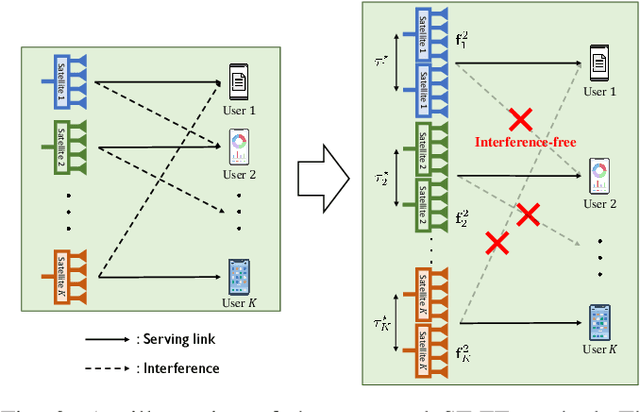

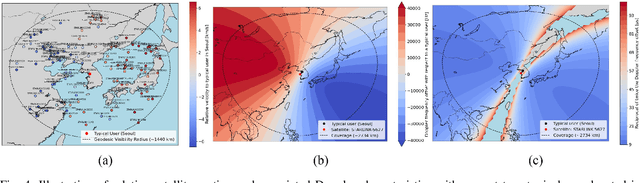

Space-Time Beamforming for LEO Satellite Communications

May 12, 2025

Abstract: Inter-beam interference poses a significant challenge in low Earth orbit (LEO) satellite communications due to dense satellite constellations. To address this issue, we introduce space-time beamforming, a novel paradigm that leverages the space-time channel vector, uniquely determined by the angle of arrival (AoA) and relative Doppler shift, to optimize beamforming between a moving satellite transmitter and a ground station user. We propose two space-time beamforming techniques: space-time zero-forcing (ST-ZF) and space-time signal-to-leakage-plus-noise ratio (ST-SLNR) maximization. In a partially connected interference channel, ST-ZF achieves a 3 dB SNR gain over the conventional interference avoidance method using maximum ratio transmission beamforming. Moreover, in general interference networks, ST-SLNR beamforming significantly enhances the sum spectral efficiency compared with conventional interference management approaches. These results demonstrate the effectiveness of space-time beamforming in improving spectral efficiency and mitigating interference in next-generation LEO satellite networks.
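Zero-forcing precoding, which ST-ZF builds on, inverts the stacked user channels so that each user receives no interference from the other streams. The sketch below shows only the conventional spatial ZF step on a random channel matrix `H` (an assumption); in the space-time version the rows would presumably be space-time channel vectors built from the AoA and Doppler shift, and that construction is not modeled here.

```python
import numpy as np

def zero_forcing_precoder(H, total_power=1.0):
    """Conventional ZF: W = H^H (H H^H)^{-1}, scaled so that ||W||_F^2 = total_power.

    H has shape (num_users, num_tx_antennas) with num_users <= num_tx_antennas.
    """
    K = H.shape[0]
    W = H.conj().T @ np.linalg.solve(H @ H.conj().T, np.eye(K))
    W *= np.sqrt(total_power) / np.linalg.norm(W, "fro")   # enforce the power budget
    return W

rng = np.random.default_rng(0)
K, N = 3, 8                       # hypothetical: 3 single-antenna users, 8 transmit antennas
H = (rng.standard_normal((K, N)) + 1j * rng.standard_normal((K, N))) / np.sqrt(2)
W = zero_forcing_precoder(H)
G = H @ W                         # effective channel; diagonal under ZF
print(np.round(np.abs(G), 3))     # off-diagonal (inter-user) terms are numerically zero
```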

A New Interpretation of the Time-Interleaved ADC Mismatch Problem: A Tracking-Based Hybrid Calibration Approach

Mar 13, 2025

Abstract: Time-interleaved ADCs (TI-ADCs) achieve high sampling rates by interleaving multiple sub-ADCs in parallel. Mismatch errors between the sub-ADCs, however, can significantly degrade signal quality and are a major performance bottleneck. This paper presents a hybrid calibration approach that interprets the mismatch problem as a tracking problem and uses an extended Kalman filter for online estimation and compensation of the mismatch errors. After estimation, the desired signal is reconstructed using a truncated fractional delay filter and a high-pass filter. Simulations demonstrate that our algorithm substantially outperforms the existing hybrid calibration method in both mismatch estimation and compensation.
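The reconstruction stage mentions a truncated fractional delay filter. A common textbook form is a truncated sinc, here additionally tapered by a Hamming window; the sketch below assumes that form, which may differ from the filter used in the paper, and checks that it delays a low-frequency sine by 0.3 samples on top of its integer group delay. The tap count, window, and test signal are illustrative choices.

```python
import numpy as np

def fractional_delay_fir(delay, num_taps=61):
    """Hamming-windowed, truncated sinc fractional-delay FIR (illustrative design).

    The filter delays its input by (num_taps - 1) / 2 + delay samples in total.
    """
    n = np.arange(num_taps)
    center = (num_taps - 1) / 2
    h = np.sinc(n - center - delay)        # ideal band-limited delay, truncated to num_taps
    return h * np.hamming(num_taps)        # taper to reduce truncation ripple

# Delay a slowly varying sine by 0.3 samples and compare against the exact delayed sine.
f = 0.05                                   # normalized frequency in cycles/sample
x = np.sin(2 * np.pi * f * np.arange(400))
h = fractional_delay_fir(0.3)
y = np.convolve(x, h)[: len(x)]
valid = np.arange(len(h) - 1, len(x))      # output samples where the filter is fully loaded
ref = np.sin(2 * np.pi * f * (valid - (len(h) - 1) / 2 - 0.3))
max_err = np.max(np.abs(y[valid] - ref))
print(max_err)                             # small residual from truncation and windowing
```

In a TI-ADC setting, a filter like this would be applied per sub-ADC with the estimated timing skew as `delay`; that wiring is not shown.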

Scalable Beamforming Design for Multi-RIS-Aided MU-MIMO Systems with Imperfect CSIT

Jan 02, 2025

Abstract: A reconfigurable intelligent surface (RIS) has emerged as a promising solution for enhancing the capabilities of wireless communications. This paper presents a scalable beamforming design for maximizing the spectral efficiency (SE) of multi-RIS-aided communications through joint optimization of the precoder and RIS phase shifts in multi-user multiple-input multiple-output (MU-MIMO) systems under imperfect channel state information at the transmitter (CSIT). To address key challenges of the joint optimization problem, we first decompose it into two subproblems by deriving a proper lower bound. We then leverage a generalized power iteration (GPI) approach to identify a superior local optimal precoding solution. We further extend this approach to the RIS design using regularization: we set a RIS regularization function to efficiently handle the unit-modulus constraints, and also find the superior local optimal solution for the RIS phase shifts under the GPI-based optimization framework. Subsequently, we propose an alternating optimization method. In particular, by utilizing the block-diagonal structure of the matrices in the GPI method, the proposed algorithm offers scalable multi-RIS beamforming as well as superior SE performance. Simulations validate the proposed method in terms of both sum SE performance and scalability.

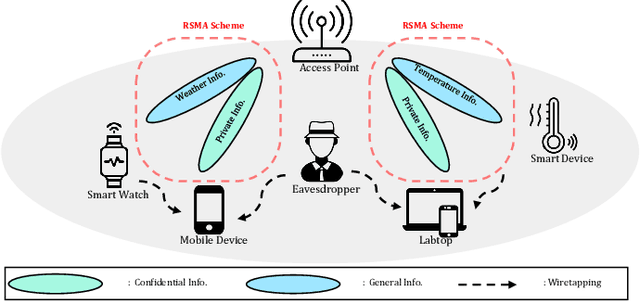

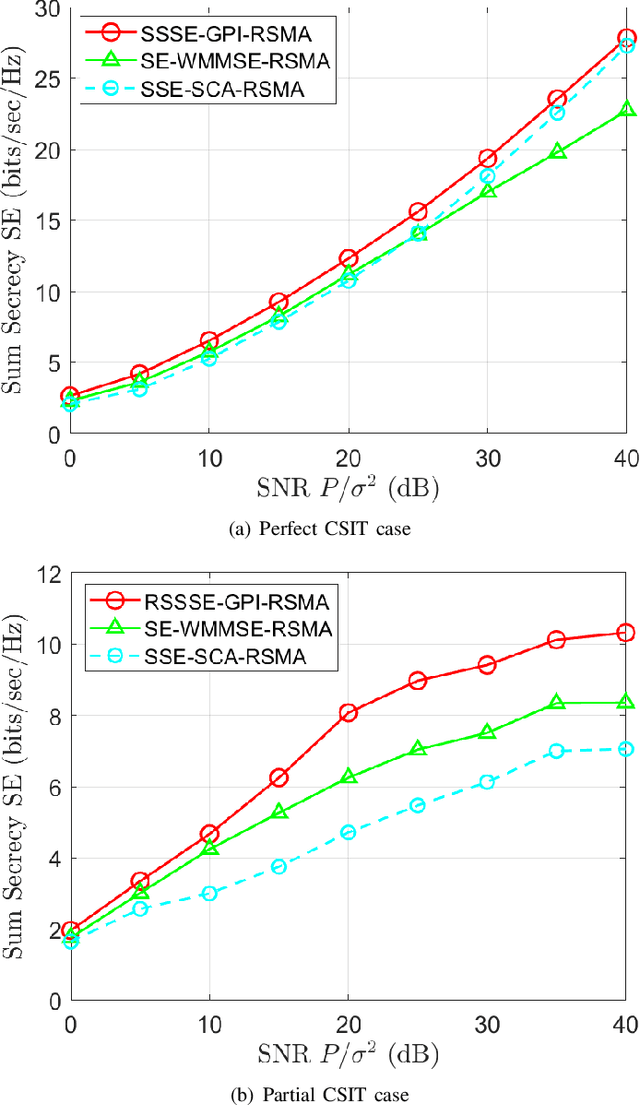

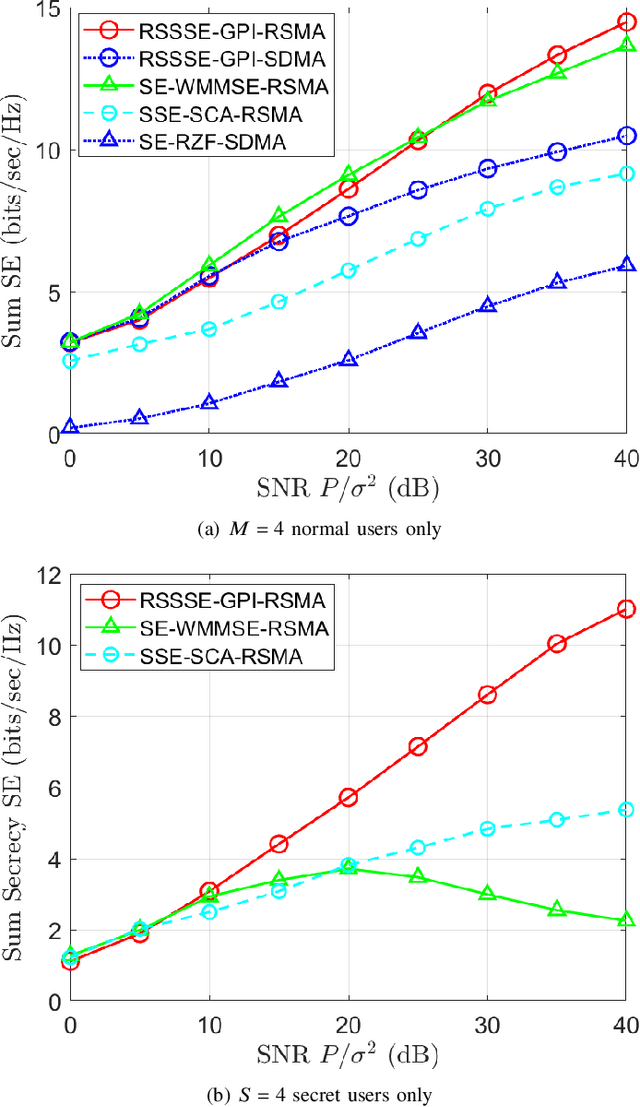

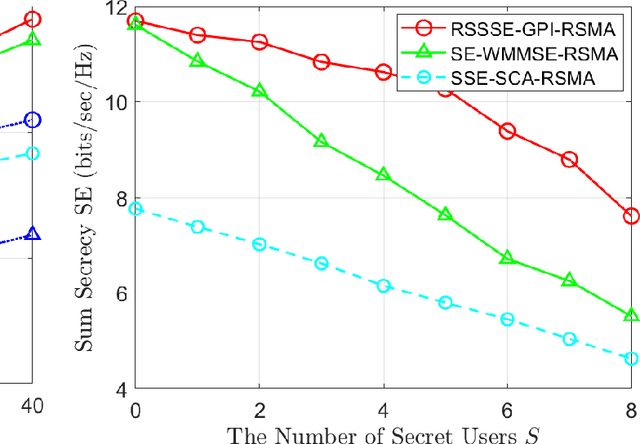

A Selective Secure Precoding Framework for MU-MIMO Rate-Splitting Multiple Access Networks Under Limited CSIT

Dec 26, 2024

Abstract: In this paper, we propose a robust and adaptable secure precoding framework designed to encapsulate an intricate scenario in which legitimate users have different information security requirements: secure private or normal public information. Leveraging rate-splitting multiple access (RSMA), we formulate the sum secrecy spectral efficiency (SE) maximization problem in downlink multi-user multiple-input multiple-output (MIMO) systems with multiple eavesdroppers. To resolve challenges including the heterogeneity of security, non-convexity, and non-smoothness of the problem, we initially approximate the problem using a LogSumExp technique. Subsequently, we derive the first-order optimality condition in the form of a generalized eigenvalue problem. We utilize a power iteration-based method to solve the condition, thereby achieving a superior local optimal solution. The proposed algorithm is further extended to a more realistic scenario involving limited channel state information at the transmitter (CSIT). To effectively utilize the limited channel information, we employ a conditional average rate approach. By deriving useful bounds to handle the conditional average, we establish a lower bound on the objective function under the conditional average. We then apply an optimization method similar to that used for the perfect CSIT case. In simulations, we validate the proposed algorithm in terms of the sum secrecy SE.
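The non-smoothness comes from max (or min) operators, for example over the eavesdroppers' rates. LogSumExp replaces `max(x)` by `alpha * log(sum(exp(x / alpha)))`, which is smooth and satisfies `max(x) <= LSE <= max(x) + alpha * log(K)` for K entries. The sketch below illustrates this bound with hypothetical eavesdropper rates; the smoothing parameter `alpha` and the rate values are assumptions, not values from the paper.

```python
import numpy as np

def smooth_max(x, alpha):
    """LogSumExp approximation of max(x) with smoothing parameter alpha > 0.

    Satisfies max(x) <= smooth_max(x, alpha) <= max(x) + alpha * log(len(x)).
    """
    x = np.asarray(x, dtype=float)
    m = np.max(x)                                   # shift by the max for numerical stability
    return m + alpha * np.log(np.sum(np.exp((x - m) / alpha)))

eve_rates = [1.2, 0.7, 1.15]                        # hypothetical eavesdropper rates (bps/Hz)
for alpha in (1.0, 0.1, 0.01):
    print(alpha, smooth_max(eve_rates, alpha))      # approaches max(eve_rates) = 1.2 as alpha shrinks
```

A smaller `alpha` tightens the approximation but makes the surrogate's gradient change more abruptly, so the choice trades accuracy against how well-behaved the smoothed problem is.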

Low-Earth Orbit Satellite Network Analysis: Coverage under Distance-Dependent Shadowing

Sep 06, 2024

Abstract: This paper offers a thorough analysis of the coverage performance of Low Earth Orbit (LEO) satellite networks using a strongest satellite association approach, with a particular emphasis on shadowing effects modeled through a Poisson point process (PPP)-based network framework. We derive an analytical expression for the coverage probability, which incorporates key system parameters and a distance-dependent shadowing probability function, explicitly accounting for both line-of-sight and non-line-of-sight propagation channels. To enhance the practical relevance of our findings, we provide both lower and upper bounds for the coverage probability and introduce a closed-form solution based on a simplified shadowing model. Our analysis reveals several important network design insights, including the enhancement of coverage probability by distance-dependent shadowing effects and the identification of an optimal satellite altitude that balances beam gain benefits with interference drawbacks. Notably, our PPP-based network model shows strong alignment with other established models, confirming its accuracy and applicability across a variety of satellite network configurations. The insights gained from our analysis are valuable for optimizing LEO satellite deployment strategies and improving network performance in diverse scenarios.

Spectrum Sharing Between Low Earth Orbit Satellite and Terrestrial Networks: A Stochastic Geometry Perspective Analysis

Aug 22, 2024

Abstract: Low Earth orbit (LEO) satellite networks with mega constellations have the potential to provide 5G and beyond services ubiquitously. However, these networks may introduce mutual interference to both satellite and terrestrial networks, particularly when sharing spectrum resources. In this paper, we present a system-level performance analysis to address these interference issues using the tool of stochastic geometry. We model the spatial distributions of satellites, satellite users, terrestrial base stations (BSs), and terrestrial users using independent Poisson point processes on the surfaces of concentric spheres. Under these spatial models, we derive analytical expressions for the ergodic spectral efficiency of uplink (UL) and downlink (DL) satellite networks when they share spectrum with both UL and DL terrestrial networks. These derived ergodic expressions capture comprehensive network parameters, including the densities of satellite and terrestrial networks, the path-loss exponent, and fading. From our analysis, we determine the conditions under which spectrum sharing with UL terrestrial networks is advantageous for both UL and DL satellite networks. Our key finding is that the optimal spectrum sharing configuration among the four possible configurations depends on the density ratio between terrestrial BSs and users, providing a design guideline for spectrum management. Simulation results confirm the accuracy of our derived expressions.
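A basic building block of such spherical PPP analyses is the void probability: the number of points of a homogeneous PPP inside a region is Poisson distributed with mean equal to the density times the region's area. The sketch below assumes a user on the Earth's surface, counts a satellite as visible when it lies above the user's horizon plane, and compares the resulting closed form `exp(-N (1 - R_E / R_S) / 2)` with a Monte Carlo simulation. The mean satellite count, altitude, and Earth radius are illustrative, and fading, beam gain, and shadowing are ignored.

```python
import numpy as np

EARTH_RADIUS_KM = 6371.0

def prob_no_visible_satellite(num_sats_mean, altitude_km, r_earth=EARTH_RADIUS_KM):
    """Void probability of a homogeneous PPP on a sphere of radius r_earth + altitude_km.

    Visible satellites lie in a spherical cap covering the fraction
    (1 - r_earth / r_sat) / 2 of the satellite sphere.
    """
    r_sat = r_earth + altitude_km
    cap_fraction = 0.5 * (1.0 - r_earth / r_sat)
    return np.exp(-num_sats_mean * cap_fraction)

def prob_no_visible_satellite_mc(num_sats_mean, altitude_km, trials=20000, seed=0,
                                 r_earth=EARTH_RADIUS_KM):
    """Monte Carlo check: user at the north pole, satellites uniform on the outer sphere."""
    rng = np.random.default_rng(seed)
    r_sat = r_earth + altitude_km
    empty = 0
    for _ in range(trials):
        n = rng.poisson(num_sats_mean)              # Poisson number of satellites
        z = r_sat * rng.uniform(-1.0, 1.0, n)       # z of a uniform point on a sphere is uniform
        empty += not np.any(z > r_earth)            # none above the user's horizon plane
    return empty / trials

p_analytic = prob_no_visible_satellite(50, 500.0)   # about 0.162
p_mc = prob_no_visible_satellite_mc(50, 500.0)
print(p_analytic, p_mc)
```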

FDD Massive MIMO: How to Optimally Combine UL Pilot and Limited DL CSI Feedback?

May 14, 2024

Abstract: In frequency-division duplexing (FDD) multiple-input multiple-output (MIMO) systems, obtaining accurate downlink channel state information (CSI) for precoding is highly challenging due to the tremendous feedback overhead that grows with the number of antennas. Utilizing uplink pilots for downlink CSI estimation is a promising approach that can eliminate CSI feedback. However, the downlink CSI estimation accuracy diminishes significantly as the number of channel paths increases, resulting in reduced spectral efficiency. In this paper, we demonstrate that achieving downlink spectral efficiency comparable to that with perfect CSI is feasible by combining uplink CSI with limited downlink CSI feedback information. Our proposed downlink CSI feedback strategy transmits quantized phase information of downlink channel paths, deviating from conventional limited feedback methods. We put forth a mean square error (MSE)-optimal downlink channel reconstruction method by jointly exploiting the uplink CSI and the limited downlink CSI. Armed with the MSE-optimal estimator, we derive the MSE as a function of the number of feedback bits for phase quantization. Subsequently, we present an optimal feedback bit allocation method for minimizing the MSE in the reconstructed channel through phase quantization. Utilizing a robust downlink precoding technique, we establish that the proposed downlink channel reconstruction method is sufficient for attaining a sum spectral efficiency comparable to that with perfect CSI.
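To make "quantized phase information" concrete, the sketch below assumes a uniform B-bit phase quantizer and measures how the distortion of the unit phasor `exp(j*phi)` shrinks with B. For uniformly distributed phases, the phase error is uniform over one step `Delta = 2*pi / 2**B`, so the phasor MSE is `2 * (1 - sin(Delta/2) / (Delta/2))`. The codebook and the phase distribution are assumptions; the paper's MSE expression for the reconstructed channel and its bit allocation rule are not reproduced.

```python
import numpy as np

def quantize_phase(phi, bits):
    """Uniform B-bit phase quantizer (illustrative codebook: k * 2*pi / 2**bits)."""
    step = 2 * np.pi / 2**bits
    idx = np.round(phi / step).astype(int) % 2**bits   # integer index that would be fed back
    return idx, idx * step                              # feedback index, reconstructed phase

def wrap(angle):
    """Wrap angles to [-pi, pi)."""
    return (angle + np.pi) % (2 * np.pi) - np.pi

rng = np.random.default_rng(1)
phi = rng.uniform(-np.pi, np.pi, 100_000)               # hypothetical path phases
results = {}
for bits in (1, 2, 3, 4):
    _, phi_hat = quantize_phase(phi, bits)
    max_err = np.max(np.abs(wrap(phi - phi_hat)))       # bounded by half a quantization step
    mse = np.mean(np.abs(np.exp(1j * phi) - np.exp(1j * phi_hat)) ** 2)
    results[bits] = (max_err, mse)
    print(bits, round(max_err, 4), round(mse, 5))
```

Each extra bit halves the step and cuts the phasor MSE by roughly a factor of four at higher resolutions, which is the kind of diminishing return a per-path bit allocation would trade off.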

Full-Duplex MU-MIMO Systems with Coarse Quantization: How Many Bits Do We Need?

Mar 19, 2024

Abstract: This paper investigates full-duplex (FD) multi-user multiple-input multiple-output (MU-MIMO) system design with coarse quantization. We first analyze the impact of self-interference (SI) on quantization in FD single-input single-output systems. The analysis reveals that the minimum required number of analog-to-digital converter (ADC) bits is logarithmically proportional to the ratio of the total received power to the received power of the desired signals. Motivated by this, we design an FD MIMO beamforming method that effectively manages the SI. Dividing a spectral efficiency maximization beamforming problem into two subproblems for alternating optimization, we address the first by optimizing the precoder: we obtain a generalized eigenvalue problem from the first-order optimality condition, where the principal eigenvector is the optimal stationary solution, and adopt a power iteration method to identify this eigenvector. Subsequently, a quantization-aware minimum mean square error combiner is computed for the derived precoder. Through numerical studies, we observe that, compared with FD benchmarks, the proposed beamformer reduces the minimum number of ADC bits required to achieve higher spectral efficiency than half-duplex (HD) systems. The overall analysis shows that, unlike quantized HD systems, quantized FD systems require more than 6 ADC bits to fully realize their potential.
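The precoder step reduces to finding the principal eigenvector of a generalized eigenvalue problem `A w = lambda B w`. The sketch below shows the core power iteration under the simplifying assumption that `A` and `B` are fixed Hermitian positive definite matrices (randomly generated here); in the described method they depend on the precoder itself and would be recomputed at every iteration. The result is checked against a dense eigenvalue solver.

```python
import numpy as np

def generalized_power_iteration(A, B, iters=3000, tol=1e-12, seed=0):
    """Principal eigenpair of A w = lam * B w by power iteration on B^{-1} A.

    Assumes A and B are fixed Hermitian positive definite matrices. In GPI-style
    precoders they depend on the current w and would be rebuilt every iteration.
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    w = rng.standard_normal(n) + 1j * rng.standard_normal(n)
    w /= np.linalg.norm(w)
    for _ in range(iters):
        w_next = np.linalg.solve(B, A @ w)          # apply B^{-1} A without forming the inverse
        w_next /= np.linalg.norm(w_next)
        converged = np.linalg.norm(w_next - w) < tol
        w = w_next
        if converged:
            break
    # The generalized Rayleigh quotient gives the associated eigenvalue.
    lam = np.real(w.conj() @ A @ w) / np.real(w.conj() @ B @ w)
    return w, lam

rng = np.random.default_rng(3)
X = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
Y = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
A = X @ X.conj().T + np.eye(4)                      # hypothetical "signal" matrix
B = Y @ Y.conj().T + np.eye(4)                      # hypothetical "interference plus noise" matrix
w, lam = generalized_power_iteration(A, B)
lam_ref = np.max(np.linalg.eigvals(np.linalg.solve(B, A)).real)
print(lam, lam_ref)                                  # the two values should agree
```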

Nonlinear Self-Interference Cancellation With Learnable Orthonormal Polynomials for Full-Duplex Wireless Systems

Mar 17, 2024

Abstract: Nonlinear self-interference cancellation (SIC) is essential for full-duplex communication systems, which can offer twice the spectral efficiency of traditional half-duplex systems. The challenge of nonlinear SIC is similar to the classic problem of system identification in adaptive filter theory, whose crux lies in identifying the optimal nonlinear basis functions for a nonlinear system. This becomes especially difficult when the system input has a non-stationary distribution. In this paper, we propose a novel algorithm for nonlinear digital SIC that adaptively constructs orthonormal polynomial basis functions according to the non-stationary moments of the transmit signal. By combining these basis functions with the least mean squares (LMS) algorithm, we introduce a new SIC technique called the adaptive orthonormal polynomial LMS (AOP-LMS) algorithm. To reduce computational complexity for practical systems, we augment our approach with a precomputed look-up table, which maps a given modulation and coding scheme to its corresponding basis functions. Numerical simulations indicate that our proposed method surpasses existing state-of-the-art SIC algorithms in terms of convergence speed and mean squared error when the transmit signal is non-stationary, as with adaptive modulation and coding. Experimental evaluation with a wireless testbed confirms that our proposed approach outperforms existing digital SIC algorithms.
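The key idea is to run LMS on basis functions that are orthonormal with respect to the input statistics, which keeps the LMS input correlation matrix close to identity and therefore well conditioned. The sketch below is a simplified, non-adaptive stand-in for AOP-LMS, not the algorithm itself: it orthonormalizes the monomials 1, x, x^2, x^3 once through a QR decomposition over real-valued, stationary samples (i.e., using their empirical moments), then runs standard LMS. The input distribution, the memoryless nonlinearity, and the step size are hypothetical; the paper instead adapts the basis to non-stationary moments and uses a look-up table.

```python
import numpy as np

def orthonormal_poly_transform(x, order):
    """Return T such that phi(x) = vander(x) @ T is orthonormal under the empirical
    inner product <f, g> = mean(f(x) * g(x)) over the given samples."""
    V = np.vander(x, order + 1, increasing=True)       # columns [1, x, x^2, ..., x^order]
    _, R = np.linalg.qr(V / np.sqrt(len(x)))           # V / sqrt(N) = Q R with Q^T Q = I
    return np.linalg.inv(R)

def features(x, T):
    """Evaluate the orthonormal polynomials at the samples x."""
    return np.vander(np.atleast_1d(x), T.shape[0], increasing=True) @ T

def lms(phi, d, mu=0.05):
    """Standard LMS: w <- w + mu * e[n] * phi[n]; returns final weights and error history."""
    w = np.zeros(phi.shape[1])
    err = np.empty(len(d))
    for n in range(len(d)):
        err[n] = d[n] - phi[n] @ w                     # a priori estimation error
        w += mu * err[n] * phi[n]
    return w, err

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 5000)                      # hypothetical real-valued transmit samples
d = 0.5 * x + 0.1 * x**3                              # hypothetical memoryless nonlinear SI
T = orthonormal_poly_transform(x, order=3)
phi = features(x, T)
w, err = lms(phi, d)
print(np.mean(err[-500:] ** 2))                        # residual MSE after convergence
```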
