Hyowon Lee

Nonlinear Self-Interference Cancellation With Learnable Orthonormal Polynomials for Full-Duplex Wireless Systems

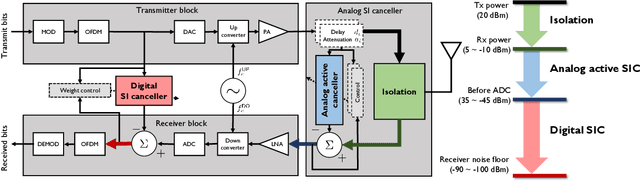

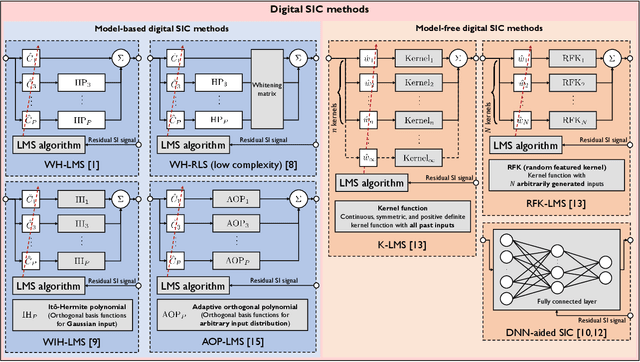

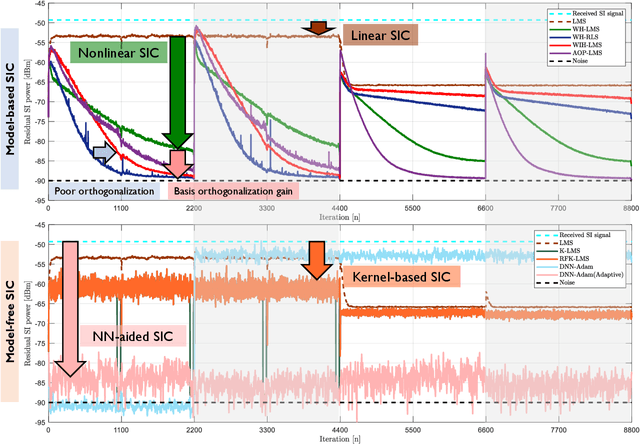

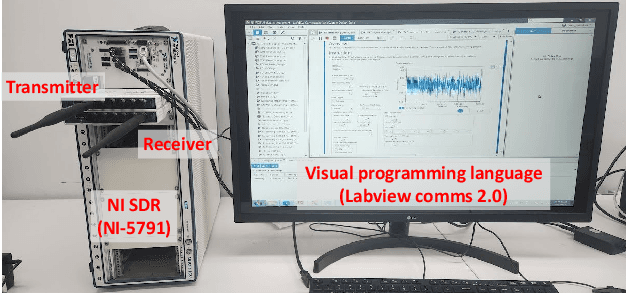

Mar 17, 2024

Abstract:Nonlinear self-interference cancellation (SIC) is essential for full-duplex communication systems, which can offer twice the spectral efficiency of traditional half-duplex systems. The challenge of nonlinear SIC is similar to the classic problem of system identification in adaptive filter theory, whose crux lies in identifying the optimal nonlinear basis functions for a nonlinear system. This becomes especially difficult when the system input has a non-stationary distribution. In this paper, we propose a novel algorithm for nonlinear digital SIC that adaptively constructs orthonormal polynomial basis functions according to the non-stationary moments of the transmit signal. By combining these basis functions with the least mean squares (LMS) algorithm, we introduce a new SIC technique, called the adaptive orthonormal polynomial LMS (AOP-LMS) algorithm. To reduce computational complexity for practical systems, we augment our approach with a precomputed look-up table, which maps a given modulation and coding scheme to its corresponding basis functions. Numerical simulation indicates that our proposed method surpasses existing state-of-the-art SIC algorithms in terms of convergence speed and mean squared error when the transmit signal is non-stationary, such as with adaptive modulation and coding. Experimental evaluation with a wireless testbed confirms that our proposed approach outperforms existing digital SIC algorithms.
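The idea of pairing moment-derived orthonormal polynomials with LMS can be illustrated with a minimal sketch. This is not the authors' AOP-LMS implementation: it assumes a real-valued, memoryless nonlinearity, builds the basis by Gram-Schmidt on empirical moments, and uses hypothetical names (`empirical_moments`, `orthonormal_basis`, `lms`) and a made-up cubic "self-interference" model purely for illustration.

```python
import random

def empirical_moments(samples, max_power):
    """Estimate E[x^k] for k = 0..max_power from the transmit samples."""
    n = len(samples)
    return [sum(x ** k for x in samples) / n for k in range(max_power + 1)]

def inner(p, q, moments):
    """Inner product E[p(x) q(x)] of two polynomials given as coefficient lists."""
    return sum(pi * qj * moments[i + j]
               for i, pi in enumerate(p) for j, qj in enumerate(q))

def orthonormal_basis(moments, order):
    """Gram-Schmidt on the monomials 1, x, ..., x^order under the moment inner product."""
    basis = []
    for k in range(order + 1):
        p = [0.0] * (order + 1)
        p[k] = 1.0
        for q in basis:
            c = inner(p, q, moments)
            p = [pi - c * qi for pi, qi in zip(p, q)]
        norm = inner(p, p, moments) ** 0.5
        basis.append([pi / norm for pi in p])
    return basis

def evaluate(basis, x):
    """Evaluate every basis polynomial at the sample x."""
    return [sum(c * x ** i for i, c in enumerate(p)) for p in basis]

def lms(samples, desired, basis, mu):
    """Standard LMS update on the orthonormal polynomial features."""
    w = [0.0] * len(basis)
    errors = []
    for x, d in zip(samples, desired):
        phi = evaluate(basis, x)
        e = d - sum(wi * fi for wi, fi in zip(w, phi))
        w = [wi + mu * e * fi for wi, fi in zip(w, phi)]
        errors.append(e)
    return w, errors

# Illustrative data: a made-up cubic nonlinearity, not a measured SI channel.
random.seed(0)
ORDER = 3
tx = [random.uniform(-1.0, 1.0) for _ in range(5000)]
rx = [0.8 * x + 0.1 * x ** 3 for x in tx]

moments = empirical_moments(tx, 2 * ORDER)   # moments up to 2*ORDER are needed
basis = orthonormal_basis(moments, ORDER)
weights, errors = lms(tx, rx, basis, mu=0.05)
```

Because the features are orthonormal under the input distribution, their correlation matrix is close to the identity, which is the usual reason such a basis helps LMS converge quickly. If the transmit distribution changes, the moments and hence the basis would need to be recomputed; the look-up table mentioned in the abstract is one way to avoid doing that online.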

Machine Translation to Control Formality Features in the Target Language

Nov 22, 2023

Abstract:Formality plays a significant role in language communication, especially in low-resource languages such as Hindi, Japanese and Korean. These languages utilise formal and informal expressions to convey messages based on social contexts and relationships. When a translation technique is used to translate from a source language that does not mark formality (e.g. English) to a target language that does, the formality information is missing, which makes it challenging to produce an accurate translation. This research explores how this issue can be resolved when machine learning methods are used to translate from English to languages with formality, using Hindi as the example data. This was done by training a bilingual model in a formality-controlled setting and comparing its performance with a pre-trained multilingual model in a similar setting. Because little training data with ground truth is available, automated annotation techniques were employed to increase the data size. The primary modeling approach involved leveraging transformer models, which have demonstrated effectiveness in various natural language processing tasks. We evaluate the official formality accuracy (ACC) by comparing the predicted masked tokens with the ground truth. This metric provides a quantitative measure of how well the translations align with the desired outputs. Our study showcases a versatile translation strategy that considers the nuances of formality in the target language, catering to diverse language communication needs and scenarios.

On the Learning of Digital Self-Interference Cancellation in Full-Duplex Radios

Aug 11, 2023

Abstract:Full-duplex communication systems have the potential to achieve significantly higher data rates and lower latency compared to their half-duplex counterparts. This advantage stems from their ability to transmit and receive data simultaneously. However, to enable successful full-duplex operation, the primary challenge lies in accurately eliminating strong self-interference (SI). Overcoming this challenge involves addressing various issues, including the nonlinearity of power amplifiers, the time-varying nature of the SI channel, and the non-stationary transmit data distribution. In this article, we present a review of recent advancements in digital self-interference cancellation (SIC) algorithms. Our focus is on comparing the effectiveness of adaptable model-based SIC methods with their model-free counterparts that leverage data-driven machine learning techniques. Through our comparison study under practical scenarios, we demonstrate that the model-based SIC approach offers a more robust solution to the time-varying SI channel and the non-stationary transmission, achieving optimal SIC performance in terms of the convergence rate while maintaining low computational complexity. To validate our findings, we conduct experiments using a software-defined radio testbed that conforms to the IEEE 802.11a standards. The experimental results demonstrate the robustness of the model-based SIC methods, providing practical evidence of their effectiveness.

Automatically detecting activities of daily living from in-home sensors as indicators of routine behaviour in an older population

Jul 10, 2023

Abstract:Objective: The NEX project has developed an integrated Internet of Things (IoT) system coupled with data analytics to offer unobtrusive health and wellness monitoring supporting older adults living independently at home. Monitoring currently involves visualising a set of automatically detected activities of daily living (ADLs) for each participant. The detection of ADLs is achieved in a way that allows additional participants to be incorporated and their ADLs detected without re-training the system. Methods: Following an extensive User Needs and Requirements study involving 426 participants, a pilot trial and a friendly trial of the deployment, an Action Research Cycle (ARC) trial was completed. This involved 23 participants over a 10-week period, each with c. 20 IoT sensors in their homes. During the ARC trial, participants each took part in two data-informed briefings which presented visualisations of their own in-home activities. The briefings also gathered training data on the accuracy of detected activities. Association rule mining was then used on the combination of data from sensors and participant feedback to improve the automatic detection of ADLs. Results: Association rule mining was used to detect a range of ADLs for each participant independently of others and was then used to detect ADLs across participants using a single set of rules for each ADL. This allows additional participants to be added without the necessity of them providing training data. Conclusions: Additional participants can be added to the NEX system without the necessity to re-train the system for automatic detection of the set of their activities of daily living.
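As a rough illustration of the association rule mining mentioned above, the sketch below scores one candidate rule by its support and confidence. It is not the NEX pipeline: the sensor names, the `ADL:` labels, the time-window representation, and the function `rule_metrics` are all hypothetical.

```python
def rule_metrics(windows, antecedent, consequent):
    """Return (support, confidence) for the rule antecedent -> consequent.

    windows: list of sets, each holding the sensor events and any
             confirmed ADL label observed in one time window.
    """
    antecedent = set(antecedent)
    n_antecedent = sum(1 for w in windows if antecedent <= w)
    n_both = sum(1 for w in windows if antecedent <= w and consequent in w)
    support = n_both / len(windows)
    confidence = n_both / n_antecedent if n_antecedent else 0.0
    return support, confidence

# Hypothetical time windows combining sensor events with participant feedback.
windows = [
    {"kettle_on", "kitchen_motion", "ADL:make_drink"},
    {"kettle_on", "kitchen_motion", "ADL:make_drink"},
    {"kitchen_motion"},
    {"kettle_on", "kitchen_motion"},
    {"bed_pressure", "ADL:sleep"},
]

support, confidence = rule_metrics(
    windows, {"kettle_on", "kitchen_motion"}, "ADL:make_drink"
)
```

A rule with high enough support and confidence could then be applied to sensor data from a new home without collecting labels there, which is the property the abstract highlights.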

Analysis of Individual Conversational Volatility in Tandem Telecollaboration for Second Language Learning

Jun 28, 2022

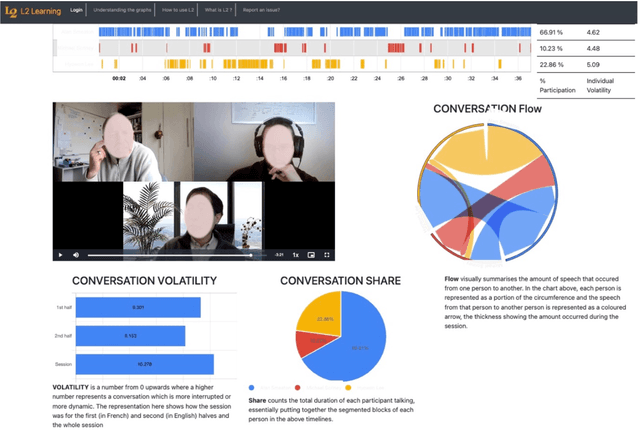

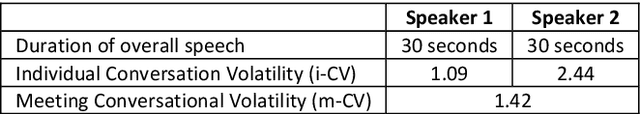

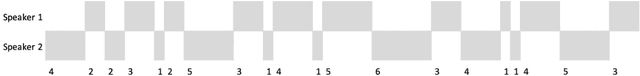

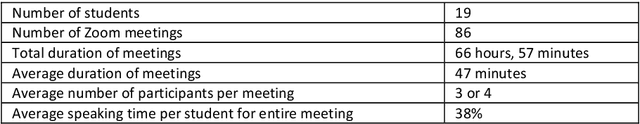

Abstract:Second language learning can be enabled by tandem collaboration, where students are grouped into video conference calls while learning the native language of the other student(s) on the calls. This places students in an online environment where the more outgoing can actively contribute and engage in dialogue, while those who are more shy and unsure of their second language skills can sit back and coast through the calls. We have built and deployed the L2L system, which records timings of conversational utterances from all participants in a call. We generate visualisations including participation rates and timelines for each student in each call and present these on a dashboard. We have recently developed a measure called personal conversational volatility, which captures how dynamic each student's contribution to the dialogue has been in each call. We present an analysis of conversational volatility measures for a sample of 19 individual English-speaking students from our University who are learning French, in each of 86 tandem telecollaboration calls over one teaching semester. Our analysis shows a need to examine the nature of the interactions and to determine whether the discussion topics assigned were too difficult for some students, which may have influenced their engagement.

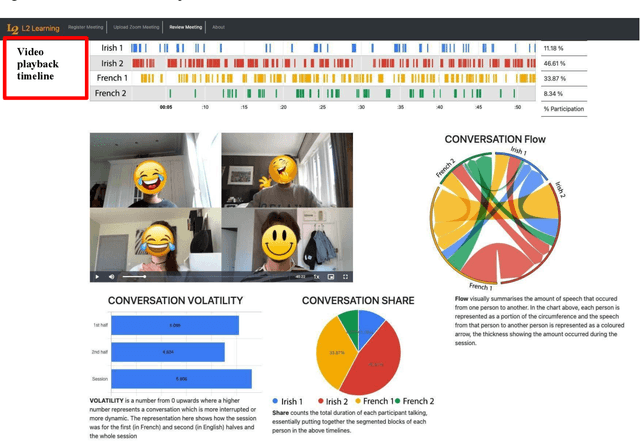

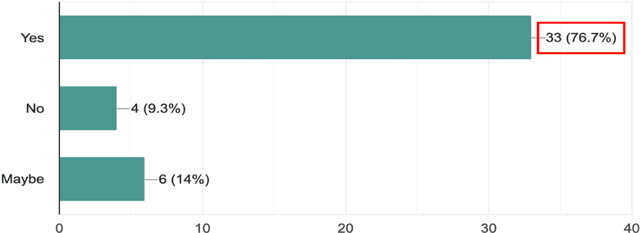

Facilitating reflection in teletandem through automatically generated conversation metrics and playback video

Nov 18, 2021

Abstract:This pilot study focuses on a tool called L2L that allows second language (L2) learners to visualise and analyse their Zoom interactions with native speakers. L2L uses the Zoom transcript to automatically generate conversation metrics and its playback feature with timestamps allows students to replay any chosen portion of the conversation for post-session reflection and self-review. This exploratory study investigates a seven-week teletandem project, where undergraduate students from an Irish University learning French (B2) interacted with their peers from a French University learning English (B2+) via Zoom. The data collected from a survey (N=43) and semi-structured interviews (N=35) show that the quantitative conversation metrics and qualitative review of the synchronous content helped raise students' confidence levels while engaging with native speakers. Furthermore, it allowed them to set tangible goals to improve their participation, and be more aware of what, why and how they are learning.

* 5 pages

Attention Based Video Summaries of Live Online Zoom Classes

Jan 15, 2021

Abstract:This paper describes a system developed to help University students get more from their online lectures, tutorials, laboratory and other live sessions. We do this by logging their attention levels on their laptops during live Zoom sessions and providing them with personalised video summaries of those live sessions. Using facial attention analysis software, we create personalised video summaries composed of just the parts where a student's attention was below some threshold. We can also factor other criteria into video summary generation, such as parts where the student was not paying attention while others in the class were, and parts of the video that other students have replayed extensively which a given student has not. Attention and usage based video summaries of live classes are a form of personalised content: they are educational video segments recommended to highlight important parts of live sessions, useful in both topic understanding and exam preparation. The system also allows a Professor to review the aggregated attention levels of those in a class who attended a live session and logged their attention levels. This allows her to see which parts of the live activity students were paying most, and least, attention to. The Help-Me-Watch system is deployed and in use at our University in a way that protects students' personal data, operating in a GDPR-compliant way.
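The threshold-based selection described above can be sketched as follows. This is only an illustration, not the Help-Me-Watch code: it assumes one attention score per second in the range 0 to 1, and the function name `low_attention_segments` and the `min_length` parameter are hypothetical.

```python
def low_attention_segments(scores, threshold, min_length=1):
    """Return (start, end) index pairs, end exclusive, for each contiguous
    run of scores below the threshold that lasts at least min_length samples."""
    segments = []
    start = None
    for i, score in enumerate(scores):
        if score < threshold:
            if start is None:
                start = i            # a low-attention run begins here
        elif start is not None:
            if i - start >= min_length:
                segments.append((start, i))
            start = None             # the run has ended
    # Close a run that extends to the end of the session.
    if start is not None and len(scores) - start >= min_length:
        segments.append((start, len(scores)))
    return segments

# Hypothetical per-second attention scores for one student.
scores = [0.9, 0.2, 0.1, 0.8, 0.3, 0.9, 0.1, 0.2]
segments = low_attention_segments(scores, threshold=0.5, min_length=2)
```

The resulting index pairs could be mapped to video timestamps and concatenated into a summary. The other criteria in the abstract, such as classmates' attention or replay counts, would need additional inputs not modelled here.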
