Hybrid Online-Offline Learning for Task Offloading in Mobile Edge Computing Systems


Feb 27, 2024

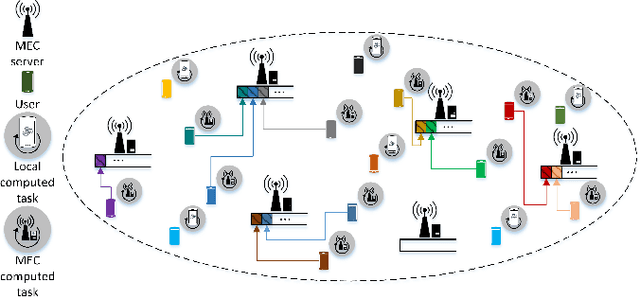

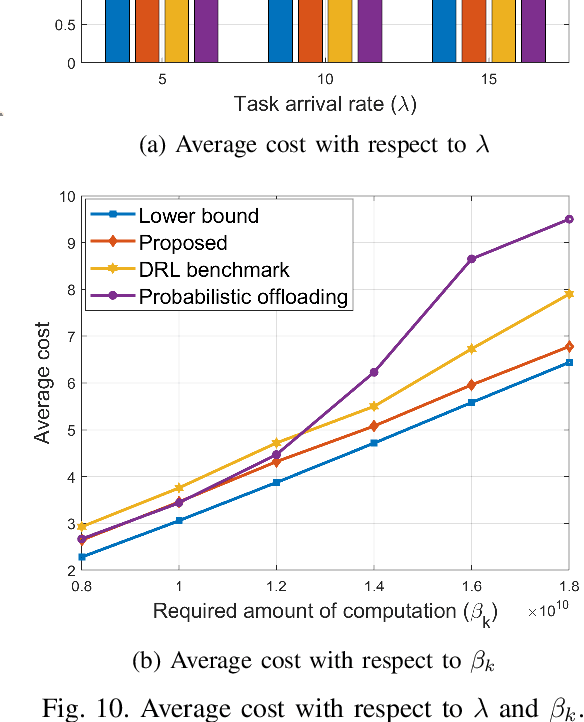

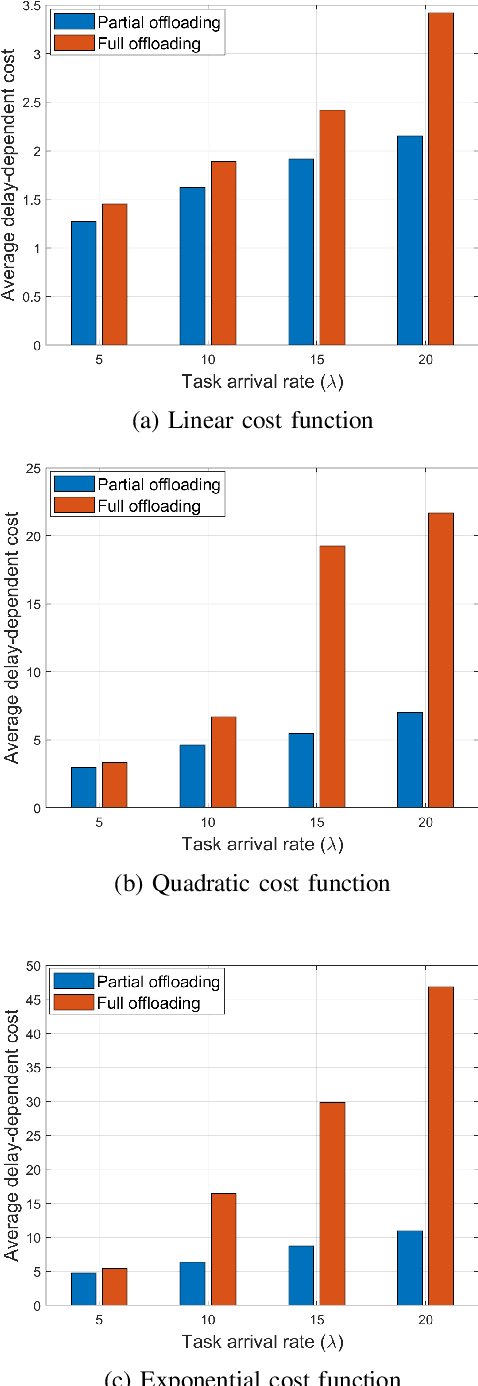

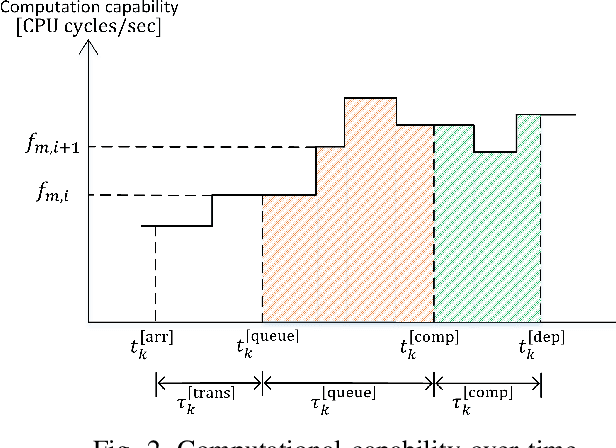

We consider a multi-user, multi-server mobile edge computing (MEC) system in which users arrive in the network randomly over time and generate computation tasks. Each task is either computed locally on the user's own device or offloaded to one of the MEC servers. For this dynamic network environment, we propose a novel task offloading policy based on hybrid online-offline learning that efficiently reduces the overall computation delay and energy consumption using only the information available at the MEC servers nearest to each user. We provide a practical signaling and learning framework that trains deep neural networks for both online and offline learning and adjusts the offloading policy according to the queuing status of each MEC server and the network dynamics. Numerical results demonstrate that the proposed scheme significantly reduces the average computation delay across a broad class of network environments compared with conventional offloading methods. We further show that the proposed hybrid online-offline learning framework extends to a general cost function that reflects both delay- and energy-dependent metrics.
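To make the decision problem concrete, the following is a minimal sketch of a weighted delay-plus-energy cost and a greedy choice between local execution and nearby servers. It is not the learned policy described in the abstract: the `Server` fields, the linear delay and energy models, the weights `w_delay` and `w_energy`, and every function name are hypothetical and chosen only for illustration. A learned policy would replace the greedy `min` step with a neural network that maps queue status and network state to an offloading decision.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical snapshot of one nearby MEC server, as seen by a user."""
    cpu_hz: float         # server compute rate (cycles per second)
    queued_cycles: float  # backlog already waiting in the server queue
    uplink_bps: float     # user-to-server transmission rate (bits per second)


def local_cost(task_cycles, local_cpu_hz, energy_per_cycle, w_delay, w_energy):
    """Weighted cost of running the task on the user's own device."""
    delay = task_cycles / local_cpu_hz
    energy = energy_per_cycle * task_cycles
    return w_delay * delay + w_energy * energy


def offload_cost(task_bits, task_cycles, server, tx_power_w, w_delay, w_energy):
    """Weighted cost of sending the task to a server and computing it there."""
    tx_time = task_bits / server.uplink_bps
    queue_wait = server.queued_cycles / server.cpu_hz
    compute_time = task_cycles / server.cpu_hz
    delay = tx_time + queue_wait + compute_time
    energy = tx_power_w * tx_time  # only the user's transmit energy is counted
    return w_delay * delay + w_energy * energy


def choose_target(task_bits, task_cycles, servers, local_cpu_hz,
                  energy_per_cycle, tx_power_w, w_delay=1.0, w_energy=0.0):
    """Greedy baseline: pick the option with the lowest weighted cost."""
    costs = {
        "local": local_cost(task_cycles, local_cpu_hz, energy_per_cycle,
                            w_delay, w_energy)
    }
    for i, server in enumerate(servers):
        costs[f"server_{i}"] = offload_cost(task_bits, task_cycles, server,
                                            tx_power_w, w_delay, w_energy)
    best = min(costs, key=costs.get)
    return best, costs


if __name__ == "__main__":
    servers = [
        Server(cpu_hz=1e10, queued_cycles=5e10, uplink_bps=1e7),  # congested
        Server(cpu_hz=1e10, queued_cycles=0.0, uplink_bps=1e7),   # idle
    ]
    best, costs = choose_target(
        task_bits=1e6, task_cycles=1e9, servers=servers,
        local_cpu_hz=1e9, energy_per_cycle=1e-9, tx_power_w=0.5,
    )
    print(best, costs)
```

With `w_energy=0.0` the cost reduces to pure delay, so the congested server is penalized by its queue backlog. Raising `w_energy` shifts the trade-off toward whichever option consumes less energy on the user's device.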
