Jongjin Jeong

Dynamic Multichannel Access via Multi-agent Reinforcement Learning: Throughput and Fairness Guarantees

May 10, 2021

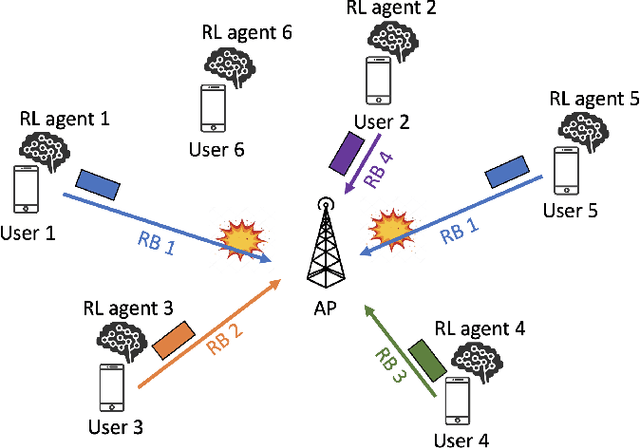

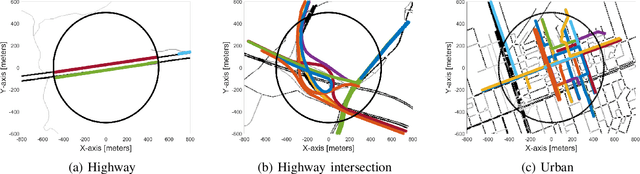

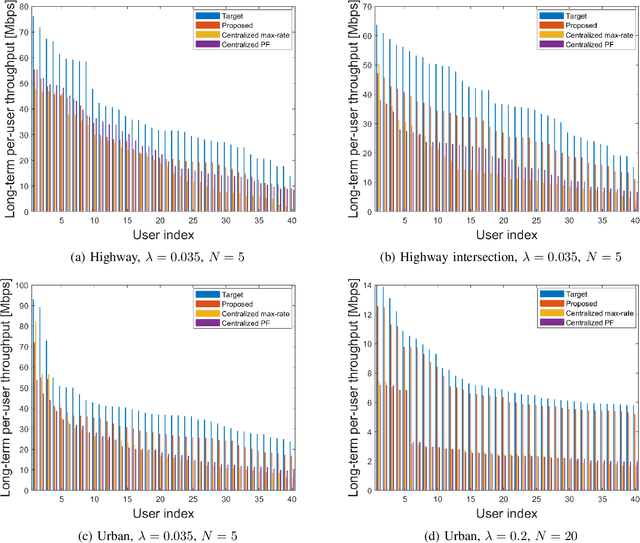

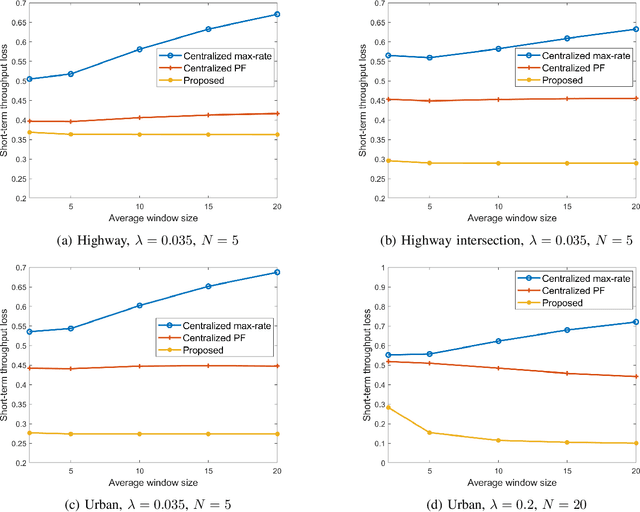

Abstract: We consider a multichannel random access system in which each user accesses a single channel at each time slot to communicate with an access point (AP). Users arrive at the system at random, remain active for a certain number of time slots, and then leave the system. In such a dynamic network environment, we propose a distributed multichannel access protocol based on multi-agent reinforcement learning (RL) to improve both throughput and fairness among active users. Unlike previous approaches that adjust channel access probabilities at each time slot, the proposed RL algorithm deterministically selects a set of channel access policies for several consecutive time slots. To reduce the complexity of the proposed RL algorithm, we adopt a branching dueling Q-network architecture and propose an efficient training methodology for producing proper Q-values over time-varying user sets. We perform extensive simulations on realistic traffic environments and demonstrate that the proposed online learning improves both throughput and fairness compared to conventional RL approaches and centralized scheduling policies.
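To make the two performance measures concrete, the following is a minimal sketch of a multichannel random access slot and of how throughput and fairness might be scored. It is not the proposed protocol: the abstract does not state the collision model or the fairness metric, so the sketch assumes that a transmission succeeds only when a user is alone on its channel, and it uses Jain's fairness index as a stand-in metric. The uniform random channel choice is a naive baseline, and all function names and parameters (`simulate_slot`, `jain_index`, `run_random_access`) are hypothetical.

```python
import random
from collections import Counter


def simulate_slot(choices):
    """Return the set of users whose transmission succeeds in one slot.

    `choices` maps user id -> chosen channel index.
    Assumption: a user succeeds only if no other user picked its channel.
    """
    load = Counter(choices.values())
    return {user for user, channel in choices.items() if load[channel] == 1}


def jain_index(values):
    """Jain's fairness index: 1.0 when all values are equal, 1/n when one user gets everything."""
    total = sum(values)
    if total == 0:
        return 0.0
    return total ** 2 / (len(values) * sum(v * v for v in values))


def run_random_access(num_users=4, num_channels=2, num_slots=1000, seed=0):
    """Baseline in which every user picks a channel uniformly at random in each slot.

    Returns (throughput per channel per slot, Jain index of per-user successes).
    """
    rng = random.Random(seed)
    successes = [0] * num_users
    for _ in range(num_slots):
        choices = {u: rng.randrange(num_channels) for u in range(num_users)}
        for user in simulate_slot(choices):
            successes[user] += 1
    throughput = sum(successes) / (num_slots * num_channels)
    return throughput, jain_index(successes)


if __name__ == "__main__":
    throughput, fairness = run_random_access()
    print(f"throughput={throughput:.3f}, fairness={fairness:.3f}")
```

A learned policy in the spirit of the abstract would replace the uniform random choice inside `run_random_access`, and the user set would vary over time rather than stay fixed as it does in this sketch.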
