Sami Sarsa

Evaluating Language Models for Generating and Judging Programming Feedback

Jul 05, 2024

Abstract:The emergence of large language models (LLMs) has transformed research and practice in a wide range of domains. Within the computing education research (CER) domain, LLMs have received plenty of attention especially in the context of learning programming. Much of the work on LLMs in CER has however focused on applying and evaluating proprietary models. In this article, we evaluate the efficiency of open-source LLMs in generating high-quality feedback for programming assignments, and in judging the quality of the programming feedback, contrasting the results against proprietary models. Our evaluations on a dataset of students' submissions to Python introductory programming exercises suggest that the state-of-the-art open-source LLMs (Meta's Llama3) are almost on-par with proprietary models (GPT-4o) in both the generation and assessment of programming feedback. We further demonstrate the efficiency of smaller LLMs in the tasks, and highlight that there are a wide range of LLMs that are accessible even for free for educators and practitioners.

Open Source Language Models Can Provide Feedback: Evaluating LLMs' Ability to Help Students Using GPT-4-As-A-Judge

May 08, 2024Abstract:Large language models (LLMs) have shown great potential for the automatic generation of feedback in a wide range of computing contexts. However, concerns have been voiced around the privacy and ethical implications of sending student work to proprietary models. This has sparked considerable interest in the use of open source LLMs in education, but the quality of the feedback that such open models can produce remains understudied. This is a concern as providing flawed or misleading generated feedback could be detrimental to student learning. Inspired by recent work that has utilised very powerful LLMs, such as GPT-4, to evaluate the outputs produced by less powerful models, we conduct an automated analysis of the quality of the feedback produced by several open source models using a dataset from an introductory programming course. First, we investigate the viability of employing GPT-4 as an automated evaluator by comparing its evaluations with those of a human expert. We observe that GPT-4 demonstrates a bias toward positively rating feedback while exhibiting moderate agreement with human raters, showcasing its potential as a feedback evaluator. Second, we explore the quality of feedback generated by several leading open-source LLMs by using GPT-4 to evaluate the feedback. We find that some models offer competitive performance with popular proprietary LLMs, such as ChatGPT, indicating opportunities for their responsible use in educational settings.

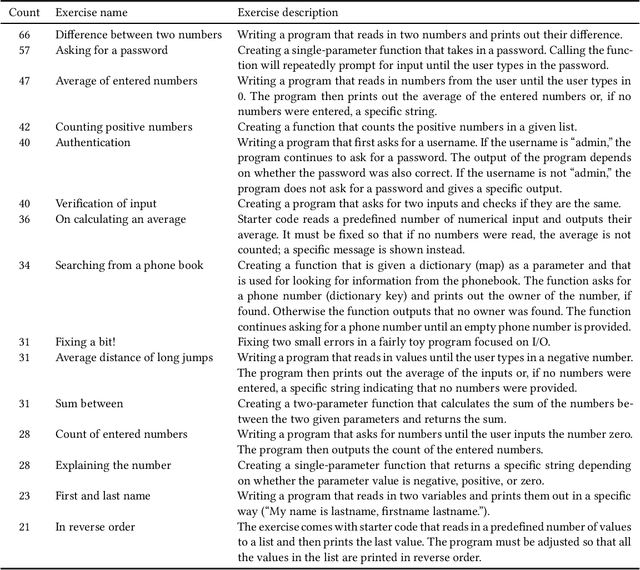

Benchmarking Educational Program Repair

May 08, 2024Abstract:The emergence of large language models (LLMs) has sparked enormous interest due to their potential application across a range of educational tasks. For example, recent work in programming education has used LLMs to generate learning resources, improve error messages, and provide feedback on code. However, one factor that limits progress within the field is that much of the research uses bespoke datasets and different evaluation metrics, making direct comparisons between results unreliable. Thus, there is a pressing need for standardization and benchmarks that facilitate the equitable comparison of competing approaches. One task where LLMs show great promise is program repair, which can be used to provide debugging support and next-step hints to students. In this article, we propose a novel educational program repair benchmark. We curate two high-quality publicly available programming datasets, present a unified evaluation procedure introducing a novel evaluation metric rouge@k for approximating the quality of repairs, and evaluate a set of five recent models to establish baseline performance.

Decoding Logic Errors: A Comparative Study on Bug Detection by Students and Large Language Models

Nov 27, 2023Abstract:Identifying and resolving logic errors can be one of the most frustrating challenges for novices programmers. Unlike syntax errors, for which a compiler or interpreter can issue a message, logic errors can be subtle. In certain conditions, buggy code may even exhibit correct behavior -- in other cases, the issue might be about how a problem statement has been interpreted. Such errors can be hard to spot when reading the code, and they can also at times be missed by automated tests. There is great educational potential in automatically detecting logic errors, especially when paired with suitable feedback for novices. Large language models (LLMs) have recently demonstrated surprising performance for a range of computing tasks, including generating and explaining code. These capabilities are closely linked to code syntax, which aligns with the next token prediction behavior of LLMs. On the other hand, logic errors relate to the runtime performance of code and thus may not be as well suited to analysis by LLMs. To explore this, we investigate the performance of two popular LLMs, GPT-3 and GPT-4, for detecting and providing a novice-friendly explanation of logic errors. We compare LLM performance with a large cohort of introductory computing students $(n=964)$ solving the same error detection task. Through a mixed-methods analysis of student and model responses, we observe significant improvement in logic error identification between the previous and current generation of LLMs, and find that both LLM generations significantly outperform students. We outline how such models could be integrated into computing education tools, and discuss their potential for supporting students when learning programming.

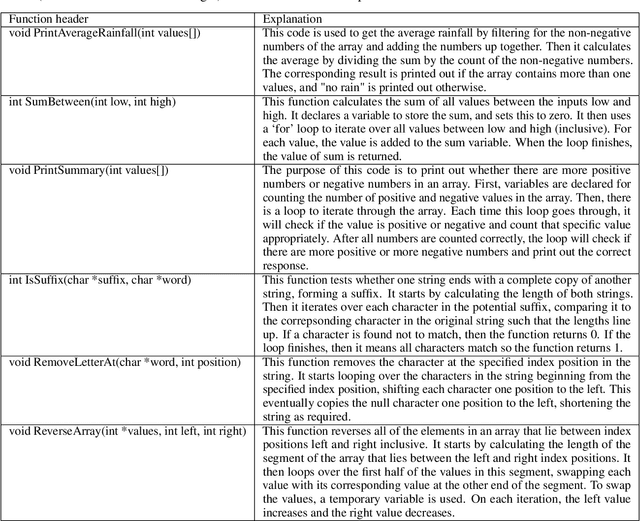

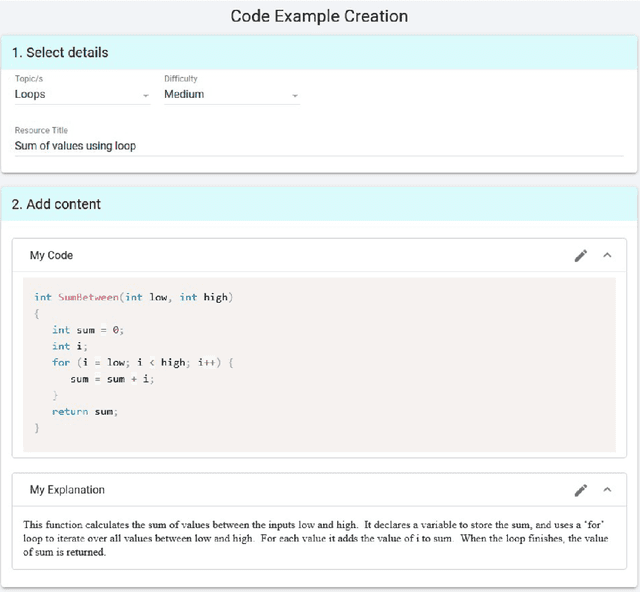

Can We Trust AI-Generated Educational Content? Comparative Analysis of Human and AI-Generated Learning Resources

Jul 03, 2023

Abstract:As an increasing number of students move to online learning platforms that deliver personalized learning experiences, there is a great need for the production of high-quality educational content. Large language models (LLMs) appear to offer a promising solution to the rapid creation of learning materials at scale, reducing the burden on instructors. In this study, we investigated the potential for LLMs to produce learning resources in an introductory programming context, by comparing the quality of the resources generated by an LLM with those created by students as part of a learnersourcing activity. Using a blind evaluation, students rated the correctness and helpfulness of resources generated by AI and their peers, after both were initially provided with identical exemplars. Our results show that the quality of AI-generated resources, as perceived by students, is equivalent to the quality of resources generated by their peers. This suggests that AI-generated resources may serve as viable supplementary material in certain contexts. Resources generated by LLMs tend to closely mirror the given exemplars, whereas student-generated resources exhibit greater variety in terms of content length and specific syntax features used. The study highlights the need for further research exploring different types of learning resources and a broader range of subject areas, and understanding the long-term impact of AI-generated resources on learning outcomes.

Exploring the Responses of Large Language Models to Beginner Programmers' Help Requests

Jun 09, 2023

Abstract:Background and Context: Over the past year, large language models (LLMs) have taken the world by storm. In computing education, like in other walks of life, many opportunities and threats have emerged as a consequence. Objectives: In this article, we explore such opportunities and threats in a specific area: responding to student programmers' help requests. More specifically, we assess how good LLMs are at identifying issues in problematic code that students request help on. Method: We collected a sample of help requests and code from an online programming course. We then prompted two different LLMs (OpenAI Codex and GPT-3.5) to identify and explain the issues in the students' code and assessed the LLM-generated answers both quantitatively and qualitatively. Findings: GPT-3.5 outperforms Codex in most respects. Both LLMs frequently find at least one actual issue in each student program (GPT-3.5 in 90% of the cases). Neither LLM excels at finding all the issues (GPT-3.5 finding them 57% of the time). False positives are common (40% chance for GPT-3.5). The advice that the LLMs provide on the issues is often sensible. The LLMs perform better on issues involving program logic rather than on output formatting. Model solutions are frequently provided even when the LLM is prompted not to. LLM responses to prompts in a non-English language are only slightly worse than responses to English prompts. Implications: Our results continue to highlight the utility of LLMs in programming education. At the same time, the results highlight the unreliability of LLMs: LLMs make some of the same mistakes that students do, perhaps especially when formatting output as required by automated assessment systems. Our study informs teachers interested in using LLMs as well as future efforts to customize LLMs for the needs of programming education.

Computing Education in the Era of Generative AI

Jun 05, 2023

Abstract:The computing education community has a rich history of pedagogical innovation designed to support students in introductory courses, and to support teachers in facilitating student learning. Very recent advances in artificial intelligence have resulted in code generation models that can produce source code from natural language problem descriptions -- with impressive accuracy in many cases. The wide availability of these models and their ease of use has raised concerns about potential impacts on many aspects of society, including the future of computing education. In this paper, we discuss the challenges and opportunities such models present to computing educators, with a focus on introductory programming classrooms. We summarize the results of two recent articles, the first evaluating the performance of code generation models on typical introductory-level programming problems, and the second exploring the quality and novelty of learning resources generated by these models. We consider likely impacts of such models upon pedagogical practice in the context of the most recent advances at the time of writing.

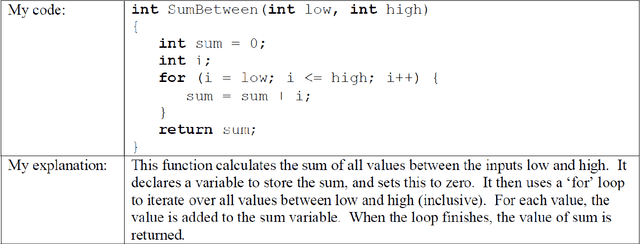

Comparing Code Explanations Created by Students and Large Language Models

Apr 08, 2023Abstract:Reasoning about code and explaining its purpose are fundamental skills for computer scientists. There has been extensive research in the field of computing education on the relationship between a student's ability to explain code and other skills such as writing and tracing code. In particular, the ability to describe at a high-level of abstraction how code will behave over all possible inputs correlates strongly with code writing skills. However, developing the expertise to comprehend and explain code accurately and succinctly is a challenge for many students. Existing pedagogical approaches that scaffold the ability to explain code, such as producing exemplar code explanations on demand, do not currently scale well to large classrooms. The recent emergence of powerful large language models (LLMs) may offer a solution. In this paper, we explore the potential of LLMs in generating explanations that can serve as examples to scaffold students' ability to understand and explain code. To evaluate LLM-created explanations, we compare them with explanations created by students in a large course ($n \approx 1000$) with respect to accuracy, understandability and length. We find that LLM-created explanations, which can be produced automatically on demand, are rated as being significantly easier to understand and more accurate summaries of code than student-created explanations. We discuss the significance of this finding, and suggest how such models can be incorporated into introductory programming education.

Deep Learning Models for Knowledge Tracing: Review and Empirical Evaluation

Dec 30, 2021

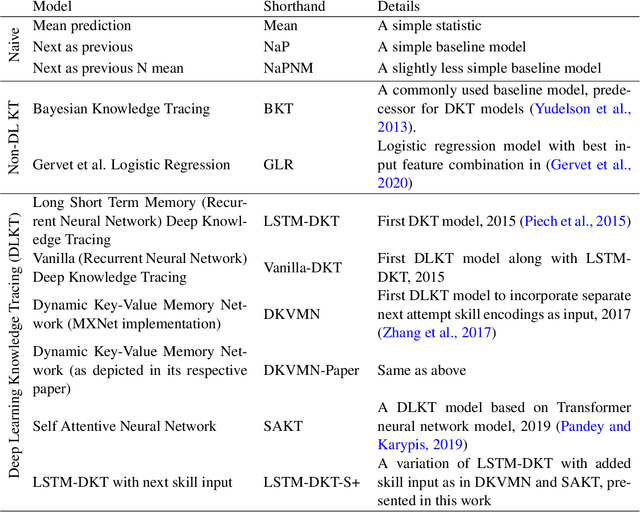

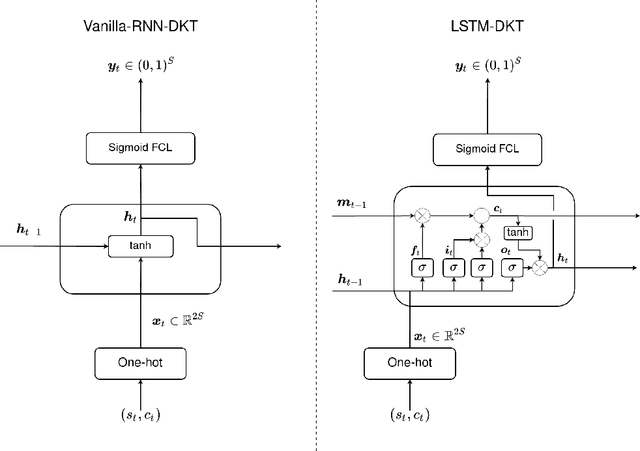

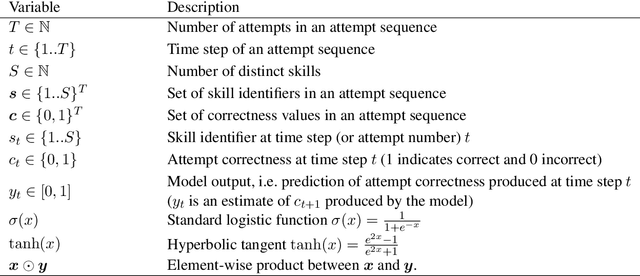

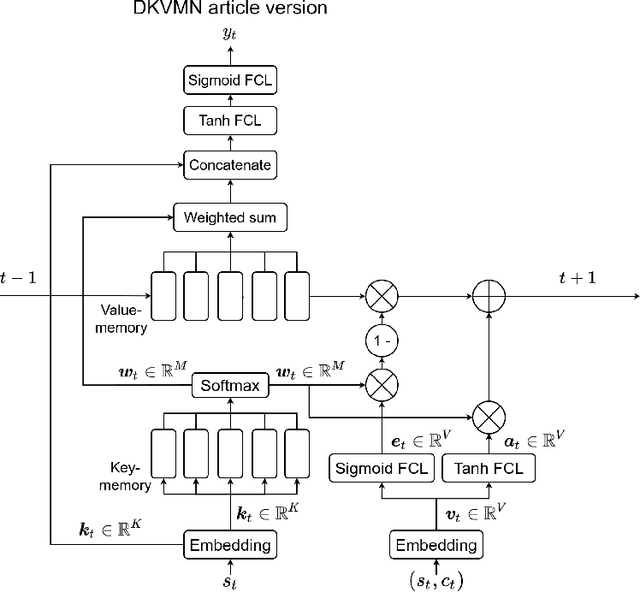

Abstract:In this work, we review and evaluate a body of deep learning knowledge tracing (DLKT) models with openly available and widely-used data sets, and with a novel data set of students learning to program. The evaluated DLKT models have been reimplemented for assessing reproducibility and replicability of previously reported results. We test different input and output layer variations found in the compared models that are independent of the main architectures of the models, and different maximum attempt count options that have been implicitly and explicitly used in some studies. Several metrics are used to reflect on the quality of the evaluated knowledge tracing models. The evaluated knowledge tracing models include Vanilla-DKT, two Long Short-Term Memory Deep Knowledge Tracing (LSTM-DKT) variants, two Dynamic Key-Value Memory Network (DKVMN) variants, and Self-Attentive Knowledge Tracing (SAKT). We evaluate logistic regression, Bayesian Knowledge Tracing (BKT) and simple non-learning models as baselines. Our results suggest that the DLKT models in general outperform non-DLKT models, and the relative differences between the DLKT models are subtle and often vary between datasets. Our results also show that naive models such as mean prediction can yield better performance than more sophisticated knowledge tracing models, especially in terms of accuracy. Further, our metric and hyperparameter analysis shows that the metric used to select the best model hyperparameters has a noticeable effect on the performance of the models, and that metric choice can affect model ranking. We also study the impact of input and output layer variations, filtering out long attempt sequences, and non-model properties such as randomness and hardware. Finally, we discuss model performance replicability and related issues. Our model implementations, evaluation code, and data are published as a part of this work.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge