Ruoyou Wu

Cross-Sequence Semi-Supervised Learning for Multi-Parametric MRI-Based Visual Pathway Delineation

May 26, 2025

Abstract: Accurately delineating the visual pathway (VP) is crucial for understanding the human visual system and diagnosing related disorders. Exploring multi-parametric MR imaging data has been identified as an important way to delineate VP. However, due to the complex cross-sequence relationships, existing methods cannot effectively model the complementary information from different MRI sequences. In addition, these existing methods heavily rely on large training data with labels, which is labor-intensive and time-consuming to obtain. In this work, we propose a novel semi-supervised multi-parametric feature decomposition framework for VP delineation. Specifically, a correlation-constrained feature decomposition (CFD) is designed to handle the complex cross-sequence relationships by capturing the unique characteristics of each MRI sequence and easing the multi-parametric information fusion process. Furthermore, a consistency-based sample enhancement (CSE) module is developed to address the limited labeled data issue, by generating and promoting meaningful edge information from unlabeled data. We validate our framework using two public datasets, and one in-house Multi-Shell Diffusion MRI (MDM) dataset. Experimental results demonstrate the superiority of our approach in terms of delineation performance when compared to seven state-of-the-art approaches.

3D Anatomical Structure-guided Deep Learning for Accurate Diffusion Microstructure Imaging

Feb 25, 2025

Abstract: Diffusion magnetic resonance imaging (dMRI) is a crucial non-invasive technique for exploring the microstructure of the living human brain. Traditional hand-crafted and model-based tissue microstructure reconstruction methods often require extensive diffusion gradient sampling, which can be time-consuming and limits the clinical applicability of tissue microstructure information. Recent advances in deep learning have shown promise in microstructure estimation; however, accurately estimating tissue microstructure from clinically feasible dMRI scans remains challenging without appropriate constraints. This paper introduces a novel framework that achieves high-fidelity and rapid diffusion microstructure imaging by simultaneously leveraging anatomical information from macro-level priors and mutual information across parameters. This approach enhances time efficiency while maintaining accuracy in microstructure estimation. Experimental results demonstrate that our method outperforms four state-of-the-art techniques, achieving a peak signal-to-noise ratio (PSNR) of 30.51$\pm$0.58 and a structural similarity index measure (SSIM) of 0.97$\pm$0.004 in estimating parametric maps of multiple diffusion models. Notably, our method achieves a 15$\times$ acceleration compared to the dense sampling approach, which typically utilizes 270 diffusion gradients.
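For readers unfamiliar with the PSNR metric quoted above, here is a minimal NumPy sketch of how it is commonly computed. The abstract does not state which peak value or masking convention the authors used, so the `data_range` default below (the dynamic range of the reference map) is an assumption for illustration only.

```python
import numpy as np

def psnr(reference, estimate, data_range=None):
    """Peak signal-to-noise ratio in dB between a reference map and an estimate."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    if data_range is None:
        # Assumption: take the dynamic range of the reference as the peak value.
        data_range = reference.max() - reference.min()
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        # Identical inputs: PSNR is unbounded.
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)
```

Higher values mean the estimated parametric map is closer to the reference in the mean-squared-error sense.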

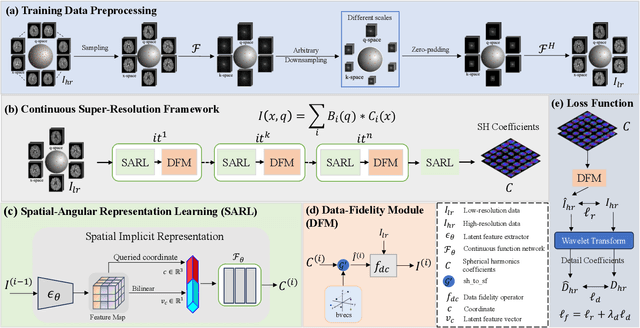

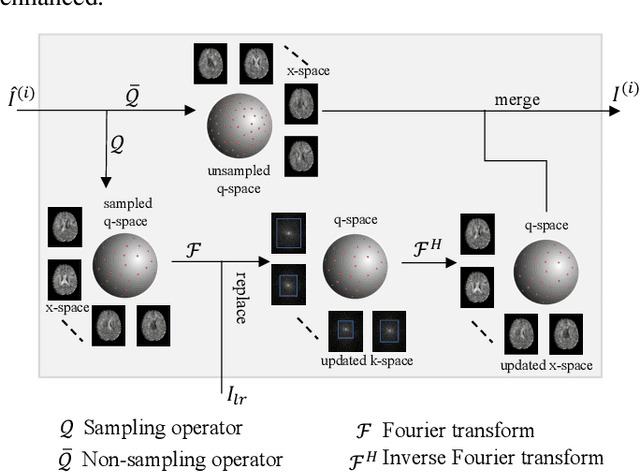

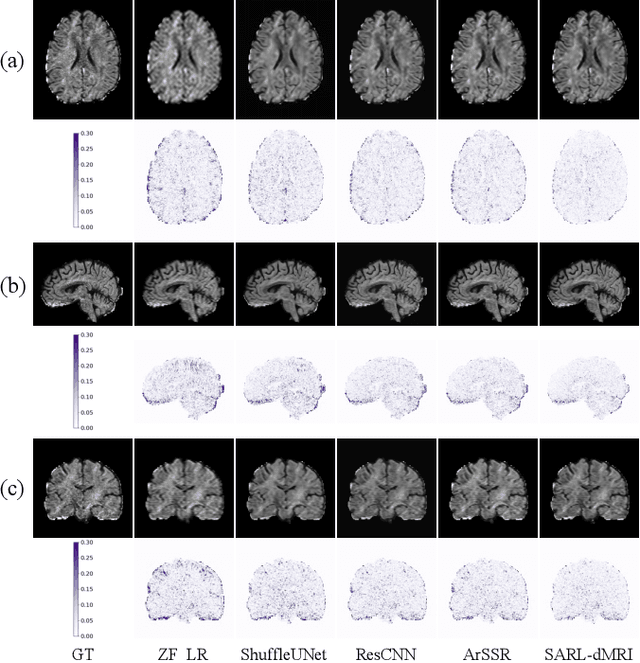

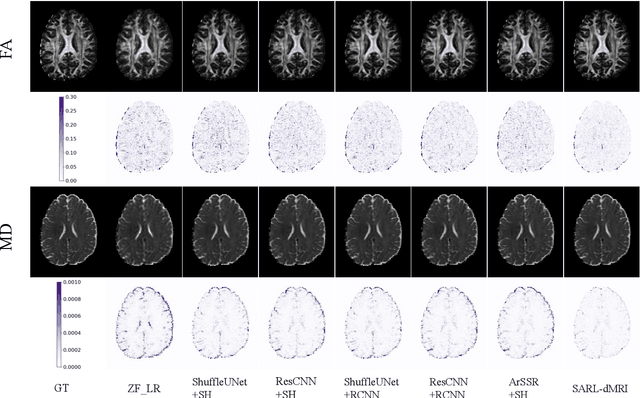

Spatial-Angular Representation Learning for High-Fidelity Continuous Super-Resolution in Diffusion MRI

Jan 27, 2025

Abstract: Diffusion magnetic resonance imaging (dMRI) often suffers from low spatial and angular resolution due to inherent limitations in imaging hardware and system noise, adversely affecting the accurate estimation of microstructural parameters with fine anatomical details. Deep learning-based super-resolution techniques have shown promise in enhancing dMRI resolution without increasing acquisition time. However, most existing methods are confined to either spatial or angular super-resolution, limiting their effectiveness in capturing detailed microstructural features. Furthermore, traditional pixel-wise loss functions struggle to recover intricate image details essential for high-resolution reconstruction. To address these challenges, we propose SARL-dMRI, a novel Spatial-Angular Representation Learning framework for high-fidelity, continuous super-resolution in dMRI. SARL-dMRI explores implicit neural representations and spherical harmonics to model continuous spatial and angular representations, simultaneously enhancing both spatial and angular resolution while improving microstructural parameter estimation accuracy. To further preserve image fidelity, a data-fidelity module and wavelet-based frequency loss are introduced, ensuring the super-resolved images remain consistent with the original input and retain fine details. Extensive experiments demonstrate that, compared to five other state-of-the-art methods, our method significantly enhances dMRI data resolution, improves the accuracy of microstructural parameter estimation, and provides better generalization capabilities. It maintains stable performance even under a 45$\times$ downsampling factor.
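To illustrate why spherical harmonics give a continuous angular representation, the sketch below fits real spherical harmonics of degree 0 and 2 to a signal sampled along a few gradient directions and then evaluates the fit along directions that were never acquired. It is a plain least-squares fit, not the SARL-dMRI network; the function names, the degree cutoff, and the random directions are all hypothetical choices for this example.

```python
import numpy as np

def real_sh_basis_l2(dirs):
    """Real spherical harmonics of degree 0 and 2 at unit vectors of shape (N, 3)."""
    x, y, z = dirs[:, 0], dirs[:, 1], dirs[:, 2]
    c0 = 0.5 * np.sqrt(1.0 / np.pi)
    c1 = 0.5 * np.sqrt(15.0 / np.pi)
    c2 = 0.25 * np.sqrt(5.0 / np.pi)
    c3 = 0.25 * np.sqrt(15.0 / np.pi)
    return np.stack([
        c0 * np.ones_like(x),       # l=0
        c1 * x * y,                 # l=2, m=-2
        c1 * y * z,                 # l=2, m=-1
        c2 * (3.0 * z**2 - 1.0),    # l=2, m=0
        c1 * x * z,                 # l=2, m=1
        c3 * (x**2 - y**2),         # l=2, m=2
    ], axis=1)

def random_unit_vectors(n, rng):
    """Draw n directions uniformly on the sphere."""
    v = rng.normal(size=(n, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def fit_sh(dirs, signal):
    """Least-squares SH coefficients for a signal sampled along `dirs`."""
    coeffs, *_ = np.linalg.lstsq(real_sh_basis_l2(dirs), signal, rcond=None)
    return coeffs

def evaluate_sh(coeffs, dirs):
    """Evaluate the fitted angular function along arbitrary directions."""
    return real_sh_basis_l2(dirs) @ coeffs

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    acquired = random_unit_vectors(30, rng)
    signal = evaluate_sh(rng.normal(size=6), acquired)  # synthetic, noise-free
    coeffs = fit_sh(acquired, signal)
    unseen = random_unit_vectors(5, rng)
    print(evaluate_sh(coeffs, unseen))  # values along directions never sampled
```

Only even degrees are used because the diffusion signal is antipodally symmetric; a practical pipeline would typically use a higher maximum degree and a regularized fit.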

DeepMpMRI: Tensor-decomposition Regularized Learning for Fast and High-Fidelity Multi-Parametric Microstructural MR Imaging

May 06, 2024

Abstract: Deep learning has emerged as a promising approach for learning the nonlinear mapping between diffusion-weighted MR images and tissue parameters, which enables automatic and deep understanding of the brain microstructures. However, the efficiency and accuracy in the multi-parametric estimations are still limited since previous studies tend to estimate multi-parametric maps with dense sampling and isolated signal modeling. This paper proposes DeepMpMRI, a unified framework for fast and high-fidelity multi-parametric estimation from various diffusion models using sparsely sampled q-space data. DeepMpMRI is equipped with a newly designed tensor-decomposition-based regularizer to effectively capture fine details by exploiting the correlation across parameters. In addition, we introduce a Nesterov-based adaptive learning algorithm that optimizes the regularization parameter dynamically to enhance the performance. DeepMpMRI is an extendable framework capable of incorporating flexible network architecture. Experimental results demonstrate the superiority of our approach over 5 state-of-the-art methods in simultaneously estimating multi-parametric maps for various diffusion models with fine-grained details both quantitatively and qualitatively, achieving 4.5-22.5$\times$ acceleration compared to the dense sampling of a total of 270 diffusion gradients.
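As a quick worked example of what the quoted acceleration range means, the snippet below converts an acceleration factor into a gradient count. It assumes that acceleration is defined as the ratio between the dense gradient count (270) and the number of gradients actually used; the abstract does not spell out the definition, so treat this reading as an assumption.

```python
def gradients_after_acceleration(dense_gradients, acceleration):
    """Gradient count left when dense sampling is reduced by `acceleration`.

    Assumes acceleration = dense_gradients / used_gradients.
    """
    return dense_gradients / acceleration

if __name__ == "__main__":
    for factor in (4.5, 22.5):
        print(factor, gradients_after_acceleration(270, factor))
```

Under that assumption, the two ends of the range correspond to 60 and 12 diffusion gradients, respectively.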

CSR-dMRI: Continuous Super-Resolution of Diffusion MRI with Anatomical Structure-assisted Implicit Neural Representation Learning

Apr 04, 2024

Abstract: Deep learning-based dMRI super-resolution methods can effectively enhance image resolution by leveraging the learning capabilities of neural networks on large datasets. However, these methods tend to learn a fixed scale mapping between low-resolution (LR) and high-resolution (HR) images, overlooking the need for radiologists to scale the images at arbitrary resolutions. Moreover, the pixel-wise loss in the image domain tends to generate over-smoothed results, losing fine textures and edge information. To address these issues, we propose a novel continuous super-resolution of dMRI with anatomical structure-assisted implicit neural representation learning method, called CSR-dMRI. Specifically, the CSR-dMRI model consists of two components. The first is the latent feature extractor, which primarily extracts latent space feature maps from LR dMRI and anatomical images while learning structural prior information from the anatomical images. The second is the implicit function network, which utilizes voxel coordinates and latent feature vectors to generate voxel intensities at corresponding positions. Additionally, a frequency-domain-based loss is introduced to preserve the structural and texture information, further enhancing the image quality. Extensive experiments on the publicly available HCP dataset validate the effectiveness of our approach. Furthermore, our method demonstrates superior generalization capability and can be applied to arbitrary-scale super-resolution, including non-integer scale factors, expanding its applicability beyond conventional approaches.
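The interface of an implicit function network, as described above, can be sketched in a few lines: each query concatenates a voxel coordinate with a latent feature vector and maps the result to one intensity. The two-layer MLP, its sizes, and the random untrained weights below are hypothetical placeholders, not the CSR-dMRI architecture.

```python
import numpy as np

def init_mlp(in_dim, hidden_dim, rng):
    """Randomly initialized two-layer MLP (untrained, for illustration only)."""
    return {
        "w1": rng.normal(scale=0.1, size=(in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "w2": rng.normal(scale=0.1, size=(hidden_dim, 1)),
        "b2": np.zeros(1),
    }

def implicit_function(coords, latents, params):
    """Map coordinates (N, 3) and latent vectors (N, C) to intensities (N,)."""
    x = np.concatenate([coords, latents], axis=1)
    hidden = np.maximum(x @ params["w1"] + params["b1"], 0.0)  # ReLU
    return (hidden @ params["w2"] + params["b2"])[:, 0]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = init_mlp(in_dim=3 + 8, hidden_dim=16, rng=rng)
    # Coordinates are continuous, so non-integer positions are valid queries.
    coords = rng.uniform(-1.0, 1.0, size=(5, 3))
    latents = rng.normal(size=(5, 8))
    print(implicit_function(coords, latents, params))
```

Because the coordinates are real-valued, the same function can be sampled on any output grid, which is what makes arbitrary and non-integer scale factors possible.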

Knowledge-driven deep learning for fast MR imaging: undersampled MR image reconstruction from supervised to un-supervised learning

Feb 05, 2024

Abstract: Deep learning (DL) has emerged as a leading approach in accelerating MR imaging. It employs deep neural networks to extract knowledge from available datasets and then applies the trained networks to reconstruct accurate images from limited measurements. Unlike natural image restoration problems, MR imaging involves physics-based imaging processes, unique data properties, and diverse imaging tasks. This domain knowledge needs to be integrated with data-driven approaches. This review introduces the significant challenges faced by such knowledge-driven DL approaches in the context of fast MR imaging, along with several notable solutions, which include learning neural networks and addressing different imaging application scenarios. We also describe the traits and trends of these techniques, which have shifted from supervised learning to semi-supervised learning and, finally, to unsupervised learning methods. In addition, we summarize MR vendors' choices of DL reconstruction and discuss open questions and future directions that are critical for reliable imaging systems.

Simultaneous q-Space Sampling Optimization and Reconstruction for Fast and High-fidelity Diffusion Magnetic Resonance Imaging

Jan 03, 2024

Abstract: Diffusion Magnetic Resonance Imaging (dMRI) plays a crucial role in the noninvasive investigation of tissue microstructural properties and structural connectivity in the in vivo human brain. However, to effectively capture the intricate characteristics of water diffusion along various directions and at various scales, it is important to employ comprehensive q-space sampling. Unfortunately, this requirement leads to long scan times, limiting the clinical applicability of dMRI. To address this challenge, we propose SSOR, a Simultaneous q-Space sampling Optimization and Reconstruction framework. We jointly optimize a subset of q-space samples using a continuous representation of spherical harmonic functions and a reconstruction network. Additionally, we integrate the unique properties of dMRI in both the q-space and image domains by applying $\ell_1$-norm and total-variation regularization. The experiments conducted on HCP data demonstrate that SSOR has promising strengths both quantitatively and qualitatively and exhibits robustness to noise.
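The two penalties named in this abstract can be written down generically as follows. This is a sketch of the standard definitions only: the anisotropic form of total variation, the weights `lam_l1` and `lam_tv`, and the choice of which array each penalty is applied to are assumptions, since the abstract does not specify them.

```python
import numpy as np

def l1_norm(x):
    """Sum of absolute values; encourages sparsity."""
    return np.sum(np.abs(np.asarray(x, dtype=np.float64)))

def total_variation(image):
    """Anisotropic TV: sum of absolute differences between neighbors on each axis."""
    image = np.asarray(image, dtype=np.float64)
    return sum(
        np.sum(np.abs(np.diff(image, axis=axis))) for axis in range(image.ndim)
    )

def regularized_loss(data_term, sparse_input, image, lam_l1=1e-3, lam_tv=1e-3):
    """Data term plus weighted l1 and TV penalties (weights are hypothetical)."""
    return data_term + lam_l1 * l1_norm(sparse_input) + lam_tv * total_variation(image)
```

The TV term is zero for a constant image and grows with the number and size of intensity jumps, which is why it favors piecewise-smooth reconstructions.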

AID-DTI: Accelerating High-fidelity Diffusion Tensor Imaging with Detail-Preserving Model-based Deep Learning

Jan 03, 2024

Abstract: Deep learning has shown great potential in accelerating diffusion tensor imaging (DTI). Nevertheless, existing methods tend to suffer from Rician noise and detail loss in reconstructing the DTI-derived parametric maps, especially when sparsely sampled q-space data are used. This paper proposes a novel method, AID-DTI (Accelerating hIgh fiDelity Diffusion Tensor Imaging), to facilitate fast and accurate DTI with only six measurements. AID-DTI is equipped with a newly designed Singular Value Decomposition (SVD)-based regularizer, which can effectively capture fine details while suppressing noise during network training. Experimental results on Human Connectome Project (HCP) data consistently demonstrate that the proposed method estimates DTI parameter maps with fine-grained details and outperforms three state-of-the-art methods both quantitatively and qualitatively.
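Six is the smallest number of diffusion-weighted measurements that determines a diffusion tensor, because the symmetric 3x3 tensor $D$ in the standard model $S = S_0 \exp(-b\, g^\top D g)$ has six unknowns. The sketch below shows the classical log-linear fit for a single voxel. It is not the AID-DTI network; the gradient set, the b-value, and the assumption that the non-diffusion-weighted signal `s0` is known are illustrative choices.

```python
import numpy as np

def design_matrix(gradients):
    """Rows map the six unique tensor elements to g^T D g for each direction."""
    gx, gy, gz = gradients[:, 0], gradients[:, 1], gradients[:, 2]
    return np.stack(
        [gx**2, gy**2, gz**2, 2 * gx * gy, 2 * gx * gz, 2 * gy * gz], axis=1
    )

def fit_tensor(signals, s0, bval, gradients):
    """Log-linear least-squares estimate of the 3x3 diffusion tensor."""
    y = -np.log(np.asarray(signals, dtype=np.float64) / s0) / bval  # g^T D g
    dxx, dyy, dzz, dxy, dxz, dyz = np.linalg.lstsq(
        design_matrix(gradients), y, rcond=None
    )[0]
    return np.array([[dxx, dxy, dxz], [dxy, dyy, dyz], [dxz, dyz, dzz]])

# A common six-direction scheme (assumed here for illustration).
SIX_DIRECTIONS = np.array(
    [[1, 1, 0], [1, -1, 0], [1, 0, 1], [1, 0, -1], [0, 1, 1], [0, 1, -1]],
    dtype=np.float64,
) / np.sqrt(2.0)

if __name__ == "__main__":
    tensor = np.array([[1.7e-3, 1e-4, 0.0], [1e-4, 3e-4, 5e-5], [0.0, 5e-5, 3e-4]])
    bval, s0 = 1000.0, 1.0
    quad = np.einsum("ni,ij,nj->n", SIX_DIRECTIONS, tensor, SIX_DIRECTIONS)
    signals = s0 * np.exp(-bval * quad)  # noise-free synthetic measurements
    print(fit_tensor(signals, s0, bval, SIX_DIRECTIONS))
```

With exactly six noisy measurements this fit has no redundancy to average out noise, which is the regime where the abstract reports noise sensitivity and detail loss in existing methods.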

Generalizable Learning Reconstruction for Accelerating MR Imaging via Federated Neural Architecture Search

Aug 27, 2023

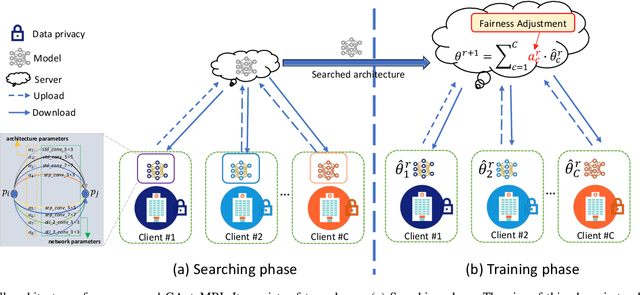

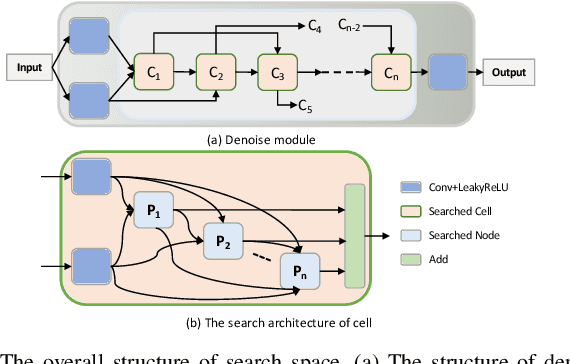

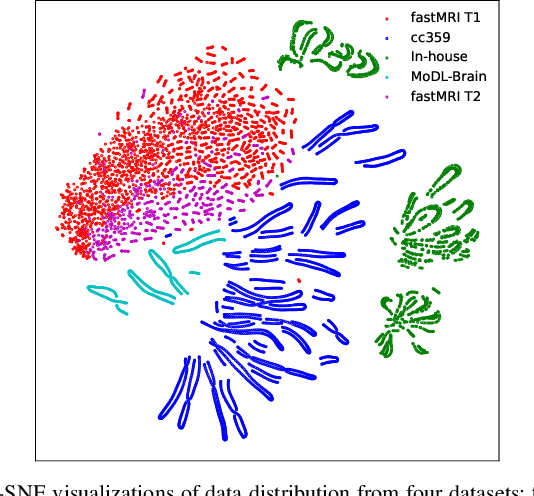

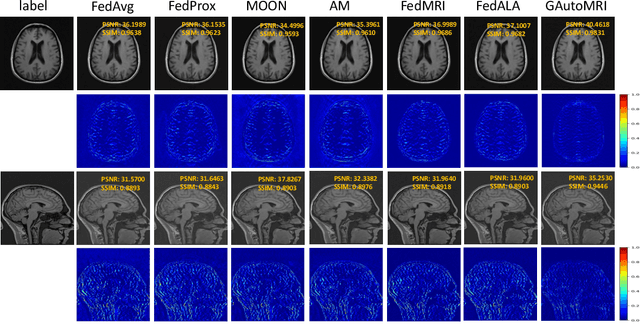

Abstract: Heterogeneous data captured by different scanning devices and imaging protocols can affect the generalization performance of deep learning magnetic resonance (MR) reconstruction models. While a centralized training model is effective in mitigating this problem, it raises concerns about privacy protection. Federated learning is a distributed training paradigm that can utilize multi-institutional data for collaborative training without sharing data. However, existing federated learning MR image reconstruction methods rely on models designed manually by experts, which are complex and computationally expensive and suffer from performance degradation when facing heterogeneous data distributions. In addition, these methods give inadequate consideration to fairness issues, namely, ensuring that the model's training does not introduce bias towards any specific dataset's distribution. To this end, this paper proposes a generalizable federated neural architecture search framework for accelerating MR imaging (GAutoMRI). Specifically, automatic neural architecture search is investigated for effective and efficient neural network representation learning of MR images from different centers. Furthermore, we design a fairness adjustment approach that enables the model to learn features fairly from the inconsistent distributions of different devices and centers, and thus encourages the model to generalize to unseen centers. Extensive experiments show that our proposed GAutoMRI achieves better performance and generalization ability than six state-of-the-art federated learning methods. Moreover, the GAutoMRI model is significantly more lightweight, making it an efficient choice for MR image reconstruction tasks. The code will be made available at https://github.com/ternencewu123/GAutoMRI.

FedAutoMRI: Federated Neural Architecture Search for MR Image Reconstruction

Jul 21, 2023

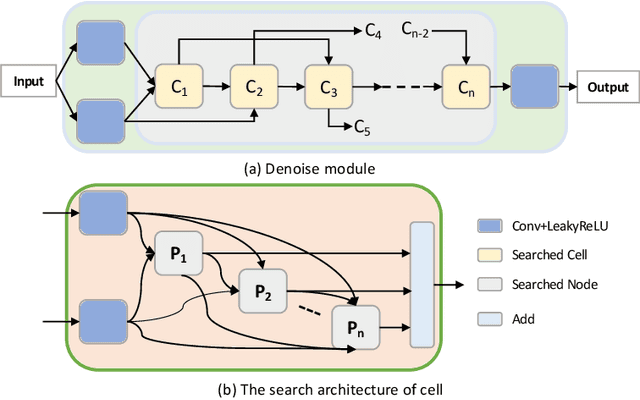

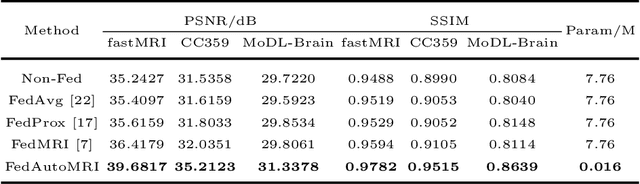

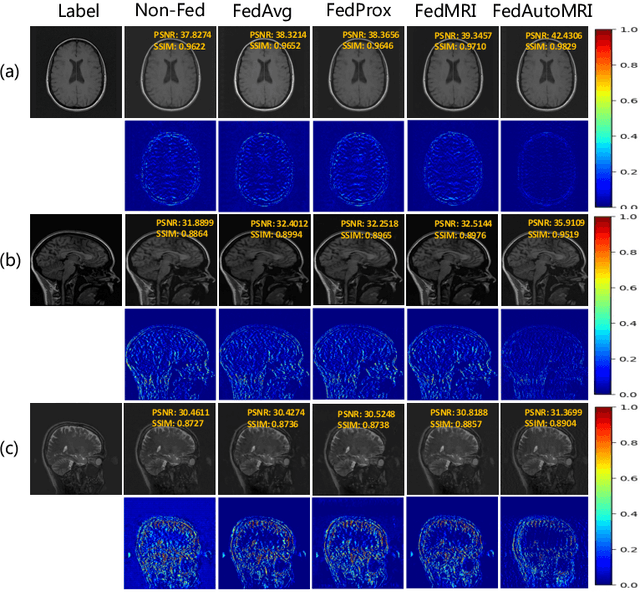

Abstract: Centralized training methods have shown promising results in MR image reconstruction, but privacy concerns arise when gathering data from multiple institutions. Federated learning, a distributed collaborative training scheme, can utilize multi-center data without the need to transfer data between institutions. However, existing federated learning MR image reconstruction methods rely on manually designed models that have many parameters and suffer from performance degradation when facing heterogeneous data distributions. To this end, this paper proposes a novel FederAted neUral archiTecture search approach fOr MR Image reconstruction (FedAutoMRI). The proposed method utilizes differentiable architecture search to automatically find the optimal network architecture. In addition, an exponential moving average method is introduced to improve the robustness of the client model and address the data heterogeneity issue. To the best of our knowledge, this is the first work to use federated neural architecture search for MR image reconstruction. Experimental results demonstrate that our proposed FedAutoMRI achieves promising performance while utilizing a lightweight model with only a small number of model parameters compared to the classical federated learning methods.
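An exponential moving average over model parameters, the general mechanism named in this abstract, can be sketched as below. How FedAutoMRI applies it on the client side is not described here, so the parameter dictionary layout, the function name `ema_update`, and the `decay` value are hypothetical.

```python
import numpy as np

def ema_update(ema_params, new_params, decay=0.99):
    """Blend new parameters into a running average: ema = decay*ema + (1-decay)*new."""
    return {
        name: decay * ema_params[name] + (1.0 - decay) * new_params[name]
        for name in ema_params
    }

if __name__ == "__main__":
    ema = {"w": np.zeros(2)}
    for step in range(3):
        # Stand-in for parameters produced by one round of local training.
        local = {"w": np.array([1.0, 2.0])}
        ema = ema_update(ema, local, decay=0.9)
    print(ema["w"])
```

A decay close to 1 makes the averaged model change slowly, which damps abrupt shifts caused by any single round of training on heterogeneous data.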
