Juan Zou

Spatial-Angular Representation Learning for High-Fidelity Continuous Super-Resolution in Diffusion MRI

Jan 27, 2025

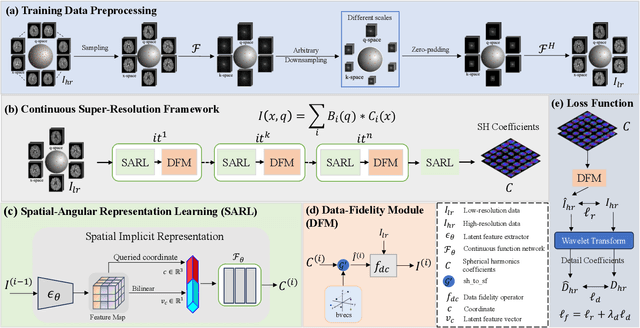

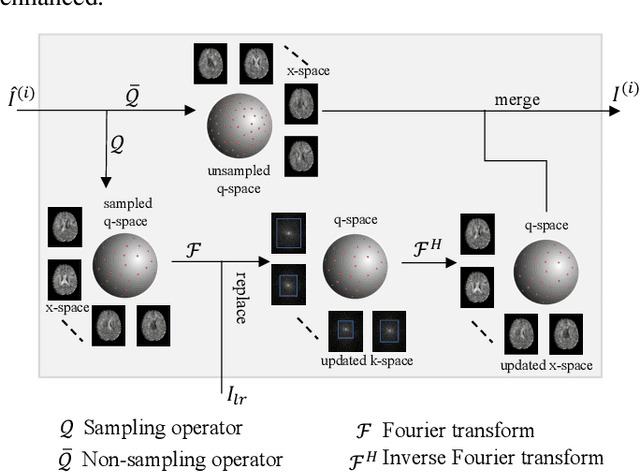

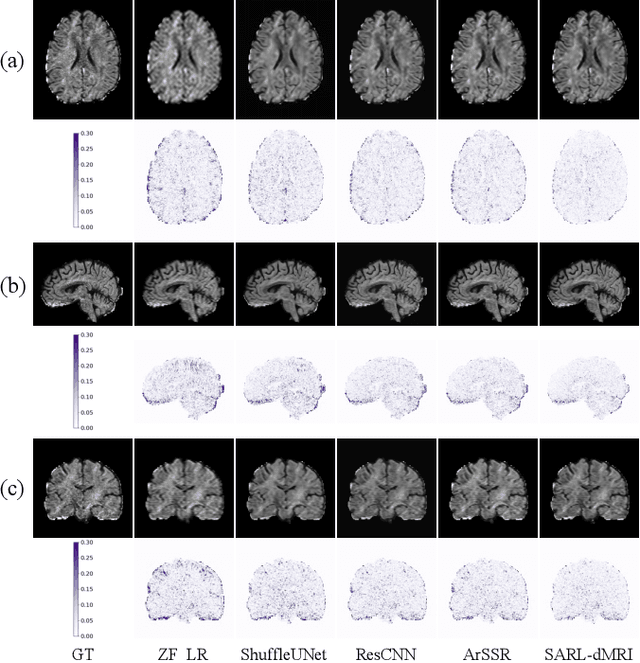

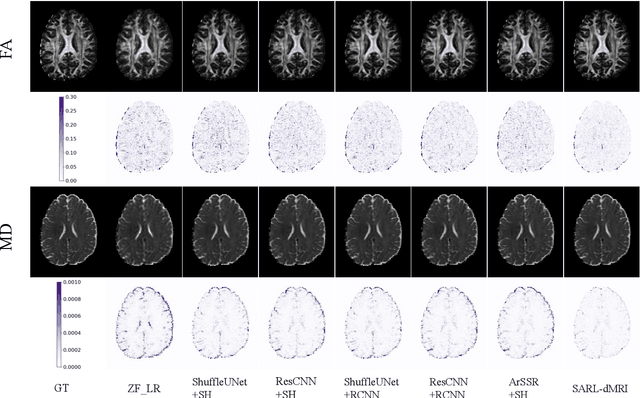

Abstract: Diffusion magnetic resonance imaging (dMRI) often suffers from low spatial and angular resolution due to inherent limitations in imaging hardware and system noise, adversely affecting the accurate estimation of microstructural parameters with fine anatomical details. Deep learning-based super-resolution techniques have shown promise in enhancing dMRI resolution without increasing acquisition time. However, most existing methods are confined to either spatial or angular super-resolution, limiting their effectiveness in capturing detailed microstructural features. Furthermore, traditional pixel-wise loss functions struggle to recover intricate image details essential for high-resolution reconstruction. To address these challenges, we propose SARL-dMRI, a novel Spatial-Angular Representation Learning framework for high-fidelity, continuous super-resolution in dMRI. SARL-dMRI explores implicit neural representations and spherical harmonics to model continuous spatial and angular representations, simultaneously enhancing both spatial and angular resolution while improving microstructural parameter estimation accuracy. To further preserve image fidelity, a data-fidelity module and wavelet-based frequency loss are introduced, ensuring the super-resolved images remain consistent with the original input and retain fine details. Extensive experiments demonstrate that, compared to five other state-of-the-art methods, our method significantly enhances dMRI data resolution, improves the accuracy of microstructural parameter estimation, and provides better generalization capabilities. It maintains stable performance even under a 45$\times$ downsampling factor.

DeepMpMRI: Tensor-decomposition Regularized Learning for Fast and High-Fidelity Multi-Parametric Microstructural MR Imaging

May 06, 2024

Abstract: Deep learning has emerged as a promising approach for learning the nonlinear mapping between diffusion-weighted MR images and tissue parameters, which enables automatic and deep understanding of the brain microstructures. However, the efficiency and accuracy in the multi-parametric estimations are still limited since previous studies tend to estimate multi-parametric maps with dense sampling and isolated signal modeling. This paper proposes DeepMpMRI, a unified framework for fast and high-fidelity multi-parametric estimation from various diffusion models using sparsely sampled q-space data. DeepMpMRI is equipped with a newly designed tensor-decomposition-based regularizer to effectively capture fine details by exploiting the correlation across parameters. In addition, we introduce a Nesterov-based adaptive learning algorithm that optimizes the regularization parameter dynamically to enhance the performance. DeepMpMRI is an extendable framework capable of incorporating flexible network architecture. Experimental results demonstrate the superiority of our approach over 5 state-of-the-art methods in simultaneously estimating multi-parametric maps for various diffusion models with fine-grained details both quantitatively and qualitatively, achieving 4.5-22.5$\times$ acceleration compared to the dense sampling of a total of 270 diffusion gradients.
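As a rough reading of the acceleration figures, assuming the acceleration factor is simply the ratio of the 270 dense gradients to the number of gradients actually acquired (the abstract does not define it, so this is an assumption), the sparse protocols would correspond to:

$$
\frac{270}{4.5} = 60 \ \text{gradients}, \qquad \frac{270}{22.5} = 12 \ \text{gradients}.
$$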

CSR-dMRI: Continuous Super-Resolution of Diffusion MRI with Anatomical Structure-assisted Implicit Neural Representation Learning

Apr 04, 2024

Abstract: Deep learning-based dMRI super-resolution methods can effectively enhance image resolution by leveraging the learning capabilities of neural networks on large datasets. However, these methods tend to learn a fixed-scale mapping between low-resolution (LR) and high-resolution (HR) images, overlooking the need for radiologists to scale the images at arbitrary resolutions. Moreover, the pixel-wise loss in the image domain tends to generate over-smoothed results, losing fine textures and edge information. To address these issues, we propose a novel continuous super-resolution of dMRI with anatomical structure-assisted implicit neural representation learning method, called CSR-dMRI. Specifically, the CSR-dMRI model consists of two components. The first is the latent feature extractor, which primarily extracts latent space feature maps from LR dMRI and anatomical images while learning structural prior information from the anatomical images. The second is the implicit function network, which utilizes voxel coordinates and latent feature vectors to generate voxel intensities at corresponding positions. Additionally, a frequency-domain-based loss is introduced to preserve the structural and texture information, further enhancing the image quality. Extensive experiments on the publicly available HCP dataset validate the effectiveness of our approach. Furthermore, our method demonstrates superior generalization capability and can be applied to arbitrary-scale super-resolution, including non-integer scale factors, expanding its applicability beyond conventional approaches.

An Evolutionary Network Architecture Search Framework with Adaptive Multimodal Fusion for Hand Gesture Recognition

Mar 27, 2024

Abstract: Hand gesture recognition (HGR) based on multimodal data has attracted considerable attention owing to its great potential in applications. Various manually designed multimodal deep networks have performed well in multimodal HGR (MHGR), but most existing algorithms require a lot of expert experience and time-consuming manual trials. To address these issues, we propose an evolutionary network architecture search framework with adaptive multimodal fusion (AMF-ENAS). Specifically, we design an encoding space that simultaneously considers fusion positions and ratios of the multimodal data, allowing for the automatic construction of multimodal networks with different architectures through decoding. Additionally, we consider three input streams corresponding to intra-modal surface electromyography (sEMG), intra-modal accelerometer (ACC), and inter-modal sEMG-ACC. To automatically adapt to various datasets, the ENAS framework is designed to automatically search for an MHGR network with appropriate fusion positions and ratios. To the best of our knowledge, this is the first time that ENAS has been utilized in MHGR to tackle issues related to the fusion position and ratio of multimodal data. Experimental results demonstrate that AMF-ENAS achieves state-of-the-art performance on the Ninapro DB2, DB3, and DB7 datasets.

Multiple Population Alternate Evolution Neural Architecture Search

Mar 11, 2024

Abstract: The effectiveness of Evolutionary Neural Architecture Search (ENAS) is influenced by the design of the search space. Nevertheless, common methods including the global search space, scalable search space and hierarchical search space have certain limitations. Specifically, the global search space requires a significant amount of computational resources and time, the scalable search space sacrifices the diversity of network structures, and the hierarchical search space increases the search cost in exchange for network diversity. To address these limitations, we propose a novel paradigm of searching neural network architectures and design the Multiple Population Alternate Evolution Neural Architecture Search (MPAE), which can achieve module diversity with a smaller search cost. MPAE converts the search space into L interconnected units and searches the units sequentially; the search of the entire network is then cycled several times to reduce the impact of previous units on subsequent units. To accelerate the population evolution process, we also propose a population migration mechanism that establishes an excellent migration archive and transfers the excellent knowledge and experience in the migration archive to new populations. The proposed method requires only 0.3 GPU days to search a neural network on the CIFAR dataset and achieves state-of-the-art results.

G-EvoNAS: Evolutionary Neural Architecture Search Based on Network Growth

Mar 05, 2024

Abstract: The evolutionary paradigm has been successfully applied to neural network search (NAS) in recent years. Due to the vast search complexity of the global space, current research mainly seeks to repeatedly stack partial architectures to build the entire model or to seek the entire model based on manually designed benchmark modules. The above two methods are attempts to reduce the search difficulty by narrowing the search space. To efficiently search network architecture in the global space, this paper proposes another solution, namely a computationally efficient neural architecture evolutionary search framework based on network growth (G-EvoNAS). The complete network is obtained by gradually deepening different blocks. The process begins from a shallow network, grows and evolves, and gradually deepens into a complete network, reducing the search complexity in the global space. Then, to improve the ranking accuracy of the network, we reduce the weight coupling of each network in the SuperNet by pruning the SuperNet according to elite groups at different growth stages. G-EvoNAS is tested on three commonly used image classification datasets, CIFAR10, CIFAR100, and ImageNet, and compared with various state-of-the-art algorithms, including hand-designed networks and NAS networks. Experimental results demonstrate that G-EvoNAS can find a neural network architecture comparable to state-of-the-art designs in 0.2 GPU days.

Simultaneous q-Space Sampling Optimization and Reconstruction for Fast and High-fidelity Diffusion Magnetic Resonance Imaging

Jan 03, 2024

Abstract: Diffusion Magnetic Resonance Imaging (dMRI) plays a crucial role in the noninvasive investigation of tissue microstructural properties and structural connectivity in the *in vivo* human brain. However, to effectively capture the intricate characteristics of water diffusion at various directions and scales, it is important to employ comprehensive q-space sampling. Unfortunately, this requirement leads to long scan times, limiting the clinical applicability of dMRI. To address this challenge, we propose SSOR, a Simultaneous q-Space sampling Optimization and Reconstruction framework. We jointly optimize a subset of q-space samples using a continuous representation of spherical harmonic functions and a reconstruction network. Additionally, we integrate the unique properties of dMRI in both the q-space and image domains by applying $\ell_1$-norm and total-variation regularization. The experiments conducted on HCP data demonstrate that SSOR has promising strengths both quantitatively and qualitatively and exhibits robustness to noise.
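For readers unfamiliar with the two penalties named in the abstract, the sketch below computes an $\ell_1$ norm and an anisotropic total variation in plain Python. It is only an illustration: the abstract does not say which penalty acts on which domain, so applying the $\ell_1$ norm to a coefficient vector and total variation to a 2D image is an assumption, and the names `l1_norm`, `total_variation`, `penalty`, `lam_l1`, and `lam_tv` are hypothetical.

```python
def l1_norm(values):
    """Sum of absolute values; small for sparse vectors."""
    return sum(abs(v) for v in values)


def total_variation(image):
    """Anisotropic TV: sum of absolute differences between
    vertically and horizontally adjacent pixels of a 2D list."""
    rows, cols = len(image), len(image[0])
    tv = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                tv += abs(image[r + 1][c] - image[r][c])
            if c + 1 < cols:
                tv += abs(image[r][c + 1] - image[r][c])
    return tv


def penalty(coeffs, image, lam_l1, lam_tv):
    """Weighted sum of both terms (the weights are illustrative assumptions)."""
    return lam_l1 * l1_norm(coeffs) + lam_tv * total_variation(image)


# Toy inputs: a sparse coefficient vector and an image with one vertical edge.
coeffs = [1.0, -2.0, 0.0]
image = [[0.0, 0.0, 1.0],
         [0.0, 0.0, 1.0]]
value = penalty(coeffs, image, lam_l1=0.5, lam_tv=0.25)
```

In a training loop a term of this kind would typically be added to the data-fitting loss, so that sparse coefficients and piecewise-smooth images are favored.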

AID-DTI: Accelerating High-fidelity Diffusion Tensor Imaging with Detail-Preserving Model-based Deep Learning

Jan 03, 2024

Abstract: Deep learning has shown great potential in accelerating diffusion tensor imaging (DTI). Nevertheless, existing methods tend to suffer from Rician noise and detail loss in reconstructing the DTI-derived parametric maps, especially when sparsely sampled q-space data are used. This paper proposes a novel method, AID-DTI (Accelerating hIgh fiDelity Diffusion Tensor Imaging), to facilitate fast and accurate DTI with only six measurements. AID-DTI is equipped with a newly designed Singular Value Decomposition (SVD)-based regularizer, which can effectively capture fine details while suppressing noise during network training. Experimental results on Human Connectome Project (HCP) data consistently demonstrate that the proposed method estimates DTI parameter maps with fine-grained details and outperforms three state-of-the-art methods both quantitatively and qualitatively.

Combining Kernelized Autoencoding and Centroid Prediction for Dynamic Multi-objective Optimization

Dec 02, 2023

Abstract: Evolutionary algorithms face significant challenges when dealing with dynamic multi-objective optimization because Pareto optimal solutions and/or Pareto optimal fronts change. This paper proposes a unified paradigm, which combines the kernelized autoencoding evolutionary search and the centroid-based prediction (denoted by KAEP), for solving dynamic multi-objective optimization problems (DMOPs). Specifically, whenever a change is detected, KAEP reacts effectively to it by generating two subpopulations. The first subpopulation is generated by a simple centroid-based prediction strategy. For the second initial subpopulation, the kernel autoencoder is derived to predict the movement of the Pareto-optimal solutions based on the historical elite solutions. In this way, an initial population is predicted by the proposed combination strategies with good convergence and diversity, which can be effective for solving DMOPs. The performance of our proposed method is compared with five state-of-the-art algorithms on a number of complex benchmark problems. Empirical results fully demonstrate the superiority of our proposed method on most test instances.
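The abstract does not spell out its centroid-based prediction strategy. A common form of the idea, shown here as a minimal sketch and not as KAEP's actual procedure, shifts every current solution by the displacement of the population centroid between the two most recent time steps. The function names and toy populations are hypothetical.

```python
def centroid(population):
    """Per-variable mean of a population given as a list of equal-length lists."""
    n = len(population)
    dim = len(population[0])
    return [sum(ind[d] for ind in population) / n for d in range(dim)]


def predict_population(prev_pop, curr_pop):
    """Move each current solution by the centroid displacement
    observed between the previous and current time steps."""
    c_prev = centroid(prev_pop)
    c_curr = centroid(curr_pop)
    step = [b - a for a, b in zip(c_prev, c_curr)]
    return [[x + s for x, s in zip(ind, step)] for ind in curr_pop]


# Toy example: the centroid moved from (1.0, 1.0) to (2.0, 1.5).
prev_pop = [[0.0, 0.0], [2.0, 2.0]]
curr_pop = [[1.0, 0.5], [3.0, 2.5]]
predicted = predict_population(prev_pop, curr_pop)
```

The predicted solutions would then seed part of the initial population after an environmental change is detected.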

TS-ENAS: Two-Stage Evolution for Cell-based Network Architecture Search

Oct 14, 2023

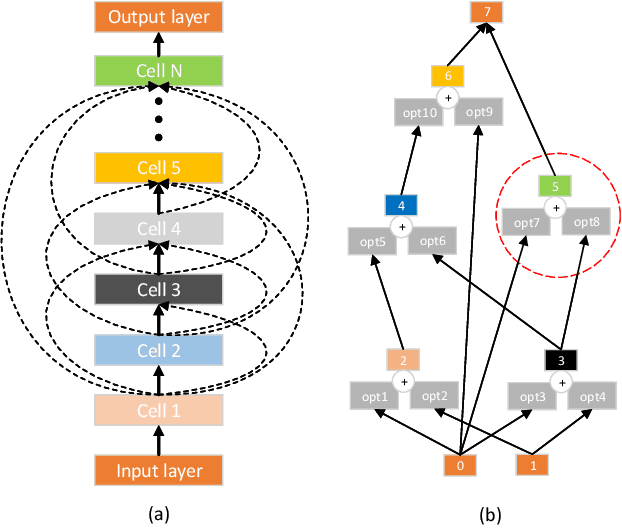

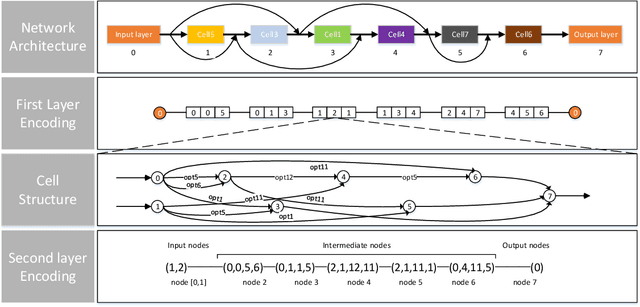

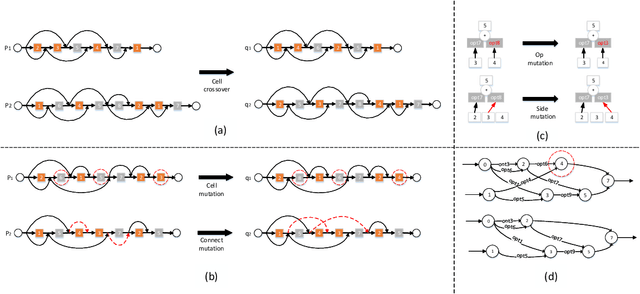

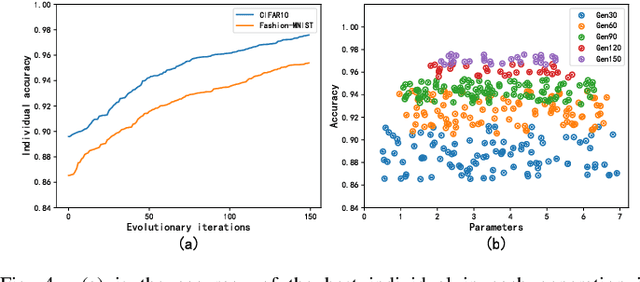

Abstract: Neural network architecture search provides a solution to the automatic design of network structures. However, it is difficult to search the whole network architecture directly. Although using stacked cells to search neural network architectures is an effective way to reduce the complexity of searching, these methods are unable to find the globally optimal neural network structure since the number of layers, cells, and connection methods is fixed. In this paper, we propose a Two-Stage Evolution for cell-based Network Architecture Search (TS-ENAS), consisting of a first stage that searches based on stacked cells and a second stage that adjusts these cells. In our algorithm, a new cell-based search space and an effective two-stage encoding method are designed to represent cells and neural network structures. In addition, a cell-based weight inheritance strategy is designed to initialize the weights of the network, which significantly reduces the running time of the algorithm. The proposed method is extensively tested on four image classification datasets, Fashion-MNIST, CIFAR10, CIFAR100 and ImageNet, and compared with 22 state-of-the-art algorithms including hand-designed networks and NAS networks. The experimental results show that TS-ENAS can more effectively find neural network architectures with comparable performance.
