Robin Burke

What News Recommendation Research Did (But Mostly Didn't) Teach Us About Building A News Recommender

Sep 15, 2025

Abstract: One of the goals of recommender systems research is to provide insights and methods that can be used by practitioners to build real-world systems that deliver high-quality recommendations to actual people grounded in their genuine interests and needs. We report on our experience trying to apply the news recommendation literature to build POPROX, a live platform for news recommendation research, and reflect on the extent to which the current state of research supports system-building efforts. Our experience highlights several unexpected challenges encountered in building personalization features that are commonly found in products from news aggregators and publishers, and shows how those difficulties are connected to surprising gaps in the literature. Finally, we offer a set of lessons learned from building a live system with a persistent user base and highlight opportunities to make future news recommendation research more applicable and impactful in practice.

Envy-Free but Still Unfair: Envy-Freeness Up To One Item (EF-1) in Personalized Recommendation

Sep 10, 2025

Abstract: Envy-freeness and the relaxation to Envy-freeness up to one item (EF-1) have been used as fairness concepts in the economics, game theory, and social choice literatures since the 1960s, and have recently gained popularity within the recommendation systems communities. In this short position paper, we give an overview of envy-freeness and its use in economics and recommendation systems, and illustrate why envy is not an appropriate measure of fairness in settings where personalization plays a role.

Decoupled Recommender Systems: Exploring Alternative Recommender Ecosystem Designs

Mar 05, 2025

Abstract: Recommender ecosystems are an emerging subject of research. Such research examines how the characteristics of algorithms, recommendation consumers, and item providers influence system dynamics and long-term outcomes. One architectural possibility whose consequences have not yet been widely explored in this line of research is a configuration in which recommendation algorithms are decoupled from the platforms they serve. This is sometimes called "the friendly neighborhood algorithm store" or "middleware" model. We are particularly interested in how such architectures might offer a range of different distributions of utility across consumers, providers, and recommendation platforms. In this paper, we create a model of a recommendation ecosystem that incorporates algorithm choice and examine the outcomes of such a design.

De-centering the (Traditional) User: Multistakeholder Evaluation of Recommender Systems

Jan 09, 2025

Abstract: Multistakeholder recommender systems are those that account for the impacts and preferences of multiple groups of individuals, not just the end users receiving recommendations. Due to their complexity, evaluating these systems cannot be restricted to the overall utility of a single stakeholder, as is often the case in more mainstream recommender system applications. In this article, we focus our discussion on the intricacies of the evaluation of multistakeholder recommender systems. We bring attention to the different aspects involved in the evaluation of multistakeholder recommender systems, from the range of stakeholders involved (including but not limited to producers and consumers) to the values and specific goals of each relevant stakeholder. Additionally, we discuss how to move from theoretical principles to practical implementation, providing specific use case examples. Finally, we outline open research directions for the RecSys community to explore. We aim to provide guidance to researchers and practitioners about how to think about these complex and domain-dependent issues of evaluation in the course of designing, developing, and researching applications with multistakeholder aspects.

Post-Userist Recommender Systems: A Manifesto

Oct 09, 2024

Abstract: We define userist recommendation as an approach to recommender systems framed solely in terms of the relation between the user and system. Post-userist recommendation posits a larger field of relations in which stakeholders are embedded and distinguishes the recommendation function (which can potentially connect creators with audiences) from generative media. We argue that in the era of generative media, userist recommendation becomes indistinguishable from personalized media generation, and therefore post-userist recommendation is the only path forward for recommender systems research.

Social Choice for Heterogeneous Fairness in Recommendation

Oct 06, 2024

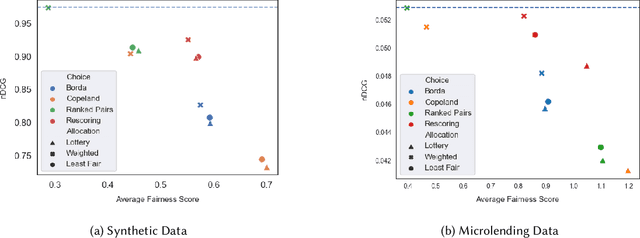

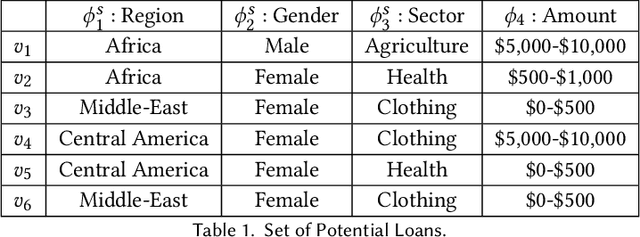

Abstract: Algorithmic fairness in recommender systems requires close attention to the needs of a diverse set of stakeholders that may have competing interests. Previous work in this area has often been limited by fixed, single-objective definitions of fairness, built into algorithms or optimization criteria that are applied to a single fairness dimension or, at most, applied identically across dimensions. These narrow conceptualizations limit the ability to adapt fairness-aware solutions to the wide range of stakeholder needs and fairness definitions that arise in practice. Our work approaches recommendation fairness from the standpoint of computational social choice, using a multi-agent framework. In this paper, we explore the properties of different social choice mechanisms and demonstrate the successful integration of multiple, heterogeneous fairness definitions across multiple data sets.

Data Generation via Latent Factor Simulation for Fairness-aware Re-ranking

Sep 21, 2024

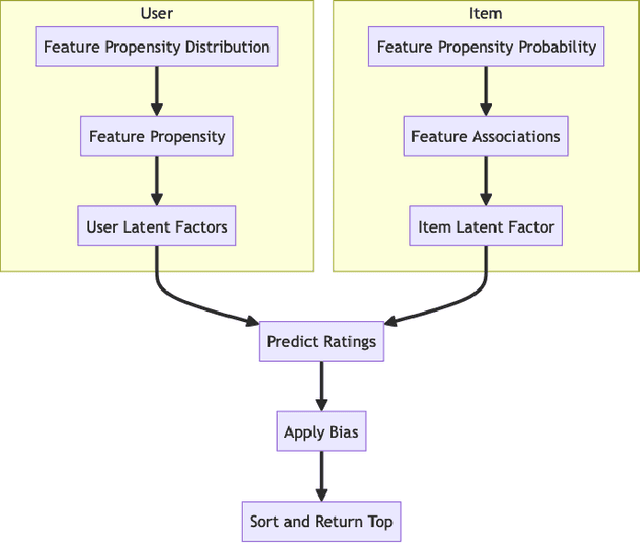

Abstract: Synthetic data is a useful resource for algorithmic research. It allows for the evaluation of systems under a range of conditions that might be difficult to achieve in real-world settings. In recommender systems, the use of synthetic data is somewhat limited; some work has concentrated on building user-item interaction data at large scale. We believe that fairness-aware recommendation research can benefit from simulated data as it allows the study of protected groups and their interactions without depending on sensitive data that needs privacy protection. In this paper, we propose a novel type of data for fairness-aware recommendation: synthetic recommender system outputs that can be used to study re-ranking algorithms.

Exploring Social Choice Mechanisms for Recommendation Fairness in SCRUF

Sep 10, 2023

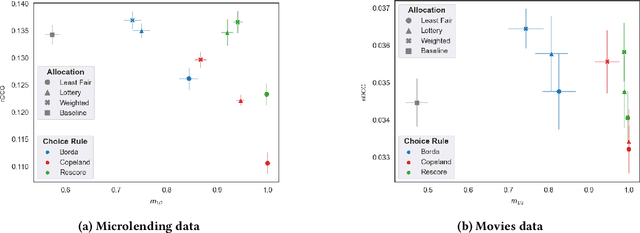

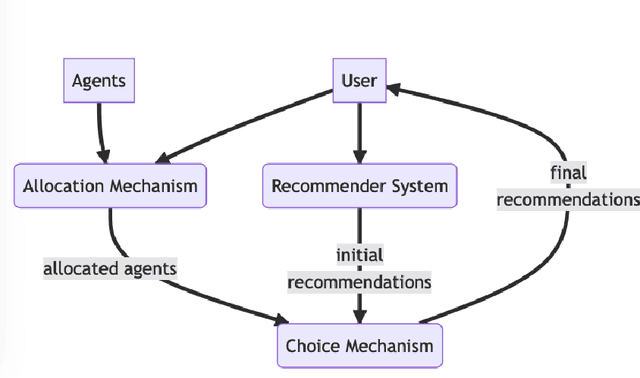

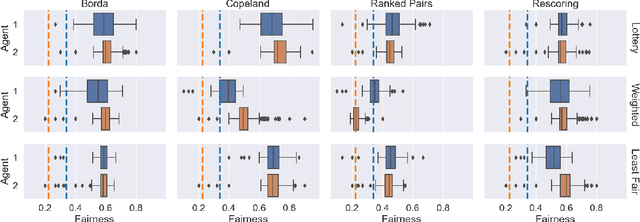

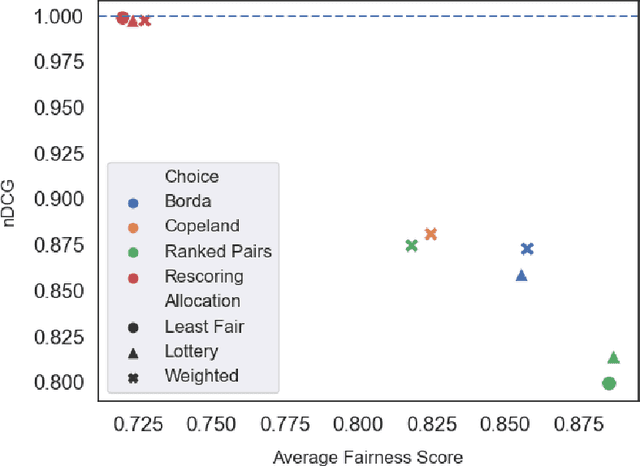

Abstract: Fairness problems in recommender systems often have a complexity in practice that is not adequately captured in simplified research formulations. A social choice formulation of the fairness problem, operating within a multi-agent architecture of fairness concerns, offers a flexible and multi-aspect alternative to fairness-aware recommendation approaches. Leveraging social choice allows for increased generality and the possibility of tapping into well-studied social choice algorithms for resolving the tension between multiple, competing fairness concerns. This paper explores a range of options for choice mechanisms in multi-aspect fairness applications using both real and synthetic data and shows that different classes of choice and allocation mechanisms yield different but consistent fairness/accuracy tradeoffs. We also show that a multi-agent formulation offers flexibility in adapting to user population dynamics.

Dynamic fairness-aware recommendation through multi-agent social choice

Mar 03, 2023

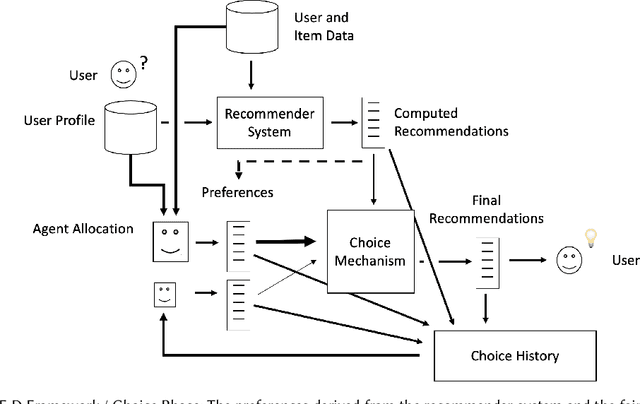

Abstract: Algorithmic fairness in the context of personalized recommendation presents significantly different challenges from those commonly encountered in classification tasks. Researchers studying classification have generally considered fairness to be a matter of achieving equality of outcomes between a protected and unprotected group, and built algorithmic interventions on this basis. We argue that fairness in real-world application settings in general, and especially in the context of personalized recommendation, is much more complex and multi-faceted, requiring a more general approach. We propose a model to formalize multistakeholder fairness in recommender systems as a two-stage social choice problem. In particular, we express recommendation fairness as a novel combination of an allocation and an aggregation problem, which integrate both fairness concerns and personalized recommendation provisions, and derive new recommendation techniques based on this formulation. Simulations demonstrate the ability of the framework to integrate multiple fairness concerns in a dynamic way.

Who Pays? Personalization, Bossiness and the Cost of Fairness

Sep 08, 2022

Abstract: Fairness-aware recommender systems that have a provider-side fairness concern seek to ensure that protected group(s) of providers have a fair opportunity to promote their items or products. There is a "cost of fairness" borne by the consumer side of the interaction when such a solution is implemented. This consumer-side cost raises its own questions of fairness, particularly when personalization is used to control the impact of the fairness constraint. In adopting a personalized approach to the fairness objective, researchers may be opening their systems up to strategic behavior on the part of users. This type of incentive has been studied in the computational social choice literature under the terminology of "bossiness". The concern is that a bossy user may be able to shift the cost of fairness to others, improving their own outcomes and worsening those for others. This position paper introduces the concept of bossiness, shows its application in fairness-aware recommendation and discusses strategies for reducing this strategic incentive.
