Julia Neidhardt

De-centering the (Traditional) User: Multistakeholder Evaluation of Recommender Systems

Jan 09, 2025

Abstract:Multistakeholder recommender systems are those that account for the impacts and preferences of multiple groups of individuals, not just the end users receiving recommendations. Due to their complexity, evaluating these systems cannot be restricted to the overall utility of a single stakeholder, as is often the case of more mainstream recommender system applications. In this article, we focus our discussion on the intricacies of the evaluation of multistakeholder recommender systems. We bring attention to the different aspects involved in the evaluation of multistakeholder recommender systems - from the range of stakeholders involved (including but not limited to producers and consumers) to the values and specific goals of each relevant stakeholder. Additionally, we discuss how to move from theoretical principles to practical implementation, providing specific use case examples. Finally, we outline open research directions for the RecSys community to explore. We aim to provide guidance to researchers and practitioners about how to think about these complex and domain-dependent issues of evaluation in the course of designing, developing, and researching applications with multistakeholder aspects.

AustroTox: A Dataset for Target-Based Austrian German Offensive Language Detection

Jun 12, 2024

Abstract:Model interpretability in toxicity detection greatly profits from token-level annotations. However, currently such annotations are only available in English. We introduce a dataset annotated for offensive language detection sourced from a news forum, notable for its incorporation of the Austrian German dialect, comprising 4,562 user comments. In addition to binary offensiveness classification, we identify spans within each comment constituting vulgar language or representing targets of offensive statements. We evaluate fine-tuned language models as well as large language models in a zero- and few-shot fashion. The results indicate that while fine-tuned models excel in detecting linguistic peculiarities such as vulgar dialect, large language models demonstrate superior performance in detecting offensiveness in AustroTox. We publish the data and code.

The Role of Bias in News Recommendation in the Perception of the Covid-19 Pandemic

Sep 15, 2022

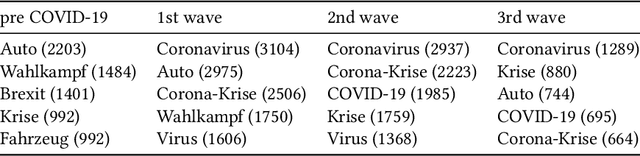

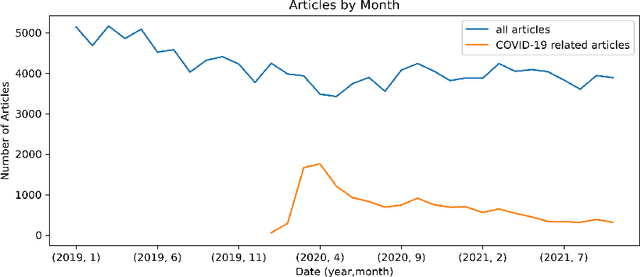

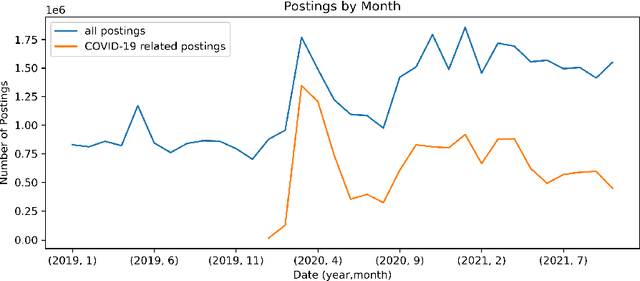

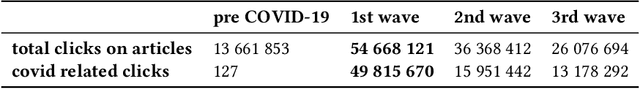

Abstract:News recommender systems (NRs) have been shown to shape public discourse and to enforce behaviors that have a critical, oftentimes detrimental effect on democracies. Earlier research on media bias has revealed its strong impact on opinions and preferences. Responsible NRs are supposed to have depolarizing capacities, once they go beyond accuracy measures. We performed sequence prediction by using the BERT4Rec algorithm to investigate the interplay of news coverage and user behavior. Based on live data and training on a large data set from one news outlet, "event bursts", the "rally around the flag" effect, and "filter bubbles" were investigated in our interdisciplinary approach between data science and psychology. Potentials for fair NRs that go beyond accuracy measures are outlined via training of the models with a large data set of articles, keywords, and user behavior. The development of the news coverage and user behavior during the COVID-19 pandemic, from primarily medical to broader political content and debates, was traced. Our study provides first insights for the future development of responsible news recommendation that acknowledges user preferences while stimulating diversity and accountability rather than accuracy alone.
