Martin Homola

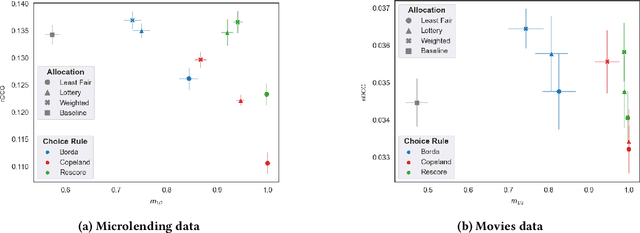

Social Choice for Heterogeneous Fairness in Recommendation

Oct 06, 2024

Abstract: Algorithmic fairness in recommender systems requires close attention to the needs of a diverse set of stakeholders that may have competing interests. Previous work in this area has often been limited by fixed, single-objective definitions of fairness, built into algorithms or optimization criteria that are applied to a single fairness dimension or, at most, applied identically across dimensions. These narrow conceptualizations limit the ability to adapt fairness-aware solutions to the wide range of stakeholder needs and fairness definitions that arise in practice. Our work approaches recommendation fairness from the standpoint of computational social choice, using a multi-agent framework. In this paper, we explore the properties of different social choice mechanisms and demonstrate the successful integration of multiple, heterogeneous fairness definitions across multiple data sets.

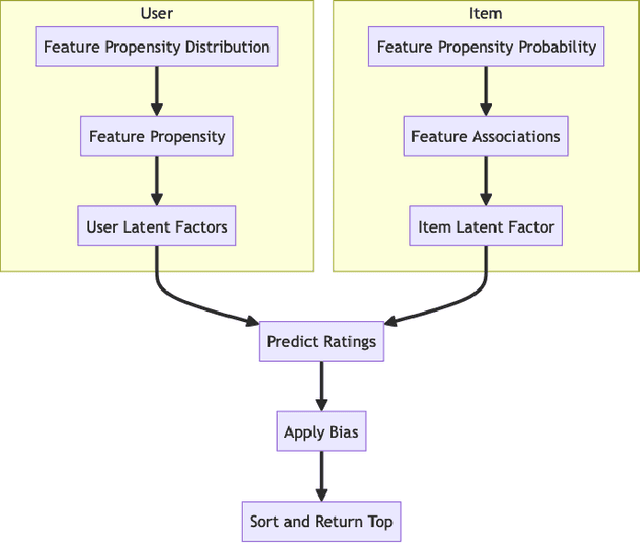

Data Generation via Latent Factor Simulation for Fairness-aware Re-ranking

Sep 21, 2024

Abstract: Synthetic data is a useful resource for algorithmic research. It allows for the evaluation of systems under a range of conditions that might be difficult to achieve in real-world settings. In recommender systems, the use of synthetic data is somewhat limited; some work has concentrated on building user-item interaction data at large scale. We believe that fairness-aware recommendation research can benefit from simulated data, as it allows the study of protected groups and their interactions without depending on sensitive data that needs privacy protection. In this paper, we propose a novel type of data for fairness-aware recommendation: synthetic recommender system outputs that can be used to study re-ranking algorithms.

Explainable Malware Detection with Tailored Logic Explained Networks

May 05, 2024

Abstract: Malware detection is a constant challenge in cybersecurity due to the rapid development of new attack techniques. Traditional signature-based approaches struggle to keep pace with the sheer volume of malware samples. Machine learning offers a promising solution, but faces issues of generalization to unseen samples and a lack of explanation for the instances identified as malware. However, human-understandable explanations are especially important in security-critical fields, where understanding model decisions is crucial for trust and legal compliance. While deep learning models excel at malware detection, their black-box nature hinders explainability. Conversely, interpretable models often fall short in performance. To bridge this gap in this application domain, we propose the use of Logic Explained Networks (LENs), a recently proposed class of interpretable neural networks that provide explanations in the form of First-Order Logic (FOL) rules. This paper extends the application of LENs to the complex domain of malware detection, specifically using the large-scale EMBER dataset. The experimental results show that LENs achieve robustness that exceeds that of traditional interpretable methods and rivals that of black-box models. Moreover, we introduce a tailored version of LENs that is shown to generate logic explanations with higher fidelity with respect to the model's predictions.

Workshop Notes of the 6th International Workshop on Acquisition, Representation and Reasoning about Context with Logic (ARCOE-Logic 2014)

Dec 30, 2014

Abstract: ARCOE-Logic 2014, the 6th International Workshop on Acquisition, Representation and Reasoning about Context with Logic, was held in co-location with the 19th International Conference on Knowledge Engineering and Knowledge Management (EKAW 2014) on November 25, 2014 in Linköping, Sweden. These notes contain the five papers which were accepted and presented at the workshop.

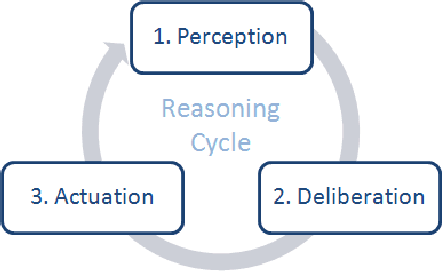

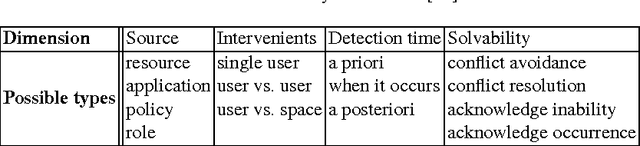

Different Types of Conflicting Knowledge in AmI Environments

Dec 26, 2014

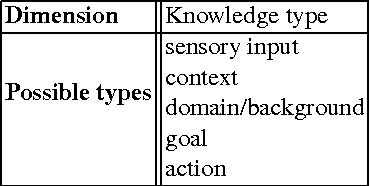

Abstract: We characterize different types of conflicts that may occur in complex distributed multi-agent scenarios, such as in Ambient Intelligence (AmI) environments, and we argue that these conflicts should be resolved in a suitable order and with the appropriate strategies for each individual conflict type. We call for further research with the goal of turning conflict resolution in AmI environments and similar multi-agent domains into a more coordinated and agreed-upon process.
