Masoud Mansoury

From Insight to Intervention: Interpretable Neuron Steering for Controlling Popularity Bias in Recommender Systems

Jan 21, 2026

Abstract: Popularity bias is a pervasive challenge in recommender systems, where a few popular items dominate attention while the majority of less popular items remain underexposed. This imbalance can reduce recommendation quality and lead to unfair item exposure. Although existing mitigation methods address this issue to some extent, they often lack transparency in how they operate. In this paper, we propose a post-hoc approach, PopSteer, that leverages a Sparse Autoencoder (SAE) to both interpret and mitigate popularity bias in recommendation models. The SAE is trained to replicate a trained model's behavior while enabling neuron-level interpretability. By introducing synthetic users with strong preferences for either popular or unpopular items, we identify neurons encoding popularity signals through their activation patterns. We then steer recommendations by adjusting the activations of the most biased neurons. Experiments on three public datasets with a sequential recommendation model demonstrate that PopSteer significantly enhances fairness with minimal impact on accuracy, while providing interpretable insights and fine-grained control over the fairness-accuracy trade-off.
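
The identify-then-steer idea described in this abstract can be illustrated with a minimal sketch. It is not the PopSteer implementation: the function names `popularity_neurons` and `steer`, the use of a mean activation gap as the bias score, and the subtractive update are all assumptions made for illustration, and the activations are plain Python lists rather than real SAE outputs.

```python
def popularity_neurons(acts_pop, acts_unpop, top_n):
    """Rank neurons by the mean activation gap between two synthetic user groups.

    acts_pop / acts_unpop: lists of activation vectors (one per synthetic user)
    for users preferring popular / unpopular items. Hypothetical helper.
    """
    n = len(acts_pop[0])

    def mean_col(rows, j):
        return sum(r[j] for r in rows) / len(rows)

    # Positive gap: the neuron fires more for popular-item users.
    gaps = [mean_col(acts_pop, j) - mean_col(acts_unpop, j) for j in range(n)]
    ranked = sorted(range(n), key=lambda j: abs(gaps[j]), reverse=True)
    return ranked[:top_n], gaps


def steer(activation, neurons, gaps, strength=1.0):
    """Shift the selected neurons against their popularity gap (assumed update rule)."""
    steered = list(activation)
    for j in neurons:
        steered[j] -= strength * gaps[j]
    return steered


acts_pop = [[1.0, 0.0, 0.5], [0.8, 0.2, 0.5]]
acts_unpop = [[0.1, 0.9, 0.5], [0.1, 0.7, 0.5]]
neurons, gaps = popularity_neurons(acts_pop, acts_unpop, top_n=1)
steered = steer([1.0, 0.5, 0.5], neurons, gaps, strength=0.5)
```

In this sketch `strength` plays the role of the control knob over the fairness-accuracy trade-off: larger values move the selected activations further from the popularity-associated pattern.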

The Unfairness of Multifactorial Bias in Recommendation

Jan 19, 2026

Abstract: Popularity bias and positivity bias are two prominent sources of bias in recommender systems. Both arise from input data, propagate through recommendation models, and lead to unfair or suboptimal outcomes. Popularity bias occurs when a small subset of items receives most interactions, while positivity bias stems from the over-representation of high rating values. Although each bias has been studied independently, their combined effect, which we refer to as multifactorial bias, remains underexplored. In this work, we examine how multifactorial bias influences item-side fairness, focusing on exposure bias, which reflects the unequal visibility of items in recommendation outputs. Through simulation studies, we find that positivity bias is disproportionately concentrated on popular items, further amplifying their over-exposure. Motivated by this insight, we adapt a percentile-based rating transformation as a pre-processing strategy to mitigate multifactorial bias. Experiments using six recommendation algorithms across four public datasets show that this approach improves exposure fairness with negligible accuracy loss. We also demonstrate that integrating this pre-processing step into post-processing fairness pipelines enhances their effectiveness and efficiency, enabling comparable or better fairness with reduced computational cost. These findings highlight the importance of addressing multifactorial bias and demonstrate the practical value of simple, data-driven pre-processing methods for improving fairness in recommender systems.
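
The abstract does not spell out the percentile-based rating transformation it adapts. The sketch below shows one common form, assumed here for illustration: each rating is replaced by its mid-rank percentile within the same user's ratings, so a 5 from a user who rates everything 5 counts for less than a 5 from a harsher rater. The function name `percentile_transform` and the per-user grouping are assumptions.

```python
from collections import defaultdict


def percentile_transform(ratings):
    """Map each (user, item, rating) to (user, item, percentile in [0, 100]).

    The percentile is computed within each user's own ratings using the
    mid-rank convention: ties count as half below. Assumed variant.
    """
    by_user = defaultdict(list)
    for user, _item, r in ratings:
        by_user[user].append(r)

    out = []
    for user, item, r in ratings:
        vals = by_user[user]
        below = sum(1 for v in vals if v < r)
        equal = sum(1 for v in vals if v == r)
        pct = 100.0 * (below + 0.5 * equal) / len(vals)
        out.append((user, item, pct))
    return out


ratings = [
    ("u1", "a", 5), ("u1", "b", 5), ("u1", "c", 4),  # generous rater
    ("u2", "a", 5), ("u2", "b", 1),                  # discriminating rater
]
transformed = percentile_transform(ratings)
```

Here the same raw rating of 5 for item "a" maps to a lower percentile for the generous user "u1" than for "u2", which is the kind of effect that would dampen positivity bias before training.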

Opening the Black Box: Interpretable Remedies for Popularity Bias in Recommender Systems

Aug 24, 2025

Abstract: Popularity bias is a well-known challenge in recommender systems, where a small number of popular items receive disproportionate attention, while the majority of less popular items are largely overlooked. This imbalance often results in reduced recommendation quality and unfair exposure of items. Although existing mitigation techniques address this bias to some extent, they typically lack transparency in how they operate. In this paper, we propose a post-hoc method using a Sparse Autoencoder (SAE) to interpret and mitigate popularity bias in deep recommendation models. The SAE is trained to replicate a pre-trained model's behavior while enabling neuron-level interpretability. By introducing synthetic users with clear preferences for either popular or unpopular items, we identify neurons encoding popularity signals based on their activation patterns. We then adjust the activations of the most biased neurons to steer recommendations toward fairer exposure. Experiments on two public datasets using a sequential recommendation model show that our method significantly improves fairness with minimal impact on accuracy. Moreover, it offers interpretability and fine-grained control over the fairness-accuracy trade-off.

Mitigating Popularity Bias in Counterfactual Explanations using Large Language Models

Aug 12, 2025

Abstract: Counterfactual explanations (CFEs) offer a tangible and actionable way to explain recommendations by showing users a "what-if" scenario that demonstrates how small changes in their history would alter the system's output. However, existing CFE methods are susceptible to bias, generating explanations that might misalign with the user's actual preferences. In this paper, we propose a pre-processing step that leverages large language models to filter out-of-character history items before generating an explanation. In experiments on two public datasets, we focus on popularity bias and apply our approach to ACCENT, a neural CFE framework. We find that it creates counterfactuals that are more closely aligned with each user's popularity preferences than ACCENT alone.

Using LLMs to Capture Users' Temporal Context for Recommendation

Aug 11, 2025

Abstract: Effective recommender systems demand dynamic user understanding, especially in complex, evolving environments. Traditional user profiling often fails to capture the nuanced, temporal contextual factors of user preferences, such as transient short-term interests and enduring long-term tastes. This paper presents an assessment of Large Language Models (LLMs) for generating semantically rich, time-aware user profiles. We do not propose a novel end-to-end recommendation architecture; instead, the core contribution is a systematic investigation into the degree of LLM effectiveness in capturing the dynamics of user context by disentangling short-term and long-term preferences. This approach, framing temporal preferences as dynamic user contexts for recommendations, adaptively fuses these distinct contextual components into comprehensive user embeddings. The evaluation across Movies&TV and Video Games domains suggests that while LLM-generated profiles offer semantic depth and temporal structure, their effectiveness for context-aware recommendations is notably contingent on the richness of user interaction histories. Significant gains are observed in dense domains (e.g., Movies&TV), whereas improvements are less pronounced in sparse environments (e.g., Video Games). This work highlights LLMs' nuanced potential in enhancing user profiling for adaptive, context-aware recommendations, emphasizing the critical role of dataset characteristics for practical applicability.

Temporal User Profiling with LLMs: Balancing Short-Term and Long-Term Preferences for Recommendations

Aug 11, 2025

Abstract: Accurately modeling user preferences is crucial for improving the performance of content-based recommender systems. Existing approaches often rely on simplistic user profiling methods, such as averaging or concatenating item embeddings, which fail to capture the nuanced nature of user preference dynamics, particularly the interactions between long-term and short-term preferences. In this work, we propose LLM-driven Temporal User Profiling (LLM-TUP), a novel method for user profiling that explicitly models short-term and long-term preferences by leveraging interaction timestamps and generating natural language representations of user histories using a large language model (LLM). These representations are encoded into high-dimensional embeddings using a pre-trained BERT model, and an attention mechanism is applied to dynamically fuse the short-term and long-term embeddings into a comprehensive user profile. Experimental results on real-world datasets demonstrate that LLM-TUP achieves substantial improvements over several baselines, underscoring the effectiveness of our temporally aware user-profiling approach and the use of semantically rich user profiles, generated by LLMs, for personalized content-based recommendation.

Towards Explainable Temporal User Profiling with LLMs

May 01, 2025

Abstract: Accurately modeling user preferences is vital not only for improving recommendation performance but also for enhancing transparency in recommender systems. Conventional user profiling methods, such as averaging item embeddings, often overlook the evolving, nuanced nature of user interests, particularly the interplay between short-term and long-term preferences. In this work, we leverage large language models (LLMs) to generate natural language summaries of users' interaction histories, distinguishing recent behaviors from more persistent tendencies. Our framework not only models temporal user preferences but also produces natural language profiles that can be used to explain recommendations in an interpretable manner. These textual profiles are encoded via a pre-trained model, and an attention mechanism dynamically fuses the short-term and long-term embeddings into a comprehensive user representation. Beyond boosting recommendation accuracy over multiple baselines, our approach naturally supports explainability: the interpretable text summaries and attention weights can be exposed to end users, offering insights into why specific items are suggested. Experiments on real-world datasets underscore both the performance gains and the promise of generating clearer, more transparent justifications for content-based recommendations.

Towards Carbon Footprint-Aware Recommender Systems for Greener Item Recommendation

Mar 21, 2025

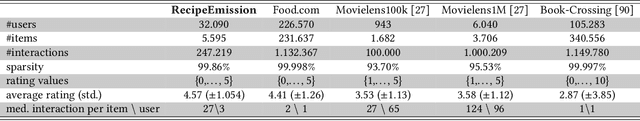

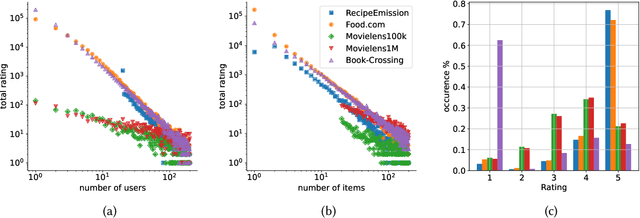

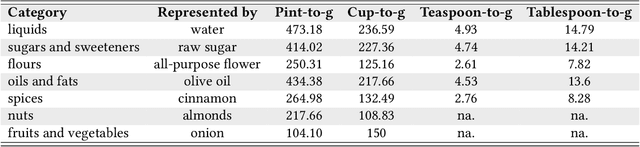

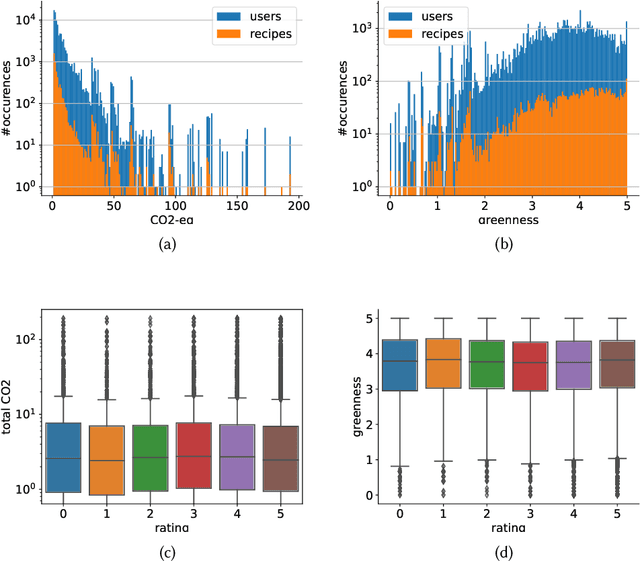

Abstract: The commoditization and widespread use of online shopping are having an unprecedented impact on climate, with emission figures from key actors that are easily comparable to those of a large-scale metropolis. Despite online shopping being fueled by recommender systems (RecSys) algorithms, the role and potential of the latter in promoting more sustainable choices is little studied. One of the main reasons for this could be attributed to the lack of a dataset containing carbon footprint emissions for the items. While building such a dataset is a rather challenging task, its presence is pivotal for opening the doors to novel perspectives, evaluations, and methods for RecSys research. In this paper, we target this bottleneck and study the environmental role of RecSys algorithms. First, we mine a dataset that includes carbon footprint emissions for its items. Then, we benchmark conventional RecSys algorithms in terms of accuracy and sustainability as two faces of the same coin. We find that RecSys algorithms optimized for accuracy overlook greenness and that longer recommendation lists are greener but less accurate. Then, we show that a simple reranking approach that accounts for the item's carbon footprint can establish a better trade-off between accuracy and greenness. This reranking approach is modular, ready to use, and can be applied to any RecSys algorithm without the need to alter the underlying mechanisms or retrain models. Our results show that a small sacrifice of accuracy can lead to significant improvements in recommendation greenness across all algorithms and list lengths. Arguably, this accuracy-greenness trade-off could even be seen as an enhancement of user satisfaction, particularly for purpose-driven users who prioritize the environmental impact of their choices. We anticipate this work will serve as the starting point for studying RecSys for more sustainable recommendations.
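
A footprint-aware reranker of the kind this abstract describes can be sketched as a post-processing step over any model's candidate scores. The scoring rule below (a linear blend of min-max normalized relevance and footprint, weighted by `lam`) is an assumption for illustration, not the formula from the paper, and `rerank_by_footprint` is a hypothetical name.

```python
def rerank_by_footprint(candidates, lam=0.3, k=10):
    """Rerank (item, relevance, footprint) triples and return the top-k items.

    lam = 0 keeps the original relevance order; lam = 1 ranks purely by
    low carbon footprint. Both signals are min-max normalized first.
    """
    if not candidates:
        return []

    rels = [c[1] for c in candidates]
    fps = [c[2] for c in candidates]

    def norm(x, lo, hi):
        # Guard against a constant column to avoid dividing by zero.
        return 0.0 if hi == lo else (x - lo) / (hi - lo)

    scored = [
        (
            item,
            (1 - lam) * norm(rel, min(rels), max(rels))
            - lam * norm(fp, min(fps), max(fps)),
        )
        for item, rel, fp in candidates
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in scored[:k]]


# Illustrative values only: (item, model relevance, footprint in arbitrary units).
candidates = [("a", 0.9, 50.0), ("b", 0.8, 5.0), ("c", 0.1, 1.0)]
accuracy_only = rerank_by_footprint(candidates, lam=0.0)
balanced = rerank_by_footprint(candidates, lam=0.3)
greenest = rerank_by_footprint(candidates, lam=1.0)
```

Because the function only consumes scores, it needs no access to the underlying model, which matches the modular, no-retraining property the abstract emphasizes.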

Correcting for Popularity Bias in Recommender Systems via Item Loss Equalization

Oct 07, 2024

Abstract: Recommender Systems (RS) often suffer from popularity bias, where a small set of popular items dominate the recommendation results due to their high interaction rates, leaving many less popular items overlooked. This phenomenon disproportionately benefits users with mainstream tastes while neglecting those with niche interests, leading to unfairness among users and exacerbating disparities in recommendation quality across different user groups. In this paper, we propose an in-processing approach to address this issue by intervening in the training process of recommendation models. Drawing inspiration from fair empirical risk minimization in machine learning, we augment the objective function of the recommendation model with an additional term aimed at minimizing the disparity in loss values across different item groups during the training process. Our approach is evaluated through extensive experiments on two real-world datasets and compared against state-of-the-art baselines. The results demonstrate the superior efficacy of our method in mitigating the unfairness of popularity bias while incurring only negligible loss in recommendation accuracy.
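
The augmented objective described here, a base loss plus a term penalizing the disparity in loss across item groups, can be sketched as follows. The abstract does not give the exact disparity term, so the gap between the largest and smallest group-mean loss is an assumption, as are the name `equalized_loss` and the weight `alpha`.

```python
def equalized_loss(losses, groups, alpha=1.0):
    """Return mean loss plus alpha times the spread of group-mean losses.

    losses: per-interaction loss values from any recommendation model.
    groups: parallel list of item-group labels (e.g. "pop" / "tail").
    The max-min spread is an assumed stand-in for the paper's disparity term.
    """
    sums, counts = {}, {}
    for loss, group in zip(losses, groups):
        sums[group] = sums.get(group, 0.0) + loss
        counts[group] = counts.get(group, 0) + 1

    group_means = [sums[g] / counts[g] for g in sums]
    base = sum(losses) / len(losses)
    disparity = max(group_means) - min(group_means)
    return base + alpha * disparity


losses = [0.2, 0.4, 1.0, 1.2]
groups = ["pop", "pop", "tail", "tail"]
total = equalized_loss(losses, groups, alpha=0.5)
```

With `alpha = 0` the objective reduces to the ordinary mean loss; increasing `alpha` pushes training toward fitting popular and long-tail items equally well.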

Mitigating Exposure Bias in Online Learning to Rank Recommendation: A Novel Reward Model for Cascading Bandits

Aug 08, 2024

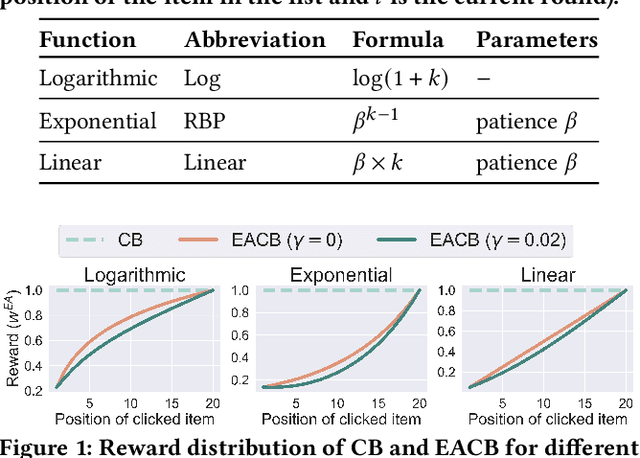

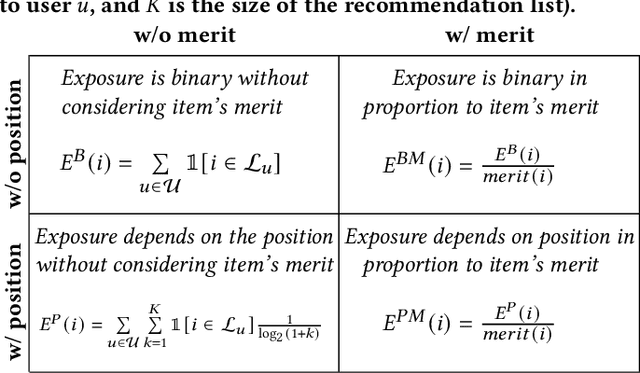

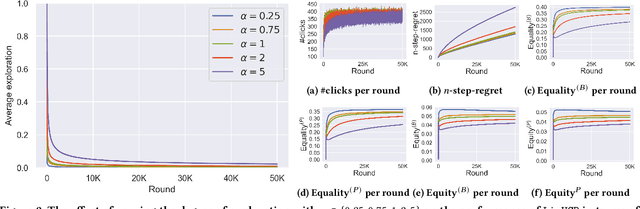

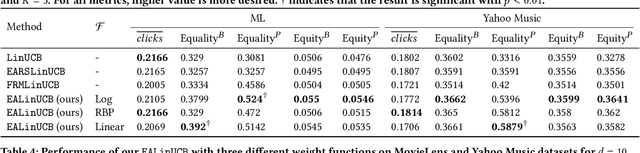

Abstract: Exposure bias is a well-known issue in recommender systems where items and suppliers are not equally represented in the recommendation results. This bias becomes particularly problematic over time as a few items are repeatedly over-represented in recommendation lists, leading to a feedback loop that further amplifies this bias. Although extensive research has addressed this issue in model-based or neighborhood-based recommendation algorithms, less attention has been paid to online recommendation models, such as those based on top-K contextual bandits, where recommendation models are dynamically updated with ongoing user feedback. In this paper, we study exposure bias in a class of well-known contextual bandit algorithms known as Linear Cascading Bandits. We analyze these algorithms in their ability to handle exposure bias and provide a fair representation of items in the recommendation results. Our analysis reveals that these algorithms fail to mitigate exposure bias in the long run during the course of ongoing user interactions. We propose an Exposure-Aware reward model that updates the model parameters based on two factors: 1) implicit user feedback and 2) the position of the item in the recommendation list. The proposed model mitigates exposure bias by controlling the utility assigned to the items based on their exposure in the recommendation list. Our experiments with two real-world datasets show that our proposed reward model improves the exposure fairness of the linear cascading bandits over time while maintaining the recommendation accuracy. It also outperforms the current baselines. Finally, we prove a high-probability upper regret bound for our proposed model, providing theoretical guarantees for its performance.
