Farzad Eskandanian

Beyond Static Calibration: The Impact of User Preference Dynamics on Calibrated Recommendation

May 16, 2024

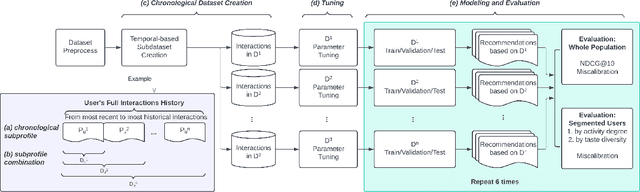

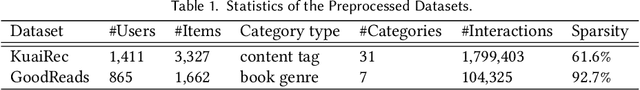

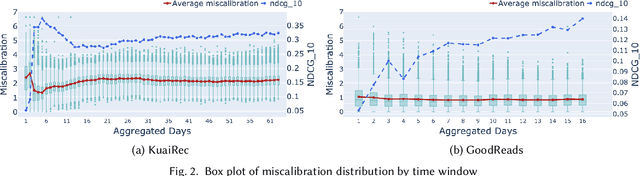

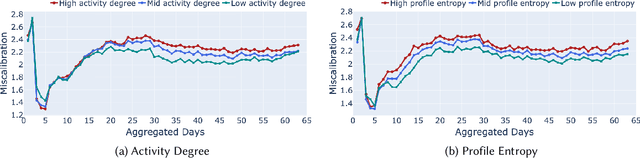

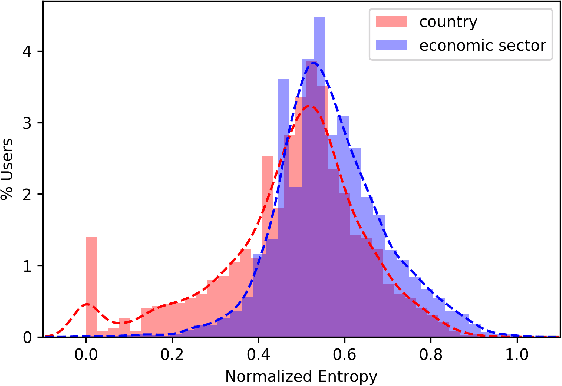

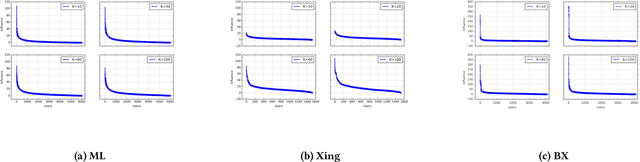

Abstract: Calibration in recommender systems is an important performance criterion that ensures consistency between the distribution of user preference categories and that of the recommendations generated by the system. Standard methods for mitigating miscalibration typically assume that user preference profiles are static, and they measure calibration relative to the full history of a user's interactions, including possibly outdated and stale preference categories. We conjecture that this approach can lead to recommendations that, while appearing calibrated, in fact distort users' true preferences. In this paper, we conduct a preliminary investigation of recommendation calibration at a more granular level, taking into account evolving user preferences. By analyzing differently sized training time windows from the most recent interactions to the oldest, we identify the most relevant segment of a user's preferences that optimizes the calibration metric. We perform an exploratory analysis with datasets from different domains with distinctive user-interaction characteristics. We demonstrate how the evolving nature of user preferences affects recommendation calibration, and how this effect is manifested differently depending on the characteristics of the data in a given domain. Datasets, code, and more detailed experimental results are available at: https://github.com/nicolelin13/DynamicCalibrationUMAP.
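
The idea of measuring calibration against differently sized recent windows can be illustrated with a minimal sketch. It assumes a KL-divergence miscalibration score with a small smoothing term `alpha`, which is a common choice in the calibrated-recommendation literature; the abstract does not state which metric the paper uses. The function names, the toy data, and the window sizes are all hypothetical.

```python
import math
from collections import Counter

def category_distribution(categories):
    """Normalized frequency of each category label in a non-empty list."""
    counts = Counter(categories)
    total = sum(counts.values())
    return {c: n / total for c, n in counts.items()}

def kl_miscalibration(profile, recs, alpha=0.01):
    """KL(p || q~), where q~ = (1 - alpha) * q + alpha * p.

    p is the category distribution of the user's profile, q that of the
    recommendation list. The smoothing (an assumption) keeps q~ positive.
    """
    p = category_distribution(profile)
    q = category_distribution(recs)
    score = 0.0
    for c, p_c in p.items():
        q_c = (1 - alpha) * q.get(c, 0.0) + alpha * p_c
        score += p_c * math.log(p_c / q_c)
    return score

def miscalibration_by_window(history, recs, window_sizes):
    """Score the same recommendations against the k most recent interactions.

    history is ordered oldest to newest; every k must be a positive integer.
    """
    return {k: kl_miscalibration(history[-k:], recs) for k in window_sizes}

# Toy user whose taste drifted from drama to comedy (hypothetical data).
history = ["drama"] * 6 + ["comedy"] * 4
recs = ["comedy"] * 5
scores = miscalibration_by_window(history, recs, window_sizes=[4, 10])
```

In this toy case the comedy-only recommendations look well calibrated against the last four interactions but poorly calibrated against the full history, which is the kind of discrepancy the abstract describes.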

"And the Winner Is": Dynamic Lotteries for Multi-group Fairness-Aware Recommendation

Sep 05, 2020

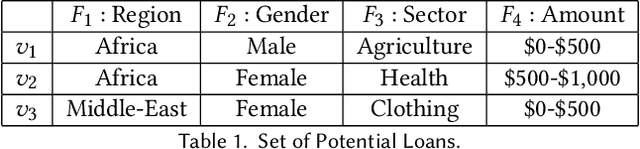

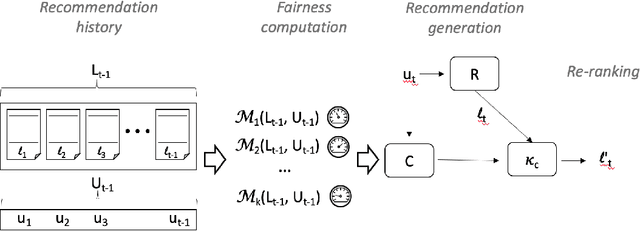

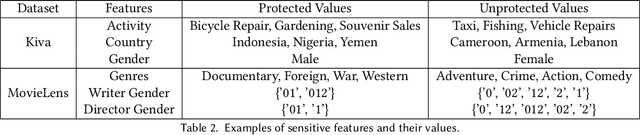

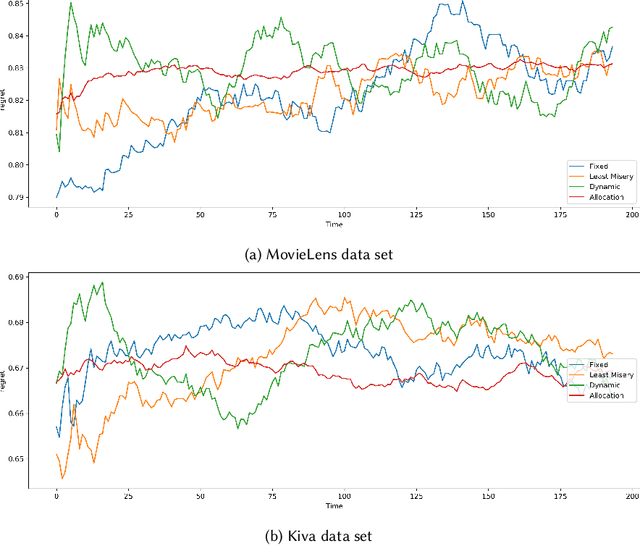

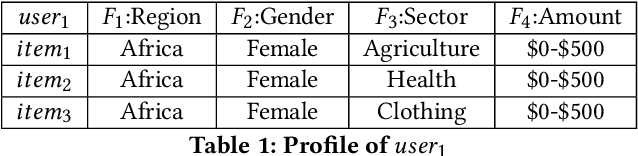

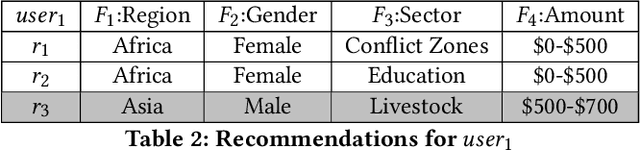

Abstract: As recommender systems are being designed and deployed for an increasing number of socially consequential applications, it has become important to consider what properties of fairness these systems exhibit. There has been considerable research on recommendation fairness. However, we argue that the previous literature has been based on simple, uniform, and often uni-dimensional notions of fairness, assumptions that do not recognize the real-world complexities of fairness-aware applications. In this paper, we explicitly represent the design decisions that enter into the trade-off between accuracy and fairness across multiply-defined and intersecting protected groups, supporting multiple fairness metrics. The framework also allows the recommender to adjust its performance based on the historical view of recommendations that have been delivered over a time horizon, dynamically rebalancing between fairness concerns. Within this framework, we formulate lottery-based mechanisms for choosing between fairness concerns, and demonstrate their performance in two recommendation domains.
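
One way to picture a lottery over fairness concerns is sketched below. This is not the paper's mechanism, which the abstract does not specify. It assumes each concern has a target exposure share and an observed share over some past horizon, and it draws a concern with probability proportional to its shortfall. The names `lottery_weights`, `draw_concern`, `targets`, and `observed` are hypothetical.

```python
import random

def lottery_weights(targets, observed):
    """Probability of picking each concern, proportional to its exposure shortfall.

    targets / observed map a concern name to its target and historical exposure.
    If no concern is below target, fall back to a uniform lottery (an assumption).
    """
    deficits = {g: max(targets[g] - observed.get(g, 0.0), 0.0) for g in targets}
    total = sum(deficits.values())
    if total == 0:
        return {g: 1 / len(targets) for g in targets}
    return {g: d / total for g, d in deficits.items()}

def draw_concern(targets, observed, rng):
    """Randomly select the fairness concern to prioritize for the next list."""
    weights = lottery_weights(targets, observed)
    concerns = list(weights)
    return rng.choices(concerns, weights=[weights[g] for g in concerns], k=1)[0]

# Hypothetical concerns: A has met its target, B is under-exposed.
targets = {"A": 0.3, "B": 0.3}
observed = {"A": 0.3, "B": 0.1}
winner = draw_concern(targets, observed, random.Random(0))
```

Recomputing `observed` after each batch of recommendations would shift the weights over time, which is one simple reading of "dynamically rebalancing between fairness concerns".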

Using Stable Matching to Optimize the Balance between Accuracy and Diversity in Recommendation

Jun 05, 2020

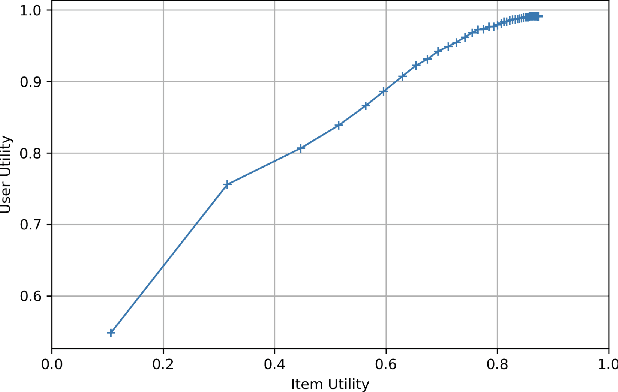

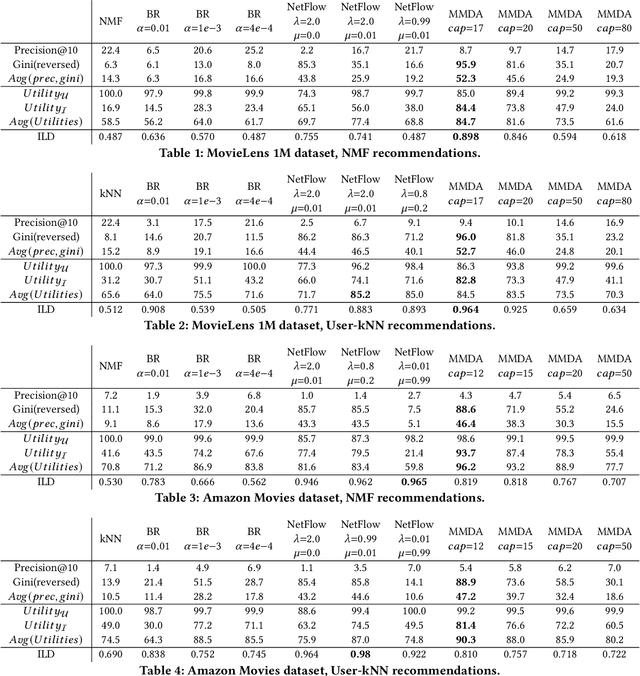

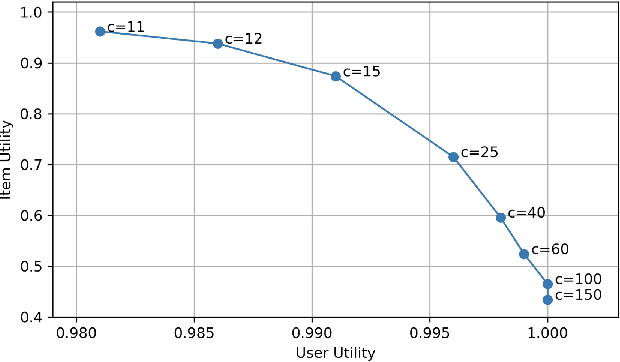

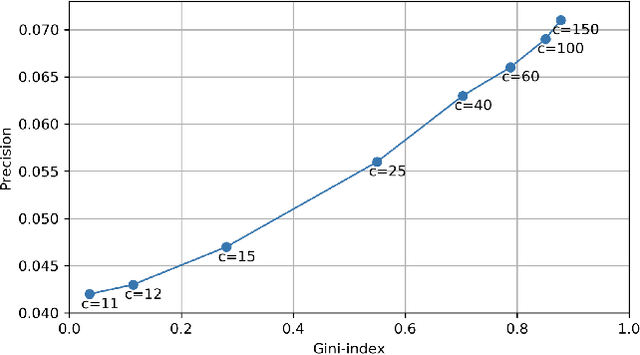

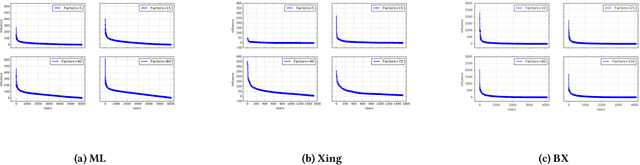

Abstract: Increasing aggregate diversity (or catalog coverage) is an important system-level objective in many recommendation domains where it may be desirable to mitigate popularity bias and to improve the coverage of long-tail items in recommendations given to users. This is especially important in multistakeholder recommendation scenarios where it may be important to optimize utilities not just for the end user, but also for other stakeholders such as item sellers or producers who desire a fair representation of their items across recommendation lists produced by the system. Unfortunately, attempts to increase aggregate diversity often result in lower recommendation accuracy for end users. Thus, addressing this problem requires an approach that can effectively manage the trade-offs between accuracy and aggregate diversity. In this work, we propose a two-sided post-processing approach in which both user and item utilities are considered. Our goal is to maximize aggregate diversity while minimizing loss in recommendation accuracy. Our solution is a generalization of the Deferred Acceptance algorithm, which was proposed as an efficient algorithm to solve the well-known stable matching problem. We prove that our algorithm results in a unique user-optimal stable match between items and users. Using three recommendation datasets, we empirically demonstrate the effectiveness of our approach in comparison to several baselines. In particular, our results show that the proposed solution is quite effective in increasing aggregate diversity and item-side utility while optimizing recommendation accuracy for end users.
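
For readers unfamiliar with Deferred Acceptance, the textbook user-proposing version with item capacities can be sketched as follows. This is the classic algorithm, not the generalization proposed in the paper. The preference lists, the `capacity` map, and the function name are hypothetical, and every item a user lists is assumed to have an entry in `item_prefs`.

```python
def deferred_acceptance(user_prefs, item_prefs, capacity):
    """User-proposing deferred acceptance (many-to-one).

    user_prefs: user -> list of items, most preferred first
    item_prefs: item -> list of acceptable users, most preferred first
    capacity:   item -> maximum number of users the item can hold
    Returns a dict user -> item; unmatched users are omitted.
    """
    # Lower rank means the item prefers that user more.
    rank = {i: {u: r for r, u in enumerate(prefs)} for i, prefs in item_prefs.items()}
    next_choice = {u: 0 for u in user_prefs}
    held = {i: [] for i in item_prefs}
    free = list(user_prefs)
    while free:
        u = free.pop()
        if next_choice[u] >= len(user_prefs[u]):
            continue  # u has exhausted its list and stays unmatched
        i = user_prefs[u][next_choice[u]]
        next_choice[u] += 1
        if u not in rank[i]:
            free.append(u)  # item i does not accept u; u proposes again later
            continue
        held[i].append(u)
        if len(held[i]) > capacity[i]:
            # Over capacity: keep the best-ranked users, reject the worst one.
            held[i].sort(key=lambda x: rank[i][x])
            free.append(held[i].pop())
    return {u: i for i, users in held.items() for u in users}

# Hypothetical toy instance: everyone wants x first, but x holds one user.
user_prefs = {"a": ["x", "y"], "b": ["x", "y"], "c": ["x", "y"]}
item_prefs = {"x": ["b", "a", "c"], "y": ["a", "c", "b"]}
capacity = {"x": 1, "y": 2}
matching = deferred_acceptance(user_prefs, item_prefs, capacity)
```

Here `x` keeps its top-ranked user `b`, and the two rejected users fall back to `y`, so the less contested item still receives exposure.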

Opportunistic Multi-aspect Fairness through Personalized Re-ranking

May 21, 2020

Abstract: As recommender systems have become more widespread and moved into areas with greater social impact, such as employment and housing, researchers have begun to seek ways to ensure fairness in the results that such systems produce. This work has primarily focused on developing recommendation approaches in which fairness metrics are jointly optimized along with recommendation accuracy. However, previous work has largely ignored how individual preferences may limit the ability of an algorithm to produce fair recommendations. Furthermore, with few exceptions, researchers have only considered scenarios in which fairness is measured relative to a single sensitive feature or attribute (such as race or gender). In this paper, we present a re-ranking approach to fairness-aware recommendation that learns individual preferences across multiple fairness dimensions and uses them to enhance provider fairness in recommendation results. Specifically, we show that our opportunistic and metric-agnostic approach achieves a better trade-off between accuracy and fairness than prior re-ranking approaches and does so across multiple fairness dimensions.

Power of the Few: Analyzing the Impact of Influential Users in Collaborative Recommender Systems

May 14, 2019

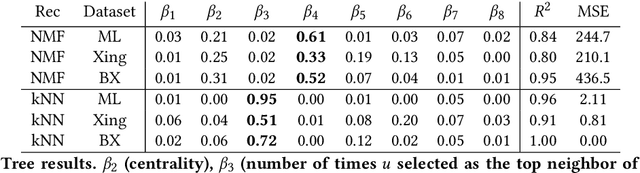

Abstract: As in other social systems, in collaborative filtering a small number of "influential" users may have a large impact on the recommendations of other users, thus affecting the overall behavior of the system. Identifying influential users and studying their impact on other users is an important problem because it provides insight into how small groups can inadvertently or intentionally affect the behavior of the system as a whole. Modeling these influences can also shed light on patterns and relationships that would otherwise be difficult to discern, hopefully leading to more transparency in how the system generates personalized content. In this work, we first formalize the notion of "influence" in collaborative filtering using an Influence Discrimination Model. We then empirically identify and characterize influential users and analyze their impact on the system under different underlying recommendation algorithms and across three different recommendation domains: job, movie, and book recommendations. Insights from these experiments can help in designing systems that are not only optimized for accuracy, but are also tuned to mitigate the impact of influential users when it might lead to potential imbalance or unfairness in the system's outcomes.

Modeling the Dynamics of User Preferences for Sequence-Aware Recommendation Using Hidden Markov Models

May 14, 2019

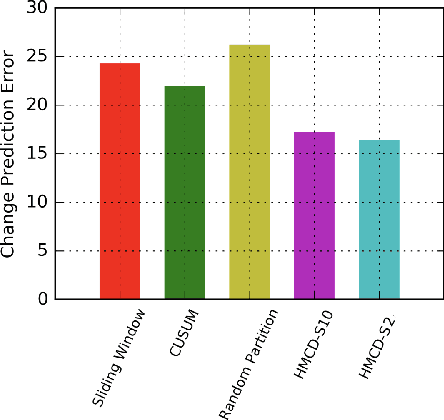

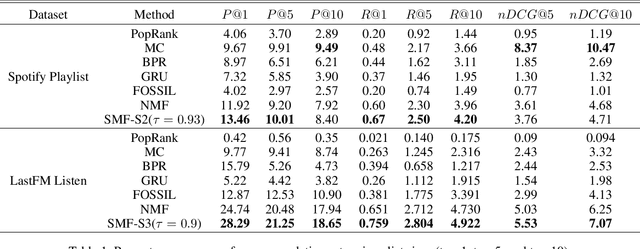

Abstract: In a variety of online settings involving interaction with end users, it is critical for systems to adapt to changes in user preferences. User preferences for items tend to change over time due to a variety of factors such as a change in context, the task being performed, or other short-term or long-term external factors. Recommender systems need to be able to capture these dynamics in user preferences in order to remain tuned to the most current interests of users. In this work, we present a recommendation framework that takes into account the dynamics of user preferences. We propose an approach based on Hidden Markov Models (HMM) to identify change points in the sequence of user interactions that reflect significant changes in preference according to the sequential behavior of all the users in the data. The proposed framework leverages the identified change points to generate recommendations using a sequence-aware non-negative matrix factorization model. We empirically demonstrate the effectiveness of the HMM-based change detection method as compared to standard baseline methods. Additionally, we evaluate the performance of the proposed recommendation method and show that it compares favorably to state-of-the-art sequence-aware recommendation models.
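
A rough illustration of HMM-based change-point detection is to decode the most likely hidden-state path with the Viterbi algorithm and mark every position where the decoded state switches. The sketch below assumes a discrete HMM with fixed, hand-picked toy parameters; in practice the parameters would be learned from data, and the abstract does not describe the paper's exact procedure. All names and values are hypothetical.

```python
import math

def viterbi(obs, start_p, trans_p, emit_p):
    """Most likely hidden-state path for a discrete HMM, computed in log space."""
    states = list(start_p)

    def log(x):
        return math.log(x) if x > 0 else float("-inf")

    score = {s: log(start_p[s]) + log(emit_p[s][obs[0]]) for s in states}
    back = []  # back[t - 1][s] = best predecessor of state s at time t
    for o in obs[1:]:
        prev, score, ptr = score, {}, {}
        for s in states:
            best = max(states, key=lambda r: prev[r] + log(trans_p[r][s]))
            score[s] = prev[best] + log(trans_p[best][s]) + log(emit_p[s][o])
            ptr[s] = best
        back.append(ptr)
    # Trace the best path backwards from the best final state.
    path = [max(states, key=lambda s: score[s])]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    return path[::-1]

def change_points(path):
    """Indices t where the decoded hidden state differs from the one at t - 1."""
    return [t for t in range(1, len(path)) if path[t] != path[t - 1]]

# Toy model: two latent "preference modes" that mostly emit different item types.
start_p = {"s1": 0.5, "s2": 0.5}
trans_p = {"s1": {"s1": 0.9, "s2": 0.1}, "s2": {"s1": 0.1, "s2": 0.9}}
emit_p = {"s1": {"a": 0.9, "b": 0.1}, "s2": {"a": 0.1, "b": 0.9}}
path = viterbi(["a", "a", "a", "b", "b", "b"], start_p, trans_p, emit_p)
points = change_points(path)
```

For this toy sequence the decoded path switches state once, at the fourth interaction, so the interactions before and after that index could be treated as separate preference segments.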
