Raphael Memmesheimer

OMCL: Open-vocabulary Monte Carlo Localization

Dec 17, 2025

Abstract:Robust robot localization is an important prerequisite for navigation planning. If the environment map was created from different sensors, robot measurements must be robustly associated with map features. In this work, we extend Monte Carlo Localization using vision-language features. These open-vocabulary features enable to robustly compute the likelihood of visual observations, given a camera pose and a 3D map created from posed RGB-D images or aligned point clouds. The abstract vision-language features enable to associate observations and map elements from different modalities. Global localization can be initialized by natural language descriptions of the objects present in the vicinity of locations. We evaluate our approach using Matterport3D and Replica for indoor scenes and demonstrate generalization on SemanticKITTI for outdoor scenes.
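The measurement update described in the abstract above can be illustrated with a minimal sketch. It is not the paper's likelihood model: it assumes each particle already has a map feature vector predicted for its pose, scores it against the observed feature by cosine similarity, and turns that score into a weight with an exponential. The names `cosine`, `reweight`, `resample`, and the `temperature` parameter are hypothetical and chosen only for illustration.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity of two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def reweight(weights, observed, expected_per_particle, temperature=0.1):
    """Measurement update: scale each particle weight by an assumed likelihood.

    expected_per_particle[i] is the (hypothetical) map feature predicted at
    particle i's pose. exp(similarity / temperature) is an assumption made
    for this sketch, not the model used in the paper.
    """
    scaled = [
        w * math.exp(cosine(observed, expected) / temperature)
        for w, expected in zip(weights, expected_per_particle)
    ]
    total = sum(scaled)
    return [s / total for s in scaled]


def resample(particles, weights, rng):
    """Draw a new particle set with probability proportional to the weights."""
    return rng.choices(particles, weights=weights, k=len(particles))


# Two particles: the first predicts a feature matching the observation.
weights = reweight([0.5, 0.5], [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
particles = resample(["pose_a", "pose_b"], weights, random.Random(0))
```

A lower `temperature` makes the update more peaked around the best-matching particles. A text query for global initialization would fit the same pattern if the text embedding lives in the same feature space as the map features, which is again an assumption of this sketch.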

EL3DD: Extended Latent 3D Diffusion for Language Conditioned Multitask Manipulation

Nov 17, 2025

Abstract:Acting in human environments is a crucial capability for general-purpose robots, necessitating a robust understanding of natural language and its application to physical tasks. This paper seeks to harness the capabilities of diffusion models within a visuomotor policy framework that merges visual and textual inputs to generate precise robotic trajectories. By employing reference demonstrations during training, the model learns to execute manipulation tasks specified through textual commands within the robot's immediate environment. The proposed research aims to extend an existing model by leveraging improved embeddings, and adapting techniques from diffusion models for image generation. We evaluate our methods on the CALVIN dataset, proving enhanced performance on various manipulation tasks and an increased long-horizon success rate when multiple tasks are executed in sequence. Our approach reinforces the usefulness of diffusion models and contributes towards general multitask manipulation.

LIAM: Multimodal Transformer for Language Instructions, Images, Actions and Semantic Maps

Mar 15, 2025

Abstract:The availability of large language models and open-vocabulary object perception methods enables more flexibility for domestic service robots. The large variability of domestic tasks can be addressed without implementing each task individually by providing the robot with a task description along with appropriate environment information. In this work, we propose LIAM - an end-to-end model that predicts action transcripts based on language, image, action, and map inputs. Language and image inputs are encoded with a CLIP backbone, for which we designed two pre-training tasks to fine-tune its weights and pre-align the latent spaces. We evaluate our method on the ALFRED dataset, a simulator-generated benchmark for domestic tasks. Our results demonstrate the importance of pre-aligning embedding spaces from different modalities and the efficacy of incorporating semantic maps.

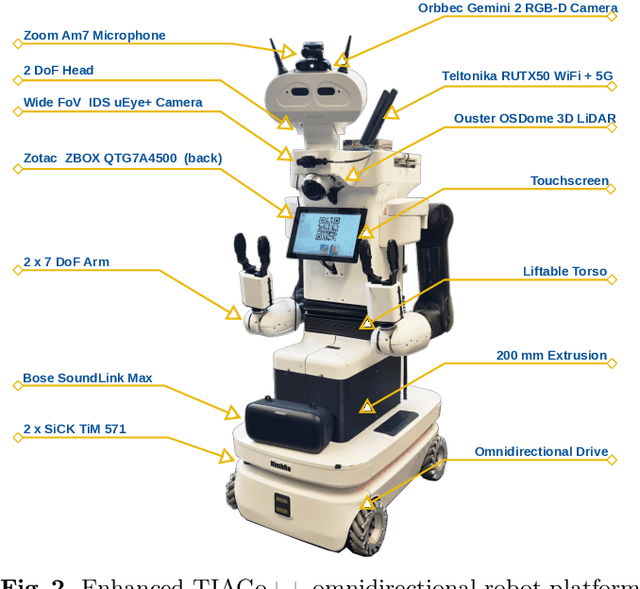

RoboCup@Home 2024 OPL Winner NimbRo: Anthropomorphic Service Robots using Foundation Models for Perception and Planning

Dec 19, 2024

Abstract:We present the approaches and contributions of the winning team NimbRo@Home at the RoboCup@Home 2024 competition in the Open Platform League held in Eindhoven, NL. Further, we describe our hardware setup and give an overview of the results for the task stages and the final demonstration. For this year's competition, we put a special emphasis on open-vocabulary object segmentation and grasping approaches that overcome the labeling overhead of supervised vision approaches, commonly used in RoboCup@Home. We successfully demonstrated that we can segment and grasp non-labeled objects by text descriptions. Further, we extensively employed LLMs for natural language understanding and task planning. Throughout the competition, our approaches showed robustness and generalization capabilities. A video of our performance can be found online.

Person Segmentation and Action Classification for Multi-Channel Hemisphere Field of View LiDAR Sensors

Nov 17, 2024

Abstract:Robots need to perceive persons in their surroundings for safety and to interact with them. In this paper, we present a person segmentation and action classification approach that operates on 3D scans of hemisphere field of view LiDAR sensors. We recorded a data set with an Ouster OSDome-64 sensor consisting of scenes where persons perform three different actions and annotated it. We propose a method based on a MaskDINO model to detect and segment persons and to recognize their actions from combined spherical projected multi-channel representations of the LiDAR data with an additional positional encoding. Our approach demonstrates good performance for the person segmentation task and further performs well for the estimation of the person action states walking, waving, and sitting. An ablation study provides insights about the individual channel contributions for the person segmentation task. The trained models, code and dataset are made publicly available.

A Comparison of Prompt Engineering Techniques for Task Planning and Execution in Service Robotics

Oct 30, 2024

Abstract:Recent advances in LLMs have been instrumental in autonomous robot control and human-robot interaction by leveraging their vast general knowledge and capabilities to understand and reason across a wide range of tasks and scenarios. Previous works have investigated various prompt engineering techniques for improving the performance of LLMs to accomplish tasks, while others have proposed methods that utilize LLMs to plan and execute tasks based on the available functionalities of a given robot platform. In this work, we consider both lines of research by comparing prompt engineering techniques and combinations thereof within the application of high-level task planning and execution in service robotics. We define a diverse set of tasks and a simple set of functionalities in simulation, and measure task completion accuracy and execution time for several state-of-the-art models.

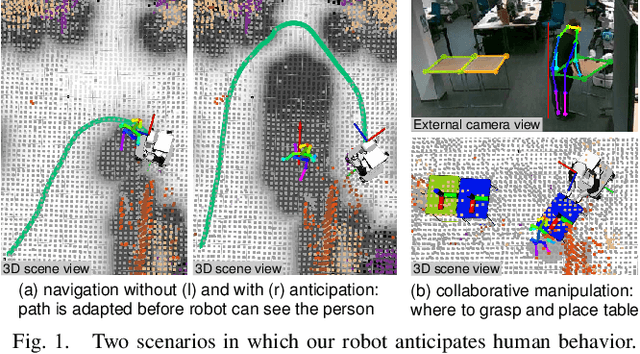

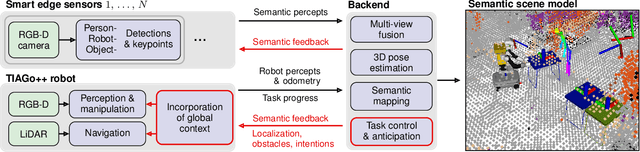

Anticipating Human Behavior for Safe Navigation and Efficient Collaborative Manipulation with Mobile Service Robots

Oct 07, 2024

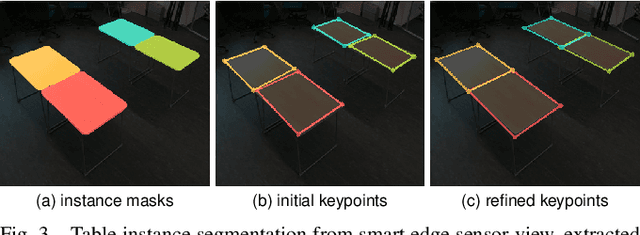

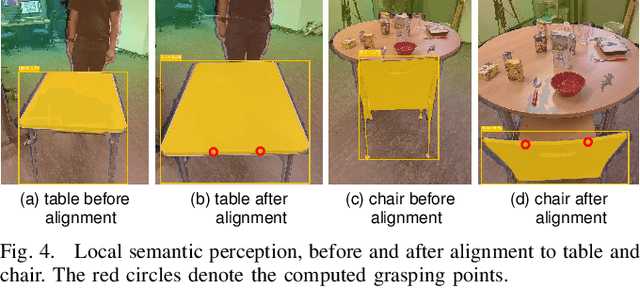

Abstract:The anticipation of human behavior is a crucial capability for robots to interact with humans safely and efficiently. We employ a smart edge sensor network to provide global observations along with future predictions and goal information to integrate anticipatory behavior for the control of a mobile manipulation robot. We present approaches to anticipate human behavior in the context of safe navigation and a collaborative mobile manipulation task. First, we anticipate human motion by employing projections of human trajectories from smart edge sensor network observations into the planning map of a mobile robot. Second, we anticipate human intentions in a collaborative furniture-carrying task to achieve a given goal. Our experiments indicate that anticipating human behavior allows for safer navigation and more efficient collaboration. Finally, we showcase an integrated system that anticipates human behavior and collaborates with a human to achieve a target room layout, including the placement of tables and chairs.

Self-centering 3-DOF feet controller for hands-free locomotion control in telepresence and virtual reality

Aug 05, 2024

Abstract:We present a novel seated foot controller for handling 3-DOF aimed to control locomotion for telepresence robotics and virtual reality environments. Tilting the feet on two axes yields forward, backward and sideways motion. In addition, a separate rotary joint allows for rotation around the vertical axis. Attached springs on all joints self-center the controller. The HTC Vive tracker is used to translate the trackers' orientation into locomotion commands. The proposed self-centering foot controller was used successfully for the ANA Avatar XPRIZE competition, where a naive operator traversed the robot through a longer distance, surpassing obstacles while solving various interaction and manipulation tasks in between. We publicly provide the models of the mostly 3D-printed feet controller for reproduction.

Cleaning Robots in Public Spaces: A Survey and Proposal for Benchmarking Based on Stakeholders Interviews

Jul 23, 2024

Abstract:Autonomous cleaning robots for public spaces have potential for addressing current societal challenges, such as labor shortages and cleanliness in public spaces. Other application domains like autonomous driving, bin picking, or search and rescue have shown that benchmarking platforms and approaches in competitive settings can advance their respective research fields, resulting in more applicable systems under real-world conditions. For this paper, we analyzed seven semi-structured, qualitative stakeholder interviews about outdoor cleaning, identified current needs as well as limitations, and considered those results for the development of a benchmarking scenario based on the previous observations.

NimbRo wins ANA Avatar XPRIZE Immersive Telepresence Competition: Human-Centric Evaluation and Lessons Learned

Aug 28, 2023

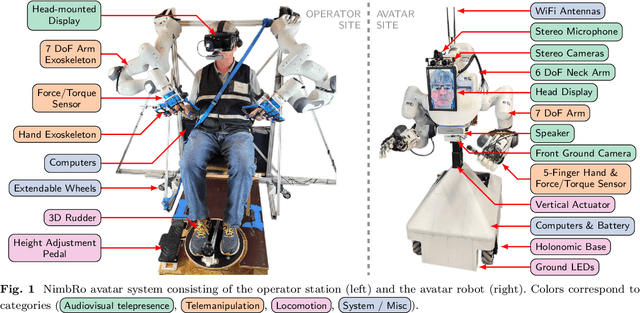

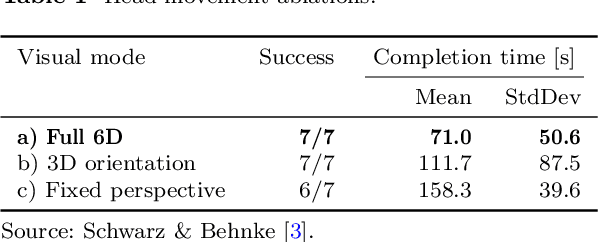

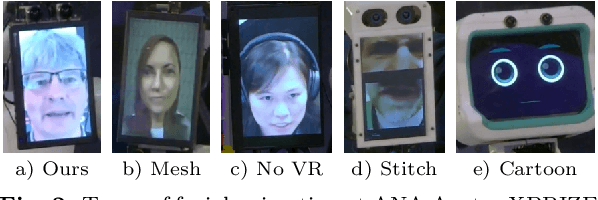

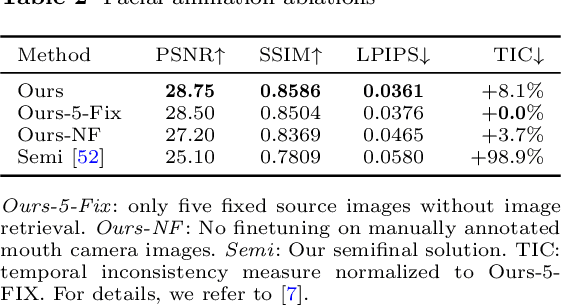

Abstract:Robotic avatar systems can enable immersive telepresence with locomotion, manipulation, and communication capabilities. We present such an avatar system, based on the key components of immersive 3D visualization and transparent force-feedback telemanipulation. Our avatar robot features an anthropomorphic upper body with dexterous hands. The remote human operator drives the arms and fingers through an exoskeleton-based operator station, which provides force feedback both at the wrist and for each finger. The robot torso is mounted on a holonomic base, providing omnidirectional locomotion on flat floors, controlled using a 3D rudder device. Finally, the robot features a 6D movable head with stereo cameras, which stream images to a VR display worn by the operator. Movement latency is hidden using spherical rendering. The head also carries a telepresence screen displaying an animated image of the operator's face, enabling direct interaction with remote persons. Our system won the \$10M ANA Avatar XPRIZE competition, which challenged teams to develop intuitive and immersive avatar systems that could be operated by briefly trained judges. We analyze our successful participation in the semifinals and finals and provide insight into our operator training and lessons learned. In addition, we evaluate our system in a user study that demonstrates its intuitive and easy usability.
