Bastian Pätzold

Leveraging Vision-Language Models for Open-Vocabulary Instance Segmentation and Tracking

Mar 18, 2025

Abstract: This paper introduces a novel approach that leverages the capabilities of vision-language models (VLMs) by integrating them with established methods for open-vocabulary detection (OVD), instance segmentation, and tracking. We utilize VLM-generated structured descriptions to identify visible object instances, collect application-relevant attributes, and inform an open-vocabulary detector that extracts the corresponding bounding boxes. These boxes are passed to a video segmentation model, which provides precise segmentation masks and tracking capabilities. Once initialized, this model can extract segmentation masks directly, allowing image streams to be processed in real time with minimal computational overhead. Tracks can be updated online as needed by generating new structured descriptions and corresponding open-vocabulary detections. This combines the descriptive power of VLMs with the grounding capability of OVD and the pixel-level understanding and speed of video segmentation. Our evaluation across datasets and robotics platforms demonstrates the broad applicability of this approach, showcasing its ability to extract task-specific attributes from non-standard objects in dynamic environments.
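
The control flow in this abstract, an expensive initialization (VLM descriptions, open-vocabulary detection, box-prompted segmentation) followed by cheap per-frame mask propagation and optional re-initialization, can be sketched as below. This is a minimal sketch, not the authors' implementation: the class `OpenVocabTracker`, the `ObjectDescription` schema, and the four injected callables (`describe`, `detect`, `seg_init`, `seg_step`) are hypothetical placeholders for a VLM, an open-vocabulary detector, and a video segmentation model, and the stubs at the end stand in for real models.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class ObjectDescription:
    """One visible instance as a VLM might describe it (hypothetical schema)."""
    name: str
    attributes: Dict[str, str] = field(default_factory=dict)


@dataclass
class Track:
    track_id: int
    description: ObjectDescription
    mask: Any = None  # stand-in for a per-pixel segmentation mask


class OpenVocabTracker:
    """Hypothetical glue code: VLM -> open-vocabulary detector -> video segmenter."""

    def __init__(self,
                 describe: Callable[[Any], List[ObjectDescription]],
                 detect: Callable[[Any, str], Box],
                 seg_init: Callable[[Any, List[Box]], List[Any]],
                 seg_step: Callable[[Any], List[Any]]):
        self.describe, self.detect = describe, detect
        self.seg_init, self.seg_step = seg_init, seg_step
        self.tracks: List[Track] = []

    def initialize(self, image: Any) -> None:
        # Slow path: VLM names become text prompts, boxes become segmenter prompts.
        descriptions = self.describe(image)
        boxes = [self.detect(image, d.name) for d in descriptions]
        masks = self.seg_init(image, boxes)
        self.tracks = [Track(i, d, m) for i, (d, m) in enumerate(zip(descriptions, masks))]

    def step(self, image: Any, refresh: bool = False) -> List[Track]:
        # Fast path: only the video segmenter runs unless a refresh is requested.
        if refresh or not self.tracks:
            self.initialize(image)
        else:
            for track, mask in zip(self.tracks, self.seg_step(image)):
                track.mask = mask
        return self.tracks


# --- Usage with stub models (all hypothetical); frames are plain integers here ---
vlm_calls = []

def fake_vlm(image):
    vlm_calls.append(image)
    return [ObjectDescription("red mug", {"graspable": "yes"})]

tracker = OpenVocabTracker(
    describe=fake_vlm,
    detect=lambda image, text: (0.0, 0.0, 10.0, 10.0),
    seg_init=lambda image, boxes: [f"init-mask-{image}" for _ in boxes],
    seg_step=lambda image: [f"mask-{image}"],
)
for frame in range(5):
    tracks = tracker.step(frame, refresh=(frame == 3))
print(len(vlm_calls), tracks[0].mask)  # → 2 mask-4
```

Keeping the models behind plain callables makes it easy to swap in different VLMs, detectors, or segmenters. In the stub run, the VLM is queried only on frames 0 and 3 (initialization and the requested refresh), while all other frames use the segmenter's fast path.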

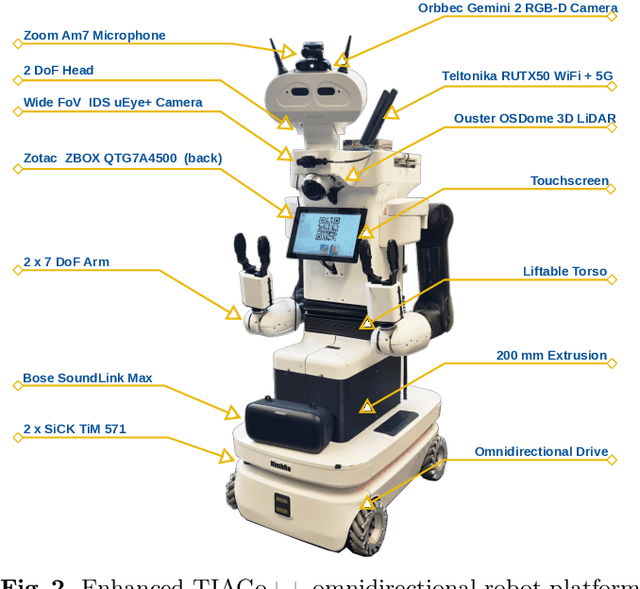

RoboCup@Home 2024 OPL Winner NimbRo: Anthropomorphic Service Robots using Foundation Models for Perception and Planning

Dec 19, 2024

Abstract: We present the approaches and contributions of the winning team NimbRo@Home at the RoboCup@Home 2024 competition in the Open Platform League (OPL), held in Eindhoven, NL. Further, we describe our hardware setup and give an overview of the results for the task stages and the final demonstration. For this year's competition, we put a special emphasis on open-vocabulary object segmentation and grasping approaches that overcome the labeling overhead of the supervised vision approaches commonly used in RoboCup@Home. We successfully demonstrated that we can segment and grasp unlabeled objects based on text descriptions. In addition, we extensively employed large language models (LLMs) for natural language understanding and task planning. Throughout the competition, our approaches showed robustness and generalization capabilities. A video of our performance can be found online.

A Comparison of Prompt Engineering Techniques for Task Planning and Execution in Service Robotics

Oct 30, 2024

Abstract: Recent advances in large language models (LLMs) have been instrumental in autonomous robot control and human-robot interaction, as these models bring vast general knowledge and the ability to understand and reason across a wide range of tasks and scenarios. Previous works have investigated various prompt engineering techniques for improving the task performance of LLMs, while others have proposed methods that use LLMs to plan and execute tasks based on the functionalities available on a given robot platform. In this work, we address both lines of research by comparing prompt engineering techniques, and combinations thereof, for high-level task planning and execution in service robotics. We define a diverse set of tasks and a simple set of functionalities in simulation, and measure task completion accuracy and execution time for several state-of-the-art models.
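
One way to organize such a comparison is to enumerate every combination of prompt techniques and score each on the same task set for completion accuracy and mean execution time. The sketch below assumes hypothetical technique names (`few_shot`, `chain_of_thought`, `self_reflection`), a hypothetical prompt template and function set, and a stub planner in place of a real LLM; the abstract does not specify which techniques or models were compared.

```python
import itertools
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical technique names; the abstract does not list the ones actually compared.
TECHNIQUES = ("few_shot", "chain_of_thought", "self_reflection")

Task = Tuple[str, Callable[[str], bool]]  # (instruction, success check on the plan)


def build_prompt(task: str, combo: Tuple[str, ...]) -> str:
    """Assemble a prompt from a hypothetical template plus the selected techniques."""
    parts = ["Available functions: navigate(place), grasp(obj), place(obj, loc)."]
    if "few_shot" in combo:
        parts.append("Example: 'fetch the book' -> navigate(shelf); grasp(book)")
    if "chain_of_thought" in combo:
        parts.append("Think step by step before answering.")
    parts.append(f"Task: {task}")
    if "self_reflection" in combo:
        parts.append("Check your plan for missing steps before finalizing it.")
    return "\n".join(parts)


def compare(planner: Callable[[str], str],
            tasks: List[Task]) -> Dict[Tuple[str, ...], Tuple[float, float]]:
    """Map each technique combination to (completion accuracy, mean seconds per task)."""
    results = {}
    for r in range(len(TECHNIQUES) + 1):  # r = 0 is the plain baseline prompt
        for combo in itertools.combinations(TECHNIQUES, r):
            start = time.perf_counter()
            successes = sum(check(planner(build_prompt(task, combo))) for task, check in tasks)
            elapsed = time.perf_counter() - start
            results[combo] = (successes / len(tasks), elapsed / len(tasks))
    return results


# Stub planner standing in for an LLM call: it only plans navigation when asked to reason.
def stub_planner(prompt: str) -> str:
    return "navigate(kitchen); grasp(cup)" if "step by step" in prompt else "grasp(cup)"


tasks = [("bring me a cup from the kitchen", lambda plan: plan.startswith("navigate"))]
results = compare(stub_planner, tasks)
print(len(results), results[()][0], results[("chain_of_thought",)][0])  # → 8 0.0 1.0
```

In a real study, the planner would call an LLM and the success check would inspect the executed plan in simulation; timing every combination on the same tasks keeps the accuracy and latency figures comparable.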

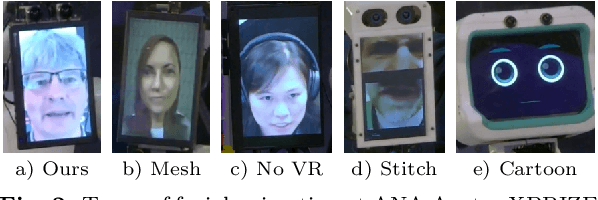

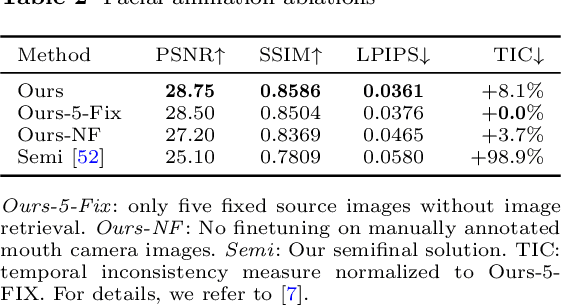

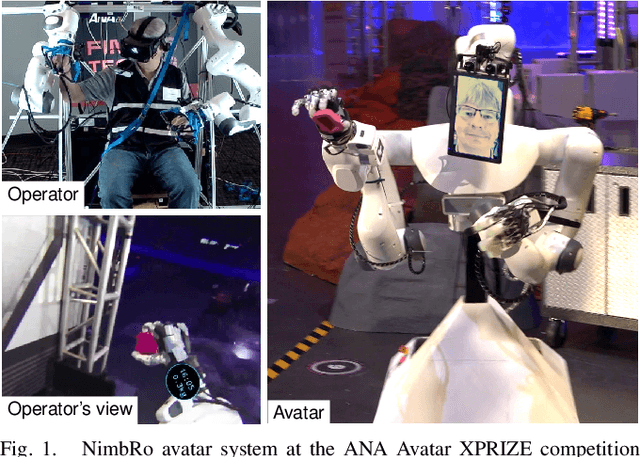

NimbRo wins ANA Avatar XPRIZE Immersive Telepresence Competition: Human-Centric Evaluation and Lessons Learned

Aug 28, 2023

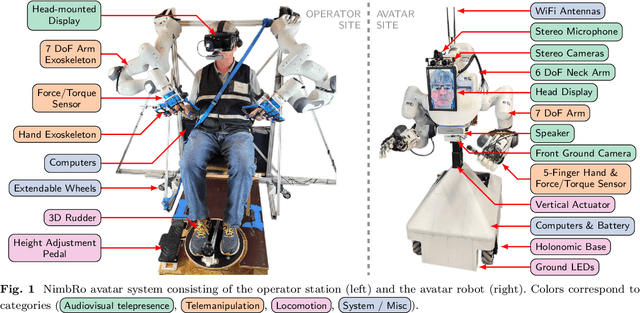

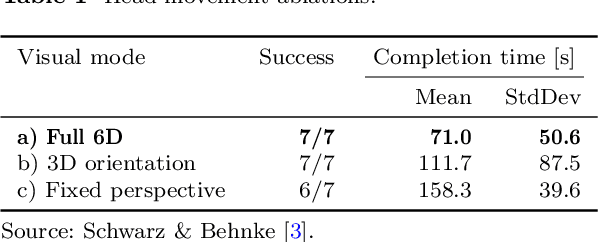

Abstract: Robotic avatar systems can enable immersive telepresence with locomotion, manipulation, and communication capabilities. We present such an avatar system, based on the key components of immersive 3D visualization and transparent force-feedback telemanipulation. Our avatar robot features an anthropomorphic upper body with dexterous hands. The remote human operator drives the arms and fingers through an exoskeleton-based operator station, which provides force feedback both at the wrist and for each finger. The robot torso is mounted on a holonomic base, providing omnidirectional locomotion on flat floors, controlled using a 3D rudder device. Finally, the robot features a 6D movable head with stereo cameras, which stream images to a VR display worn by the operator. Movement latency is hidden using spherical rendering. The head also carries a telepresence screen displaying an animated image of the operator's face, enabling direct interaction with remote persons. Our system won the $10M ANA Avatar XPRIZE competition, which challenged teams to develop intuitive and immersive avatar systems that could be operated by briefly trained judges. We analyze our successful participation in the semifinals and finals and provide insight into our operator training and lessons learned. In addition, we evaluate our system in a user study that demonstrates that it is intuitive and easy to use.

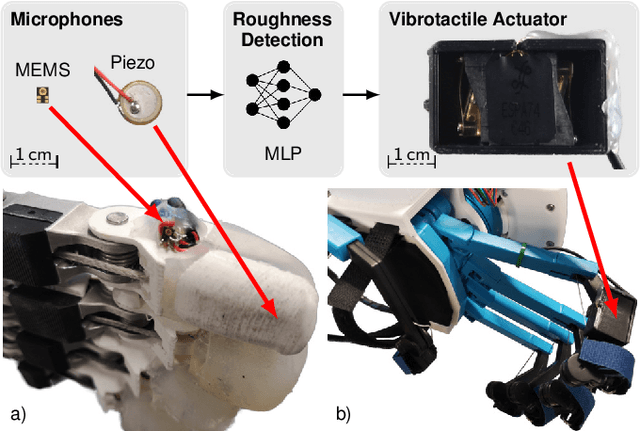

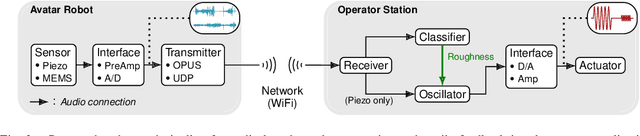

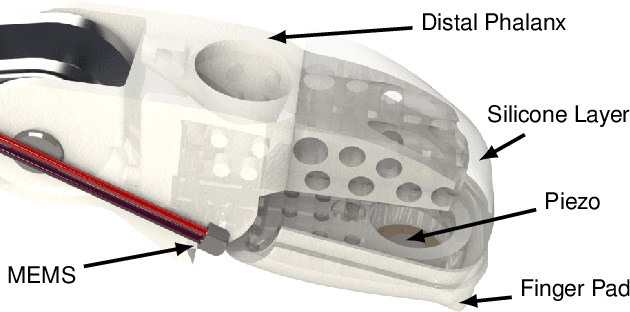

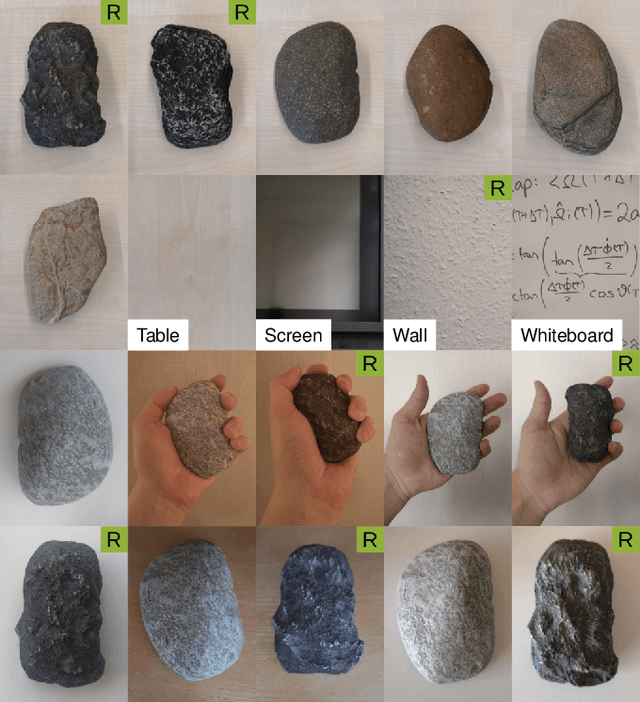

Audio-based Roughness Sensing and Tactile Feedback for Haptic Perception in Telepresence

Mar 13, 2023

Abstract: Haptic perception is crucial for immersive teleoperation of robots, especially for accomplishing manipulation tasks. We propose a low-cost haptic sensing and rendering system, which is capable of detecting and displaying surface roughness. As the robot fingertip moves across a surface of interest, two microphones capture sound coupled directly through the fingertip and through the air, respectively. A learning-based detector system analyzes the data in real time and gives roughness estimates with both high temporal resolution and low latency. Finally, an audio-based haptic actuator displays the result to the human operator. We demonstrate the effectiveness of our system through experiments and our winning entry in the ANA Avatar XPRIZE competition finals, where impartial judges solved a roughness-based selection task even without additional vision feedback. We publish the dataset used for training and evaluation, together with our trained models, to enable reproducibility.

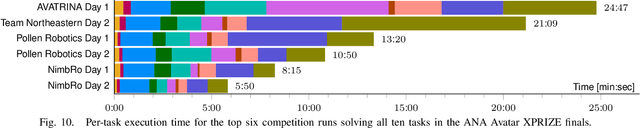

Robust Immersive Telepresence and Mobile Telemanipulation: NimbRo wins ANA Avatar XPRIZE Finals

Mar 06, 2023

Abstract: Robotic avatar systems promise to bridge distances and reduce the need for travel. We present the updated NimbRo avatar system, winner of the $5M grand prize at the international ANA Avatar XPRIZE competition, which required participants to build intuitive and immersive telepresence robots that could be operated by briefly trained operators. We describe key improvements for the finals compared to the system used in the semifinals. To operate without a power and communications tether, we integrate a battery and a robust, redundant wireless communication system. Video and audio data are compressed using low-latency HEVC and Opus codecs. We propose a new locomotion control device with tunable resistance force. To increase flexibility, the robot's upper-body height can be adjusted by the operator. We describe the essential monitoring and robustness tools that enabled our success at the competition. Finally, we analyze our performance at the competition finals and discuss lessons learned.

Online Marker-free Extrinsic Camera Calibration using Person Keypoint Detections

Sep 15, 2022

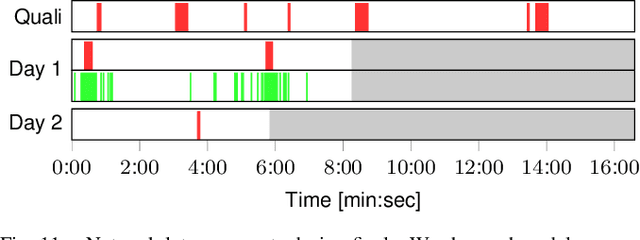

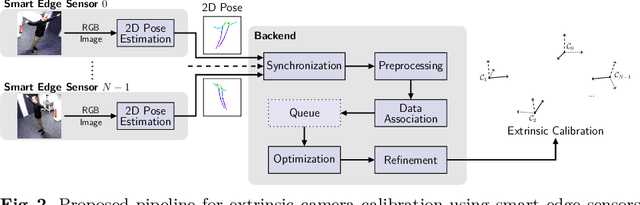

Abstract: Calibration of multi-camera systems, i.e., determining the relative poses between the cameras, is a prerequisite for many tasks in computer vision and robotics. Camera calibration is typically achieved using offline methods with checkerboard calibration targets. These methods, however, are often cumbersome and time-consuming, considering that a new calibration is required each time any camera pose changes. In this work, we propose a novel, marker-free online method for the extrinsic calibration of multiple smart edge sensors, relying solely on 2D human keypoint detections that are computed locally on the sensor boards from RGB camera images. Our method assumes the intrinsic camera parameters to be known and requires priming with a rough initial estimate of the camera poses. The person keypoint detections from multiple views are received at a central backend, where they are synchronized, filtered, and assigned to person hypotheses. We use these person hypotheses to repeatedly solve optimization problems in the form of factor graphs. Given suitable observations of one or multiple persons traversing the scene, the estimated camera poses converge towards a coherent extrinsic calibration within a few minutes. We evaluate our approach in real-world settings and show that the calibration obtained with our method achieves lower reprojection errors than a reference calibration generated by an offline method using a traditional calibration target.
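
The abstract compares calibrations by their reprojection error. As a minimal sketch of that metric only (not of the factor-graph calibration itself), the following assumes an ideal pinhole camera with known intrinsics `K`, extrinsics `R`, `t` that map world points into the camera frame, and no lens distortion; the function names and example values are illustrative.

```python
import numpy as np


def project(K: np.ndarray, R: np.ndarray, t: np.ndarray, points_w: np.ndarray) -> np.ndarray:
    """Pinhole projection of Nx3 world points to Nx2 pixel coordinates (no distortion)."""
    points_c = points_w @ R.T + t        # world frame -> camera frame
    uvw = points_c @ K.T                 # camera frame -> homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]      # perspective division


def mean_reprojection_error(K, R, t, points_w, keypoints_px) -> float:
    """Mean Euclidean distance (pixels) between projected points and 2D detections."""
    residuals = project(K, R, t, points_w) - keypoints_px
    return float(np.mean(np.linalg.norm(residuals, axis=1)))


# Illustrative values: f = 500 px, principal point (320, 240), camera at the world origin.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
points_w = np.array([[0.0, 0.0, 5.0], [1.0, 0.0, 5.0]])   # e.g. triangulated keypoints
keypoints_px = np.array([[320.0, 240.0], [423.0, 244.0]])  # observed 2D detections
err = mean_reprojection_error(K, R, t, points_w, keypoints_px)
print(err)  # → 2.5
```

The second point projects to (420, 240), so its residual is (3, 4) pixels with length 5; averaged with the exact first point, the mean error is 2.5 pixels. Averaging this quantity over all views and keypoints gives a single number for comparing two calibrations of the same camera setup.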
