Rahul Paul

Current Topological and Machine Learning Applications for Bias Detection in Text

Nov 22, 2023

Abstract:Institutional bias can impact patient outcomes, educational attainment, and legal system navigation. Written records often reflect bias, and once bias is identified, individuals can be referred for training to reduce it. Many machine learning tools exist to explore text data and create predictive models that can search written records to identify bias in real time. However, few previous studies investigate large language model embeddings and geometric models of biased text data to understand geometry's impact on bias modeling accuracy. To address this gap, this study uses the RedditBias database to analyze textual biases. Four transformer models, including BERT and RoBERTa variants, were explored. After embedding, t-SNE was used to visualize the data in two dimensions. KNN classifiers differentiated bias types, with lower k-values proving more effective. Findings suggest that BERT, particularly mini BERT, excels in bias classification, while multilingual models lag. The study recommends refining monolingual models and exploring domain-specific biases.
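As a rough illustration of the KNN step described in the abstract, the sketch below classifies a point by majority vote among its k nearest neighbours. It is not the study's code: the function name `knn_predict`, the toy coordinates (standing in for 2-D t-SNE projections of embeddings), and the two label names are all assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=1):
    """Return the majority label among the k training points closest to `query`."""
    # Euclidean distance from the query to every training point
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    distances.sort(key=lambda pair: pair[0])
    # Majority vote over the k nearest neighbours
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Made-up 2-D points standing in for projected embeddings (hypothetical data)
points = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["gender", "gender", "gender", "religion", "religion", "religion"]

prediction = knn_predict(points, labels, query=(0.3, 0.2), k=3)
```

A small k makes the vote depend only on the closest neighbours, which may be one reason lower k-values could work better when clusters are tight, though the abstract does not state the cause.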

Deep Learning Models May Spuriously Classify Covid-19 from X-ray Images Based on Confounders

Jan 08, 2021

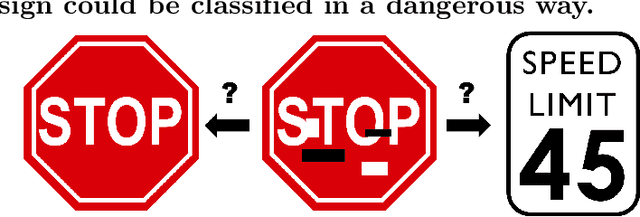

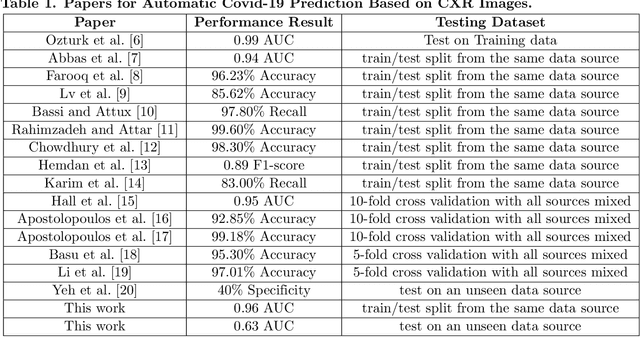

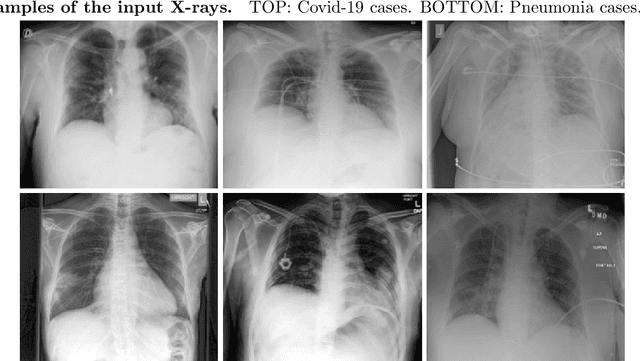

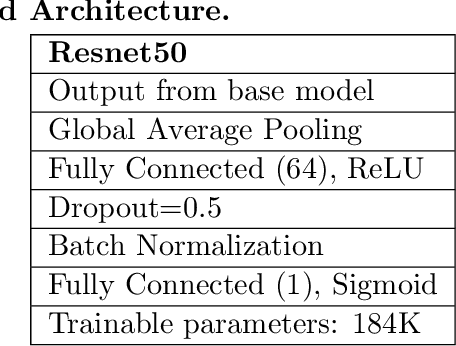

Abstract:Identifying who is infected with the Covid-19 virus is critical for controlling its spread. X-ray machines are widely available worldwide and can quickly provide images that can be used for diagnosis. A number of recent studies claim it may be possible to build highly accurate models, using deep learning, to detect Covid-19 from chest X-ray images. This paper explores the robustness and generalization ability of convolutional neural network models in diagnosing Covid-19 disease from frontal-view (AP/PA), raw chest X-ray images that were lung field cropped. Some concerning observations are made about high performing models that have learned to rely on confounding features related to the data source, rather than the patient's lung pathology, when differentiating between Covid-19 positive and negative labels. Specifically, these models likely made diagnoses based on confounding factors such as patient age or image processing artifacts, rather than medically relevant information.

Finding Covid-19 from Chest X-rays using Deep Learning on a Small Dataset

Apr 13, 2020

Abstract:Testing for COVID-19 has been unable to keep up with the demand. Further, the false negative rate is projected to be as high as 30% and test results can take some time to obtain. X-ray machines are widely available and provide images for diagnosis quickly. This paper explores how useful chest X-ray images can be in diagnosing COVID-19 disease. We have obtained 122 chest X-rays of COVID-19 and over 4,000 chest X-rays of viral and bacterial pneumonia. A pretrained deep convolutional neural network has been tuned on 102 COVID-19 cases and 102 other pneumonia cases in a 10-fold cross validation. In cross validation, all 102 COVID-19 cases were correctly classified and there were 8 false positives, resulting in an AUC of 0.997. On a test set of 20 unseen COVID-19 cases, all were correctly classified, and more than 95% of 4171 other pneumonia examples were correctly classified. This study has flaws, most critically a lack of information about where in the disease process the COVID-19 cases were and the small data set size. More COVID-19 case images will enable a better answer to the question of how useful chest X-rays can be for diagnosing COVID-19 (so please send them).
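The cross-validation counts in the abstract can be turned into threshold-level metrics with a few lines of arithmetic. The sketch below assumes the 8 false positives all come from the 102 other-pneumonia cases at a single decision threshold, which the abstract implies but does not spell out; `confusion_metrics` is a hypothetical helper name. The reported AUC of 0.997 is computed over all thresholds and cannot be recovered from these counts.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Compute sensitivity, specificity, and accuracy from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # fraction of positive cases detected
    specificity = tn / (tn + fp)  # fraction of negative cases correctly rejected
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    return sensitivity, specificity, accuracy


# Assumed reading of the abstract: 102 COVID-19 cases all correct,
# 8 of the 102 other-pneumonia cases flagged as COVID-19.
sens, spec, acc = confusion_metrics(tp=102, fn=0, fp=8, tn=94)

print(f"sensitivity={sens:.3f} specificity={spec:.3f} accuracy={acc:.3f}")
```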

Harnessing the Power of Deep Learning Methods in Healthcare: Neonatal Pain Assessment from Crying Sound

Sep 05, 2019

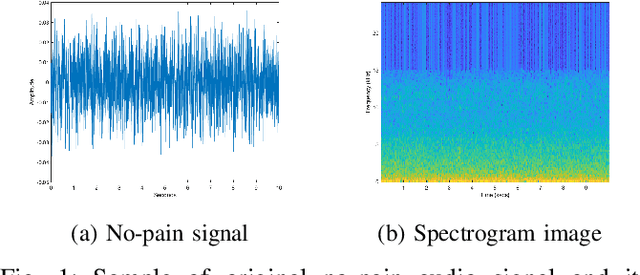

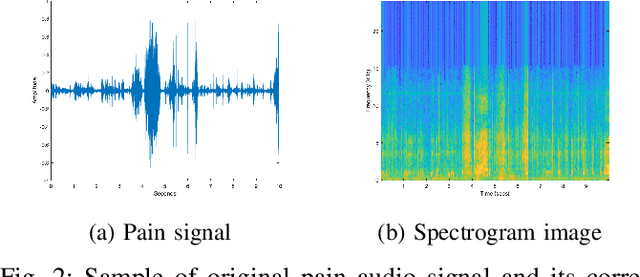

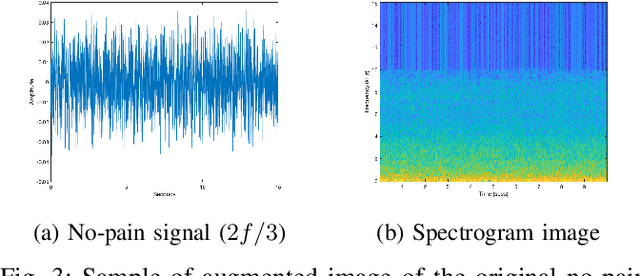

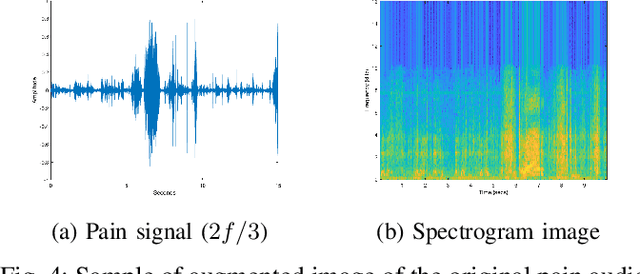

Abstract:Neonatal pain assessment in clinical environments is challenging as it is discontinuous and biased. Facial/body occlusion can occur in such settings due to clinical condition, developmental delays, prone position, or other external factors. In such cases, crying sound can be used to effectively assess neonatal pain. In this paper, we investigate the use of a novel CNN architecture (N-CNN) along with other CNN architectures (VGG16 and ResNet50) for assessing pain from the crying sounds of neonates. The experimental results demonstrate that using our novel N-CNN for assessing pain from the sounds of neonates has strong clinical potential and provides a viable alternative to the current assessment practice.

Classifying cooking object's state using a tuned VGG convolutional neural network

May 30, 2018

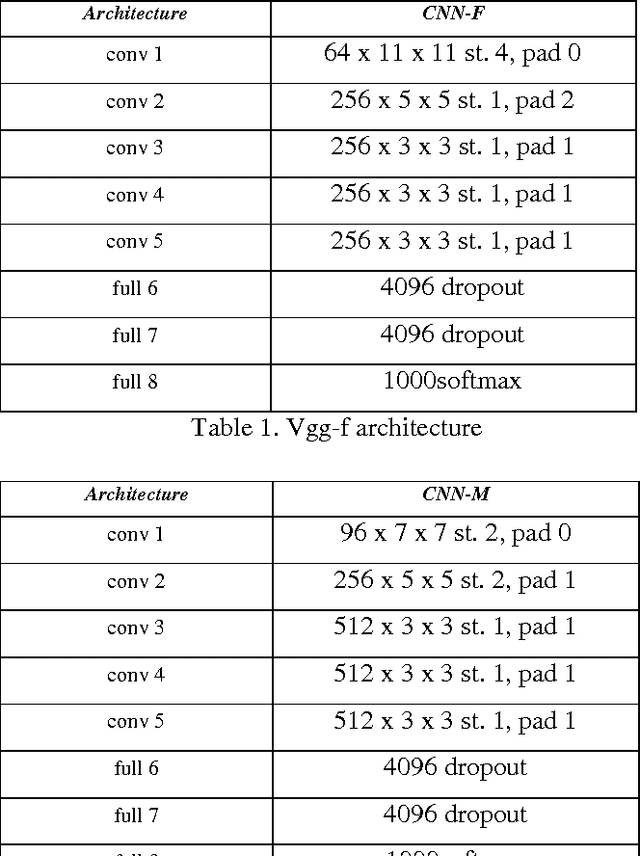

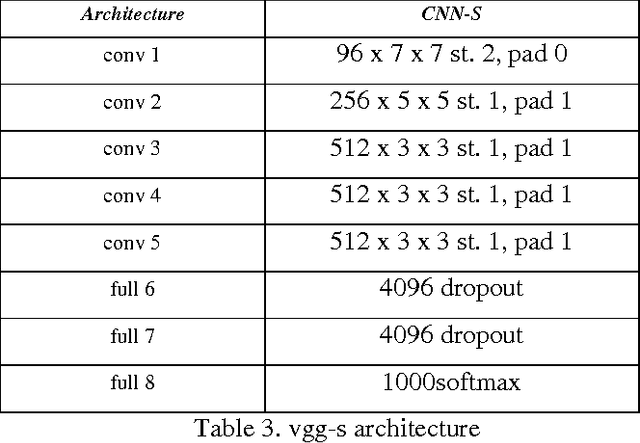

Abstract:In robotics, knowing object states and recognizing the desired states is very important. Objects in different states require different grasps, and achieving different states requires different manipulations as well as different grasps. To analyze objects in different states, a dataset of cooking objects was created. Cooking involves various cutting techniques needed for different dishes (e.g. diced, julienne etc.). Identifying each of these states can sometimes be difficult even for humans. In this paper, we have analyzed seven different cooking object states by tuning a convolutional neural network (CNN). For this task, images were downloaded and annotated by students and divided into a training set and a completely separate test set. By tuning the VGG-16 CNN, 77% accuracy was obtained. The work presented in this paper focuses on classification between various object states rather than task recognition or recipe prediction. This framework can be easily adapted to any other object state classification task.

Pouring Sequence Prediction using Recurrent Neural Network

May 23, 2018

Abstract:Humans perform their daily activities and cooking by teaching and imitating, with the help of their vision and their understanding of the differences between materials. Teaching a robot to do cooking and daily work is difficult because of variation in the environment, handling objects in different states, etc. Pouring is a simple daily activity for humans. In this paper, an approach for obtaining pouring sequences was analyzed to determine the velocity of pouring and the weight of the container. A recurrent neural network (RNN) was then trained to learn these complex sequences and make predictions for unseen pouring sequences. Dynamic time warping (DTW) was used to evaluate the prediction performance of the trained model.
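For readers unfamiliar with dynamic time warping, the following is a minimal sketch of the classic dynamic-programming formulation for two 1-D sequences, using absolute difference as the local cost. It is an illustration only: the function name `dtw_distance` and the sample sequences are assumptions, and the paper may use a different local cost, windowing, or normalization.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D numeric sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a only, step in b only, step in both
            cost[i][j] = local + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


# Hypothetical predicted vs. observed sequences (made-up values)
same_shape = dtw_distance([0, 1, 2, 3], [0, 1, 1, 2, 3])
shifted = dtw_distance([1, 2, 3], [2, 3, 4])
```

Because DTW allows one sequence to be stretched in time relative to the other, the first pair aligns at zero cost even though the sequences differ in length, which is what makes it suitable for comparing a predicted sequence with an observed one that unfolds at a different pace.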

Make Your Bone Great Again : A study on Osteoporosis Classification

Jul 17, 2017

Abstract:Osteoporosis can be identified by looking at 2D x-ray images of the bone. The high degree of similarity between images of a healthy bone and a diseased one makes classification a challenge. A good bone texture characterization technique is essential for identifying osteoporosis cases. Standard texture feature extraction techniques like Local Binary Pattern (LBP) and Gray Level Co-occurrence Matrix (GLCM) have been used for this purpose. In this paper, we compare deep features extracted from a convolutional neural network against these traditional features. Our results show that deep features have more discriminative power, as classifiers trained on them always outperform the ones trained on traditional features.
