Rangachar Kasturi

Multimodal Spatio-Temporal Deep Learning Approach for Neonatal Postoperative Pain Assessment

Dec 03, 2020

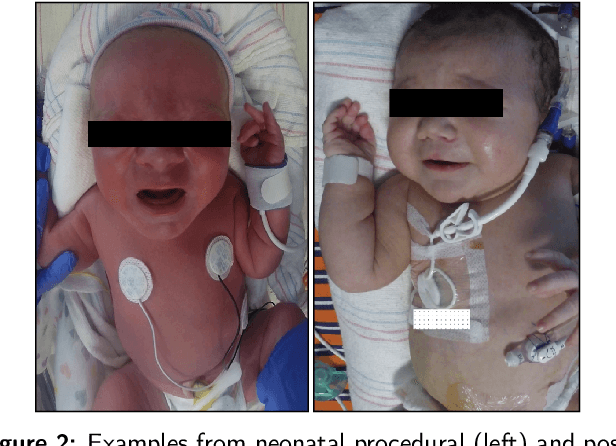

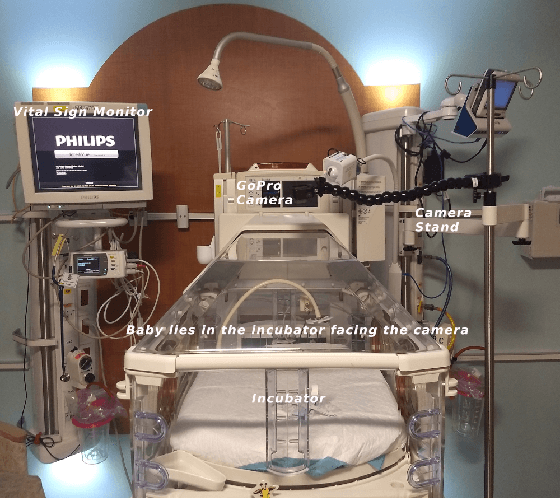

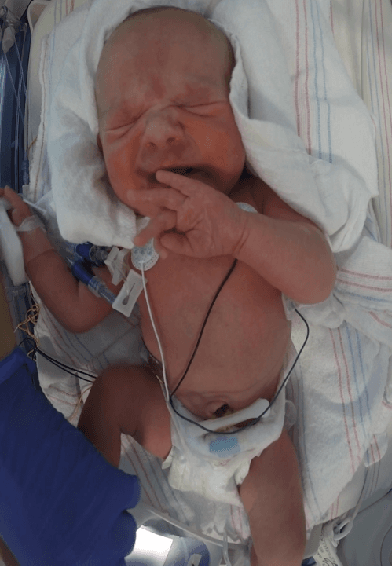

Abstract: The current practice for assessing neonatal postoperative pain relies on bedside caregivers. This practice is subjective, inconsistent, slow, and discontinuous. To develop a reliable medical interpretation, several automated approaches have been proposed to enhance the current practice. These approaches are unimodal and focus mainly on assessing neonatal procedural (acute) pain. As pain is a multimodal emotion that is often expressed through multiple modalities, multimodal assessment of pain is necessary, especially in the case of postoperative (acute prolonged) pain. Additionally, spatio-temporal analysis is more stable over time and has been proven to be highly effective at minimizing misclassification errors. In this paper, we present a novel multimodal spatio-temporal approach that integrates visual and vocal signals and uses them for assessing neonatal postoperative pain. We conduct comprehensive experiments to investigate the effectiveness of the proposed approach. We compare the performance of multimodal and unimodal postoperative pain assessment, and measure the impact of temporal information integration. The experimental results, on a real-world dataset, show that the proposed multimodal spatio-temporal approach achieves the highest AUC (0.87) and accuracy (79%), which are on average 6.67% and 6.33% higher than unimodal approaches. The results also show that the integration of temporal information markedly improves performance compared to the non-temporal approach, as it captures changes in the pain dynamic. These results demonstrate that the proposed approach can be used as a viable alternative to manual assessment, which would pave the way toward fully automated pain monitoring in clinical settings, point-of-care testing, and homes.

First Investigation Into the Use of Deep Learning for Continuous Assessment of Neonatal Postoperative Pain

Mar 24, 2020

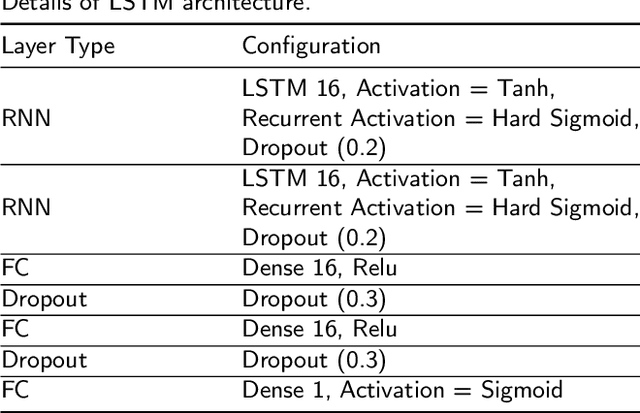

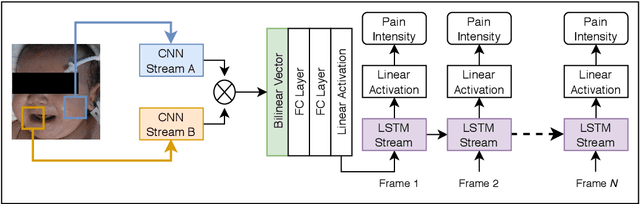

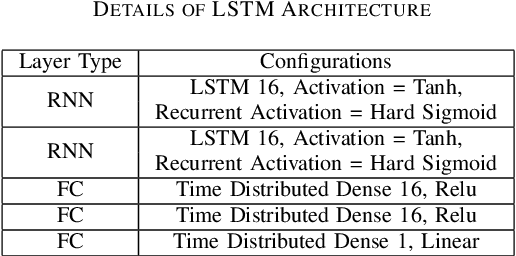

Abstract: This paper presents the first investigation into the use of a fully automated deep learning framework for assessing neonatal postoperative pain. It specifically investigates the use of a Bilinear Convolutional Neural Network (B-CNN) to extract facial features during different levels of postoperative pain, followed by modeling the temporal pattern using a Recurrent Neural Network (RNN). Although acute and postoperative pain have some common characteristics (e.g., visual action units), postoperative pain has a different dynamic, and it evolves in a unique pattern over time. Our experimental results indicate a clear difference between the patterns of acute and postoperative pain. They also suggest the efficiency of combining a bilinear CNN with an RNN model for the continuous assessment of postoperative pain intensity.

Harnessing the Power of Deep Learning Methods in Healthcare: Neonatal Pain Assessment from Crying Sound

Sep 05, 2019

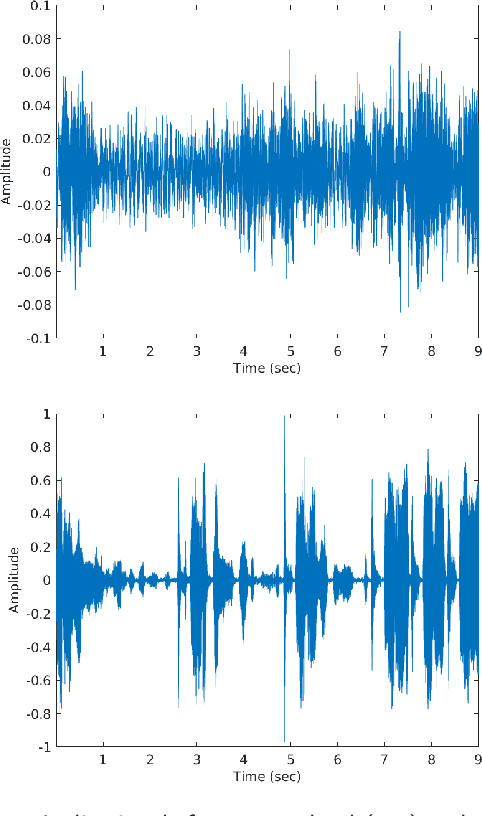

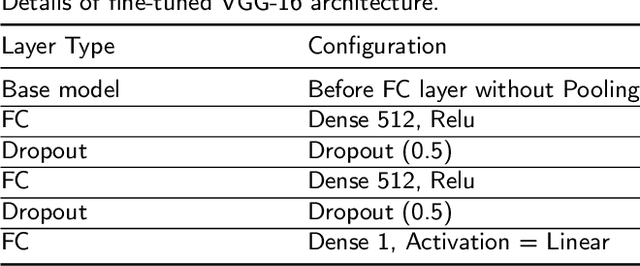

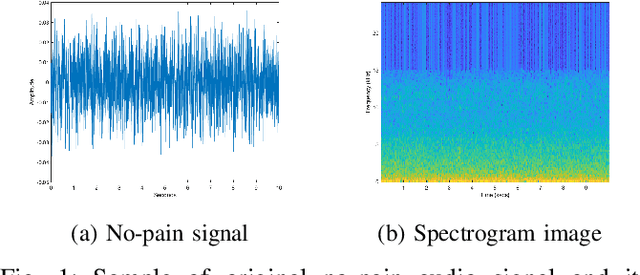

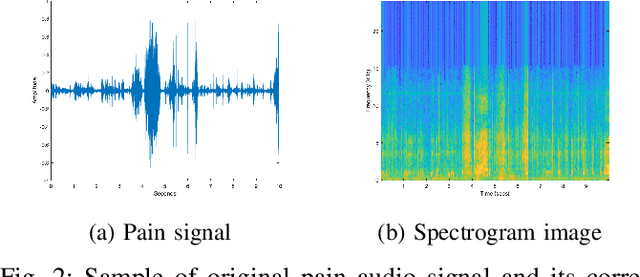

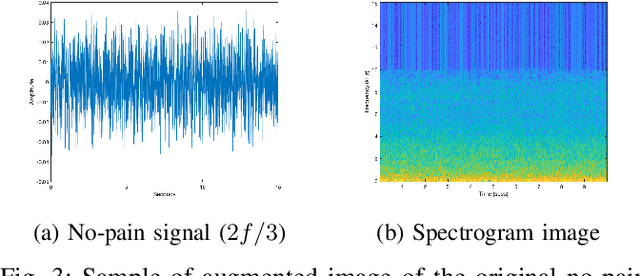

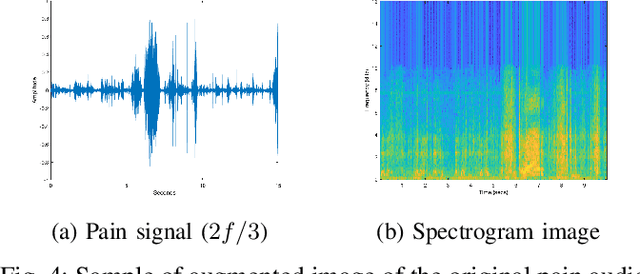

Abstract: Neonatal pain assessment in clinical environments is challenging as it is discontinuous and biased. Facial/body occlusion can occur in such settings due to clinical condition, developmental delays, prone position, or other external factors. In such cases, crying sound can be used to effectively assess neonatal pain. In this paper, we investigate the use of a novel CNN architecture (N-CNN) along with other CNN architectures (VGG16 and ResNet50) for assessing pain from crying sounds of neonates. The experimental results demonstrate that using our novel N-CNN for assessing pain from the sounds of neonates has strong clinical potential and provides a viable alternative to the current assessment practice.

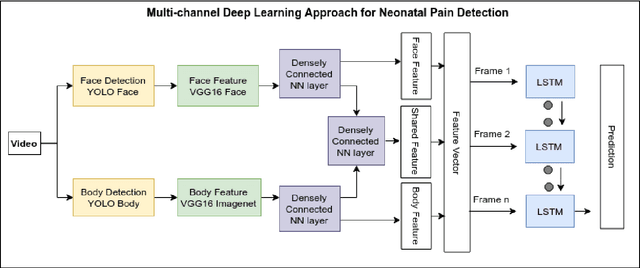

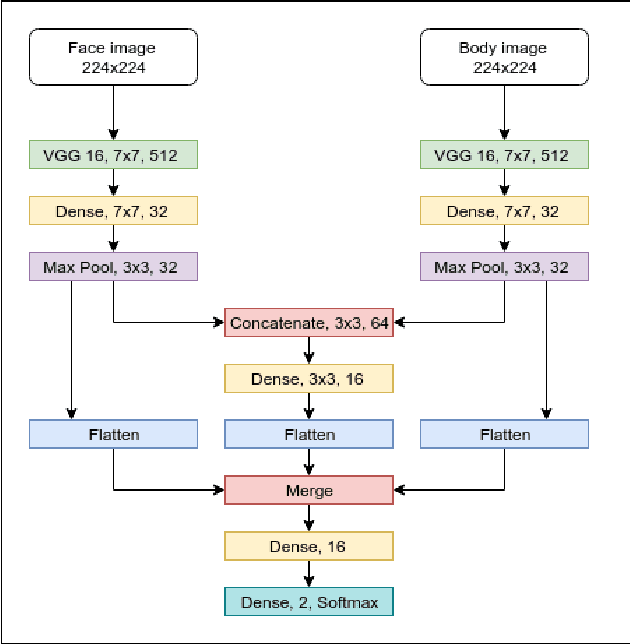

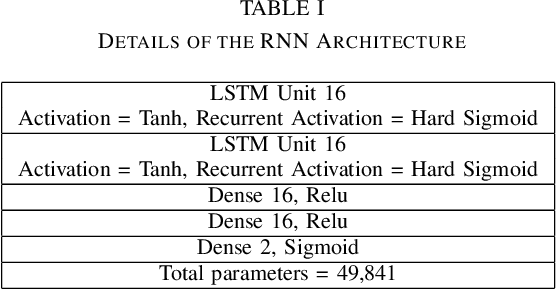

Multi-Channel Neural Network for Assessing Neonatal Pain from Videos

Aug 25, 2019

Abstract: Neonates do not have the ability to either articulate pain or communicate it non-verbally by pointing. The current clinical standard for assessing neonatal pain is intermittent and highly subjective. This discontinuity and subjectivity can lead to inconsistent assessment and, therefore, inadequate treatment. In this paper, we propose a multi-channel deep learning framework for assessing neonatal pain from videos. The proposed framework integrates information from two pain indicators or channels, namely facial expression and body movement, using a convolutional neural network (CNN). It also integrates temporal information using a recurrent neural network (LSTM). The experimental results prove the efficiency and superiority of the proposed temporal and multi-channel framework as compared to existing similar methods.

Neonatal Pain Expression Recognition Using Transfer Learning

Jul 04, 2018

Abstract: Transfer learning using pre-trained Convolutional Neural Networks (CNNs) has been successfully applied to images for different classification tasks. In this paper, we propose a new pipeline for pain expression recognition in neonates using transfer learning. Specifically, we propose to exploit a pre-trained CNN that was originally trained on a relatively similar dataset for face recognition (VGG Face) as well as CNNs that were pre-trained on a relatively different dataset for image classification (iVGG F, M, and S) to extract deep features from neonates' faces. In the final stage, several supervised machine learning classifiers are trained to classify neonates' facial expressions as pain or no pain. The proposed pipeline achieved, on a testing dataset, 0.841 AUC and 90.34% accuracy, which is approx. 7% higher than the accuracy of handcrafted traditional features. We also propose to combine deep features with traditional features and hypothesize that the mixed features would improve pain classification performance. Combining deep features with traditional features achieved 92.71% accuracy and 0.948 AUC. These results show that transfer learning, which is a faster and more practical option than training a CNN from scratch, can be used to extract useful features for pain expression recognition in neonates. They also show that combining deep features with traditional handcrafted features is a good practice to improve the performance of pain expression recognition and possibly the performance of similar applications.

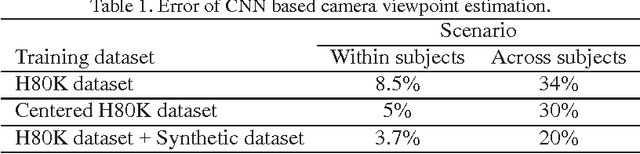

Learning camera viewpoint using CNN to improve 3D body pose estimation

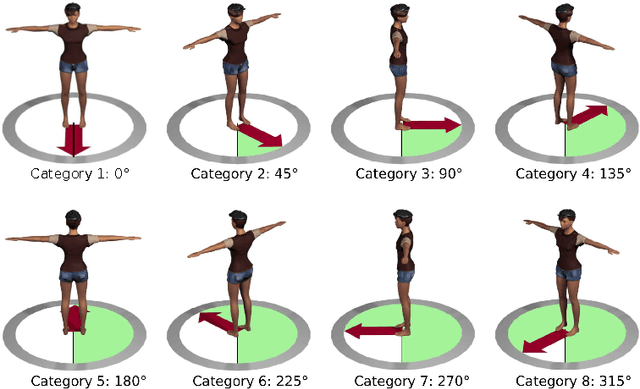

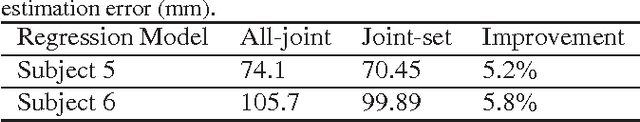

Sep 18, 2016

Abstract: The objective of this work is to estimate 3D human pose from a single RGB image. Extracting image representations which incorporate both the spatial relation of body parts and their relative depth plays an essential role in accurate 3D pose reconstruction. In this paper, for the first time, we show that camera viewpoint in combination with 2D joint locations significantly improves 3D pose accuracy without the explicit use of perspective geometry mathematical models. To this end, we train a deep Convolutional Neural Network (CNN) to learn categorical camera viewpoint. To make the network robust against the clothing and body shape of the subject in the image, we utilized 3D computer rendering to synthesize additional training images. We test our framework on the largest 3D pose estimation benchmark, Human3.6m, and achieve up to 20% error reduction compared to the state-of-the-art approaches that do not use body part segmentation.

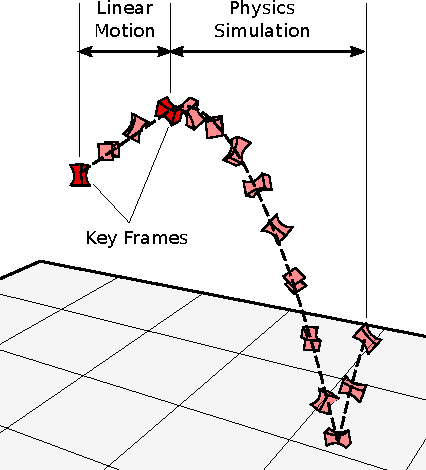

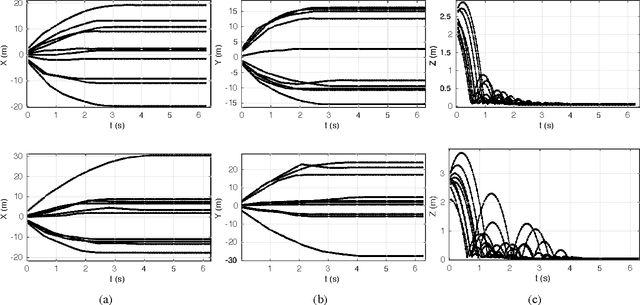

Autonomous driving challenge: To Infer the property of a dynamic object based on its motion pattern using recurrent neural network

Sep 10, 2016

Abstract: In autonomous driving applications, a critical challenge is to identify the action to take to avoid an obstacle on a collision course. For example, when a heavy object is suddenly encountered, it is critical to stop the vehicle or change lanes even if it causes other traffic disruptions. However, there are situations when it is preferable to collide with the object rather than take an action that would result in a much more serious accident than collision with the object. For example, a heavy object which falls from a truck should be avoided, whereas a bouncing ball or a soft target such as a foam box need not be. We present a novel method to discriminate between the motion characteristics of these types of objects based on their physical properties such as bounciness, elasticity, etc. In this preliminary work, we use a recurrent neural network with LSTM cells to train a classifier to classify objects based on their motion trajectories. We test the algorithm on synthetic data and, as a proof of concept, demonstrate its effectiveness on a limited set of real-world data.

Machine-based Multimodal Pain Assessment Tool for Infants: A Review

Jul 07, 2016

Abstract: The current practice of assessing infants' pain depends on using subjective tools that fail to meet rigorous psychometric standards and requires continuous monitoring by health professionals. Therefore, pain may be misinterpreted or totally missed, leading to misdiagnosis and over/under treatment. To address these shortcomings, the current practice can be augmented with a machine-based assessment tool that continuously monitors various pain cues and provides a consistent and minimally biased evaluation of pain. Several machine-based approaches have been proposed to assess infants' pain based on analysis of either behavioral or physiological pain indicators (i.e., a single modality). The aim of this review paper is to provide the reader with the current machine-based approaches to assessing infants' pain. It also proposes the development of a multimodal machine-based pain assessment tool and presents preliminary implementation results.