Gregory M. Goldgof

Single GPU Task Adaptation of Pathology Foundation Models for Whole Slide Image Analysis

Jun 05, 2025

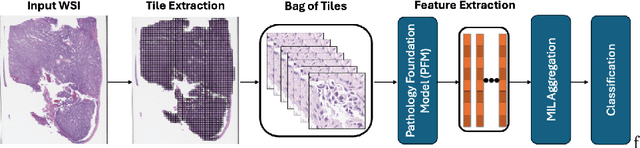

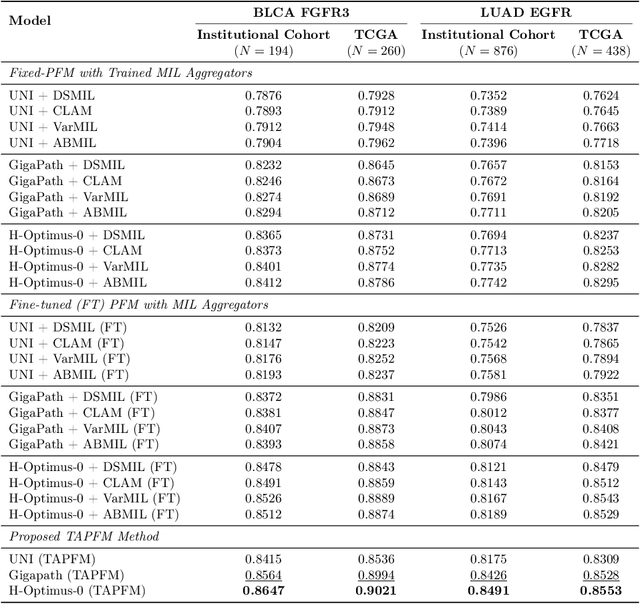

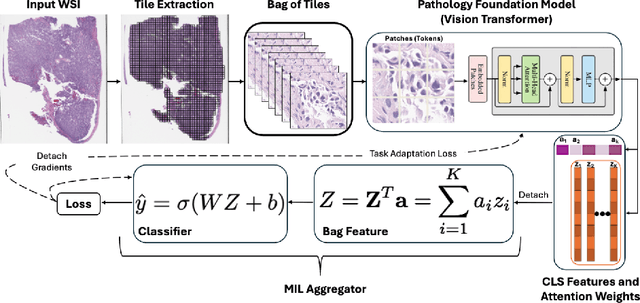

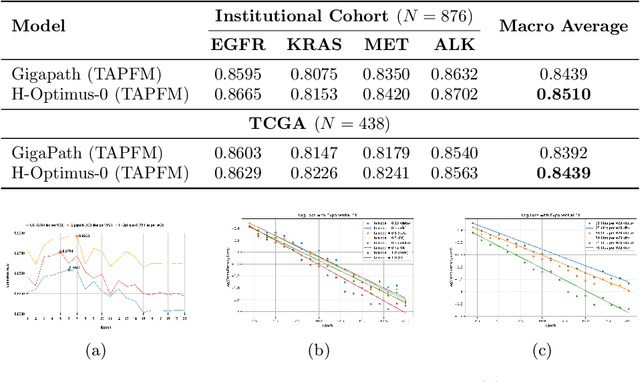

Abstract: Pathology foundation models (PFMs) have emerged as powerful tools for analyzing whole slide images (WSIs). However, adapting these pretrained PFMs for specific clinical tasks presents considerable challenges, primarily because only weak (WSI-level) labels are available for gigapixel images, necessitating a multiple instance learning (MIL) paradigm for effective WSI analysis. This paper proposes a novel approach for single-GPU Task Adaptation of PFMs (TAPFM) that uses vision transformer (ViT) attention for MIL aggregation while optimizing both feature representations and attention weights. The proposed approach maintains separate computational graphs for the MIL aggregator and the PFM to create stable training dynamics that align with downstream task objectives during end-to-end adaptation. Evaluated on mutation prediction tasks for bladder cancer and lung adenocarcinoma across institutional and TCGA cohorts, TAPFM consistently outperforms conventional approaches, with H-Optimus-0 (TAPFM) outperforming the benchmarks. TAPFM effectively handles multi-label classification of actionable mutations as well. Thus, TAPFM makes adaptation of powerful pre-trained PFMs practical on standard hardware for various clinical applications.
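As a rough illustration of attention-based MIL aggregation in general, the sketch below turns per-tile scores into softmax weights and pools tile feature vectors into one slide-level vector. It is not the TAPFM method: the function name `attention_pool`, the toy features, and the use of plain scalar scores in place of ViT attention are all assumptions made for illustration, and the separate computational graphs and gradient updates described in the abstract are omitted.

```python
import math


def attention_pool(tile_features, tile_scores):
    """Pool tile features into one slide vector using softmax weights.

    tile_features: list of equal-length feature vectors, one per tile.
    tile_scores:   list of raw attention scores, one per tile (hypothetical
                   stand-in for attention produced by a vision transformer).
    Returns (weights, slide_vector).
    """
    if not tile_features or len(tile_features) != len(tile_scores):
        raise ValueError("need one score per tile and at least one tile")

    # Numerically stable softmax over the tile scores.
    max_score = max(tile_scores)
    exps = [math.exp(s - max_score) for s in tile_scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum of tile features, dimension by dimension.
    dim = len(tile_features[0])
    slide_vector = [
        sum(w * feats[d] for w, feats in zip(weights, tile_features))
        for d in range(dim)
    ]
    return weights, slide_vector


# Toy example: three tiles with two-dimensional features and equal scores,
# so every tile contributes equally to the slide-level vector.
tiles = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [0.0, 0.0, 0.0]
weights, slide_vector = attention_pool(tiles, scores)
```

In a weakly supervised setting, only the pooled slide vector is compared against the WSI-level label, which is why the weights matter: they decide which tiles drive the prediction.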

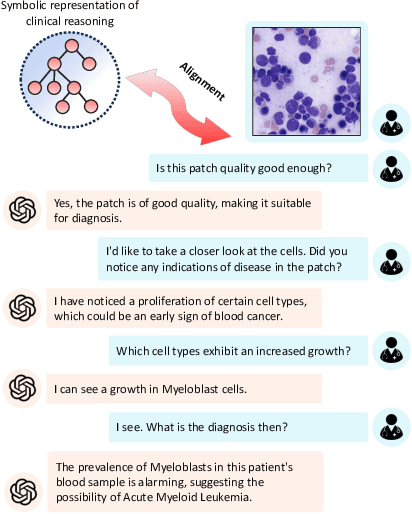

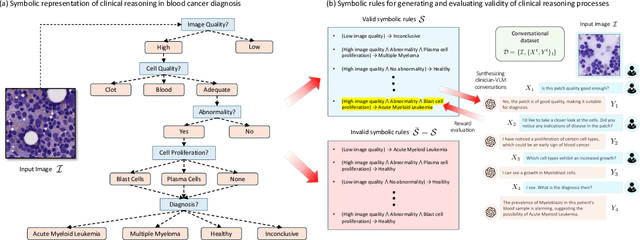

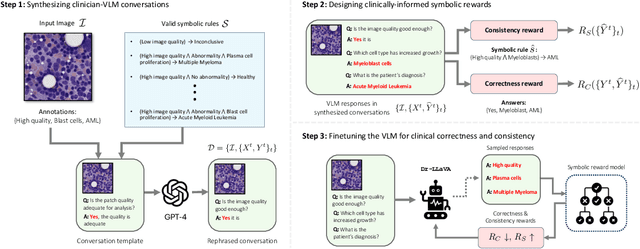

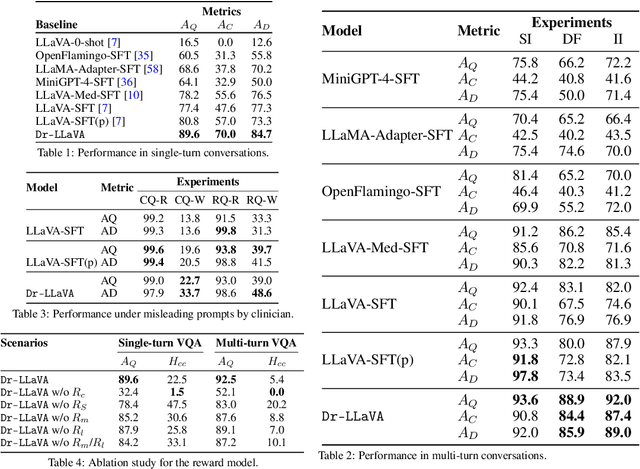

Dr-LLaVA: Visual Instruction Tuning with Symbolic Clinical Grounding

May 29, 2024

Abstract: Vision-Language Models (VLMs) can support clinicians by analyzing medical images and engaging in natural language interactions to assist in diagnostic and treatment tasks. However, VLMs often exhibit "hallucinogenic" behavior, generating textual outputs not grounded in contextual multimodal information. This challenge is particularly pronounced in the medical domain, where we require VLM outputs not only to be accurate in single interactions but also to be consistent with clinical reasoning and diagnostic pathways throughout multi-turn conversations. For this purpose, we propose a new alignment algorithm that uses symbolic representations of clinical reasoning to ground VLMs in medical knowledge. These representations are utilized to (i) generate GPT-4-guided visual instruction tuning data at scale, simulating clinician-VLM conversations with demonstrations of clinical reasoning, and (ii) create an automatic reward function that evaluates the clinical validity of VLM generations throughout clinician-VLM interactions. Our algorithm eliminates the need for human involvement in training data generation or reward model construction, reducing costs compared to standard reinforcement learning with human feedback (RLHF). We apply our alignment algorithm to develop Dr-LLaVA, a conversational VLM finetuned for analyzing bone marrow pathology slides, demonstrating strong performance in multi-turn medical conversations.
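To make the idea of an automatic, rule-based reward concrete, here is a minimal sketch that scores a multi-turn conversation by checking whether the sequence of answers follows an allowed reasoning path. Everything in it is hypothetical: the three-step structure, the placeholder answer labels, the `VALID_PATHS` set, and the binary scoring are illustrative assumptions, not the symbolic representation or reward used for Dr-LLaVA.

```python
# Hypothetical symbolic rules: each tuple is one allowed sequence of answers
# across three conversation turns (image quality, finding, conclusion).
# The labels are placeholders, not clinical categories from the paper.
VALID_PATHS = {
    ("inadequate", "not_assessable", "inconclusive"),
    ("adequate", "normal", "no_disease"),
    ("adequate", "abnormal", "disease"),
}


def consistency_reward(answers):
    """Return 1.0 if the answer sequence matches an allowed path, else 0.0."""
    return 1.0 if tuple(answers) in VALID_PATHS else 0.0


# A conversation whose answers agree with each other earns the reward.
consistent = consistency_reward(["adequate", "abnormal", "disease"])

# A conversation that reports an inadequate image but still asserts a
# diagnosis contradicts the rules and earns nothing.
inconsistent = consistency_reward(["inadequate", "abnormal", "disease"])
```

Because the check is a lookup against fixed rules, it needs no human rater at scoring time, which is the property the abstract highlights.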

Aligning Synthetic Medical Images with Clinical Knowledge using Human Feedback

Jun 16, 2023

Abstract: Generative models capable of capturing nuanced clinical features in medical images hold great promise for facilitating clinical data sharing, enhancing rare disease datasets, and efficiently synthesizing annotated medical images at scale. Despite their potential, assessing the quality of synthetic medical images remains a challenge. While modern generative models can synthesize visually-realistic medical images, the clinical validity of these images may be called into question. Domain-agnostic scores, such as FID score, precision, and recall, cannot incorporate clinical knowledge and are, therefore, not suitable for assessing clinical sensibility. Additionally, there are numerous unpredictable ways in which generative models may fail to synthesize clinically plausible images, making it challenging to anticipate potential failures and manually design scores for their detection. To address these challenges, this paper introduces a pathologist-in-the-loop framework for generating clinically-plausible synthetic medical images. Starting with a diffusion model pretrained using real images, our framework comprises three steps: (1) evaluating the generated images by expert pathologists to assess whether they satisfy clinical desiderata, (2) training a reward model that predicts the pathologist feedback on new samples, and (3) incorporating expert knowledge into the diffusion model by using the reward model to inform a finetuning objective. We show that human feedback significantly improves the quality of synthetic images in terms of fidelity, diversity, utility in downstream applications, and plausibility as evaluated by experts.

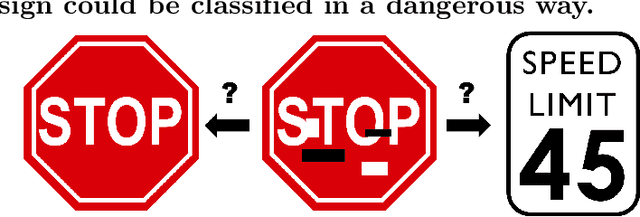

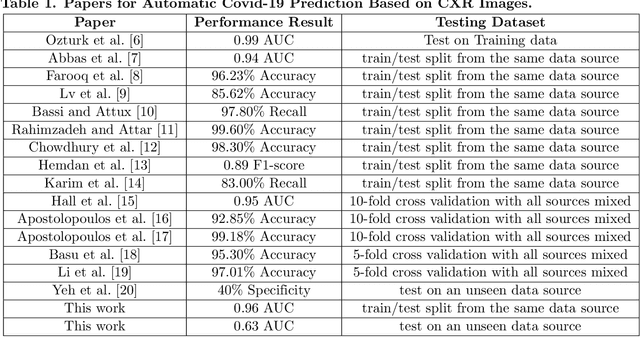

Deep Learning Models May Spuriously Classify Covid-19 from X-ray Images Based on Confounders

Jan 08, 2021

Abstract: Identifying who is infected with the Covid-19 virus is critical for controlling its spread. X-ray machines are widely available worldwide and can quickly provide images that can be used for diagnosis. A number of recent studies claim it may be possible to build highly accurate models, using deep learning, to detect Covid-19 from chest X-ray images. This paper explores the robustness and generalization ability of convolutional neural network models in diagnosing Covid-19 disease from frontal-view (AP/PA), raw chest X-ray images that were lung field cropped. Some concerning observations are made about high performing models that have learned to rely on confounding features related to the data source, rather than the patient's lung pathology, when differentiating between Covid-19 positive and negative labels. Specifically, these models likely made diagnoses based on confounding factors such as patient age or image processing artifacts, rather than medically relevant information.

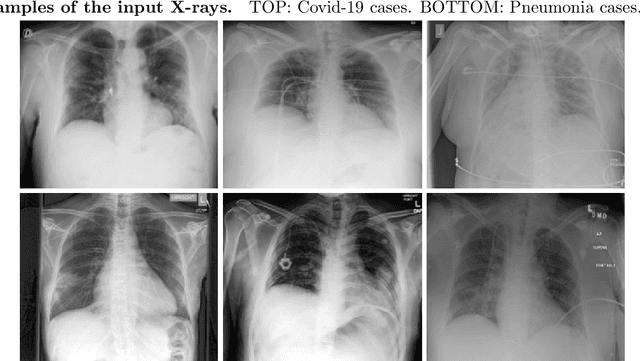

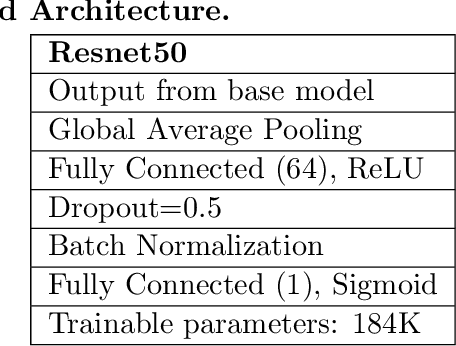

Finding Covid-19 from Chest X-rays using Deep Learning on a Small Dataset

Apr 13, 2020

Abstract: Testing for COVID-19 has been unable to keep up with demand. Further, the false negative rate is projected to be as high as 30%, and test results can take some time to obtain. X-ray machines are widely available and provide images for diagnosis quickly. This paper explores how useful chest X-ray images can be in diagnosing COVID-19 disease. We have obtained 122 chest X-rays of COVID-19 and over 4,000 chest X-rays of viral and bacterial pneumonia. A pretrained deep convolutional neural network has been tuned on 102 COVID-19 cases and 102 other pneumonia cases in a 10-fold cross validation. All 102 COVID-19 cases were correctly classified, with 8 false positives, resulting in an AUC of 0.997. On a test set of 20 unseen COVID-19 cases, all were correctly classified, and more than 95% of 4171 other pneumonia examples were correctly classified. This study has flaws, most critically a lack of information about where in the disease process the COVID-19 cases were and the small data set size. More COVID-19 case images will enable a better answer to the question of how useful chest X-rays can be for diagnosing COVID-19 (so please send them).
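The cross-validation counts reported in this abstract can be turned into standard confusion-matrix metrics. The sketch below does that arithmetic under one assumption that the abstract does not state outright: that the 8 false positives all come from the 102 other-pneumonia cases used in cross-validation. The helper name `binary_metrics` is illustrative. The reported AUC of 0.997 depends on the model's ranking of scores and cannot be recovered from these counts.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute sensitivity, specificity, and accuracy from confusion counts."""
    return {
        "sensitivity": tp / (tp + fn),   # fraction of positives detected
        "specificity": tn / (tn + fp),   # fraction of negatives rejected
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
    }


# Counts taken from the abstract's cross-validation result.
tp, fn = 102, 0        # all 102 COVID-19 cases correctly classified
fp = 8                 # 8 false positives
tn = 102 - fp          # assumption: false positives are among the 102 others

metrics = binary_metrics(tp, fn, fp, tn)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With only 204 images in cross-validation, each misclassified case moves accuracy by roughly half a percentage point, which is consistent with the abstract's own caveat about the small data set size.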
