Ghulam Jilani Quadri

Understanding Bias in Perceiving Dimensionality Reduction Projections

Jul 28, 2025

Abstract: Selecting the dimensionality reduction technique that faithfully represents the structure is essential for reliable visual communication and analytics. In reality, however, practitioners favor projections for other attractions, such as aesthetics and visual saliency, over the projection's structural faithfulness, a bias we define as visual interestingness. In this research, we conduct a user study that (1) verifies the existence of such bias and (2) explains why the bias exists. Our study suggests that visual interestingness biases practitioners' preferences when selecting projections for analysis, and this bias intensifies with color-encoded labels and shorter exposure time. Based on our findings, we discuss strategies to mitigate bias in perceiving and interpreting DR projections.

CLAMS: A Cluster Ambiguity Measure for Estimating Perceptual Variability in Visual Clustering

Aug 11, 2023

Abstract: Visual clustering is a common perceptual task in scatterplots that supports diverse analytics tasks (e.g., cluster identification). However, even with the same scatterplot, the ways of perceiving clusters (i.e., conducting visual clustering) can differ due to the differences among individuals and ambiguous cluster boundaries. Although such perceptual variability casts doubt on the reliability of data analysis based on visual clustering, we lack a systematic way to efficiently assess this variability. In this research, we study perceptual variability in conducting visual clustering, which we call Cluster Ambiguity. To this end, we introduce CLAMS, a data-driven visual quality measure for automatically predicting cluster ambiguity in monochrome scatterplots. We first conduct a qualitative study to identify key factors that affect the visual separation of clusters (e.g., proximity or size difference between clusters). Based on study findings, we deploy a regression module that estimates the human-judged separability of two clusters. Then, CLAMS predicts cluster ambiguity by analyzing the aggregated results of all pairwise separability between clusters that are generated by the module. CLAMS outperforms widely-used clustering techniques in predicting ground truth cluster ambiguity. Meanwhile, CLAMS exhibits performance on par with human annotators. We conclude our work by presenting two applications for optimizing and benchmarking data mining techniques using CLAMS. The interactive demo of CLAMS is available at clusterambiguity.dev.
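The abstract describes aggregating pairwise separability scores into a single ambiguity value but does not state the aggregation rule. The sketch below is one plausible reading, not the authors' implementation: it assumes each pairwise score is a probability in [0, 1] that two clusters are perceived as separate, scores each pair by binary entropy (highest when the score is 0.5), and averages over pairs. The function names `binary_entropy` and `cluster_ambiguity` are hypothetical.

```python
import math


def binary_entropy(p):
    """Binary entropy in bits; 0 when p is 0 or 1, 1 when p is 0.5."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def cluster_ambiguity(pairwise_separability):
    """Average per-pair entropy over all cluster pairs.

    ASSUMPTION: entropy-then-mean is an illustrative aggregation,
    not a rule taken from the abstract.
    """
    scores = list(pairwise_separability)
    if not scores:
        return 0.0  # no cluster pairs, so nothing is ambiguous
    return sum(binary_entropy(p) for p in scores) / len(scores)
```

Under this assumption, a scatterplot whose cluster pairs are all clearly separated or clearly merged scores near 0, while pairs that viewers would split about evenly push the score toward 1.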

Make Your Bone Great Again : A study on Osteoporosis Classification

Jul 17, 2017

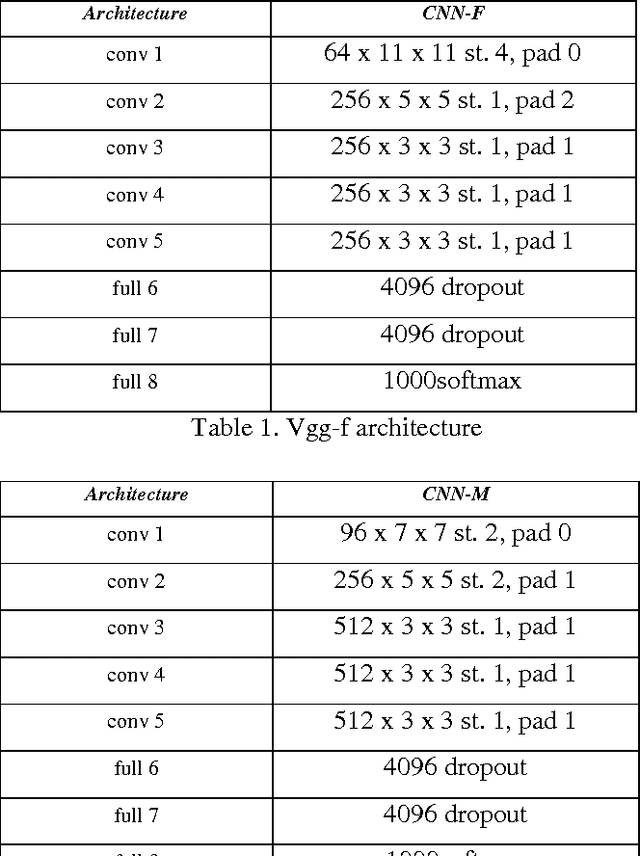

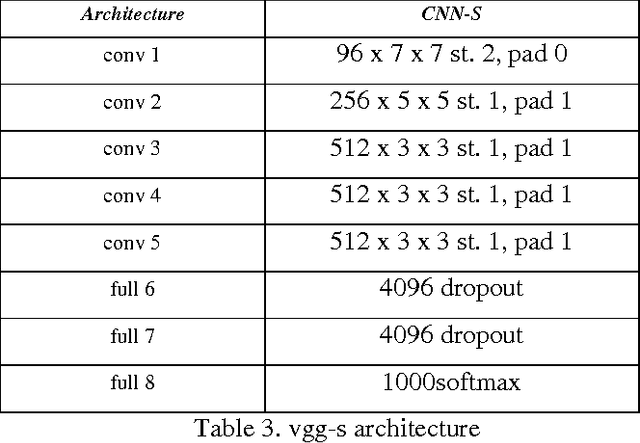

Abstract: Osteoporosis can be identified by looking at 2D x-ray images of the bone. The high degree of similarity between images of a healthy bone and a diseased one makes classification a challenge. A good bone texture characterization technique is essential for identifying osteoporosis cases. Standard texture feature extraction techniques such as Local Binary Pattern (LBP) and Gray Level Co-occurrence Matrix (GLCM) have been used for this purpose. In this paper, we compare deep features extracted from a convolutional neural network against these traditional features. Our results show that deep features have more discriminative power, as classifiers trained on them always outperform the ones trained on traditional features.
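For readers unfamiliar with the traditional features named above, here is a minimal sketch of the basic 3x3 Local Binary Pattern descriptor in plain Python. It is an illustration of the general technique, not the paper's pipeline: the neighbor ordering, the `>=` comparison, and the function names `lbp_codes` and `lbp_histogram` are assumptions, and the image is taken to be a 2D list of grayscale integers.

```python
def lbp_codes(image):
    """Return 8-bit LBP codes for every interior pixel of a 2D grayscale list."""
    # Neighbor offsets, clockwise from the top-left pixel (an assumed ordering).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        row_codes = []
        for c in range(1, cols - 1):
            center = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # Set the bit when the neighbor is at least as bright as the center.
                if image[r + dr][c + dc] >= center:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes


def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = [0] * 256
    for row in lbp_codes(image):
        for code in row:
            hist[code] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

The resulting 256-value histogram is the kind of hand-crafted feature vector a classifier could be trained on, in contrast to features taken from a network's intermediate layers.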
