Qingyu Yang

Wavelet-Enhanced Desnowing: A Novel Single Image Restoration Approach for Traffic Surveillance under Adverse Weather Conditions

Mar 03, 2025

Abstract:Image restoration under adverse weather conditions refers to the process of removing degradation caused by weather particles while improving visual quality. Most existing deweathering methods rely on increasing the network scale and data volume to achieve better performance, which requires more expensive computing power. Many methods also generalize poorly to specific applications. In traffic surveillance scenarios, the main challenges are snow removal and veil effect elimination. In this paper, we propose a wavelet-enhanced snow removal method that uses a Dual-Tree Complex Wavelet Transform feature enhancement module and a dynamic convolution acceleration module to address snow degradation in surveillance images. We also use a residual learning restoration module to remove veil effects caused by rain, snow, and fog. The proposed architecture extracts and analyzes information from snow-covered regions, significantly improving snow removal performance, while the residual learning restoration module removes veiling effects in images, enhancing clarity and detail. Experiments show that the method performs better than some popular desnowing methods. Our approach also demonstrates effectiveness and accuracy when applied to real traffic surveillance images.

Zero-shot Load Forecasting for Integrated Energy Systems: A Large Language Model-based Framework with Multi-task Learning

Feb 24, 2025

Abstract:The growing penetration of renewable energy sources in power systems has increased the complexity and uncertainty of load forecasting, especially for integrated energy systems with multiple energy carriers. Traditional forecasting methods heavily rely on historical data and exhibit limited transferability across different scenarios, posing significant challenges for emerging applications in smart grids and energy internet. This paper proposes the TSLLM-Load Forecasting Mechanism, a novel zero-shot load forecasting framework based on large language models (LLMs) to address these challenges. The framework consists of three key components: a data preprocessing module that handles multi-source energy load data, a time series prompt generation module that bridges the semantic gap between energy data and LLMs through multi-task learning and similarity alignment, and a prediction module that leverages pre-trained LLMs for accurate forecasting. The framework's effectiveness was validated on a real-world dataset comprising load profiles from 20 Australian solar-powered households, demonstrating superior performance in both conventional and zero-shot scenarios. In conventional testing, our method achieved a Mean Squared Error (MSE) of 0.4163 and a Mean Absolute Error (MAE) of 0.3760, outperforming existing approaches by at least 8%. In zero-shot prediction experiments across 19 households, the framework maintained consistent accuracy with a total MSE of 11.2712 and MAE of 7.6709, showing at least 12% improvement over current methods. The results validate the framework's potential for accurate and transferable load forecasting in integrated energy systems, particularly beneficial for renewable energy integration and smart grid applications.
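
For readers unfamiliar with the two error metrics quoted in this abstract, the following is a minimal sketch of their standard definitions in Python. The function names and the sample values are hypothetical and chosen for illustration only; they are not taken from the paper, and the paper's own evaluation code may differ (for example in how the load data are normalized).

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences between actual and predicted values."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_error(actual, predicted):
    """Average of absolute differences between actual and predicted values."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative (made-up) load values, not data from the paper.
actual_load = [1.0, 2.0, 3.0]
predicted_load = [1.0, 2.0, 5.0]
mse_value = mean_squared_error(actual_load, predicted_load)
mae_value = mean_absolute_error(actual_load, predicted_load)
```

MSE penalizes large errors more heavily than MAE because each difference is squared before averaging, which is why the two metrics are usually reported together.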

MIPI 2023 Challenge on RGBW Fusion: Methods and Results

Apr 24, 2023

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for an in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). With the success of the 1st MIPI Workshop@ECCV 2022, we introduce the second MIPI challenge, including four tracks focusing on novel image sensors and imaging algorithms. This paper summarizes and reviews the RGBW Joint Fusion and Denoise track on MIPI 2023. In total, 69 participants were successfully registered, and 4 teams submitted results in the final testing phase. The final results are evaluated using objective metrics, including PSNR, SSIM, LPIPS, and KLD. A detailed description of the top three models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://mipi-challenge.org/MIPI2023/.
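
PSNR, the first of the objective metrics listed in this abstract, can be sketched as follows. This is a generic textbook definition written in plain Python, not the challenge's official scoring script; the function name `psnr`, the flat pixel lists, and the default peak value of 255 are assumptions made for illustration.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences.

    `max_value` is the largest possible pixel value (assumed 255 for 8-bit data).
    """
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - x) ** 2 for r, x in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no error, PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Illustrative (made-up) pixel values, not challenge data.
score = psnr([100, 100], [110, 90])
```

Higher PSNR indicates that the restored image is closer to the reference. SSIM, LPIPS, and KLD capture structural, perceptual, and distributional differences respectively and are not covered by this sketch.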

MIPI 2023 Challenge on RGBW Remosaic: Methods and Results

Apr 20, 2023

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for an in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). With the success of the 1st MIPI Workshop@ECCV 2022, we introduce the second MIPI challenge, including four tracks focusing on novel image sensors and imaging algorithms. This paper summarizes and reviews the RGBW Joint Remosaic and Denoise track on MIPI 2023. In total, 81 participants were successfully registered, and 4 teams submitted results in the final testing phase. The final results are evaluated using objective metrics, including PSNR, SSIM, LPIPS, and KLD. A detailed description of the top three models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://mipi-challenge.org/MIPI2023/.

MIPI 2022 Challenge on RGBW Sensor Fusion: Dataset and Report

Sep 27, 2022

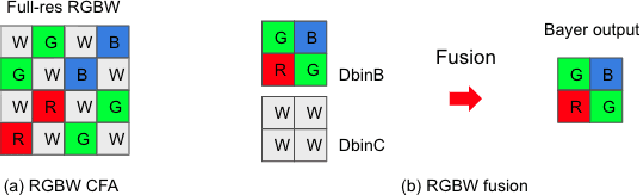

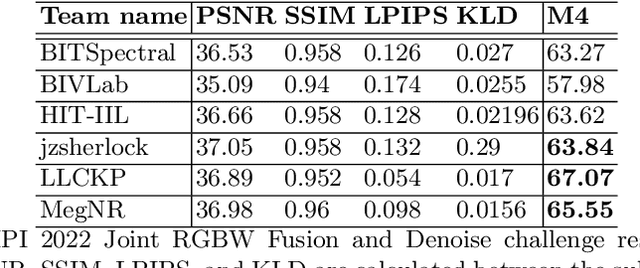

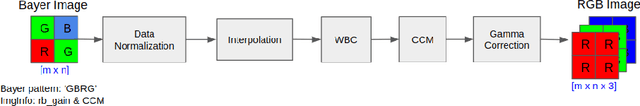

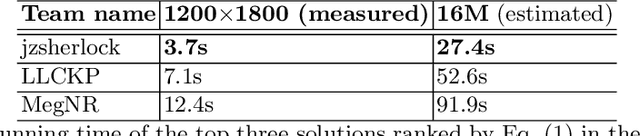

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge, including five tracks focusing on novel image sensors and imaging algorithms. In this paper, RGBW Joint Fusion and Denoise, one of the five tracks, working on the fusion of binning-mode RGBW to Bayer, is introduced. The participants were provided with a new dataset including 70 (training) and 15 (validation) scenes of high-quality RGBW and Bayer pairs. In addition, for each scene, RGBW of different noise levels was provided at 24dB and 42dB. All the data were captured using an RGBW sensor in both outdoor and indoor conditions. The final results are evaluated using objective metrics, including PSNR, SSIM, LPIPS, and KLD. A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022.

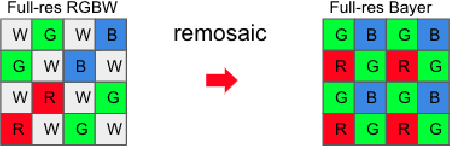

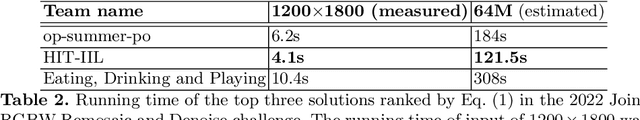

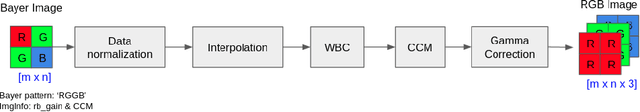

MIPI 2022 Challenge on RGBW Sensor Re-mosaic: Dataset and Report

Sep 15, 2022

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge including five tracks focusing on novel image sensors and imaging algorithms. In this paper, RGBW Joint Remosaic and Denoise, one of the five tracks, working on the interpolation of RGBW CFA to Bayer at full resolution, is introduced. The participants were provided with a new dataset including 70 (training) and 15 (validation) scenes of high-quality RGBW and Bayer pairs. In addition, for each scene, RGBW of different noise levels was provided at 0dB, 24dB, and 42dB. All the data were captured using an RGBW sensor in both outdoor and indoor conditions. The final results are evaluated using objective metrics including PSNR, SSIM, LPIPS, and KLD. A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022.

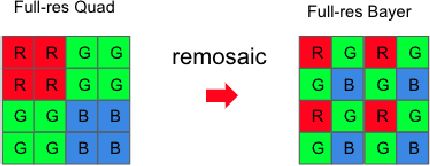

MIPI 2022 Challenge on Quad-Bayer Re-mosaic: Dataset and Report

Sep 15, 2022

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge, including five tracks focusing on novel image sensors and imaging algorithms. In this paper, Quad Joint Remosaic and Denoise, one of the five tracks, working on the interpolation of Quad CFA to Bayer at full resolution, is introduced. The participants were provided a new dataset, including 70 (training) and 15 (validation) scenes of high-quality Quad and Bayer pairs. In addition, for each scene, Quad of different noise levels was provided at 0dB, 24dB, and 42dB. All the data were captured using a Quad sensor in both outdoor and indoor conditions. The final results are evaluated using objective metrics, including PSNR, SSIM, LPIPS, and KLD. A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022.

MIPI 2022 Challenge on RGB+ToF Depth Completion: Dataset and Report

Sep 15, 2022

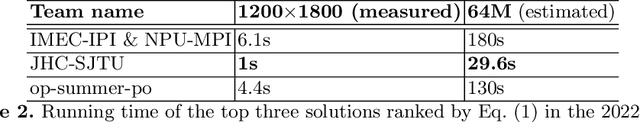

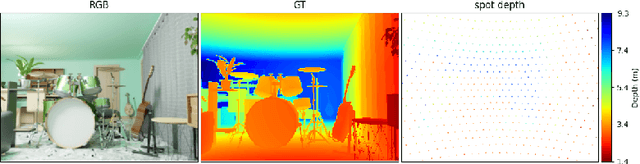

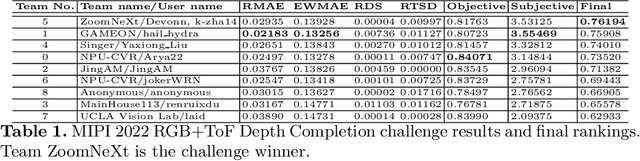

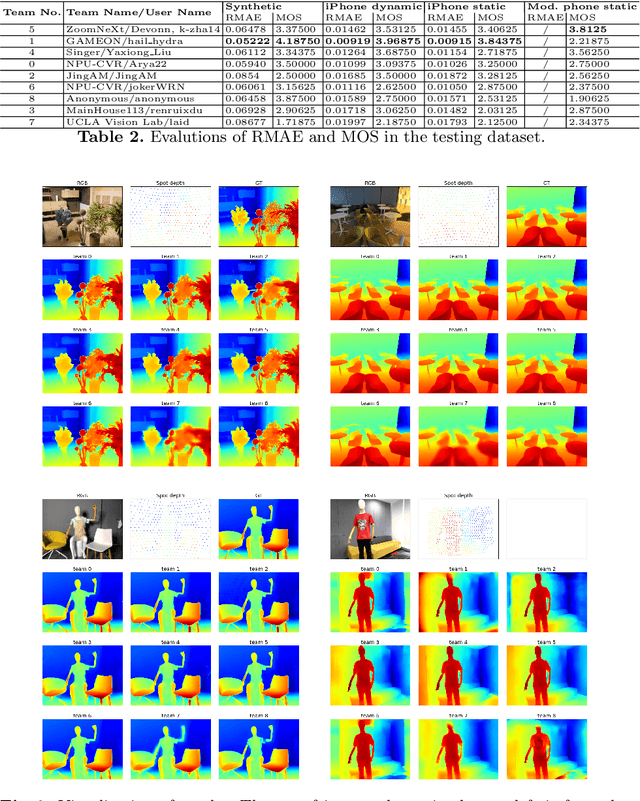

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge including five tracks focusing on novel image sensors and imaging algorithms. In this paper, RGB+ToF Depth Completion, one of the five tracks, working on the fusion of RGB sensor and ToF sensor (with spot illumination), is introduced. The participants were provided with a new dataset called TetrasRGBD, which contains 18k pairs of high-quality synthetic RGB+Depth training data and 2.3k pairs of testing data from mixed sources. All the data are collected in an indoor scenario. We require that all methods run in real time on desktop GPUs. The final results are evaluated using objective metrics and, subjectively, Mean Opinion Score (MOS). A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022.

MIPI 2022 Challenge on Under-Display Camera Image Restoration: Methods and Results

Sep 15, 2022

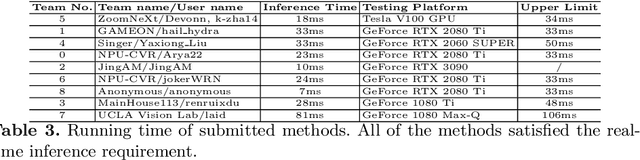

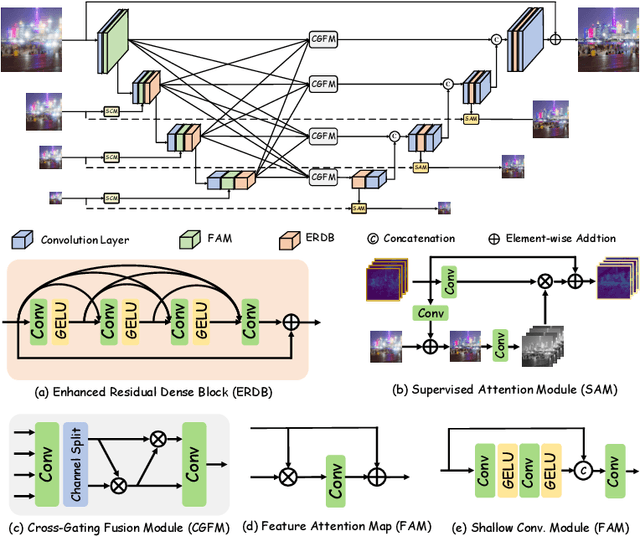

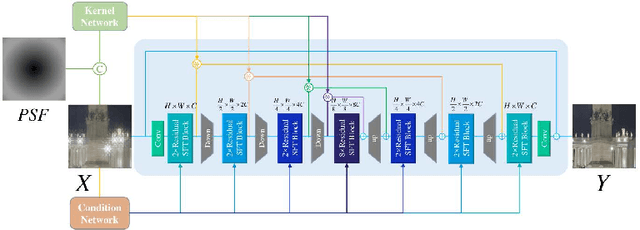

Abstract:Developing and integrating advanced image sensors with novel algorithms in camera systems are prevalent with the increasing demand for computational photography and imaging on mobile platforms. However, the lack of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). To bridge the gap, we introduce the first MIPI challenge including five tracks focusing on novel image sensors and imaging algorithms. In this paper, we summarize and review the Under-Display Camera (UDC) Image Restoration track on MIPI 2022. In total, 167 participants were successfully registered, and 19 teams submitted results in the final testing phase. The developed solutions in this challenge achieved state-of-the-art performance on Under-Display Camera Image Restoration. A detailed description of all models developed in this challenge is provided in this paper. More details of this challenge and the link to the dataset can be found at https://github.com/mipi-challenge/MIPI2022.

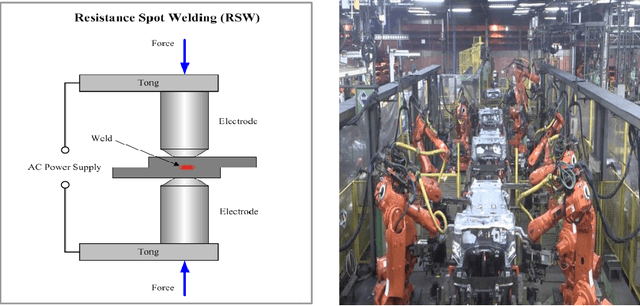

Statistical Method to Model the Quality Inconsistencies of the Welding Process

Feb 24, 2019

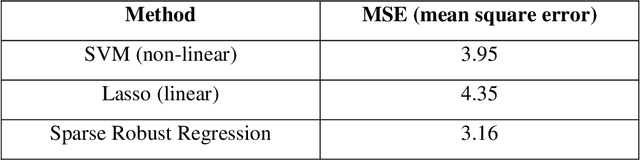

Abstract:Resistance Spot Welding (RSW) is an important manufacturing process that is attracting increasing attention in the automotive industry. However, due to the complexity of the manufacturing process, the corresponding product quality shows significant inconsistencies even under the same process setup. This paper develops a statistical method that captures the inconsistency of welding quality measurements (e.g., nugget width) based on process parameters, in order to monitor product quality efficiently. The proposed method provides engineering efficiency and cost-saving benefits by reducing the physical testing required for weldability and verification. The developed method is applied to a real-world welding process.
