Qian Xiao

Who Is Lagging Behind: Profiling Student Behaviors with Graph-Level Encoding in Curriculum-Based Online Learning Systems

Aug 26, 2025

Abstract: The surge in the adoption of Intelligent Tutoring Systems (ITSs) in education, while being integral to curriculum-based learning, can inadvertently exacerbate performance gaps. To address this problem, student profiling becomes crucial for tracking progress, identifying struggling students, and alleviating disparities among students. Such profiling requires measuring student behaviors and performance across different aspects, such as content coverage, learning intensity, and proficiency in different concepts within a learning topic. In this study, we introduce CTGraph, a graph-level representation learning approach to profile learner behaviors and performance in a self-supervised manner. Our experiments demonstrate that CTGraph can provide a holistic view of student learning journeys, accounting for different aspects of student behaviors and performance, as well as variations in their learning paths as aligned to the curriculum structure. We also show that our approach can identify struggling students and provide comparative analysis of diverse groups to pinpoint when and where students are struggling. As such, our approach opens more opportunities to empower educators with rich insights into student learning journeys and paves the way for more targeted interventions.

Chart-HQA: A Benchmark for Hypothetical Question Answering in Charts

Mar 07, 2025

Abstract: Multimodal Large Language Models (MLLMs) have garnered significant attention for their strong visual-semantic understanding. Most existing chart benchmarks evaluate MLLMs' ability to parse information from charts to answer questions. However, they overlook the inherent output biases of MLLMs, where models rely on their parametric memory to answer questions rather than genuinely understanding the chart content. To address this limitation, we introduce a novel Chart Hypothetical Question Answering (HQA) task, which imposes assumptions on the same question to compel models to engage in counterfactual reasoning based on the chart content. Furthermore, we introduce HAI, a human-AI interactive data synthesis approach that leverages the efficient text-editing capabilities of LLMs alongside human expert knowledge to generate diverse and high-quality HQA data at a low cost. Using HAI, we construct Chart-HQA, a challenging benchmark synthesized from publicly available data sources. Evaluation results on 18 MLLMs of varying model sizes reveal that current models face significant generalization challenges and exhibit imbalanced reasoning performance on the HQA task.

EDMB: Edge Detector with Mamba

Jan 08, 2025

Abstract: Transformer-based models have made significant progress in edge detection, but their high computational cost is prohibitive. Recently, vision Mamba models have shown excellent ability in efficiently capturing long-range dependencies. Drawing inspiration from this, we propose a novel edge detector with Mamba, termed EDMB, to efficiently generate high-quality multi-granularity edges. In EDMB, Mamba is combined with a global-local architecture, so it can focus on both global information and fine-grained cues. The fine-grained cues play a crucial role in edge detection but are usually ignored by ordinary Mamba. We design a novel decoder to construct learnable Gaussian distributions by fusing global features and fine-grained features. The multi-granularity edges are then generated by sampling from these distributions. In order to make multi-granularity edges applicable to single-label data, we introduce an Evidence Lower Bound loss to supervise the learning of the distributions. On the multi-label dataset BSDS500, our proposed EDMB achieves a competitive single-granularity ODS of 0.837 and multi-granularity ODS of 0.851 without multi-scale testing or extra PASCAL-VOC data. Remarkably, EDMB can be extended to single-label datasets such as NYUDv2 and BIPED. The source code is available at https://github.com/Li-yachuan/EDMB.

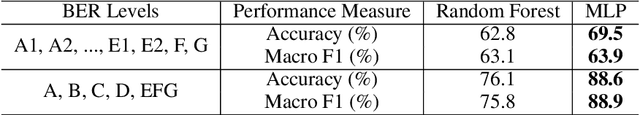

Model Failure or Data Corruption? Exploring Inconsistencies in Building Energy Ratings with Self-Supervised Contrastive Learning

Apr 14, 2024

Abstract: Building Energy Rating (BER) stands as a pivotal metric, enabling building owners, policymakers, and urban planners to understand the energy-saving potential through improving building energy efficiency. As such, enhancing buildings' BER levels is expected to directly contribute to the reduction of carbon emissions and promote climate improvement. Nonetheless, the BER assessment process is vulnerable to missing and inaccurate measurements. In this study, we introduce CLEAR, a data-driven approach designed to scrutinize the inconsistencies in BER assessments through self-supervised contrastive learning. We validated the effectiveness of CLEAR using a dataset representing Irish building stocks. Our experiments uncovered evidence of inconsistent BER assessments, highlighting measurement data corruption within this real-world dataset.

LuminLab: An AI-Powered Building Retrofit and Energy Modelling Platform

Apr 14, 2024

Abstract: This paper describes the technical and conceptual development of the LuminLab platform, an online tool that integrates a purpose-fit human-centric AI chatbot and predictive energy model into a streamlined front-end that can rapidly produce and discuss building retrofit plans in natural language. The platform lets users engage, on demand, with a range of possible retrofit pathways tailored to their individual budget and building needs. Given the complicated and costly nature of building retrofit projects, which rely on a variety of stakeholder groups with differing goals and incentives, we feel that AI-powered tools such as this have the potential to pragmatically de-silo knowledge, improve communication, and empower individual homeowners to undertake incremental retrofit projects that might not happen otherwise.

Compact Twice Fusion Network for Edge Detection

Jul 11, 2023

Abstract: The significance of multi-scale features has been gradually recognized by the edge detection community. However, the fusion of multi-scale features increases the complexity of the model, which is not friendly to practical application. In this work, we propose a Compact Twice Fusion Network (CTFN) to fully integrate multi-scale features while maintaining the compactness of the model. CTFN includes two lightweight multi-scale feature fusion modules: a Semantic Enhancement Module (SEM) that can utilize the semantic information contained in coarse-scale features to guide the learning of fine-scale features, and a Pseudo Pixel-level Weighting (PPW) module that aggregates the complementary merits of multi-scale features by assigning weights to all features. Notwithstanding all this, the interference of texture noise makes the correct classification of some pixels still a challenge. For these hard samples, we propose a novel loss function, coined Dynamic Focal Loss, which reshapes the standard cross-entropy loss and dynamically adjusts the weights to correct the distribution of hard samples. We evaluate our method on three datasets, i.e., BSDS500, NYUDv2, and BIPEDv2. Compared with state-of-the-art methods, CTFN achieves competitive accuracy with fewer parameters and lower computational cost. Apart from the backbone, CTFN requires only 0.1M additional parameters, which reduces its computation cost to just 60% of other state-of-the-art methods. The code is available at https://github.com/Li-yachuan/CTFN-pytorch-master.

Global Structure Knowledge-Guided Relation Extraction Method for Visually-Rich Document

May 23, 2023

Abstract: Visual relation extraction (VRE) aims to extract relations between entities from visually-rich documents. Existing methods usually predict relations for each entity pair independently based on entity features but ignore the global structure information, i.e., dependencies between entity pairs. The absence of global structure information may make the model struggle to learn long-range relations and easily predict conflicting results. To alleviate such limitations, we propose a GlObal Structure knowledge-guided relation Extraction (GOSE) framework, which captures dependencies between entity pairs in an iterative manner. Given a scanned image of the document, GOSE first generates preliminary relation predictions on entity pairs. Second, it mines global structure knowledge based on prediction results of the previous iteration and further incorporates global structure knowledge into entity representations. This "generate-capture-incorporate" schema is performed multiple times so that entity representations and global structure knowledge can mutually reinforce each other. Extensive experiments show that GOSE not only outperforms previous methods in the standard fine-tuning setting but also shows promising superiority in cross-lingual learning, and even yields stronger data-efficient performance in the low-resource setting.
