Prerna Singh

Brain Age Group Classification Based on Resting State Functional Connectivity Metrics

Mar 27, 2025

Abstract: This study investigated age-related changes in functional connectivity using resting-state fMRI and explored the efficacy of traditional deep learning for classifying brain developmental stages (BDS). Functional connectivity was assessed using Seed-Based Phase Synchronization (SBPS) and Pearson correlation across 160 ROIs. Clustering was performed using t-SNE, and network topology was analyzed through graph-theoretic metrics. Adaptive learning was implemented to classify the age group by extracting bottleneck features through MobileNetV2. These deep features were embedded and classified using Random Forest and PCA. Results showed a shift in phase synchronization patterns from sensory-driven networks in youth to more distributed networks with aging. t-SNE revealed that SBPS provided the most distinct clustering of BDS. Global efficiency and participation coefficient followed an inverted U-shaped trajectory, while clustering coefficient and modularity exhibited a U-shaped pattern. MobileNet outperformed other models, achieving the highest classification accuracy for BDS. Aging was associated with reduced global integration and increased local connectivity, indicating functional network reorganization. While this study focused solely on functional connectivity from resting-state fMRI and a limited set of connectivity features, deep learning demonstrated superior classification performance, highlighting its potential for characterizing age-related brain changes.
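As a rough illustration of the Pearson-correlation step mentioned in the abstract, the sketch below builds an ROI-by-ROI connectivity matrix from a list of time series. It is a minimal sketch under stated assumptions, not the study's pipeline: the function names `pearson` and `connectivity_matrix` and the toy data are hypothetical, and the real analysis covers 160 ROIs with preprocessed fMRI signals.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences.

    Assumes neither sequence is constant (otherwise the denominator is zero).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def connectivity_matrix(roi_series):
    """Symmetric ROI-by-ROI correlation matrix from a list of time series."""
    n = len(roi_series)
    fc = [[1.0] * n for _ in range(n)]  # diagonal: each ROI with itself
    for i in range(n):
        for j in range(i + 1, n):
            r = pearson(roi_series[i], roi_series[j])
            fc[i][j] = fc[j][i] = r  # fill both halves of the matrix
    return fc

# Toy data (hypothetical): three ROIs, five time points each.
toy = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [2.0, 4.0, 6.0, 8.0, 10.0],  # rises with ROI 0
    [5.0, 4.0, 3.0, 2.0, 1.0],   # falls as ROI 0 rises
]
fc = connectivity_matrix(toy)
```

A matrix of this kind is what graph-theoretic metrics such as global efficiency or modularity would then be computed on, typically after thresholding.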

Optimizing open-domain question answering with graph-based retrieval augmented generation

Mar 04, 2025

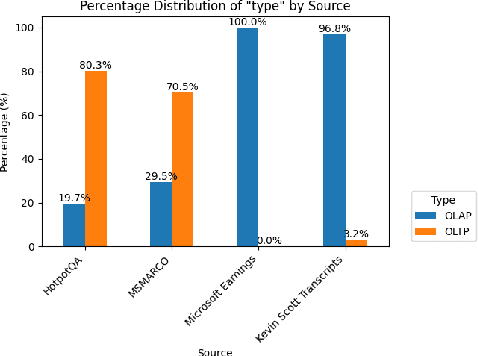

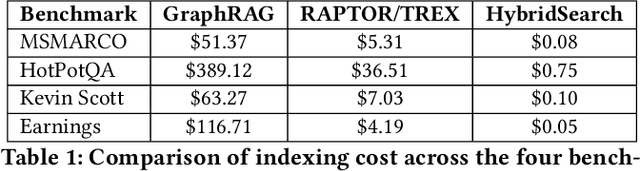

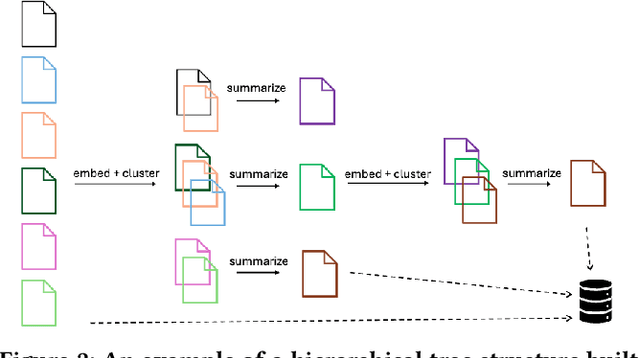

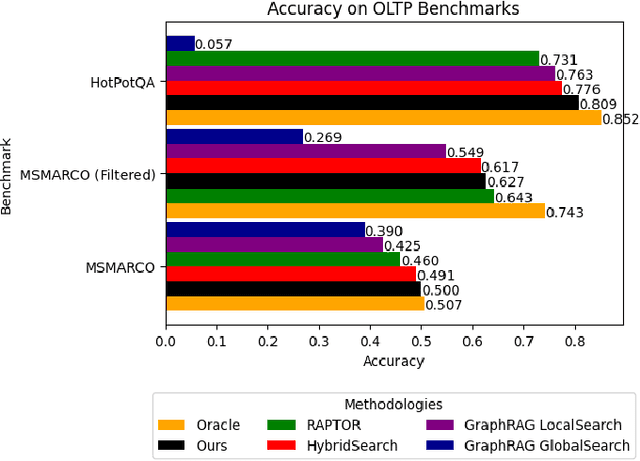

Abstract: In this work, we benchmark various graph-based retrieval-augmented generation (RAG) systems across a broad spectrum of query types, including OLTP-style (fact-based) and OLAP-style (thematic) queries, to address the complex demands of open-domain question answering (QA). Traditional RAG methods often fall short in handling nuanced, multi-document synthesis tasks. By structuring knowledge as graphs, we can facilitate the retrieval of context that captures greater semantic depth and enhances language model operations. We explore graph-based RAG methodologies and introduce TREX, a novel, cost-effective alternative that combines graph-based and vector-based retrieval techniques. Our benchmarking across four diverse datasets highlights the strengths of different RAG methodologies, demonstrates TREX's ability to handle multiple open-domain QA types, and reveals the limitations of current evaluation methods. In a real-world technical support case study, we demonstrate how TREX solutions can surpass conventional vector-based RAG in efficiently synthesizing data from heterogeneous sources. Our findings underscore the potential of augmenting large language models with advanced retrieval and orchestration capabilities, advancing scalable, graph-based AI solutions.

The RL/LLM Taxonomy Tree: Reviewing Synergies Between Reinforcement Learning and Large Language Models

Feb 02, 2024

Abstract: In this work, we review research studies that combine Reinforcement Learning (RL) and Large Language Models (LLMs), two areas that owe their momentum to the development of deep neural networks. We propose a novel taxonomy of three main classes based on the way that the two model types interact with each other. The first class, RL4LLM, includes studies where RL is leveraged to improve the performance of LLMs on tasks related to Natural Language Processing. RL4LLM is divided into two sub-categories depending on whether RL is used to directly fine-tune an existing LLM or to improve the prompt of the LLM. In the second class, LLM4RL, an LLM assists the training of an RL model that performs a task that is not inherently related to natural language. We further break down LLM4RL based on the component of the RL training framework that the LLM assists or replaces, namely reward shaping, goal generation, and policy function. Finally, in the third class, RL+LLM, an LLM and an RL agent are embedded in a common planning framework without either of them contributing to training or fine-tuning of the other. We further branch this class to distinguish between studies with and without natural language feedback. We use this taxonomy to explore the motivations behind the synergy of LLMs and RL and explain the reasons for its success, while pinpointing potential shortcomings and areas where further research is needed, as well as alternative methodologies that serve the same goal.
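The three classes and their sub-branches described in this abstract can be written down as a small nested structure. The sketch below is only an illustration of the tree's shape: the class and branch names come from the abstract, while the variable `TAXONOMY`, the function `render_tree`, and the root label are hypothetical.

```python
# Classes and sub-branches as named in the abstract.
TAXONOMY = {
    "RL4LLM": [
        "fine-tuning an existing LLM",
        "improving the prompt of the LLM",
    ],
    "LLM4RL": [
        "reward shaping",
        "goal generation",
        "policy function",
    ],
    "RL+LLM": [
        "with natural language feedback",
        "without natural language feedback",
    ],
}

def render_tree(taxonomy, root="RL/LLM Taxonomy Tree"):
    """Return the taxonomy as an indented, plain-text tree."""
    lines = [root]
    for class_name, branches in taxonomy.items():
        lines.append(f"  {class_name}")
        for branch in branches:
            lines.append(f"    {branch}")
    return "\n".join(lines)

print(render_tree(TAXONOMY))
```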

AI prediction of cardiovascular events using opportunistic epicardial adipose tissue assessments from CT calcium score

Jan 29, 2024

Abstract: Background: Recent studies have used basic epicardial adipose tissue (EAT) assessments (e.g., volume and mean HU) to predict risk of atherosclerosis-related, major adverse cardiovascular events (MACE). Objectives: Create novel, hand-crafted EAT features, 'fat-omics', to capture the pathophysiology of EAT and improve MACE prediction. Methods: We segmented EAT using a previously-validated deep learning method with optional manual correction. We extracted 148 radiomic features (morphological, spatial, and intensity) and used Cox elastic-net for feature reduction and prediction of MACE. Results: Traditional fat features gave marginal prediction (EAT-volume/EAT-mean-HU/BMI gave C-index 0.53/0.55/0.57, respectively). Significant improvement was obtained with 15 fat-omics features (C-index=0.69, test set). High-risk features included volume-of-voxels-having-elevated-HU-[-50, -30-HU] and HU-negative-skewness, both of which assess high HU, which has been implicated in fat inflammation. Other high-risk features include kurtosis-of-EAT-thickness, reflecting the heterogeneity of thicknesses, and EAT-volume-in-the-top-25%-of-the-heart, emphasizing adipose near the proximal coronary arteries. Kaplan-Meier plots of Cox-identified, high- and low-risk patients were well separated by the median of the fat-omics risk, with the high-risk group having an HR 2.4 times that of the low-risk group (P<0.001). Conclusion: Preliminary findings indicate an opportunity to use more finely tuned, explainable assessments on EAT for improved cardiovascular risk prediction.
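The C-index values quoted above measure how well a risk score ranks patients by time to event. As a minimal sketch of that metric (Harrell's concordance index for right-censored data), the code below counts comparable pairs and the fraction ordered correctly. It illustrates the metric only and is not the study's Cox elastic-net pipeline; the function name `concordance_index` and the toy values are hypothetical, and pairs with tied event times are simply skipped.

```python
def concordance_index(times, events, risks):
    """Harrell's C-index for right-censored survival data.

    times  : follow-up time per subject
    events : 1 if the event was observed, 0 if censored
    risks  : predicted risk score (higher means earlier expected event)
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored subject cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:  # subject i had the event first
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0   # higher risk, earlier event
                elif risks[i] == risks[j]:
                    concordant += 0.5   # tied risk scores count as half
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable

# Toy cohort (hypothetical): the third subject is censored.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]
perfect = concordance_index(times, events, [0.9, 0.7, 0.4, 0.1])
reversed_order = concordance_index(times, events, [0.1, 0.4, 0.7, 0.9])
uninformative = concordance_index(times, events, [0.5, 0.5, 0.5, 0.5])
```

On this scale 0.5 corresponds to a score that carries no ranking information and 1.0 to perfect ranking, which puts the reported 0.53 to 0.57 for traditional features and 0.69 for fat-omics in context.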

Insights into Age-Related Functional Brain Changes during Audiovisual Integration Tasks: A Comprehensive EEG Source-Based Analysis

Nov 27, 2023

Abstract: The seamless integration of visual and auditory information is a fundamental aspect of human cognition. Although age-related functional changes in Audio-Visual Integration (AVI) have been extensively explored in the past, thorough studies across various age groups remain insufficient. Previous studies have provided valuable insights into age-related AVI using EEG-based sensor data. However, these studies have been limited in their ability to capture spatial information related to brain source activation and their connectivity. To address these gaps, our study conducted a comprehensive audiovisual integration task with a specific focus on assessing the aging effects in various age groups, particularly middle-aged individuals. We presented visual, auditory, and audio-visual stimuli and recorded EEG data from Young (18-25 years), Transition (26-33 years), and Middle (34-42 years) age cohort healthy participants. We aimed to understand how aging affects brain activation and functional connectivity among hubs during audio-visual tasks. Our findings revealed delayed brain activation in middle-aged individuals, especially for bimodal stimuli. The superior temporal cortex and superior frontal gyrus showed significant changes in neuronal activation with aging. Lower frequency bands (theta and alpha) showed substantial changes with increasing age during AVI. Our findings also revealed that the AVI-associated brain regions can be clustered into five different brain networks using the k-means algorithm. Additionally, we observed increased functional connectivity in middle age, particularly in the frontal, temporal, and occipital regions. These results highlight the compensatory neural mechanisms involved in aging during cognitive tasks.

Brain Connectivity Features-based Age Group Classification using Temporal Asynchrony Audio-Visual Integration Task

May 01, 2023

Abstract: The process of integration of inputs from several sensory modalities in the human brain is referred to as multisensory integration. Age-related cognitive decline leads to a loss in the ability of the brain to conceive multisensory inputs. There has been considerable work done in the study of such cognitive changes for the old age groups. However, in the case of middle age groups, such analysis is limited. Motivated by this, in the current work, EEG-based functional connectivity during an audiovisual temporal asynchrony integration task for middle-aged groups is explored. Investigation has been carried out during different tasks such as unimodal audio, unimodal visual, and variations of audio-visual stimulus. A correlation-based functional connectivity analysis is done, and the changes among different age groups, including young (18-25 years), transition from young to middle age (25-33 years), and medium (33-41 years), are observed. Furthermore, features extracted from the connectivity graphs have been used to classify among the different age groups. Classification accuracies of $89.4\%$ and $88.4\%$ are obtained for the Audio and Audio-50-Visual stimuli cases with a Random Forest based classifier, thereby validating the efficacy of the proposed method.

Reorganization of resting state brain network functional connectivity across human brain developmental stages

Jun 16, 2022

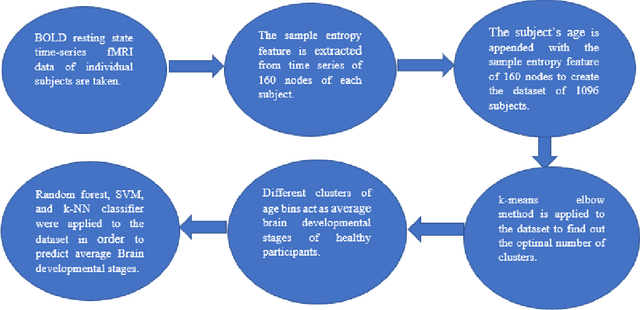

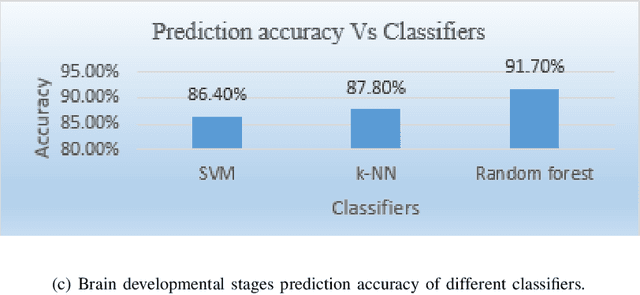

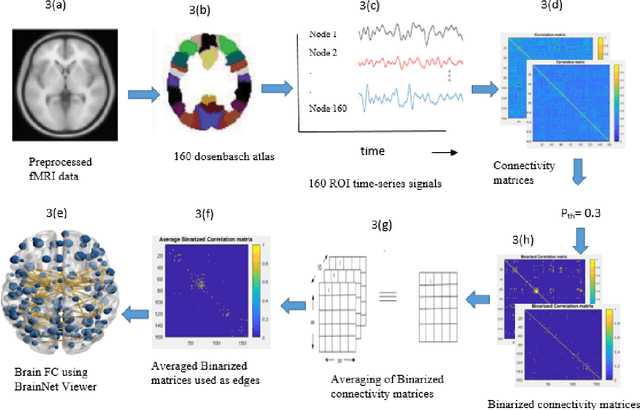

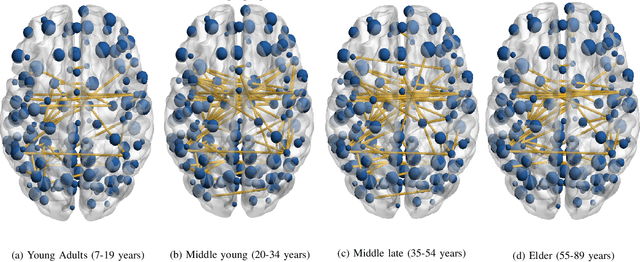

Abstract: The human brain is liable to undergo substantial alterations, anatomically and functionally, with aging. Cognitive brain aging can either be healthy or degenerative in nature. Such degeneration of cognitive ability can lead to disorders such as Alzheimer's disease, dementia, schizophrenia, and multiple sclerosis. Furthermore, the brain network goes through various changes during healthy aging, and it is an active area of research. In this study, we have investigated the resting-state functional connectivity of participants (in the age group of 7-89 years) using a publicly available HCP dataset. We have also explored how different brain networks are clustered using K-means clustering methods, which have been further validated by the t-SNE algorithm. The changes in overall resting-state brain functional connectivity with changes in brain developmental stages have also been explored using BrainNet Viewer. Then, within-cluster and between-cluster network changes with increasing age have been studied using linear regression, which ultimately shows a pattern of increase/decrease in the mean segregation of brain networks with healthy aging. Brain networks like the Default Mode Network, Cingulo-Opercular Network, Sensory Motor Network, and Cerebellum Network have shown decreased segregation, whereas the Frontal Parietal Network and Occipital Network show increased segregation with healthy aging. Our results strongly suggest that the brain has four brain developmental stages and that brain networks reorganize their functional connectivity during these brain developmental stages.
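The abstract does not state how segregation is computed. One commonly used definition, assumed here purely for illustration, compares a network's mean within-network connectivity with its mean connectivity to all other networks: (within - between) / within. The function name `system_segregation`, the network labels, and the toy matrix below are hypothetical.

```python
def system_segregation(fc, labels, network):
    """Segregation of one network: (mean within - mean between) / mean within.

    fc      : square connectivity matrix (list of lists)
    labels  : network label for each ROI, same order as fc rows
    network : label of the network to score
    Assumes the network has at least two ROIs and nonzero mean within-network
    connectivity.
    """
    within, between = [], []
    n = len(fc)
    for i in range(n):
        if labels[i] != network:
            continue
        for j in range(n):
            if i == j:
                continue  # skip self-connections on the diagonal
            if labels[j] == network:
                within.append(fc[i][j])
            else:
                between.append(fc[i][j])
    mean_within = sum(within) / len(within)
    mean_between = sum(between) / len(between)
    return (mean_within - mean_between) / mean_within

# Toy example (hypothetical): two ROIs per network.
labels = ["DMN", "DMN", "FPN", "FPN"]
fc = [
    [1.0, 0.8, 0.2, 0.2],
    [0.8, 1.0, 0.2, 0.2],
    [0.2, 0.2, 1.0, 0.6],
    [0.2, 0.2, 0.6, 1.0],
]
dmn_segregation = system_segregation(fc, labels, "DMN")
fpn_segregation = system_segregation(fc, labels, "FPN")
```

Computing such a score per participant and regressing it on age is one way a trend of increasing or decreasing segregation could be examined.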

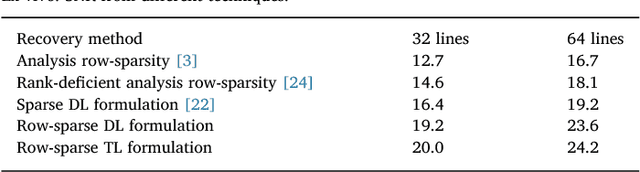

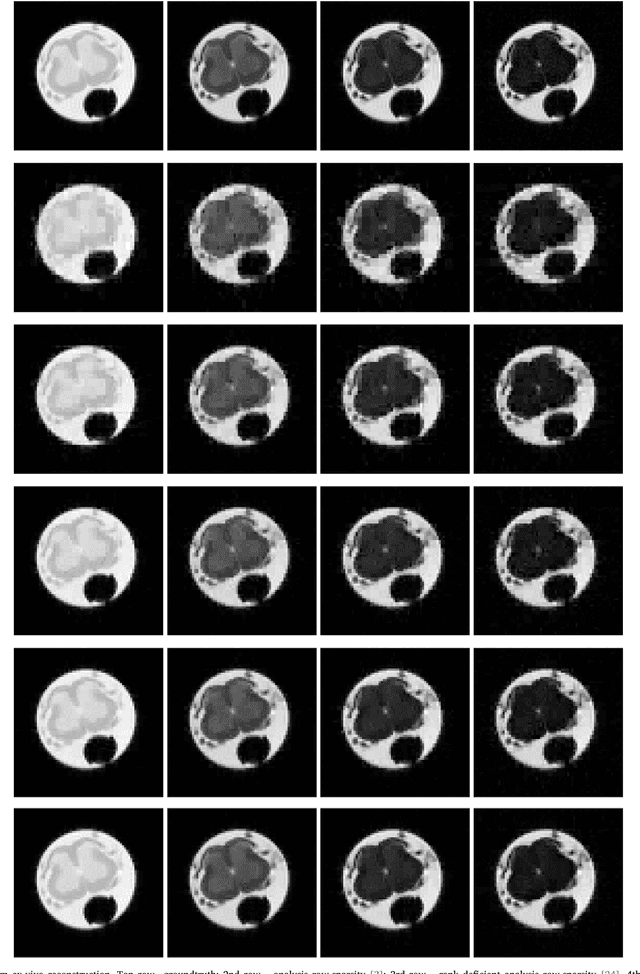

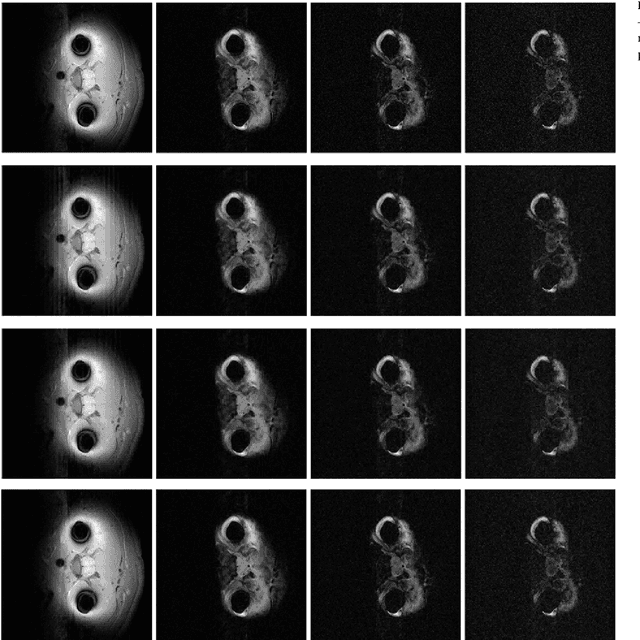

Multi-echo Reconstruction from Partial K-space Scans via Adaptively Learnt Basis

Dec 11, 2019

Abstract: In multi-echo imaging, multiple T1/T2 weighted images of the same cross section are acquired. Acquiring multiple scans is time consuming. In order to accelerate acquisition, compressed sensing based techniques have been proposed. In recent times, it has been observed in several areas of traditional compressed sensing that, instead of using a fixed basis (wavelet, DCT, etc.), considerably better results can be achieved by learning the basis adaptively from the data. Motivated by these studies, we propose to employ such adaptive learning techniques to improve reconstruction of multi-echo scans. This work is based on two basis learning models: synthesis (better known as dictionary learning) and analysis (known as transform learning). We modify these basic methods by incorporating the structure of the multi-echo scans. Our work shows that we can indeed significantly improve multi-echo imaging over compressed sensing based techniques and other unstructured adaptive sparse recovery methods.