Prasad Vagdargi

Enhancing Context Through Contrast

Jan 06, 2024

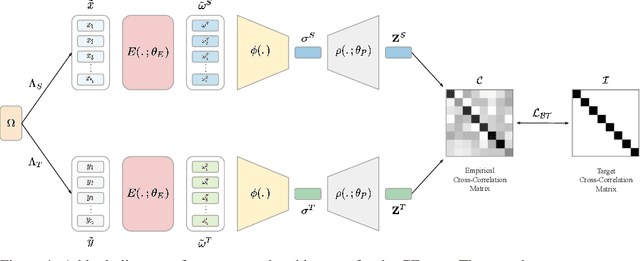

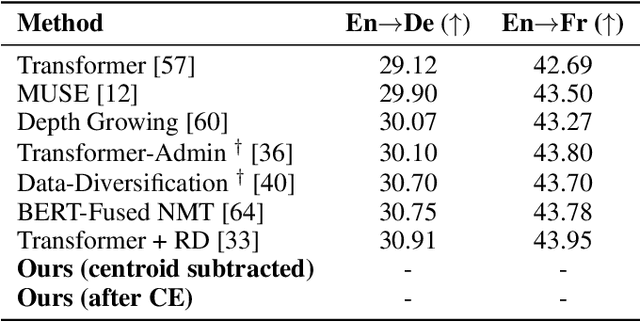

Abstract: Neural machine translation benefits from semantically rich representations. Considerable progress in learning such representations has been achieved by language modelling and mutual information maximization objectives using contrastive learning. The language-dependent nature of language modelling introduces a trade-off between the universality of the learned representations and the model's performance on the language modelling tasks. Although contrastive learning improves performance, its success cannot be attributed to mutual information alone. We propose a novel Context Enhancement step to improve performance on neural machine translation by maximizing mutual information using the Barlow Twins loss. Unlike other approaches, we do not explicitly augment the data but view languages as implicit augmentations, eliminating the risk of disrupting semantic information. Further, our method does not learn embeddings from scratch and can be generalised to any set of pre-trained embeddings. Finally, we evaluate the language-agnosticism of our embeddings through language classification and use them for neural machine translation to compare with state-of-the-art approaches.
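As a rough illustration of the Barlow Twins objective named in the abstract, the sketch below computes the loss for two batches of embeddings. It assumes the two "views" are embeddings of the same sentences in two different languages, which is one reading of "languages as implicit augmentations"; the function name, the `lambda_offdiag` weight, and the use of NumPy are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

def barlow_twins_loss(z_a, z_b, lambda_offdiag=5e-3, eps=1e-12):
    """Barlow Twins loss for two (batch, dim) embedding matrices.

    z_a, z_b: embeddings of the same items under two views
    (assumed here: the same sentences in two languages).
    lambda_offdiag: hypothetical weight on the redundancy-reduction term.
    """
    n = z_a.shape[0]
    # Standardize each feature over the batch dimension.
    z_a = (z_a - z_a.mean(axis=0)) / (z_a.std(axis=0) + eps)
    z_b = (z_b - z_b.mean(axis=0)) / (z_b.std(axis=0) + eps)
    # Cross-correlation matrix between the two views, shape (dim, dim).
    c = z_a.T @ z_b / n
    diag = np.diag(c)
    # Invariance term: push diagonal entries towards 1.
    on_diag = np.sum((1.0 - diag) ** 2)
    # Redundancy-reduction term: push off-diagonal entries towards 0.
    off_diag = np.sum(c ** 2) - np.sum(diag ** 2)
    return on_diag + lambda_offdiag * off_diag
```

Identical views give a loss near zero, while unrelated views are penalized mainly through the diagonal term.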

Using Uncertainty in Deep Learning Reconstruction for Cone-Beam CT of the Brain

Aug 20, 2021

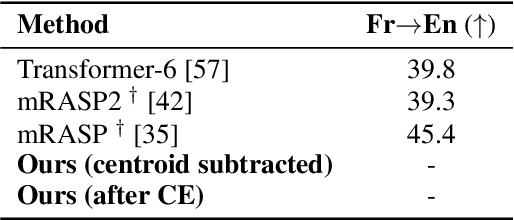

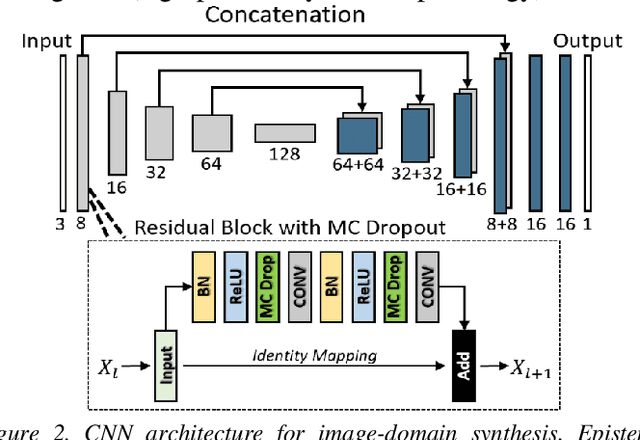

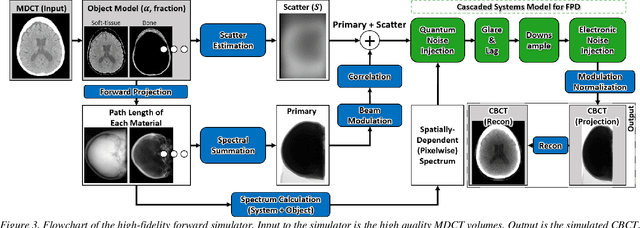

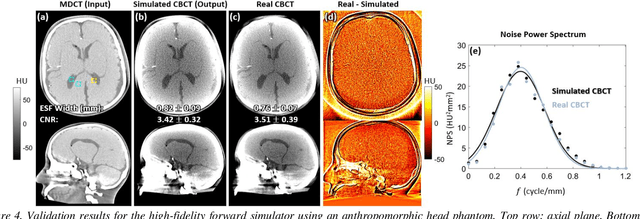

Abstract: Contrast resolution beyond the limits of conventional cone-beam CT (CBCT) systems is essential to high-quality imaging of the brain. We present a deep learning reconstruction method (dubbed DL-Recon) that integrates physically principled reconstruction models with DL-based image synthesis based on the statistical uncertainty in the synthesis image. A synthesis network was developed to generate a synthesized CBCT image (DL-Synthesis) from an uncorrected filtered back-projection (FBP) image. To improve generalizability (including accurate representation of lesions not seen in training), voxel-wise epistemic uncertainty of DL-Synthesis was computed using a Bayesian inference technique (Monte-Carlo dropout). In regions of high uncertainty, the DL-Recon method incorporates information from a physics-based reconstruction model and artifact-corrected projection data. Two forms of the DL-Recon method are proposed: (i) image-domain fusion of DL-Synthesis and FBP (DL-FBP) weighted by DL uncertainty; and (ii) a model-based iterative image reconstruction (MBIR) optimization using DL-Synthesis to compute a spatially varying regularization term based on DL uncertainty (DL-MBIR). The error in DL-Synthesis images was correlated with the uncertainty in the synthesis estimate. Compared to FBP and penalized weighted least squares (PWLS), the DL-Recon methods (both DL-FBP and DL-MBIR) showed ~50% reduction in noise (at matched spatial resolution) and ~40-70% improvement in image uniformity. Conventional DL-Synthesis alone exhibited ~10-60% under-estimation of lesion contrast and ~5-40% reduction in lesion segmentation accuracy (Dice coefficient) in simulated and real brain lesions, suggesting a lack of reliability and generalizability for structures unseen in the training data. DL-FBP and DL-MBIR improved the accuracy of reconstruction by directly incorporating information from the measurements in regions of high uncertainty.
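The image-domain fusion idea (DL-FBP) can be sketched as follows, under stated assumptions. The abstract does not give the weighting function, so the linear, clipped mapping from uncertainty to weight, the `sigma_max` parameter, and the function name are all hypothetical. The Monte-Carlo dropout samples are assumed to be precomputed by running the synthesis network several times with dropout active.

```python
import numpy as np

def fuse_by_uncertainty(mc_samples, fbp, sigma_max):
    """Blend a synthesized image with an FBP image, voxel by voxel.

    mc_samples: array of shape (T, ...) holding T stochastic forward
        passes of a synthesis network (Monte-Carlo dropout samples).
    fbp: FBP image with the same shape as one sample.
    sigma_max: hypothetical uncertainty level at which the output
        relies entirely on FBP.
    """
    # Mean over passes is the synthesis estimate; the standard
    # deviation serves as a voxel-wise uncertainty estimate.
    synthesis = mc_samples.mean(axis=0)
    uncertainty = mc_samples.std(axis=0)
    # Assumed weighting: linear in uncertainty, clipped to [0, 1].
    w = np.clip(uncertainty / sigma_max, 0.0, 1.0)
    # High uncertainty favors the measurement-driven FBP image.
    fused = (1.0 - w) * synthesis + w * fbp
    return fused, uncertainty
```

Where all passes agree, the fused image equals the synthesis; where they disagree by `sigma_max` or more, it falls back to FBP.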

A Mosquito Pick-and-Place System for PfSPZ-based Malaria Vaccine Production

Apr 12, 2020

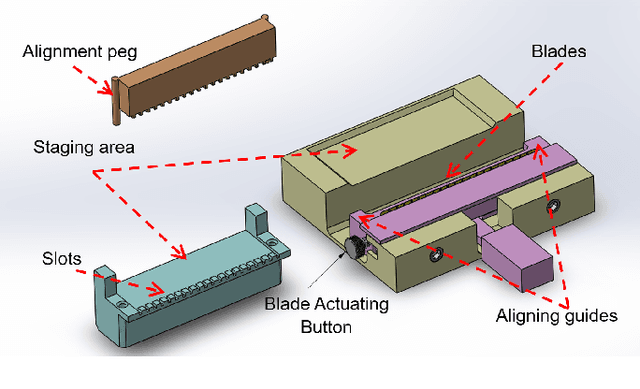

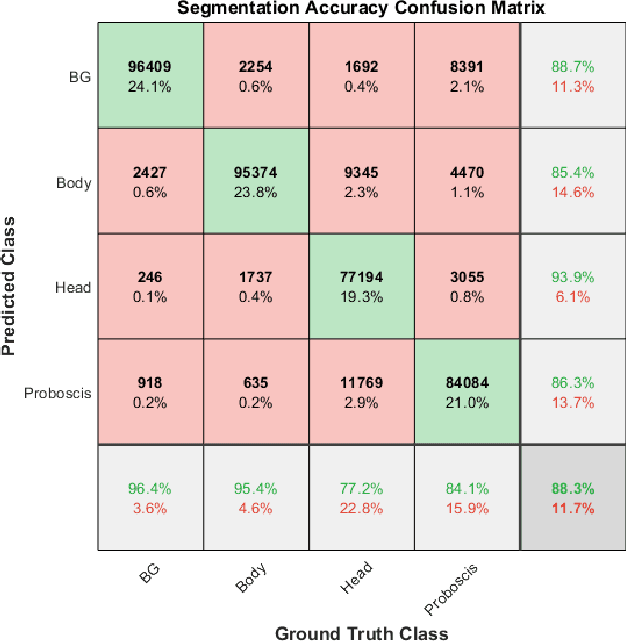

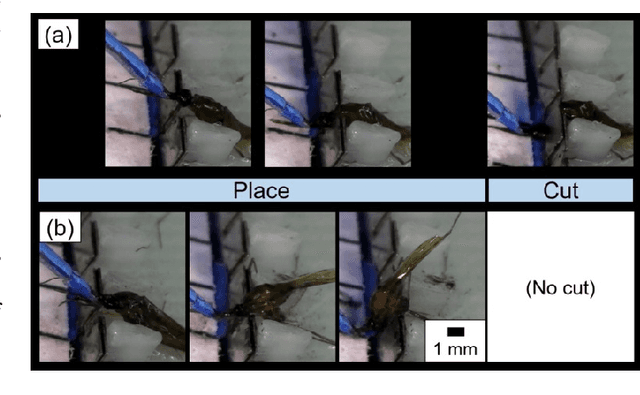

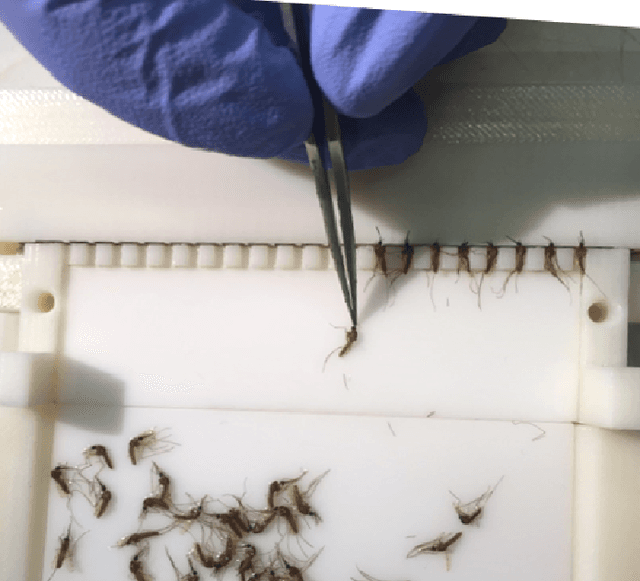

Abstract: The treatment of malaria is a global health challenge that stands to benefit from the widespread introduction of a vaccine for the disease. A method has been developed to create a live organism vaccine using the sporozoites (SPZ) of the parasite Plasmodium falciparum (Pf), which are concentrated in the salivary glands of infected mosquitoes. Current manual dissection methods to obtain these PfSPZ are not optimally efficient for large-scale vaccine production. We propose an improved dissection procedure and a mechanical fixture that increases the rate of mosquito dissection and helps to deskill this stage of the production process. We further demonstrate the automation of a key step in this production process, the picking and placing of mosquitoes from a staging apparatus into a dissection assembly. This unit test of a robotic mosquito pick-and-place system is performed using a custom-designed micro-gripper attached to a four-degree-of-freedom (4-DOF) robot under the guidance of a computer vision system. Mosquitoes are autonomously grasped and pulled to a pair of notched dissection blades to remove the head of the mosquito, allowing access to the salivary glands. Placement into these blades is adapted based on output from computer vision to accommodate the unique anatomy and orientation of each grasped mosquito. In this pilot test of the system on 50 mosquitoes, we demonstrate 100% grasping accuracy and 90% accuracy in placing the mosquito with its neck within the blade notches such that the head can be removed. This is a promising result for this difficult and non-standard pick-and-place task.
