Peter Grönquist

Beyond Calibration: Physically Informed Learning for Raw-to-Raw Mapping

Jun 11, 2025

Abstract:Achieving consistent color reproduction across multiple cameras is essential for seamless image fusion and Image Processing Pipeline (ISP) compatibility in modern devices, but it is a challenging task due to variations in sensors and optics. Existing raw-to-raw conversion methods face limitations such as poor adaptability to changing illumination, high computational costs, or impractical requirements such as simultaneous camera operation and overlapping fields-of-view. We introduce the Neural Physical Model (NPM), a lightweight, physically-informed approach that simulates raw images under specified illumination to estimate transformations between devices. The NPM effectively adapts to varying illumination conditions, can be initialized with physical measurements, and supports training with or without paired data. Experiments on public datasets like NUS and BeyondRGB demonstrate that NPM outperforms recent state-of-the-art methods, providing robust chromatic consistency across different sensors and optical systems.

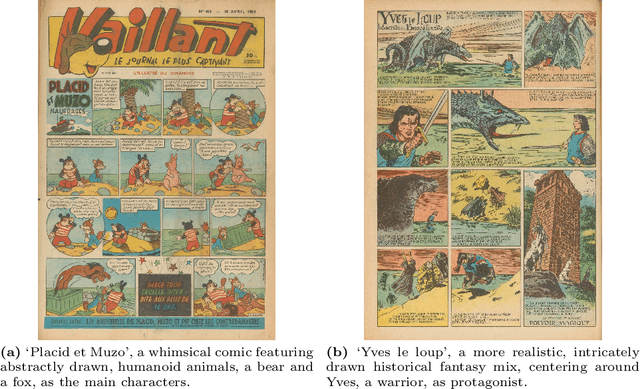

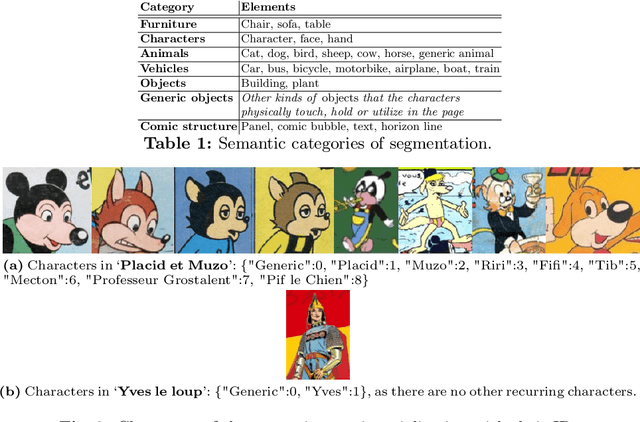

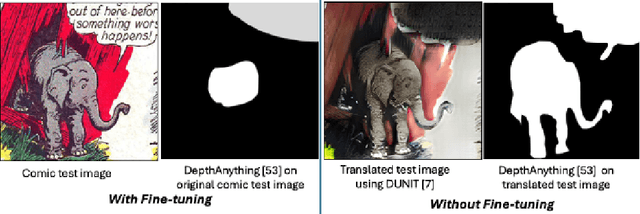

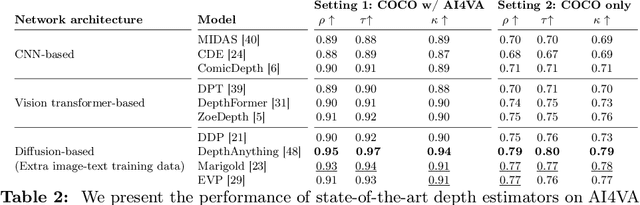

Unlocking Comics: The AI4VA Dataset for Visual Understanding

Oct 27, 2024

Abstract:In the evolving landscape of deep learning, there is a pressing need for more comprehensive datasets capable of training models across multiple modalities. Concurrently, in digital humanities, there is a growing demand to leverage technology for diverse media adaptation and creation, yet progress is limited by sparse datasets due to copyright and stylistic constraints. Addressing this gap, our paper presents a novel dataset comprising Franco-Belgian comics from the 1950s annotated for tasks including depth estimation, semantic segmentation, saliency detection, and character identification. It consists of two distinct and consistent styles and incorporates object concepts and labels taken from natural images. By including such diverse information across styles, this dataset not only holds promise for computational creativity but also offers avenues for the digitization of art and storytelling innovation. This dataset is a crucial component of the AI4VA Workshop Challenges (https://sites.google.com/view/ai4vaeccv2024), where we specifically explore depth and saliency. Dataset details are available at https://github.com/IVRL/AI4VA.

Efficient Temporally-Aware DeepFake Detection using H.264 Motion Vectors

Nov 17, 2023

Abstract:Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person's expression or identity. Most current DeepFake detection methods analyze each frame independently, ignoring inconsistencies and unnatural movements between frames. Some newer methods employ optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec, to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and has minimal computational costs, compared with per-frame RGB-only methods. This could lead to new, real-time temporally-aware DeepFake detection methods for video calls and streaming.

Deep Learning for Post-Processing Ensemble Weather Forecasts

May 18, 2020

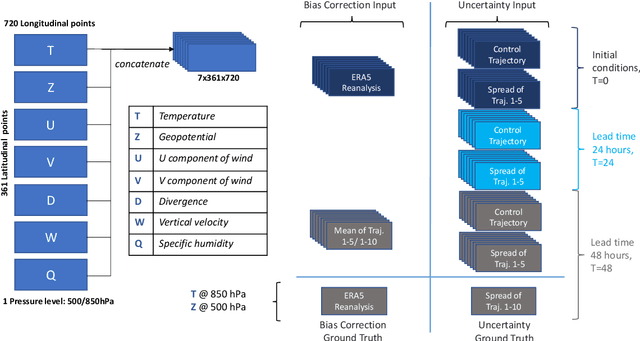

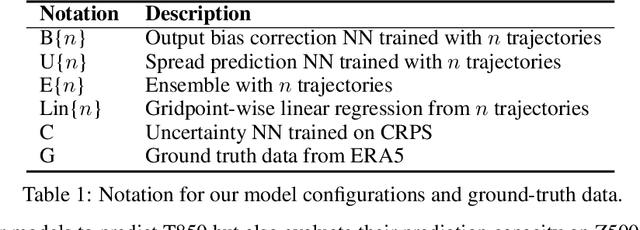

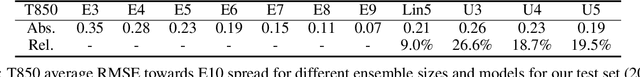

Abstract:Quantifying uncertainty in weather forecasts typically employs ensemble prediction systems, which consist of many perturbed trajectories run in parallel. These systems are associated with a high computational cost and often include statistical post-processing steps to inexpensively improve their raw prediction qualities. We propose a mixed prediction and post-processing model based on a subset of the original trajectories. In the model, we implement methods from deep learning to account for non-linear relationships that are not captured by current numerical models or other post-processing methods. Applied to global data, our mixed models achieve a relative improvement of the ensemble forecast skill of over 13%. We demonstrate that this is especially the case for extreme weather events on selected case studies, where we see an improvement in predictions by up to 26%. In addition, by using only half the trajectories, the computational costs of ensemble prediction systems can potentially be reduced, allowing weather forecasting pipelines to run higher resolution trajectories, and resulting in even more accurate raw ensemble forecasts.

Predicting Weather Uncertainty with Deep Convnets

Dec 04, 2019

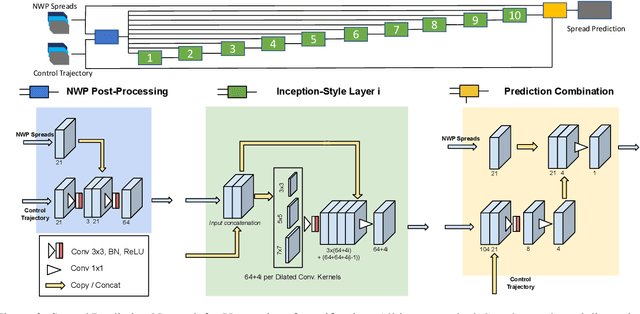

Abstract:Modern weather forecast models perform uncertainty quantification using ensemble prediction systems, which collect nonparametric statistics based on multiple perturbed simulations. To provide accurate estimation, dozens of such computationally intensive simulations must be run. We show that deep neural networks can be used on a small set of numerical weather simulations to estimate the spread of a weather forecast, significantly reducing computational cost. To train the system, we both modify the 3D U-Net architecture and explore models that incorporate temporal data. Our models serve as a starting point to improve uncertainty quantification in current real-time weather forecasting systems, which is vital for predicting extreme events.
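For orientation, the quantity being estimated can be sketched in a few lines. This is not the paper's network: it is only a minimal illustration of ensemble spread, assuming spread is taken as the sample standard deviation across members at each grid point (a common convention, not confirmed by the abstract). The function name `ensemble_spread` and the toy values are hypothetical.

```python
import statistics


def ensemble_spread(members):
    """Per-grid-point spread across ensemble members.

    Assumption: spread = sample standard deviation across members.
    members: list of equally long lists, one per perturbed simulation,
    each holding the forecast value at every grid point.
    """
    if len(members) < 2:
        raise ValueError("need at least two ensemble members")
    n_points = len(members[0])
    if any(len(m) != n_points for m in members):
        raise ValueError("all members must cover the same grid points")
    # zip(*members) regroups the values so that each tuple holds one
    # grid point as seen by every member.
    return [statistics.stdev(point) for point in zip(*members)]


# Toy example: three members, two grid points (values are made up).
toy_members = [
    [280.0, 290.0],
    [282.0, 290.0],
    [284.0, 290.0],
]
spread = ensemble_spread(toy_members)
```

In this toy case the members disagree at the first grid point and agree exactly at the second, so the spread is non-zero only at the first. Computing this directly requires every member to be simulated, which is the cost the abstract proposes to reduce by predicting the spread from a small set of simulations.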
