Peter Du

Isaac Lab: A GPU-Accelerated Simulation Framework for Multi-Modal Robot Learning

Nov 06, 2025

Abstract: We present Isaac Lab, the natural successor to Isaac Gym, which extends the paradigm of GPU-native robotics simulation into the era of large-scale multi-modal learning. Isaac Lab combines high-fidelity GPU parallel physics, photorealistic rendering, and a modular, composable architecture for designing environments and training robot policies. Beyond physics and rendering, the framework integrates actuator models, multi-frequency sensor simulation, data collection pipelines, and domain randomization tools, unifying best practices for reinforcement and imitation learning at scale within a single extensible platform. We highlight its application to a diverse set of challenges, including whole-body control, cross-embodiment mobility, contact-rich and dexterous manipulation, and the integration of human demonstrations for skill acquisition. Finally, we discuss upcoming integration with the differentiable, GPU-accelerated Newton physics engine, which promises new opportunities for scalable, data-efficient, and gradient-based approaches to robot learning. We believe Isaac Lab's combination of advanced simulation capabilities, rich sensing, and data-center scale execution will help unlock the next generation of breakthroughs in robotics research.

W-RIZZ: A Weakly-Supervised Framework for Relative Traversability Estimation in Mobile Robotics

Jun 04, 2024

Abstract: Successful deployment of mobile robots in unstructured domains requires an understanding of the environment and terrain to avoid hazardous areas, getting stuck, and colliding with obstacles. Traversability estimation--which predicts where in the environment a robot can travel--is one prominent approach that tackles this problem. Existing geometric methods may ignore important semantic considerations, while semantic segmentation approaches involve a tedious labeling process. Recent self-supervised methods reduce labeling tedium, but require additional data or models and tend to struggle to explicitly label untraversable areas. To address these limitations, we introduce a weakly-supervised method for relative traversability estimation. Our method involves manually annotating the relative traversability of a small number of point pairs, which significantly reduces labeling effort compared to traditional segmentation-based methods and avoids the limitations of self-supervised methods. We further improve the performance of our method through a novel cross-image labeling strategy and loss function. We demonstrate the viability and performance of our method through deployment on a mobile robot in outdoor environments.

Conveying Autonomous Robot Capabilities through Contrasting Behaviour Summaries

Apr 01, 2023

Abstract: As advances in artificial intelligence enable increasingly capable learning-based autonomous agents, it becomes more challenging for human observers to efficiently construct a mental model of the agent's behaviour. In order to successfully deploy autonomous agents, humans should not only be able to understand the individual limitations of the agents but also have insight into how they compare against one another. To do so, we need effective methods for generating human-interpretable agent behaviour summaries. Single-agent behaviour summarization has been tackled in the past through methods that generate explanations for why an agent chose a particular action at a single timestep. However, for complex tasks, a per-action explanation may not be able to convey an agent's global strategy. As a result, researchers have looked towards multi-timestep summaries, which can better help humans assess an agent's overall capability. More recently, multi-step summaries have also been used for generating contrasting examples to evaluate multiple agents. However, past approaches have largely relied on unstructured search methods to generate summaries and require agents to have a discrete action space. In this paper we present an adaptive search method for efficiently generating contrasting behaviour summaries with support for continuous state and action spaces. We perform a user study to evaluate the effectiveness of the summaries for helping humans discern the superior autonomous agent for a given task. Our results indicate that adaptive search can efficiently identify informative contrasting scenarios that enable humans to accurately select the better performing agent with a limited observation time budget.

Adaptive Failure Search Using Critical States from Domain Experts

Apr 01, 2023

Abstract: Uncovering potential failure cases is a crucial step in the validation of safety-critical systems such as autonomous vehicles. Failure search may be done through logging substantial vehicle miles in either simulation or real-world testing. Due to the sparsity of failure events, naive random search approaches require significant amounts of vehicle operation hours to find potential system weaknesses. As a result, adaptive searching techniques have been proposed to efficiently explore and uncover failure trajectories of an autonomous policy in simulation. Adaptive Stress Testing (AST) is one such method that poses the problem of failure search as a Markov decision process and uses reinforcement learning techniques to find high-probability failures. However, this formulation requires a probability model for the actions of all agents in the environment. In systems where the environment actions are discrete and dependencies among agents exist, it may be infeasible to fully characterize the distribution or find a suitable proxy. This work proposes a data-driven approach that learns a classifier to model how humans identify critical states and uses it to guide failure search in AST. We show that the incorporation of critical states into the AST framework generates failure scenarios with increased safety violations in an autonomous driving policy with a discrete action space.

CoCAtt: A Cognitive-Conditioned Driver Attention Dataset (Supplementary Material)

Jul 08, 2022

Abstract: The task of driver attention prediction has drawn considerable interest among researchers in robotics and the autonomous vehicle industry. Driver attention prediction can play an instrumental role in mitigating and preventing high-risk events, like collisions and casualties. However, existing driver attention prediction models neglect the distraction state and intention of the driver, which can significantly influence how they observe their surroundings. To address these issues, we present a new driver attention dataset, CoCAtt (Cognitive-Conditioned Attention). Unlike previous driver attention datasets, CoCAtt includes per-frame annotations that describe the distraction state and intention of the driver. In addition, the attention data in our dataset is captured in both manual and autopilot modes using eye-tracking devices of different resolutions. Our results demonstrate that incorporating the above two driver states into attention modeling can improve the performance of driver attention prediction. To the best of our knowledge, this work is the first to provide autopilot attention data. Furthermore, CoCAtt is currently the largest and the most diverse driver attention dataset in terms of autonomy levels, eye tracker resolutions, and driving scenarios. CoCAtt is available for download at https://cocatt-dataset.github.io.

CoCAtt: A Cognitive-Conditioned Driver Attention Dataset

Nov 23, 2021

Abstract: The task of driver attention prediction has drawn considerable interest among researchers in robotics and the autonomous vehicle industry. Driver attention prediction can play an instrumental role in mitigating and preventing high-risk events, like collisions and casualties. However, existing driver attention prediction models neglect the distraction state and intention of the driver, which can significantly influence how they observe their surroundings. To address these issues, we present a new driver attention dataset, CoCAtt (Cognitive-Conditioned Attention). Unlike previous driver attention datasets, CoCAtt includes per-frame annotations that describe the distraction state and intention of the driver. In addition, the attention data in our dataset is captured in both manual and autopilot modes using eye-tracking devices of different resolutions. Our results demonstrate that incorporating the above two driver states into attention modeling can improve the performance of driver attention prediction. To the best of our knowledge, this work is the first to provide autopilot attention data. Furthermore, CoCAtt is currently the largest and the most diverse driver attention dataset in terms of autonomy levels, eye tracker resolutions, and driving scenarios.

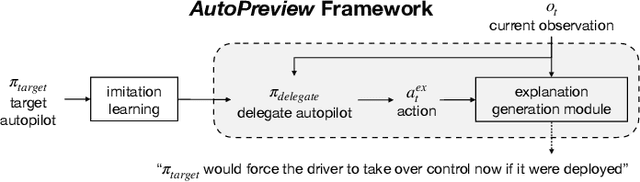

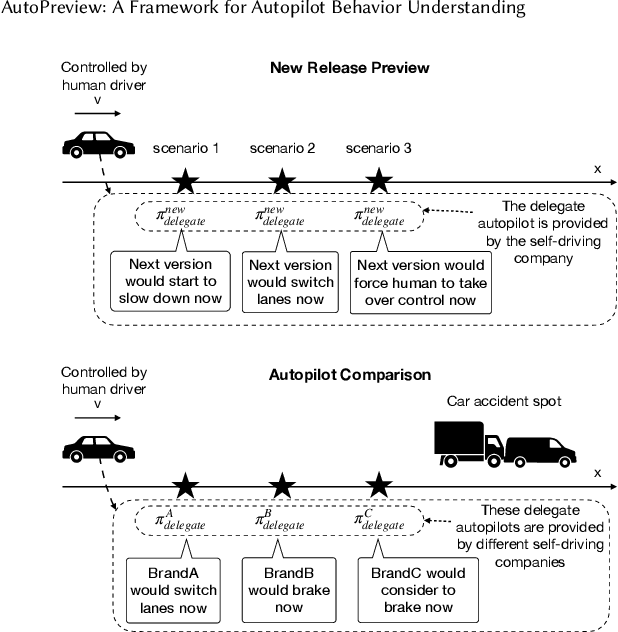

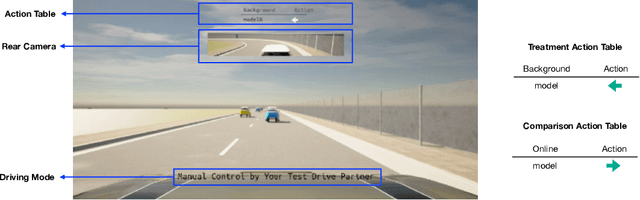

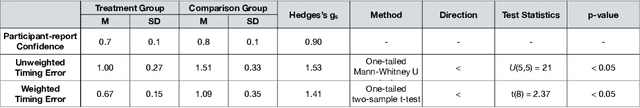

AutoPreview: A Framework for Autopilot Behavior Understanding

Feb 25, 2021

Abstract: The behavior of self-driving cars may differ from people's expectations (e.g., an autopilot may unexpectedly relinquish control). This expectation mismatch can cause potential and existing users to distrust self-driving technology and can increase the likelihood of accidents. We propose a simple but effective framework, AutoPreview, to enable consumers to preview a target autopilot's potential actions in a real-world driving context before deployment. For a given target autopilot, we design a delegate policy that replicates the target autopilot's behavior with explainable action representations, which can then be queried online for comparison and to build an accurate mental model. To demonstrate its practicality, we present a prototype of AutoPreview integrated with the CARLA simulator along with two potential use cases of the framework. We conduct a pilot study to investigate whether AutoPreview provides a deeper understanding of autopilot behavior when users experience a new autopilot policy for the first time. Our results suggest that the AutoPreview method helps users understand autopilot behavior in terms of driving style comprehension, deployment preference, and exact action timing prediction.

* 7 pages, 5 figures, CHI 2021 Late-Breaking Work

Online monitoring for safe pedestrian-vehicle interactions

Oct 12, 2019

Abstract: As autonomous systems begin to operate amongst humans, methods for safe interaction must be investigated. We consider an example of a small autonomous vehicle in a pedestrian zone that must safely maneuver around people in a free-form fashion. We investigate two key questions: How can we effectively integrate pedestrian intent estimation into our autonomous stack? Can we develop an online monitoring framework to give formal guarantees on the safety of such human-robot interactions? We present a pedestrian intent estimation framework that can accurately predict future pedestrian trajectories given multiple possible goal locations. We integrate this into a reachability-based online monitoring scheme that formally assesses the safety of these interactions with nearly real-time performance (approximately 0.3 seconds). These techniques are integrated on a test vehicle with a complete in-house autonomous stack, demonstrating effective and safe interaction in real-world experiments.

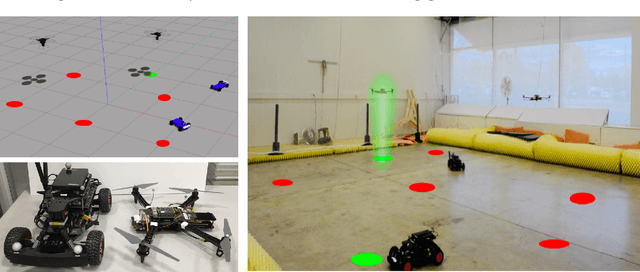

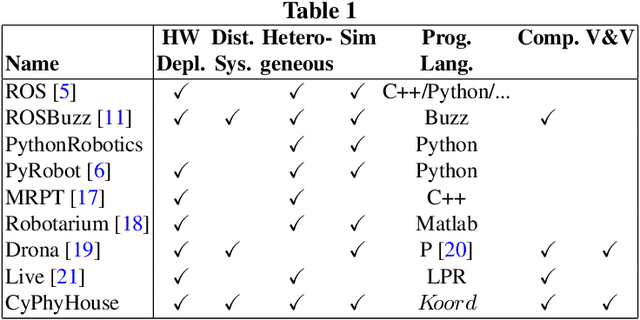

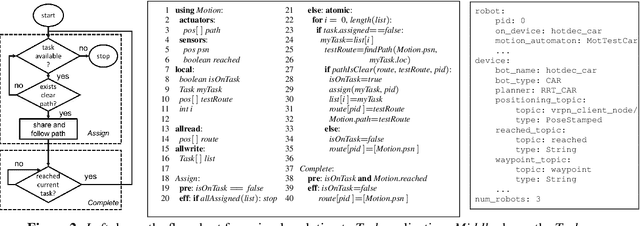

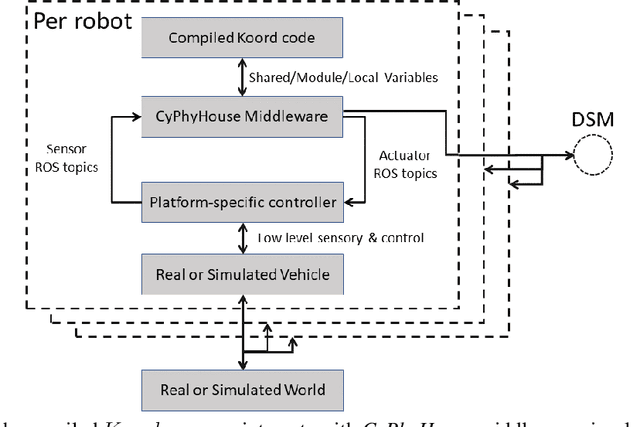

CyPhyHouse: A Programming, Simulation, and Deployment Toolchain for Heterogeneous Distributed Coordination

Oct 10, 2019

Abstract: Programming languages, libraries, and development tools have transformed the application development processes for mobile computing and machine learning. This paper introduces CyPhyHouse, a toolchain that aims to provide similar programming, debugging, and deployment benefits for distributed mobile robotic applications. Users can develop hardware-agnostic, distributed applications using the high-level, event-driven Koord programming language, without requiring expertise in controller design or distributed network protocols. The modular, platform-independent middleware of CyPhyHouse implements these functionalities using standard algorithms for path planning (RRT), control (MPC), mutual exclusion, etc. A high-fidelity, scalable, multi-threaded simulator for Koord applications is developed to simulate the same application code for dozens of heterogeneous agents. The same compiled code can also be deployed on heterogeneous mobile platforms. The effectiveness of CyPhyHouse in improving the design cycles is explicitly illustrated in a robotic testbed through development, simulation, and deployment of a distributed task allocation application on in-house ground and aerial vehicles.

Adaptive Stress Testing with Reward Augmentation for Autonomous Vehicle Validation

Aug 06, 2019

Abstract: Determining possible failure scenarios is a critical step in the evaluation of autonomous vehicle systems. Real-world vehicle testing is commonly employed for autonomous vehicle validation, but the costs and time requirements are high. Consequently, simulation-driven methods such as Adaptive Stress Testing (AST) have been proposed to aid in validation. AST formulates the problem of finding the most likely failure scenarios as a Markov decision process, which can be solved using reinforcement learning. In practice, AST tends to find scenarios where failure is unavoidable and tends to repeatedly discover the same types of failures of a system. This work addresses these issues by encoding domain-relevant information into the search procedure. With this modification, the AST method discovers a larger and more expressive subset of the failure space when compared to the original AST formulation. We show that our approach is able to identify useful failure scenarios of an autonomous vehicle policy.
