Shanduojiao Jiang

AutoPreview: A Framework for Autopilot Behavior Understanding

Feb 25, 2021

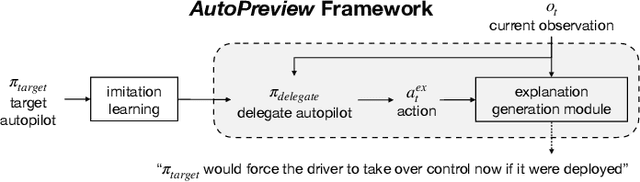

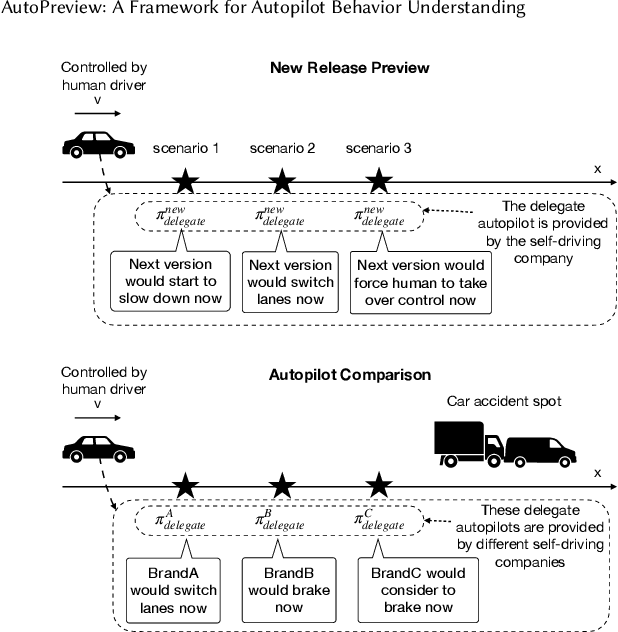

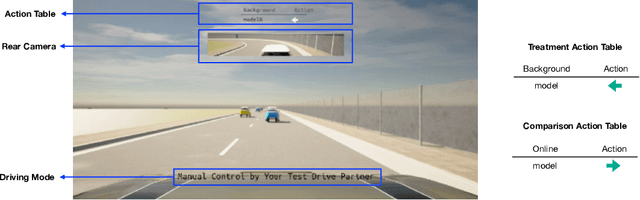

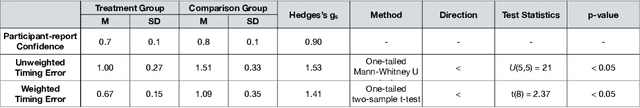

Abstract: The behavior of self-driving cars may differ from people's expectations (e.g., an autopilot may unexpectedly relinquish control). This expectation mismatch can cause potential and existing users to distrust self-driving technology and can increase the likelihood of accidents. We propose a simple but effective framework, AutoPreview, to enable consumers to preview a target autopilot's potential actions in a real-world driving context before deployment. For a given target autopilot, we design a delegate policy that replicates the target autopilot's behavior with explainable action representations, which can then be queried online for comparison and to build an accurate mental model. To demonstrate its practicality, we present a prototype of AutoPreview integrated with the CARLA simulator, along with two potential use cases of the framework. We conduct a pilot study to investigate whether AutoPreview provides a deeper understanding of autopilot behavior when users experience a new autopilot policy for the first time. Our results suggest that the AutoPreview method helps users understand autopilot behavior in terms of driving style comprehension, deployment preference, and exact action timing prediction.

* 7 pages, 5 figures, CHI 2021 Late-Breaking Work

To Explain or Not to Explain: A Study on the Necessity of Explanations for Autonomous Vehicles

Jun 21, 2020

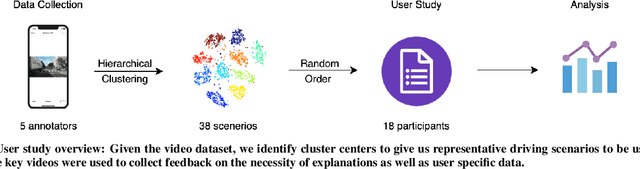

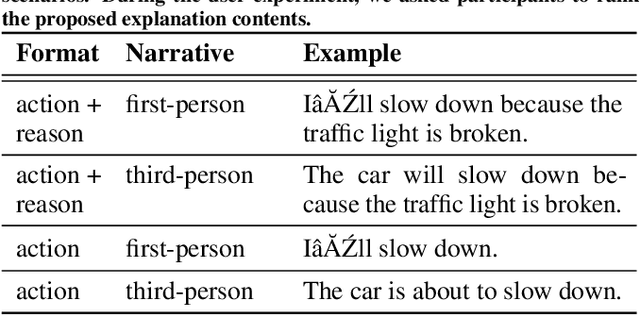

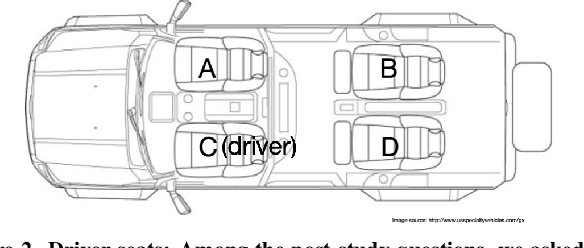

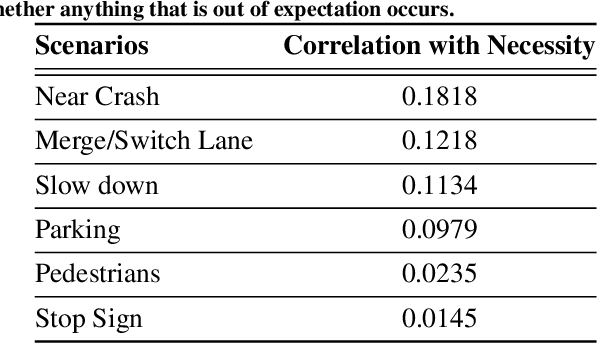

Abstract: Explainable AI in the context of autonomous systems, such as self-driving cars, has drawn broad interest from researchers. Recent studies have found that providing explanations for an autonomous vehicle's actions has many benefits (e.g., increased trust and acceptance), but they put little emphasis on when an explanation is needed and how the content of an explanation changes with context. In this work, we investigate in which scenarios people need explanations and how the critical degree of explanation shifts with situations and driver types. Through a user experiment, we ask participants to evaluate how necessary an explanation is and measure the impact on their trust in self-driving cars in different contexts. We also present a self-driving explanation dataset with first-person explanations and an associated measure of necessity for 1103 video clips, augmenting the Berkeley Deep Drive Attention dataset. Additionally, we propose a learning-based model that predicts, in real time, how necessary an explanation is for a given situation, using camera data as input. Our research reveals that driver types and context dictate whether an explanation is necessary and what is helpful for improved interaction and understanding.

Can AI decrypt fashion jargon for you?

Mar 18, 2020

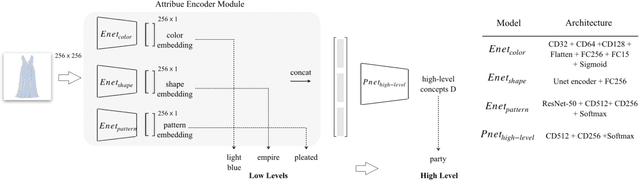

Abstract: When people talk about fashion, they care about the underlying meaning of fashion concepts, e.g., style. For example, people ask questions like "what features make this dress smart?" However, the product descriptions on today's fashion websites are full of domain-specific and low-level words. It is not clear to people how exactly those low-level descriptions contribute to a style or any high-level fashion concept. In this paper, we propose a data-driven solution to address this concept understanding issue by leveraging the large amount of existing product data on fashion sites. We first collected and categorized 1546 fashion keywords into 5 different fashion categories. Then, we collected a new fashion product dataset with 853,056 products in total. Finally, we trained a deep learning model that can explicitly predict and explain high-level fashion concepts in a product image using its low-level and domain-specific fashion features.
