To Explain or Not to Explain: A Study on the Necessity of Explanations for Autonomous Vehicles

Jun 21, 2020

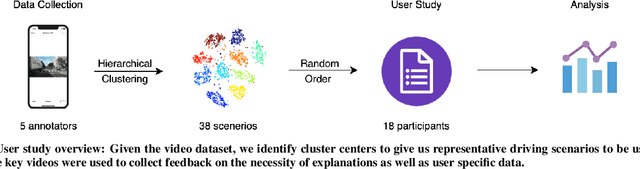

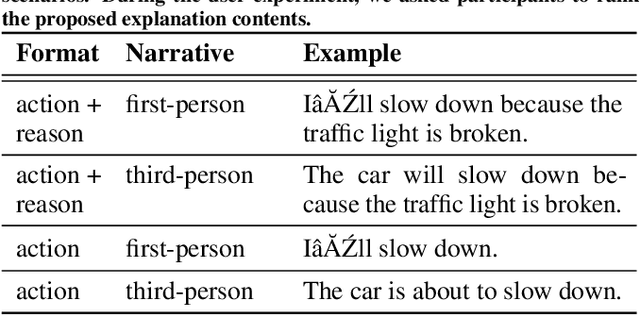

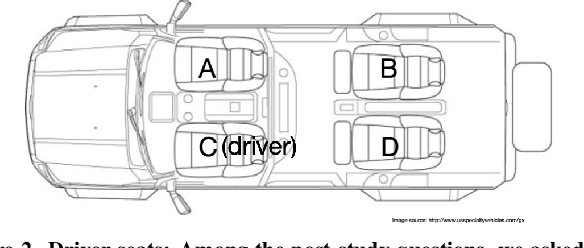

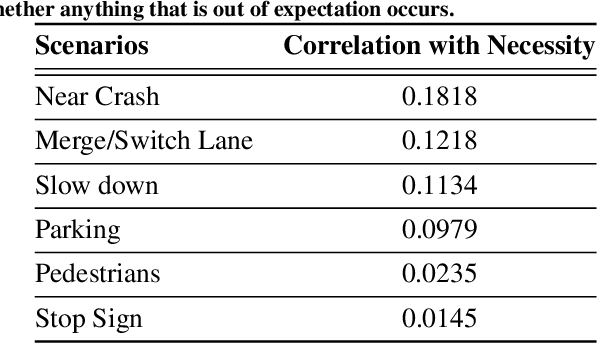

Explainable AI, in the context of autonomous systems such as self-driving cars, has drawn broad interest from researchers. Recent studies have found that providing explanations for an autonomous vehicle's actions has many benefits (e.g., increased trust and acceptance), but they put little emphasis on when an explanation is needed and how the content of the explanation changes with context. In this work, we investigate in which scenarios people need explanations and how the critical degree of explanation shifts with situations and driver types. Through a user experiment, we ask participants to evaluate how necessary an explanation is and measure the impact on their trust in self-driving cars in different contexts. We also present a self-driving explanation dataset with first-person explanations and associated measures of necessity for 1103 video clips, augmenting the Berkeley Deep Drive Attention dataset. Additionally, we propose a learning-based model that predicts, in real time, how necessary an explanation is for a given situation, using camera data as input. Our research reveals that driver types and context dictate whether or not an explanation is necessary and what is helpful for improved interaction and understanding.
