Peter Bruza

An Extension Of Combinatorial Contextuality For Cognitive Protocols

Feb 15, 2022

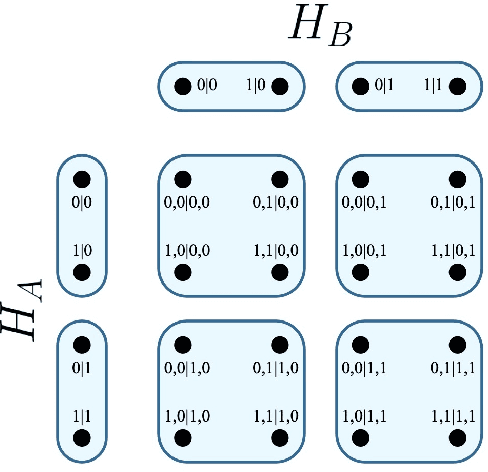

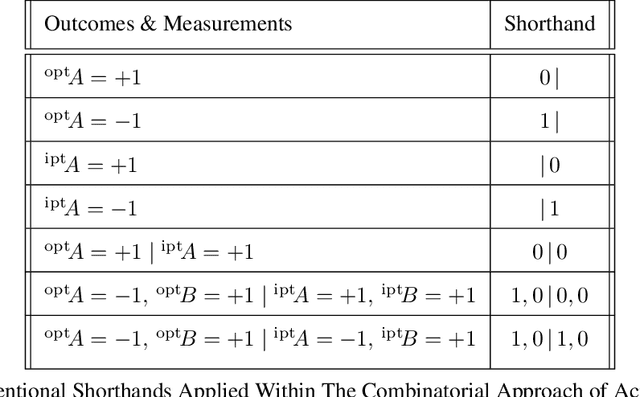

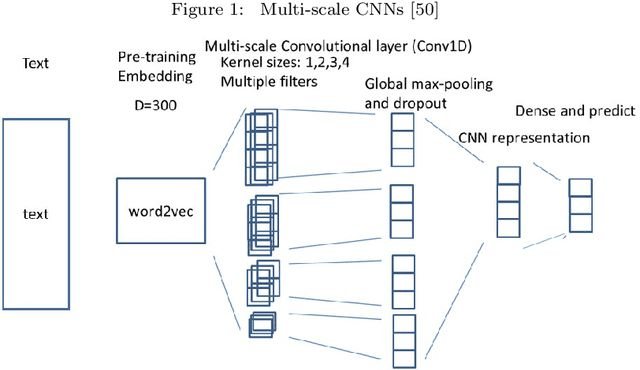

Abstract: This article extends the combinatorial approach to support the determination of contextuality amid causal influences. Contextuality is an active field of study in Quantum Cognition, including in systems relating to mental phenomena such as concepts in human memory [Aerts et al., 2013]. In cognitive research, a contemporary challenge in determining whether a phenomenon is contextual has been the identification and management of disturbances [Dzhafarov et al., 2016]. It matters whether such disturbances are identified by the modelling approach, and whether they constitute causal influences or can be disregarded as noise, because contextuality cannot be adequately determined in the presence of causal influences [Gleason, 1957]. To address this challenge, we first formalise the necessary elements of the combinatorial approach in the language of canonical causal models. Through this formalisation, we extend the combinatorial approach to support the measurement and treatment of disturbance, and offer techniques to distinguish noise from causal influences. We then develop a protocol through which these elements may be represented within a cognitive experiment. As human cognition seems rife with causal influences, cognitive modellers may apply the extended combinatorial approach to determine, in practice, whether cognitive phenomena are contextual.
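The abstract does not spell out the extended combinatorial formalism, so the sketch below swaps in a related, well-known way of accounting for disturbance: the Contextuality-by-Default criterion for cyclic systems of binary (±1) random variables, from the line of work of Dzhafarov and colleagues. It is not the article's method. Context i is assumed to measure properties q_i and q_{i+1} jointly; `correlations[i]` is the expected product in context i, and `marginals[i]` holds the expectation of q_i in the two contexts where it appears. The function names and input layout are hypothetical.

```python
from itertools import product
from math import prod, sqrt


def s_odd(values):
    """Max of sum(s_i * v_i) over sign vectors with an odd number of -1 entries."""
    best = float("-inf")
    for signs in product((1, -1), repeat=len(values)):
        if prod(signs) == -1:  # product is -1 exactly when the count of -1 is odd
            best = max(best, sum(s * v for s, v in zip(signs, values)))
    return best


def disturbance(marginals):
    """Total inconsistency of one-variable expectations across contexts.

    marginals[i] = (E[q_i] in one context, E[q_i] in the other context)
    """
    return sum(abs(a - b) for a, b in marginals)


def is_contextual_cyclic(correlations, marginals):
    """CbD criterion for a cyclic system of rank n with +/-1 variables.

    Contextual iff s_odd(correlations) - disturbance(marginals) > n - 2.
    """
    n = len(correlations)
    if n < 2 or len(marginals) != n:
        raise ValueError("need one correlation and one marginal pair per context")
    return s_odd(correlations) - disturbance(marginals) > n - 2


r = 1 / sqrt(2)
# CHSH-like correlations with no disturbance: contextual.
print(is_contextual_cyclic([r, r, r, -r], [(0.0, 0.0)] * 4))  # True
# Same correlations, but enough disturbance to explain them away.
print(is_contextual_cyclic([r, r, r, -r], [(0.4, 0.0)] * 4))  # False
```

The second call shows why disturbance has to be measured before contextuality is claimed: the same correlations stop counting as contextual once inconsistent marginals (here Δ = 1.6) are taken into account.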

Structural block driven - enhanced convolutional neural representation for relation extraction

Mar 21, 2021

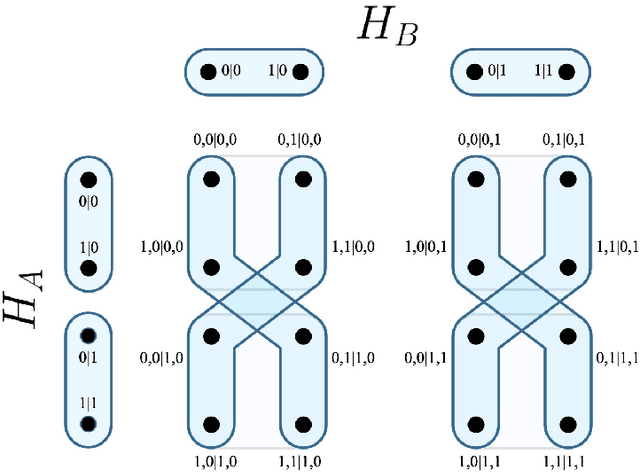

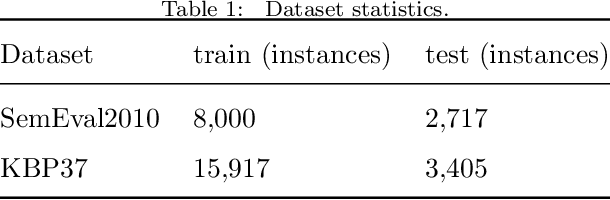

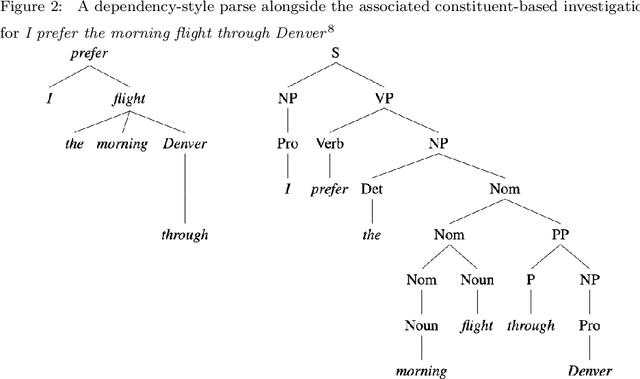

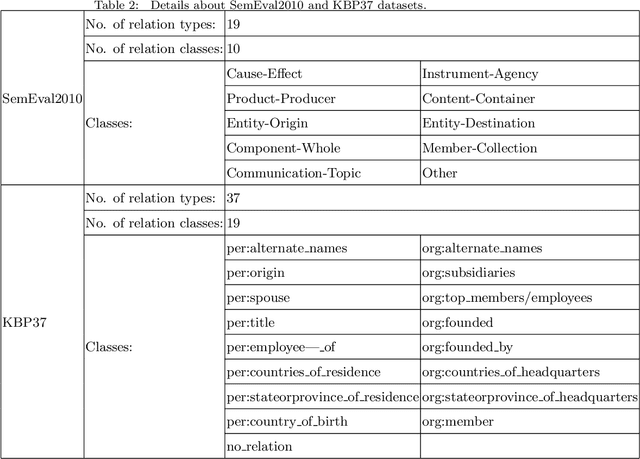

Abstract: In this paper, we propose a novel lightweight relation extraction approach based on structural-block-driven convolutional neural learning. Specifically, we use dependency analysis to detect the essential sequential tokens associated with the entities, which we call a structural block, and encode only this block, with both block-wise and inter-block-wise representations, using multi-scale CNNs. This is intended to 1) eliminate noise from irrelevant parts of a sentence, and 2) enrich the representation of the relevant block semantically at both the block-wise and inter-block-wise levels. Our method has the advantage of being independent of long sentence context, since we only encode the sequential tokens within a block boundary. Experiments on two datasets, SemEval2010 and KBP37, demonstrate the significant advantages of our method. In particular, we achieve new state-of-the-art performance on the KBP37 dataset and performance comparable with the state of the art on the SemEval2010 dataset.
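The abstract does not define exactly how a structural block is derived from the dependency analysis. A minimal sketch, assuming the block is the contiguous token span covering the shortest dependency path between the two entity tokens; the parse is given as hypothetical head indices, and the CNN encoding step is not shown.

```python
from collections import deque


def dependency_path(heads, start, end):
    """Token indices on the shortest path between two tokens of a dependency tree.

    heads[i] is the index of token i's syntactic head, or -1 for the root.
    The returned indices are sorted into sentence order.
    """
    adjacency = {i: set() for i in range(len(heads))}
    for child, head in enumerate(heads):
        if head >= 0:  # treat the tree as undirected for path finding
            adjacency[child].add(head)
            adjacency[head].add(child)
    previous = {start: None}
    queue = deque([start])
    while queue:  # breadth-first search from the first entity
        node = queue.popleft()
        if node == end:
            break
        for neighbour in sorted(adjacency[node]):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    if end not in previous:
        return []
    path, node = [], end
    while node is not None:  # walk back from the second entity
        path.append(node)
        node = previous[node]
    return sorted(path)


def structural_block(tokens, heads, e1, e2):
    """Hypothetical block: the contiguous span covering the dependency path."""
    path = dependency_path(heads, e1, e2)
    return tokens[path[0]: path[-1] + 1] if path else []


tokens = ["The", "company", "fabricates", "plastic", "chairs", "every", "year"]
heads = [1, 2, -1, 4, 2, 6, 2]  # hand-written parse for illustration
print(structural_block(tokens, heads, 1, 4))
# ['company', 'fabricates', 'plastic', 'chairs']
```

Tokens off the path ("The", "every year") fall outside the block, which matches the stated aim of removing noise from irrelevant parts of the sentence.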

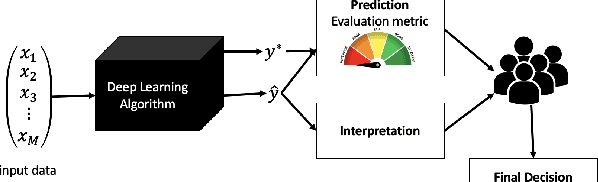

Counterfactuals and Causability in Explainable Artificial Intelligence: Theory, Algorithms, and Applications

Mar 07, 2021

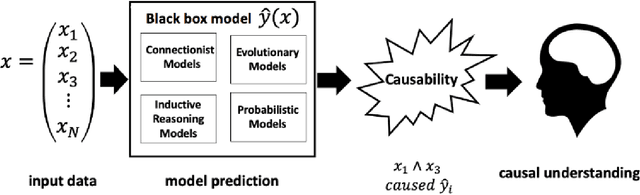

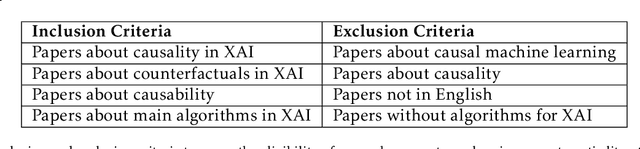

Abstract: There has been a growing interest in model-agnostic methods that can make deep learning models more transparent and explainable to a user. Some researchers have recently argued that for a machine to achieve a certain degree of human-level explainability, it needs to provide explanations that humans can understand causally, a property also known as causability. Counterfactuals are a specific class of algorithms with the potential to provide causability. This paper presents an in-depth systematic review of the diverse body of existing literature on counterfactuals and causability for explainable artificial intelligence. We performed an LDA topic modelling analysis under a PRISMA framework to find the most relevant articles. This analysis resulted in a novel taxonomy that considers the grounding theories of the surveyed algorithms, together with their underlying properties and applications to real-world data. This research suggests that current model-agnostic counterfactual algorithms for explainable AI are not grounded in a causal theoretical formalism and, consequently, cannot promote causability to a human decision-maker. Our findings suggest that the explanations derived from major algorithms in the literature provide spurious correlations rather than cause-effect relationships, leading to sub-optimal, erroneous or even biased explanations. This paper also advances the literature with new directions and challenges for promoting causability in model-agnostic approaches to explainable artificial intelligence.

An Interpretable Probabilistic Approach for Demystifying Black-box Predictive Models

Jul 21, 2020

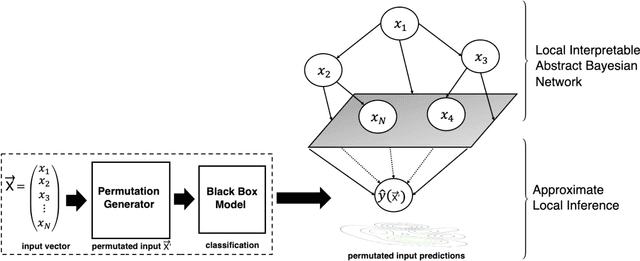

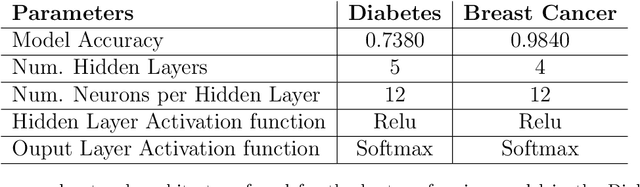

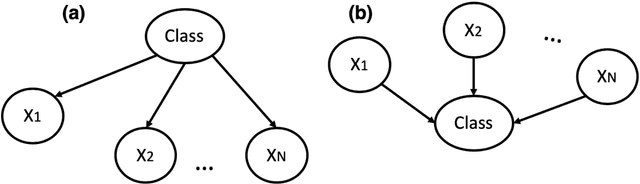

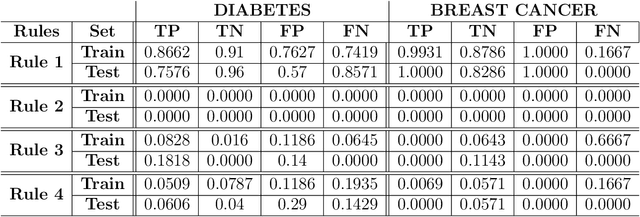

Abstract: The use of sophisticated machine learning models for critical decision making faces the challenge that these models are often applied as "black boxes". This has led to an increased interest in interpretable machine learning, where post hoc interpretation offers a useful mechanism for generating interpretations of complex learning models. In this paper, we propose a novel approach, underpinned by an extended framework of Bayesian networks, for generating post hoc interpretations of a black-box predictive model. The framework supports extracting a Bayesian network as an approximation of the black-box model for a specific prediction. Compared to existing post hoc interpretation methods, our approach makes a three-fold contribution. First, the extracted Bayesian network, as a probabilistic graphical model, can explain not only which input features contributed to a prediction but also why they did. Second, for complex decision problems with many features, a Markov blanket can be generated from the extracted Bayesian network to provide interpretations focused on the input features that directly contributed to a prediction. Third, the extracted Bayesian network enables the identification of four different rules that can inform the decision-maker about the confidence level in a prediction, thus helping the decision-maker assess the reliability of predictions learned by a black-box model. We implemented the proposed approach, applied it to two well-known public datasets, and analysed the results, which are made available in an open-source repository.
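The Markov blanket step can be illustrated on its own. A minimal sketch, assuming the extracted Bayesian network is already available as a DAG mapping each node to its parents; the node names are hypothetical and the extraction procedure itself is not shown. The blanket of a node is its parents, its children, and its children's other parents.

```python
def markov_blanket(parents, target):
    """Markov blanket of `target` in a DAG given as {node: set_of_parents}."""
    if target not in parents:
        raise KeyError(target)
    blanket = set(parents[target])  # parents (copied, input is not mutated)
    children = {node for node, ps in parents.items() if target in ps}
    blanket |= children  # children
    for child in children:
        blanket |= parents[child]  # co-parents of each child
    blanket.discard(target)
    return blanket


# Hypothetical network: y is the prediction node, x1..x5 are input features.
network = {
    "x1": set(),
    "x2": set(),
    "x3": {"x2"},
    "y": {"x2", "x3"},
    "x4": {"y", "x1"},
    "x5": set(),
}
print(sorted(markov_blanket(network, "y")))  # ['x1', 'x2', 'x3', 'x4']
```

Feature `x5` is excluded: given its blanket, it carries no further information about `y`, which is what lets a blanket narrow an explanation to the directly relevant features.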

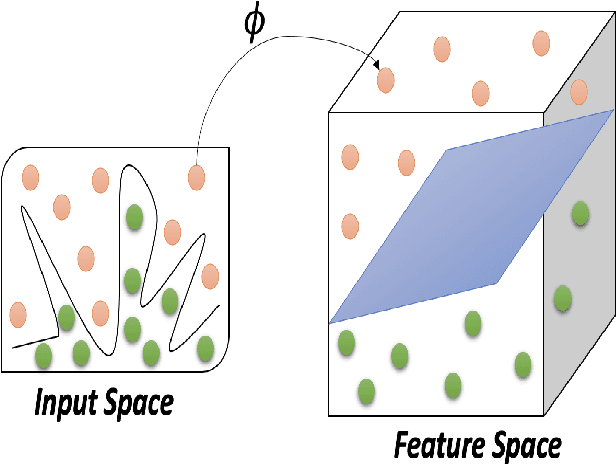

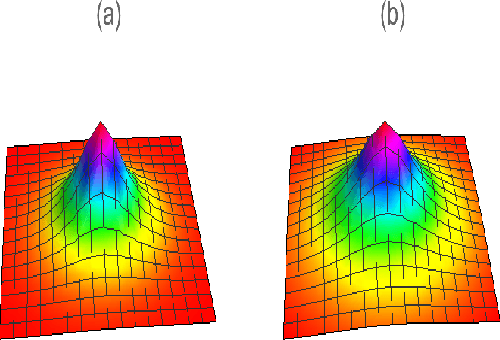

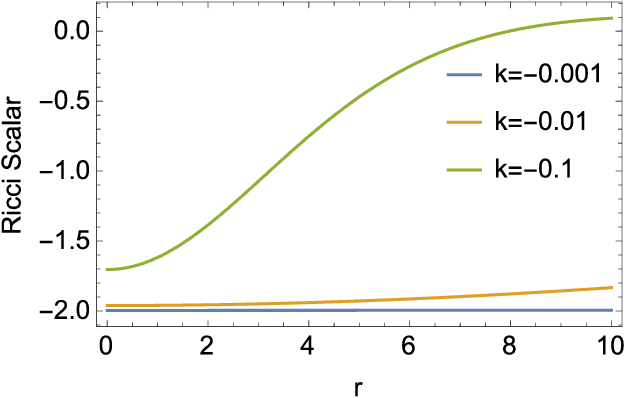

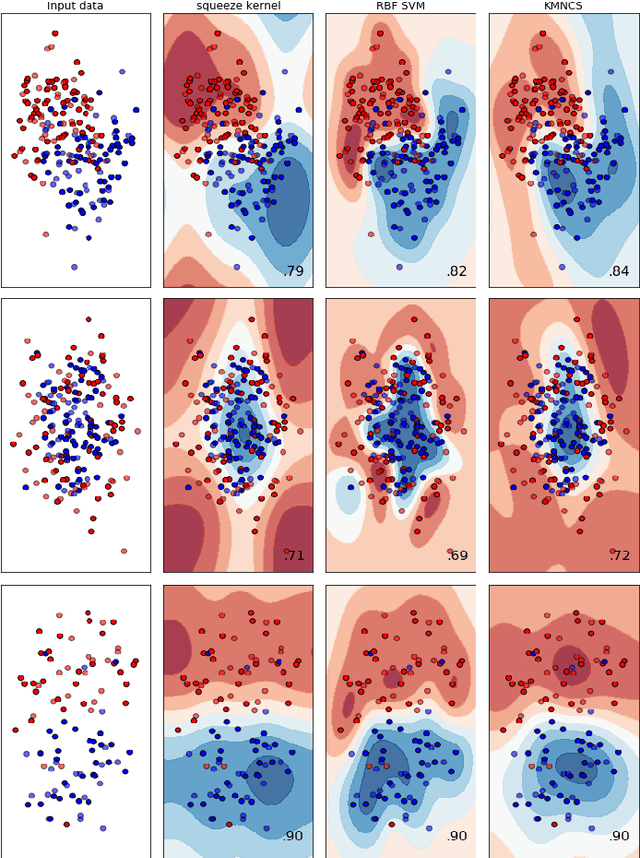

Kernel Method based on Non-Linear Coherent State

Jul 15, 2020

Abstract: In this paper, by mapping datasets to a set of non-linear coherent states, we reinterpret the process of encoding inputs in quantum states as a non-linear feature map. Since the Radial Basis Function kernel is recovered when data are mapped to a complex Hilbert space represented by coherent states, non-linear coherent states can be considered a natural generalisation of the associated kernels. By considering the non-linear coherent states of a quantum oscillator with variable mass, we propose a kernel function based on generalized hypergeometric functions, as orthogonal polynomial functions. The suggested kernel is implemented with a support vector machine on two well-known datasets (make circles and make moons) and outperforms the baselines, even in the presence of high noise. In addition, we study the impact of the geometrical properties of the feature space obtained from non-linear coherent states on the SVM classification task, using the Fubini-Study metric of the associated coherent states.
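The statement that coherent states recover the RBF kernel can be checked numerically. A minimal sketch, assuming each real feature is encoded as a standard (linear) Glauber coherent state truncated at a hypothetical cutoff of `n_max` Fock levels. The fidelity |⟨α|β⟩|² = exp(−|α − β|²) then reproduces the Gaussian kernel with γ = 1. The paper's non-linear coherent states and hypergeometric kernel are not implemented here.

```python
import math


def coherent_state(alpha, n_max=60):
    """Truncated Fock-basis amplitudes of a Glauber coherent state |alpha>."""
    norm = math.exp(-abs(alpha) ** 2 / 2)
    return [norm * alpha ** n / math.sqrt(math.factorial(n)) for n in range(n_max)]


def overlap(a, b):
    """<a|b> for two amplitude vectors (conjugate-linear in the first argument)."""
    return sum(x.conjugate() * y for x, y in zip(a, b))


def coherent_kernel(x, y, n_max=60):
    """Fidelity kernel: product over features of |<x_j|y_j>|^2."""
    value = 1.0
    for xj, yj in zip(x, y):
        value *= abs(overlap(coherent_state(complex(xj), n_max),
                             coherent_state(complex(yj), n_max))) ** 2
    return value


def rbf_kernel(x, y):
    """Gaussian RBF kernel exp(-||x - y||^2), i.e. gamma = 1."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(x, y)))


x, y = (0.5, -1.2), (1.0, 0.3)
print(coherent_kernel(x, y), rbf_kernel(x, y))  # both approx exp(-2.5)
```

Scaling each feature by √γ before encoding gives exp(−γ‖x − y‖²), so the usual RBF bandwidth corresponds to the amplitude of the encoding.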

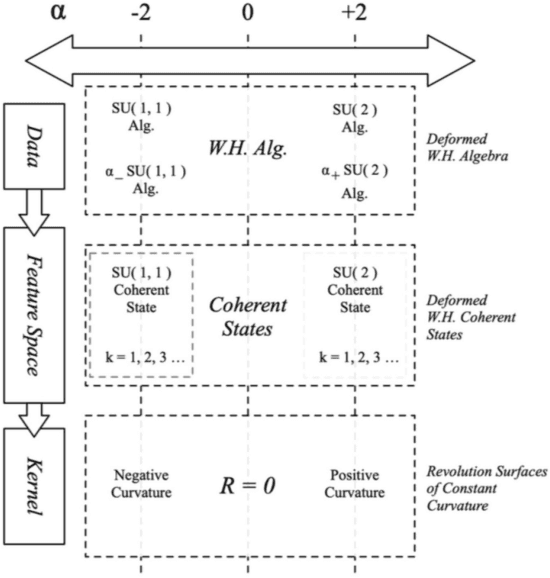

Construction of 'Support Vector' Machine Feature Spaces via Deformed Weyl-Heisenberg Algebra

Jun 02, 2020

Abstract: This paper uses deformed coherent states, based on a deformed Weyl-Heisenberg algebra that unifies the well-known SU(2), Weyl-Heisenberg, and SU(1,1) groups through a common parameter. We show that deformed coherent states provide the theoretical foundation of a meta-kernel function, that is, a kernel which in turn defines kernel functions. Kernel functions drive developments in the field of machine learning, and the meta-kernel function presented in this paper opens new theoretical avenues for the definition and exploration of kernel functions. The meta-kernel function uses the associated surfaces of revolution as feature spaces identified with non-linear coherent states. An empirical investigation comparing the deformed SU(2) and SU(1,1) kernels derived from the meta-kernel shows performance similar to the Radial Basis Function kernel and offers new insights based on the deformed Weyl-Heisenberg algebra.

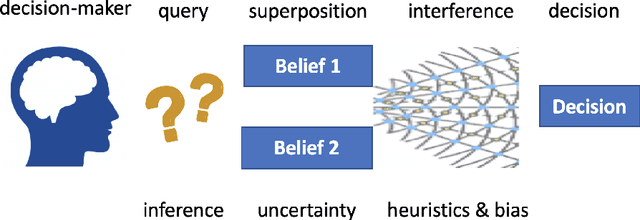

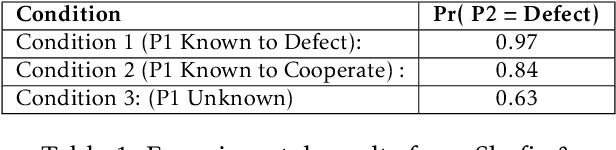

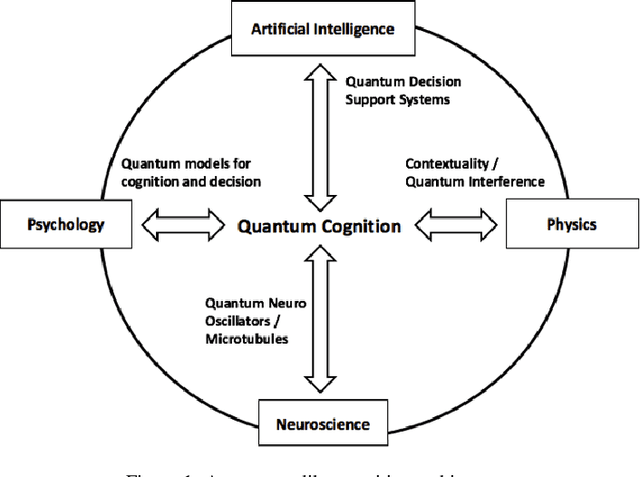

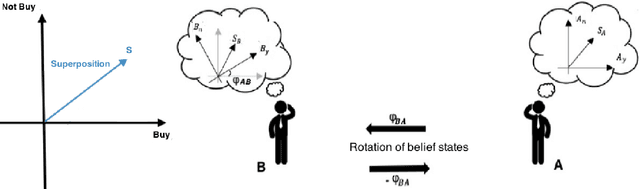

QuLBIT: Quantum-Like Bayesian Inference Technologies for Cognition and Decision

May 30, 2020

Abstract: This paper provides the foundations of a unified cognitive decision-making framework (QuLBIT) derived from quantum theory. The main advantage of this framework is that it can cater for paradoxical and irrational human decision making. Although quantum approaches to cognition have demonstrated advantages over classical probabilistic approaches and bounded rationality models, they still lack explanatory power. To address this, we introduce a novel explanatory analysis of the decision-maker's belief space. This is achieved by exploiting quantum interference effects as a way of both quantifying and explaining the decision-maker's uncertainty. We detail the main modules of the unified framework and the explanatory analysis method, and illustrate their application in situations that violate the Sure Thing Principle.
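The abstract does not give QuLBIT's equations. The sketch below shows only the general quantum-like mechanism common in this literature: the classical law of total probability acquires an interference term 2·√(P(A)P(B|A)·P(¬A)P(B|¬A))·cos θ. Classically, P(B) is a convex combination of P(B|A) and P(B|¬A), so it cannot fall below both; that is the Sure Thing Principle. A negative interference term can push it below both. The probabilities and the phase θ are hypothetical; in a real model they would be fitted or derived, not chosen by hand.

```python
import math


def classical_total(p_a, p_b_given_a, p_b_given_not_a):
    """Classical law of total probability for a binary condition A."""
    return p_a * p_b_given_a + (1 - p_a) * p_b_given_not_a


def quantum_like_total(p_a, p_b_given_a, p_b_given_not_a, theta):
    """Classical total plus an interference term with phase theta.

    Unnormalised: models with several outcomes usually renormalise afterwards.
    """
    interference = 2 * math.sqrt(
        p_a * p_b_given_a * (1 - p_a) * p_b_given_not_a
    ) * math.cos(theta)
    return classical_total(p_a, p_b_given_a, p_b_given_not_a) + interference


# Hypothetical numbers: B is likely whether or not A holds ...
p = quantum_like_total(0.5, 0.69, 0.59, theta=2.0)
# ... yet destructive interference pushes P(B) below both conditionals.
print(p < min(0.69, 0.59))  # True
```

With θ = π/2 the interference term vanishes and the classical result is recovered, so θ acts as a measure of how far a decision departs from classical probability.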

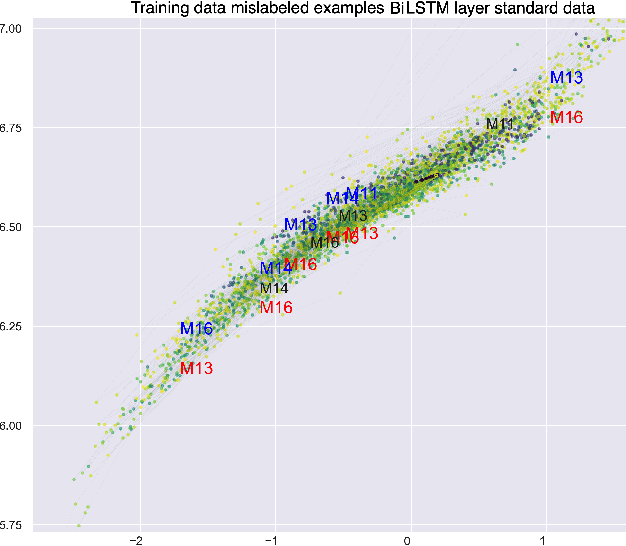

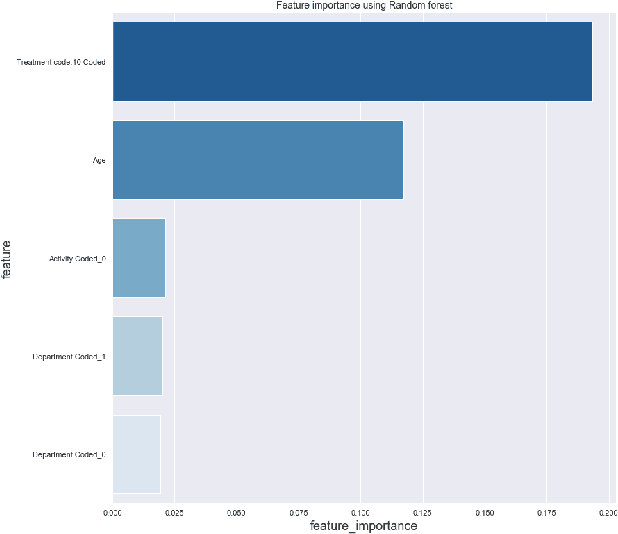

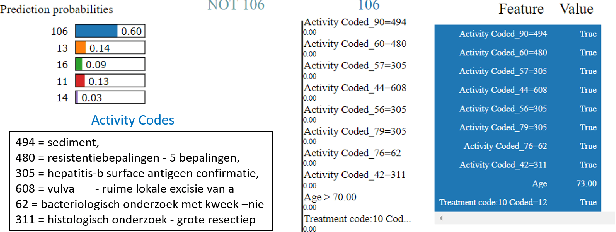

An Investigation of Interpretability Techniques for Deep Learning in Predictive Process Analytics

Feb 21, 2020

Abstract: This paper explores interpretability techniques for two of the most successful learning algorithms in the medical decision-making literature: deep neural networks and random forests. We applied these algorithms to a real-world medical dataset containing information about patients with cancer, learning models that predict a patient's type of cancer from their set of medical activity records. We explored different algorithms based on neural network architectures using long short-term memory (LSTM) deep neural networks, as well as random forests. Since there is a growing need to help decision-makers understand the logic behind the predictions of black boxes, we also explored different techniques that provide interpretations for these classifiers. In one technique, we intercepted some hidden layers of the neural networks and used autoencoders to learn the representation of the input in those hidden layers. In another, we learned an interpretable model locally around the random forest's prediction. Results show that learning an interpretable model locally around the model's prediction leads to a better understanding of why the algorithm makes a given decision. Using a local linear model helps identify the features used to predict a specific instance or data point. We see certain distinct features used for predictions that provide useful insights about the type of cancer, along with features that do not generalize well. In addition, the structured deep learning approach using autoencoders provided meaningful prediction insights, which resulted in the identification of nonlinear clusters corresponding to the patients' different types of cancer.
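Learning an interpretable model locally around a prediction follows the idea popularised by LIME. The sketch is a minimal, numpy-only version. It assumes Gaussian perturbations around the instance, an exponential proximity kernel, and an unregularised weighted linear fit. `black_box`, `scale` and `width` are hypothetical stand-ins for the random forest and its tuning. The `lime` package adds further steps, such as feature discretisation and feature selection.

```python
import numpy as np


def local_linear_surrogate(black_box, instance, n_samples=500, scale=0.5,
                           width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (intercept, coefficients); the intercept estimates the black-box
    output at the instance, the coefficients its local feature effects.
    """
    rng = np.random.default_rng(seed)
    instance = np.asarray(instance, dtype=float)
    # Perturb the instance and query the black box on every perturbation.
    samples = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    targets = np.array([black_box(s) for s in samples])
    # Exponential kernel on Euclidean distance: nearby samples count more.
    distances = np.linalg.norm(samples - instance, axis=1)
    weights = np.exp(-(distances ** 2) / width ** 2)
    # Weighted least squares via rescaling rows by sqrt(weight).
    design = np.column_stack([np.ones(n_samples), samples - instance])
    sqrt_w = np.sqrt(weights)
    solution, *_ = np.linalg.lstsq(design * sqrt_w[:, None],
                                   targets * sqrt_w, rcond=None)
    return solution[0], solution[1:]


def black_box(x):
    """Hypothetical non-linear score standing in for a random forest."""
    return 1.0 / (1.0 + np.exp(-(4.0 * x[0] - x[1] ** 2)))


intercept, coefficients = local_linear_surrogate(black_box, [0.2, 0.4])
print(intercept, coefficients)  # local effect of each feature near the instance
```

The coefficients describe the model only near this instance. That is the sense in which a local linear model "helps identify the features used in prediction of a specific instance" without claiming global validity.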

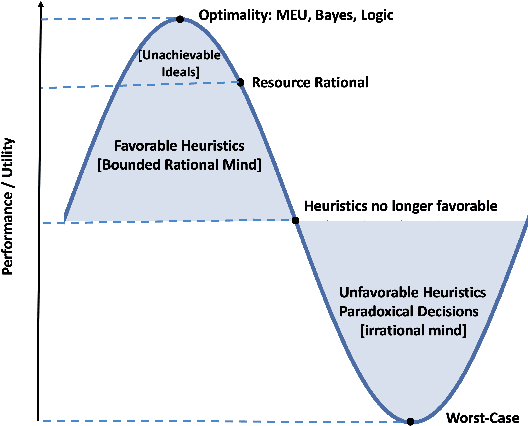

Towards a Quantum-Like Cognitive Architecture for Decision-Making

May 11, 2019

Abstract: We propose an alternative and unifying framework for decision-making that, by using quantum mechanics, provides more generalised cognitive and decision models with the ability to represent more information than classical models. This framework can accommodate and predict several cognitive biases reported in Lieder & Griffiths without heavy reliance on heuristics or on assumptions about the computational resources of the mind.