Pengfei Song

Human Semantic Segmentation using Millimeter-Wave Radar Sparse Point Clouds

Apr 28, 2023

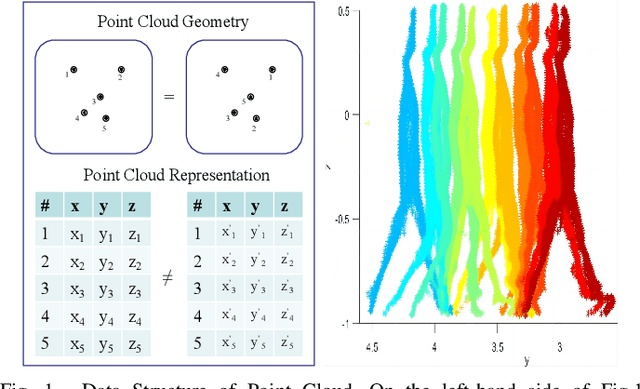

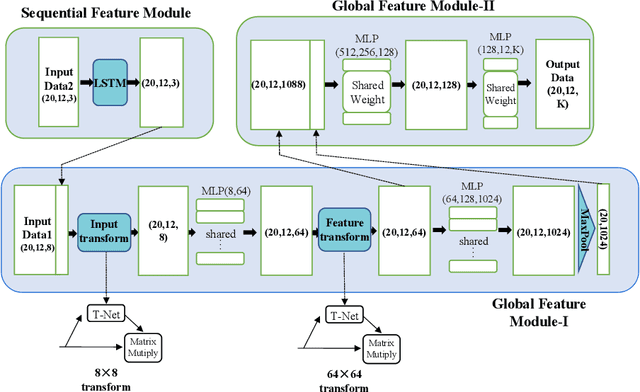

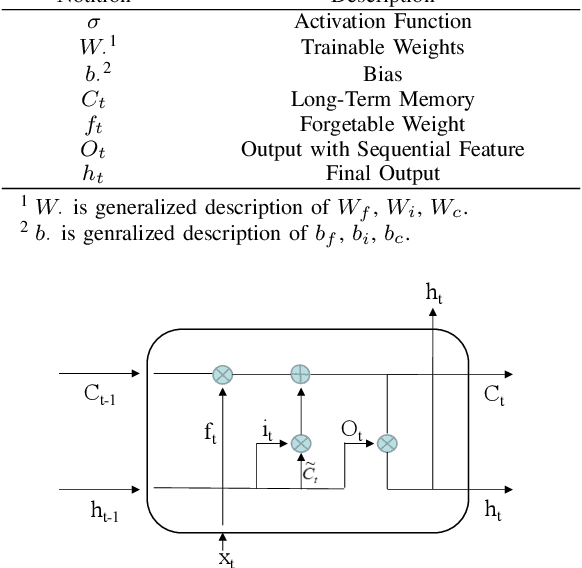

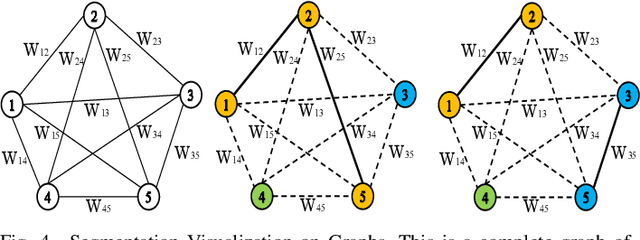

Abstract: This paper presents a framework for semantic segmentation of sparse sequential point clouds from millimeter-wave (mmWave) radar. Compared with cameras and lidars, millimeter-wave radars preserve privacy, resist interference well, and have a long detection range. However, the sparsity of mmWave data and the difficulty of capturing its temporal-topological coupling features in the human semantic segmentation task prevent previous advanced segmentation methods (e.g., PointNet, PointCNN, Point Transformer) from being used effectively in practical scenarios. To address these challenges, we (i) introduce graph structure and topological features to the point cloud, and (ii) propose a semantic segmentation framework that includes a global feature-extracting module and a sequential feature-extracting module. In addition, we design an efficient and better-fitting loss function based on graph clustering to improve training and segmentation results. Experimentally, we deploy representative semantic segmentation algorithms (Transformer, GCNN, etc.) on a custom dataset. The results indicate that our model achieves a mean accuracy of $\mathbf{82.31}\%$ on the custom dataset and outperforms the state-of-the-art algorithms. Moreover, to validate the model's robustness, we deploy it on the well-known S3DIS dataset, where it achieves a mean accuracy of $\mathbf{92.6}\%$, outperforming baseline algorithms.
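One common way to give a sparse point cloud a graph structure, as in step (i) of the abstract above, is a k-nearest-neighbour graph. The abstract does not say how the graph is actually built, so the sketch below is only an illustrative assumption; the function name `knn_graph`, the parameter `k`, and the sample points are hypothetical.

```python
import numpy as np

def knn_graph(points: np.ndarray, k: int) -> np.ndarray:
    """Return an (N, k) array holding the indices of each point's k nearest neighbours.

    Assumption: Euclidean distance on (x, y, z); the paper may use other features.
    """
    # Pairwise squared Euclidean distances, shape (N, N).
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # A point must not be its own neighbour.
    np.fill_diagonal(dist2, np.inf)
    # Column indices of the k smallest distances in each row.
    return np.argsort(dist2, axis=1)[:, :k]

# Four hypothetical radar detections (metres).
points = np.array([[0.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 2.0, 0.0],
                   [5.0, 5.0, 5.0]])
neighbours = knn_graph(points, k=2)
```

Each row of `neighbours` lists the edges leaving one point, which is the adjacency information a graph-based feature extractor would consume.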

High-resolution Power Doppler Using Null Subtraction Imaging

Jan 09, 2023

Abstract: To improve the spatial resolution of power Doppler (PD) imaging, we explored null subtraction imaging (NSI) as an alternative beamforming technique to delay-and-sum (DAS). NSI is a nonlinear beamforming approach that uses three different apodizations on receive and incoherently sums the beamformed envelopes. NSI uses a null in the beam pattern to improve the lateral resolution, which we apply here to improve PD spatial resolution both with and without contrast microbubbles. In this study, we used NSI with singular value decomposition (SVD)-based clutter filtering and noise equalization to generate high-resolution PD images. An element sensitivity correction scheme was also applied to further improve the quality of PD images generated with NSI. First, a microbubble trace experiment was performed to quantitatively evaluate the performance of NSI-based PD. Then, both contrast-enhanced and contrast-free ultrasound data were collected from a rat brain. Higher spatial resolution and image quality were observed in the NSI-based PD microvessel images than in microvessel images generated by traditional DAS-based beamforming.
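The SVD-based clutter filtering step mentioned in the abstract above can be sketched generically as follows. This is a textbook-style illustration, not the authors' pipeline: it omits NSI beamforming, noise equalization, and element sensitivity correction, and the function name `svd_power_doppler` and the fixed cutoff `n_clutter` are assumptions.

```python
import numpy as np

def svd_power_doppler(frames: np.ndarray, n_clutter: int) -> np.ndarray:
    """Compute a power Doppler image from beamformed frames of shape (nz, nx, nt).

    Assumption: the n_clutter largest singular components are tissue clutter.
    """
    nz, nx, nt = frames.shape
    # Casorati matrix: one row per pixel, one column per frame.
    casorati = frames.reshape(nz * nx, nt)
    u, s, vh = np.linalg.svd(casorati, full_matrices=False)
    # Zero the strongest components, which are assumed to be slow-moving tissue.
    s_filtered = s.copy()
    s_filtered[:n_clutter] = 0.0
    blood = (u * s_filtered) @ vh
    # Power Doppler: mean signal power over slow time for each pixel.
    return np.mean(np.abs(blood) ** 2, axis=1).reshape(nz, nx)

# Synthetic example: random frames, no real ultrasound data.
rng = np.random.default_rng(0)
frames = rng.standard_normal((4, 3, 8))
pd_image = svd_power_doppler(frames, n_clutter=1)
```

In practice the cutoff is chosen from the singular value spectrum or adaptively; a fixed integer is used here only to keep the sketch short.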

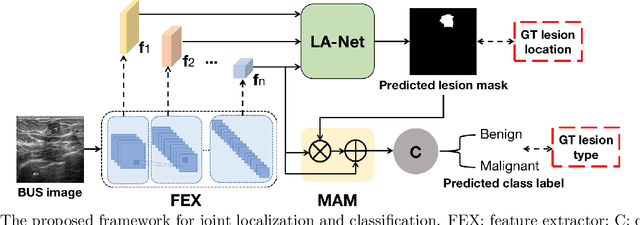

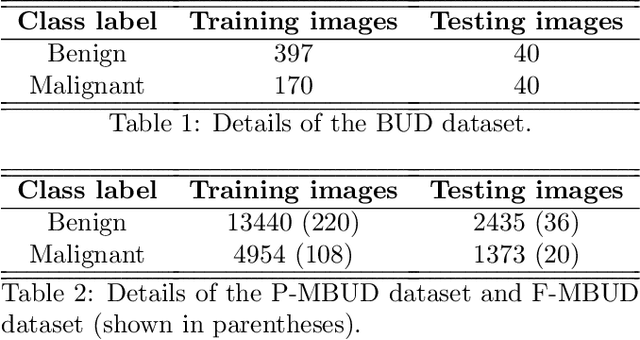

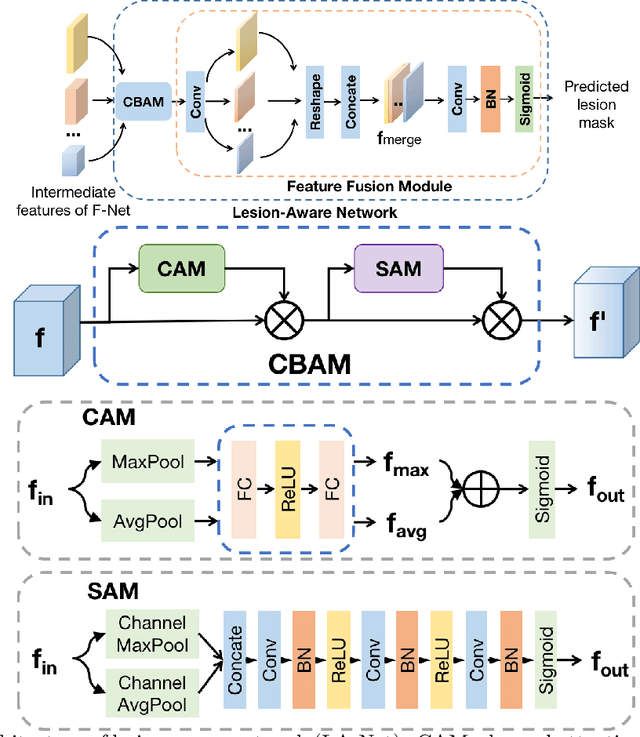

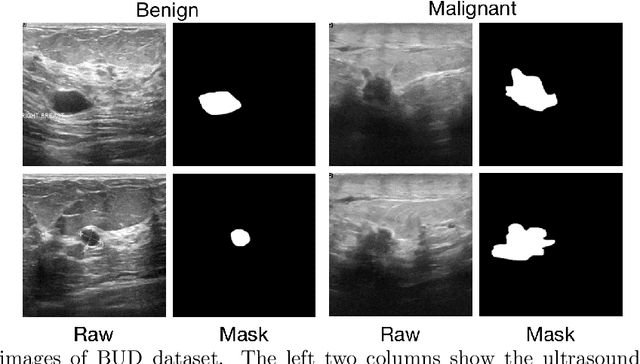

Joint localization and classification of breast tumors on ultrasound images using a novel auxiliary attention-based framework

Oct 11, 2022

Abstract: Automatic breast lesion detection and classification is an important task in computer-aided diagnosis, for which breast ultrasound (BUS) imaging is a frequently used screening tool. Recently, a number of deep learning-based methods have been proposed for joint localization and classification of breast lesions using BUS images. In these methods, features extracted by a shared network trunk are passed to two independent network branches that perform classification and localization. Improper information sharing might cause conflicts in feature optimization between the two branches and lead to performance degradation. Also, these methods generally require large amounts of pixel-level annotated data for model training. To overcome these limitations, we proposed a novel joint localization and classification model based on the attention mechanism and a disentangled semi-supervised learning strategy. The model used in this study is composed of a classification network and an auxiliary lesion-aware network. Using the attention mechanism, the auxiliary lesion-aware network can optimize multi-scale intermediate feature maps and extract rich semantic information to improve classification and localization performance. The disentangled semi-supervised learning strategy requires only incomplete training datasets for model training. The proposed modularized framework allows flexible network replacement so that it can be generalized to various applications. Experimental results on two different breast ultrasound image datasets demonstrate the effectiveness of the proposed method. The impacts of various network factors on model performance are also investigated to gain deeper insight into the designed framework.

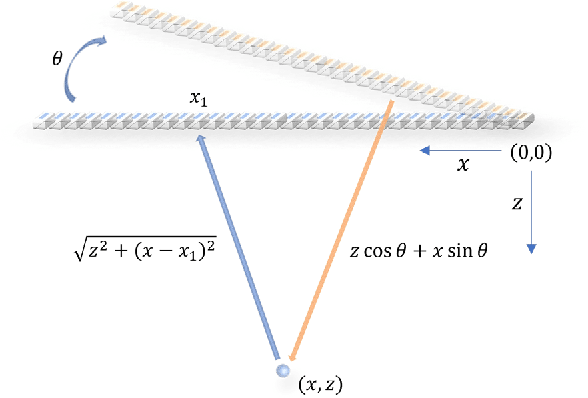

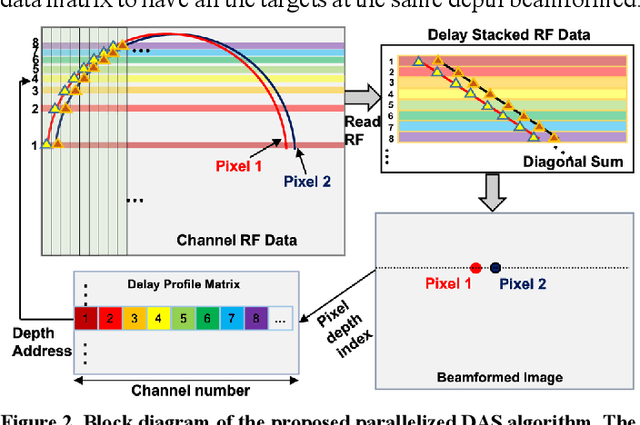

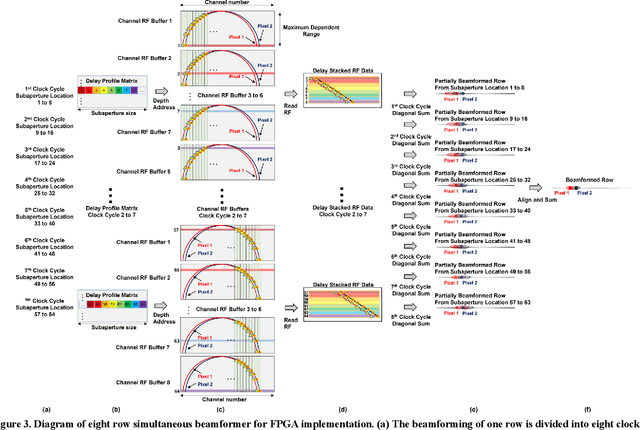

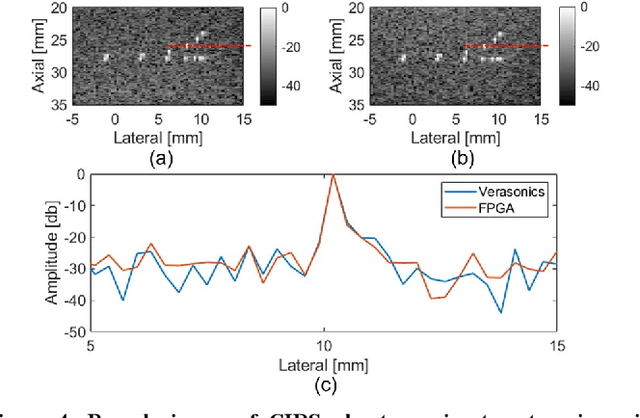

Towards a real-time continuous ultrafast ultrasound beamformer with programmable logic

Aug 06, 2022

Abstract: Ultrafast ultrasound imaging is essential for advanced ultrasound imaging techniques such as ultrasound localization microscopy (ULM) and functional ultrasound (fUS). Current ultrafast ultrasound imaging is challenged by the ultrahigh data bandwidth associated with the radio frequency (RF) signal, and by the latency of the computationally expensive beamforming process. As such, continuous ultrafast data acquisition and beamforming remain elusive with existing software beamformers based on CPUs or GPUs. To address these challenges, the proposed work introduces a hybrid solution composed of an improved delay-and-sum (DAS) algorithm with high hardware efficiency and an ultrafast beamformer based on a field-programmable gate array (FPGA). Our proposed method presents two unique advantages over conventional FPGA-based beamformers: 1) high scalability that allows fast adaptation to different FPGA platforms; 2) high adaptability to different imaging probes and applications thanks to the absence of hard-coded imaging parameters. With the proposed method, we measured an ultrafast beamforming rate of over 3.38 GPixels/second. The performance of the proposed beamformer was compared with the software beamformer on the Verasonics Vantage system for both phantom imaging and in vivo imaging of a mouse brain. Multiple imaging schemes including B-mode, power Doppler and ULM were evaluated with the proposed solution.
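For readers unfamiliar with delay-and-sum, the conventional algorithm that the abstract above builds on can be sketched for a single 0-degree plane-wave transmit. This is a minimal software illustration under stated assumptions (linear array, nearest-sample delays, no apodization); it is not the improved DAS algorithm or the FPGA design described in the paper, and all names and parameter values are hypothetical.

```python
import numpy as np

def das_plane_wave(rf, elem_x, grid_x, grid_z, c, fs):
    """Beamform rf of shape (n_samples, n_elements) onto a (len(grid_z), len(grid_x)) grid."""
    n_samples, n_elem = rf.shape
    image = np.zeros((len(grid_z), len(grid_x)))
    elem_idx = np.arange(n_elem)
    for iz, z in enumerate(grid_z):
        for ix, x in enumerate(grid_x):
            # Transmit delay (plane wave reaches depth z) plus
            # receive delay (echo travels from the pixel back to each element).
            t = (z + np.sqrt(z ** 2 + (x - elem_x) ** 2)) / c
            idx = np.rint(t * fs).astype(int)  # nearest-sample delay
            valid = idx < n_samples
            # Sum the delayed samples across the aperture.
            image[iz, ix] = rf[idx[valid], elem_idx[valid]].sum()
    return image

# Synthetic point scatterer; every value here is an assumed example setting.
c, fs = 1540.0, 20e6                      # speed of sound (m/s), sampling rate (Hz)
elem_x = np.linspace(-5e-3, 5e-3, 16)     # element positions (m)
grid_x = np.array([-2e-3, 0.0, 2e-3])
grid_z = np.array([8e-3, 10e-3, 12e-3])
x0, z0 = grid_x[1], grid_z[1]             # scatterer placed on the centre pixel
rf = np.zeros((1024, elem_x.size))
t0 = (z0 + np.sqrt(z0 ** 2 + (x0 - elem_x) ** 2)) / c
rf[np.rint(t0 * fs).astype(int), np.arange(elem_x.size)] = 1.0
image = das_plane_wave(rf, elem_x, grid_x, grid_z, c, fs)
```

The per-pixel delay computation and the sum over elements are independent across pixels, which is what makes the algorithm a natural fit for parallel hardware such as an FPGA.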

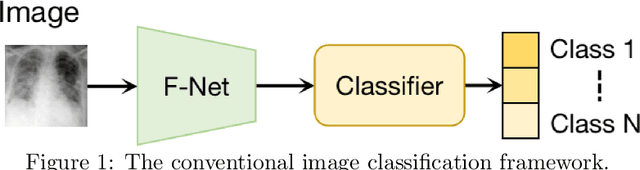

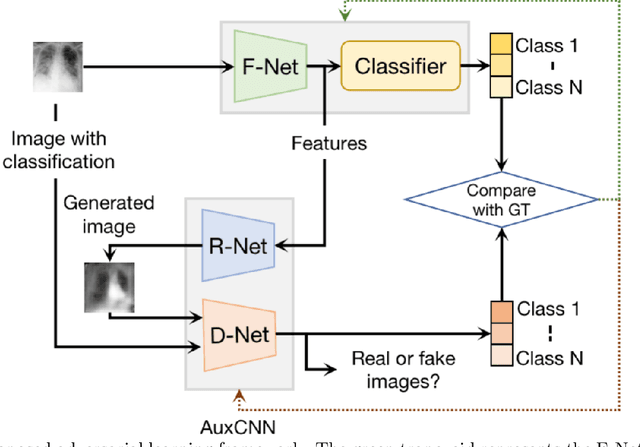

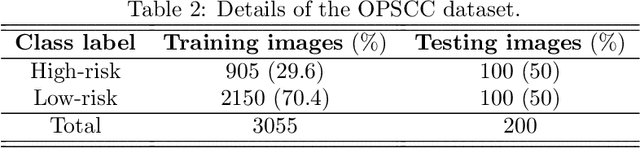

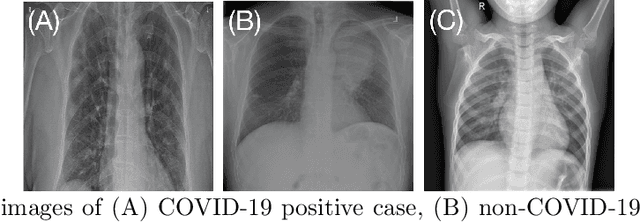

A novel adversarial learning strategy for medical image classification

Jul 07, 2022

Abstract: Deep learning (DL) techniques have been extensively utilized for medical image classification. Most DL-based classification networks are structured hierarchically and optimized by minimizing a single loss function measured at the end of the network. However, such a single-loss design could optimize one specific value of interest while failing to leverage informative features from intermediate layers that might benefit classification performance and reduce the risk of overfitting. Recently, auxiliary convolutional neural networks (AuxCNNs) have been employed on top of traditional classification networks to facilitate the training of intermediate layers and thereby improve classification performance and robustness. In this study, we proposed an adversarial learning-based AuxCNN to support the training of deep neural networks for medical image classification. Two main innovations were adopted in our AuxCNN classification framework. First, the proposed AuxCNN architecture includes an image generator and an image discriminator for extracting more informative image features for medical image classification, motivated by the concept of the generative adversarial network (GAN) and its impressive ability to approximate a target data distribution. Second, a hybrid loss function is designed to guide the model training by incorporating the different objectives of the classification network and the AuxCNN to reduce overfitting. Comprehensive experimental studies demonstrated the superior classification performance of the proposed model. The effect of network-related factors on classification performance was also investigated.
