Zong Fan

Joint localization and classification of breast tumors on ultrasound images using a novel auxiliary attention-based framework

Oct 11, 2022

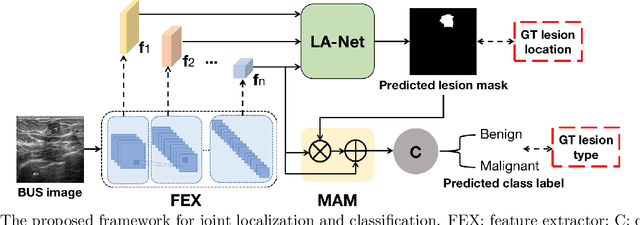

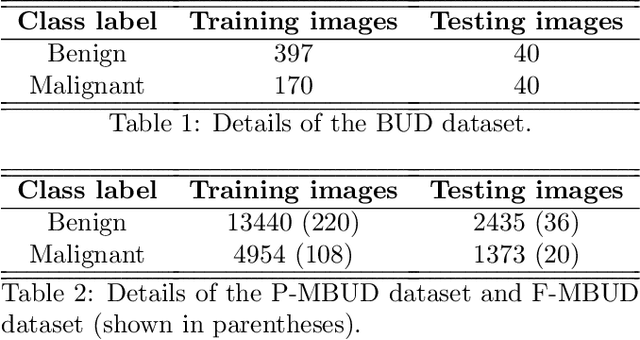

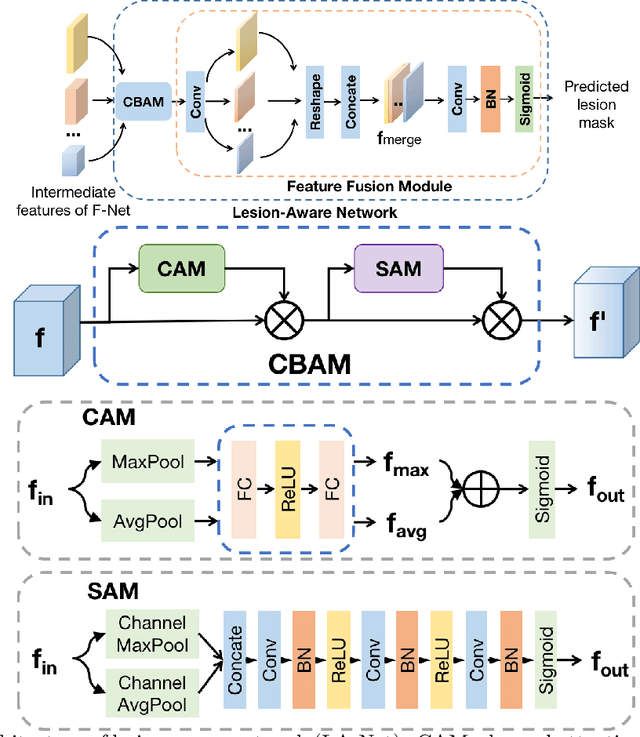

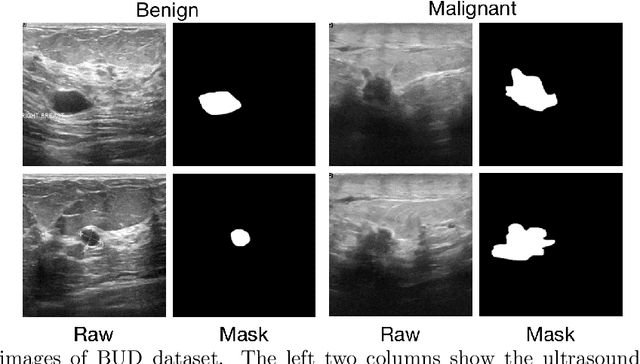

Abstract: Automatic breast lesion detection and classification is an important task in computer-aided diagnosis, in which breast ultrasound (BUS) imaging is a frequently used screening tool. Recently, a number of deep learning-based methods have been proposed for joint localization and classification of breast lesions using BUS images. In these methods, features extracted by a shared network trunk are passed to two independent network branches that perform classification and localization. Improper information sharing might cause conflicts in feature optimization between the two branches and lead to performance degradation. Also, these methods generally require large amounts of pixel-level annotated data for model training. To overcome these limitations, we proposed a novel joint localization and classification model based on the attention mechanism and a disentangled semi-supervised learning strategy. The model is composed of a classification network and an auxiliary lesion-aware network. Using the attention mechanism, the auxiliary lesion-aware network can optimize multi-scale intermediate feature maps and extract rich semantic information to improve classification and localization performance. The disentangled semi-supervised learning strategy requires only incomplete training datasets for model training. The proposed modular framework allows individual networks to be replaced flexibly, so it can be generalized to various applications. Experimental results on two different breast ultrasound image datasets demonstrate the effectiveness of the proposed method. The impacts of various network factors on model performance are also investigated to gain deeper insight into the designed framework.
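As a rough illustration of how an attention mechanism can reweight an intermediate feature map, the sketch below scales each spatial location by a sigmoid attention score. It is not the authors' architecture: the function name `apply_spatial_attention`, the use of a sigmoid gate, and the toy 2x2 inputs are all assumptions made for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Map a raw attention logit to a weight in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def apply_spatial_attention(feature_map, attention_logits):
    """Scale each location of a 2D feature map by its attention weight.

    Hypothetical helper: both arguments are lists of rows with the same shape.
    """
    if len(feature_map) != len(attention_logits):
        raise ValueError("feature map and attention logits must have the same height")
    attended = []
    for feature_row, logit_row in zip(feature_map, attention_logits):
        if len(feature_row) != len(logit_row):
            raise ValueError("feature map and attention logits must have the same width")
        attended.append([f * sigmoid(a) for f, a in zip(feature_row, logit_row)])
    return attended


# Toy example: a large positive logit keeps a feature, a large negative one suppresses it.
features = [[1.0, 2.0], [3.0, 4.0]]
logits = [[0.0, 10.0], [-10.0, 0.0]]
attended = apply_spatial_attention(features, logits)
```

In a lesion-aware setting, high attention weights would ideally concentrate on the lesion region, so that downstream classification relies mostly on features from that region.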

A novel adversarial learning strategy for medical image classification

Jul 07, 2022

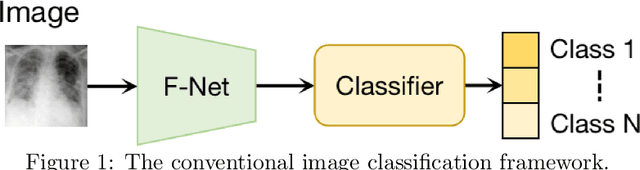

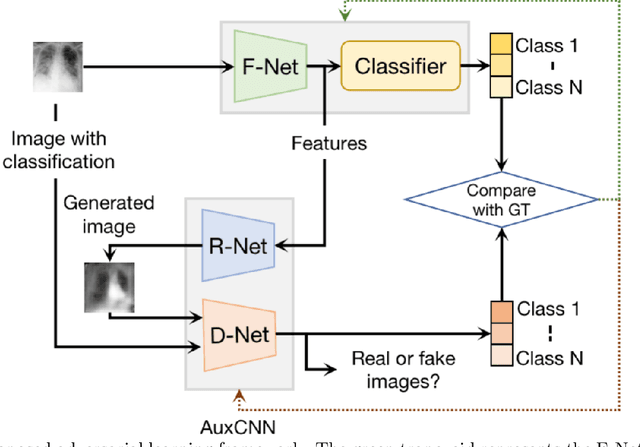

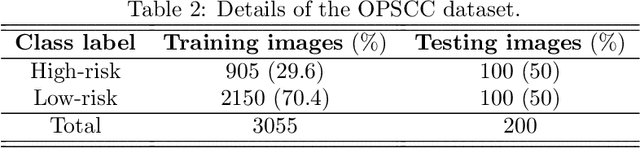

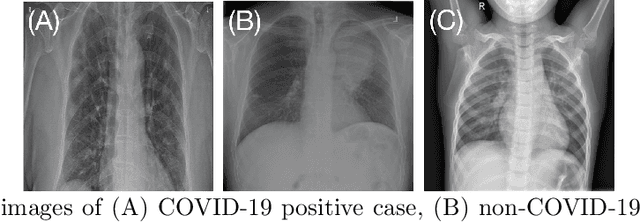

Abstract: Deep learning (DL) techniques have been extensively utilized for medical image classification. Most DL-based classification networks are structured hierarchically and optimized by minimizing a single loss function measured at the end of the network. However, such a single-loss design could optimize one specific value of interest but fail to leverage informative features from intermediate layers that might benefit classification performance and reduce the risk of overfitting. Recently, auxiliary convolutional neural networks (AuxCNNs) have been employed on top of traditional classification networks to facilitate the training of intermediate layers and thereby improve classification performance and robustness. In this study, we proposed an adversarial learning-based AuxCNN to support the training of deep neural networks for medical image classification. Two main innovations were adopted in our AuxCNN classification framework. First, the proposed AuxCNN architecture includes an image generator and an image discriminator for extracting more informative image features for medical image classification, motivated by the concept of the generative adversarial network (GAN) and its impressive ability to approximate a target data distribution. Second, a hybrid loss function is designed to guide model training by incorporating the different objectives of the classification network and the AuxCNN to reduce overfitting. Comprehensive experimental studies demonstrated the superior classification performance of the proposed model. The effects of network-related factors on classification performance were also investigated.
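One common way to combine a classification objective with an auxiliary objective is a weighted sum, sketched below. The abstract does not state the actual form of the hybrid loss, so the weighted-sum structure, the `aux_weight` parameter, and the scalar `aux_loss` standing in for the AuxCNN objectives are all assumptions made for illustration.

```python
import math


def cross_entropy(class_probs, label):
    """Negative log-likelihood of the true class (probabilities are clamped for stability)."""
    return -math.log(max(class_probs[label], 1e-12))


def hybrid_loss(class_probs, label, aux_loss, aux_weight=0.5):
    """Hypothetical hybrid objective: classification loss plus a weighted auxiliary term.

    aux_loss is a placeholder scalar for whatever the auxiliary network contributes.
    """
    return cross_entropy(class_probs, label) + aux_weight * aux_loss


# Toy example: the true class (index 1) is predicted with probability 0.75.
loss = hybrid_loss([0.25, 0.75], label=1, aux_loss=0.4, aux_weight=0.5)
```

Setting `aux_weight` to zero recovers the single-loss design that the abstract contrasts against, which makes the weight a convenient knob for ablation in a sketch like this.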

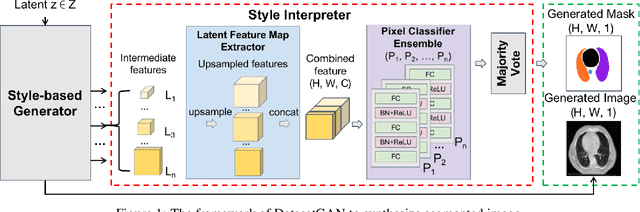

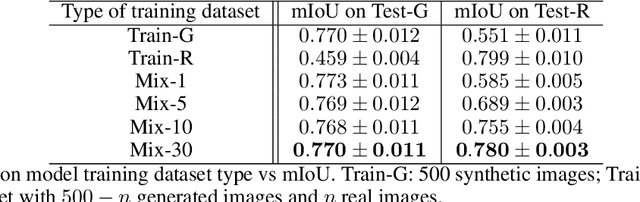

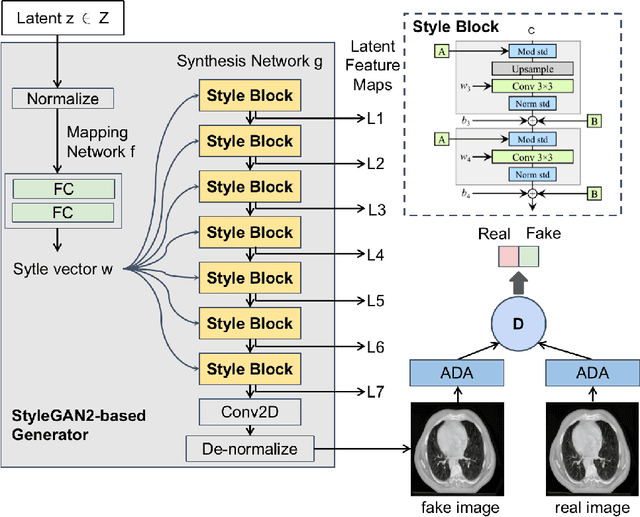

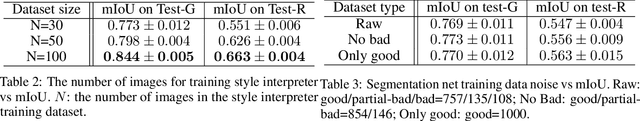

Application of DatasetGAN in medical imaging: preliminary studies

Feb 27, 2022

Abstract: Generative adversarial networks (GANs) have been widely investigated for many potential applications in medical imaging. DatasetGAN is a recently proposed framework based on modern GANs that can synthesize high-quality segmented images while requiring only a small set of annotated training images. The synthesized annotated images could potentially be employed in many medical imaging applications where images with segmentation information are required. However, to the best of our knowledge, there are no published studies focusing on its applications to medical imaging. In this work, preliminary studies were conducted to investigate the utility of DatasetGAN in medical imaging. Three improvements to the original DatasetGAN framework were proposed, considering the unique characteristics of medical images. The segmented images synthesized by DatasetGAN were visually evaluated. The trained DatasetGAN was further analyzed by evaluating the performance of a pre-defined image segmentation technique trained on the synthesized datasets. The effectiveness, concerns, and potential usage of DatasetGAN were discussed.
