Peng Lei

BARS: Joint Search of Cell Topology and Layout for Accurate and Efficient Binary ARchitectures

Dec 21, 2020

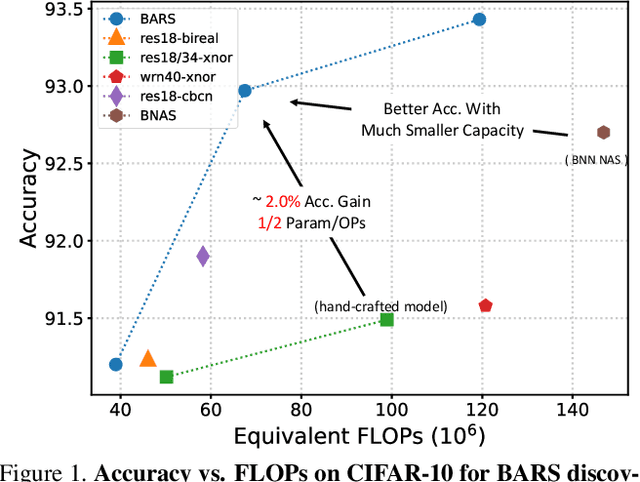

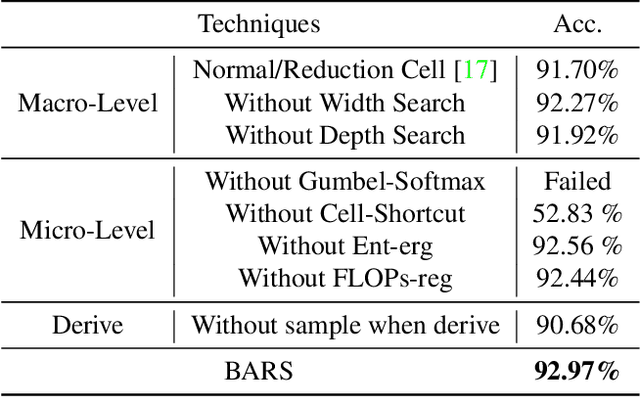

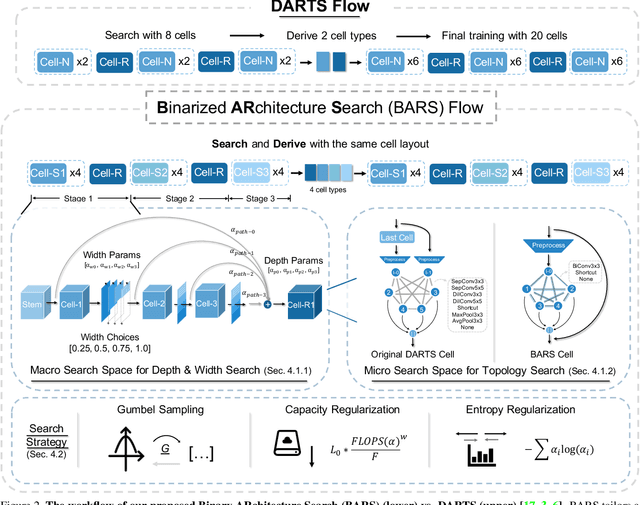

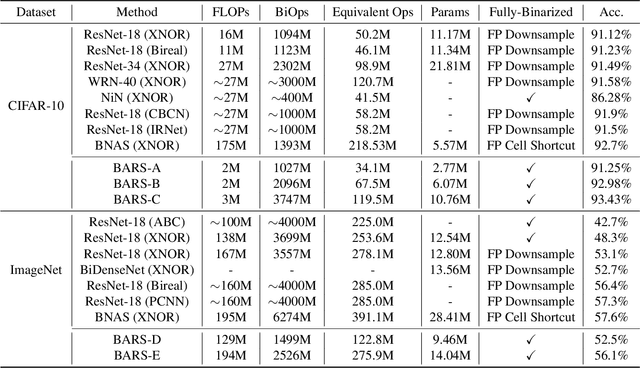

Abstract: Binary Neural Networks (BNNs) have received significant attention due to their promising efficiency. Currently, most BNN studies directly adopt widely used CNN architectures, which can be suboptimal for BNNs. This paper proposes a novel Binary ARchitecture Search (BARS) flow to discover superior binary architectures in a large design space. Specifically, we design a two-level (Macro & Micro) search space tailored for BNNs and apply differentiable neural architecture search (NAS) to explore this search space efficiently. The macro-level search space includes depth and width decisions, which are required for better balancing model performance and capacity. We also modify the micro-level search space to strengthen the information flow for BNNs. A notable challenge of BNN architecture search is that binary operations exacerbate the "collapse" problem of differentiable NAS, so we incorporate various search and derive strategies to stabilize the search process. On CIFAR-10, BARS achieves 1.5% higher accuracy with 2/3 binary Ops and $1/10$ floating-point Ops. On ImageNet, with similar resource consumption, the BARS-discovered architecture achieves a 3% accuracy gain over hand-crafted architectures, while removing the full-precision downsample layer.

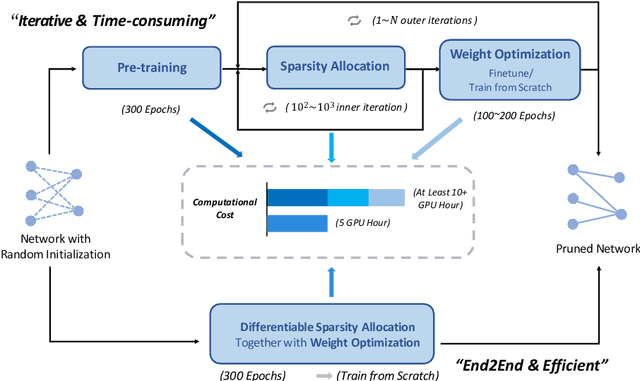

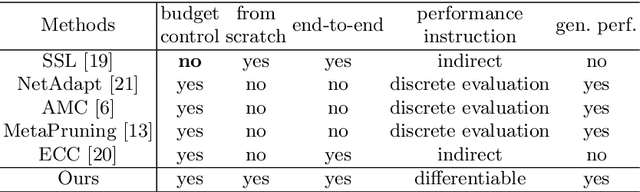

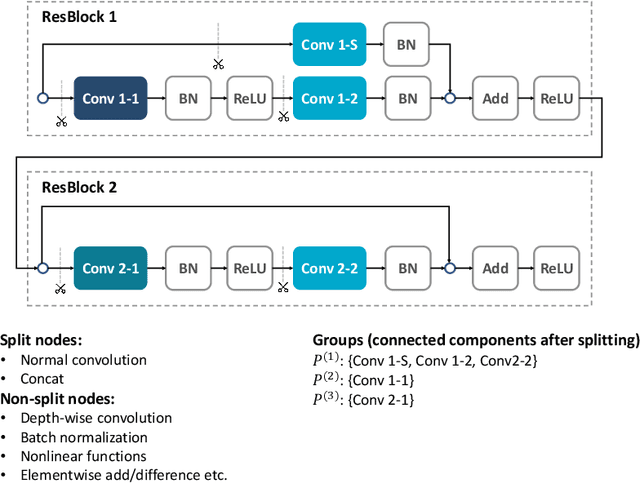

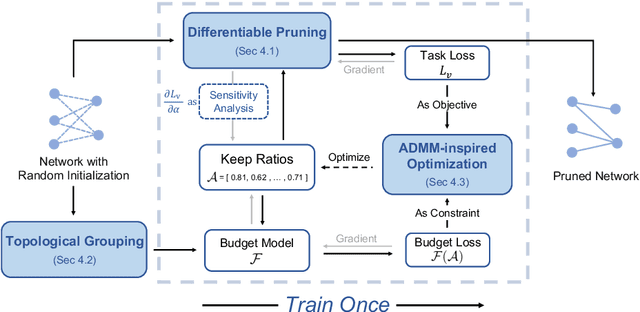

DSA: More Efficient Budgeted Pruning via Differentiable Sparsity Allocation

Apr 26, 2020

Abstract: Budgeted pruning is the problem of pruning under resource constraints. In budgeted pruning, how to distribute the resources across layers (i.e., sparsity allocation) is the key problem. Traditional methods solve it by discretely searching for the layer-wise pruning ratios, which is inefficient. In this paper, we propose Differentiable Sparsity Allocation (DSA), an efficient end-to-end budgeted pruning flow. Utilizing a novel differentiable pruning process, DSA finds the layer-wise pruning ratios with gradient-based optimization. It allocates sparsity in continuous space, which is more efficient than methods based on discrete evaluation and search. Furthermore, DSA can work in a pruning-from-scratch manner, whereas traditional budgeted pruning methods are applied to pre-trained models. Experimental results on CIFAR-10 and ImageNet show that DSA achieves better performance than current iterative budgeted pruning methods, while shortening the time cost of the overall pruning process by at least 1.5x.

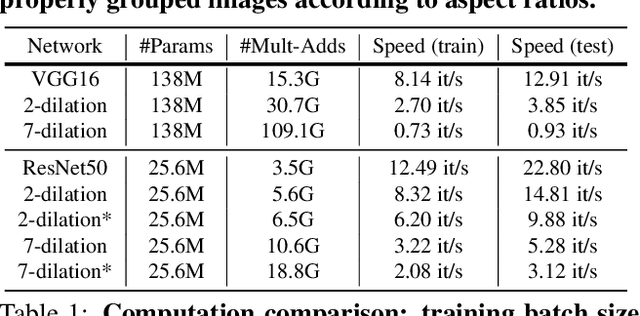

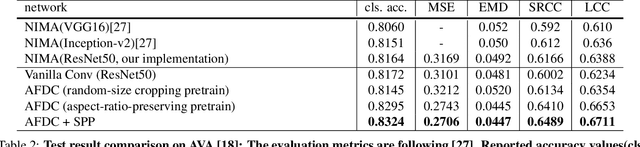

Adaptive Fractional Dilated Convolution Network for Image Aesthetics Assessment

Apr 06, 2020

Abstract: To leverage deep learning for image aesthetics assessment, one critical but unsolved issue is how to seamlessly incorporate the information of image aspect ratios to learn more robust models. In this paper, an adaptive fractional dilated convolution (AFDC), which is aspect-ratio-embedded, composition-preserving and parameter-free, is developed to tackle this issue natively at the convolutional kernel level. Specifically, the fractional dilated kernel is adaptively constructed according to the image aspect ratio, where interpolation between the two nearest integer-dilated kernels is used to cope with the misalignment of fractional sampling. Moreover, we provide a concise formulation for mini-batch training and utilize a grouping strategy to reduce computational overhead. As a result, it can be easily implemented with common deep learning libraries and plugged into popular CNN architectures in a computation-efficient manner. Our experimental results demonstrate that the proposed method achieves state-of-the-art performance on image aesthetics assessment over the AVA dataset.
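The interpolation idea in the abstract above can be illustrated with a minimal 1-D sketch. This is not the authors' implementation: the function names `dilated_conv1d` and `fractional_dilated_conv1d`, the linear interpolation weights, the zero padding, and the restriction to one dimension with an odd-length kernel are all assumptions made for illustration. The sketch relies only on the linearity of convolution, so interpolating the two integer-dilated kernels is equivalent to interpolating their outputs. How the fractional rate is derived from the aspect ratio is not shown here.

```python
import math
import numpy as np

def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1-D correlation with an integer dilation rate.

    Assumes an odd-length kernel centered on each output position,
    with zero padding at the borders (illustrative choices).
    """
    signal = np.asarray(signal, dtype=float)
    center = len(kernel) // 2
    pad = center * dilation
    padded = np.pad(signal, pad)
    out = np.zeros(len(signal))
    for k, w in enumerate(kernel):
        # Tap k reads the input at distance (k - center) * dilation.
        offset = pad + (k - center) * dilation
        out += w * padded[offset:offset + len(signal)]
    return out

def fractional_dilated_conv1d(signal, kernel, rate):
    """Linearly interpolate between the two nearest integer dilations.

    `rate` is a hypothetical fractional dilation rate >= 1.
    """
    lo, hi = math.floor(rate), math.ceil(rate)
    if lo == hi:
        return dilated_conv1d(signal, kernel, lo)
    w_hi = rate - lo  # weight of the larger dilation
    return ((1.0 - w_hi) * dilated_conv1d(signal, kernel, lo)
            + w_hi * dilated_conv1d(signal, kernel, hi))

impulse = [0, 0, 0, 1, 0, 0, 0]
response = fractional_dilated_conv1d(impulse, [1.0, 2.0, 3.0], rate=1.5)
```

With a rate of 1.5, the response to an impulse is the equal-weight average of the dilation-1 and dilation-2 responses, and no parameters are added beyond the original kernel.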

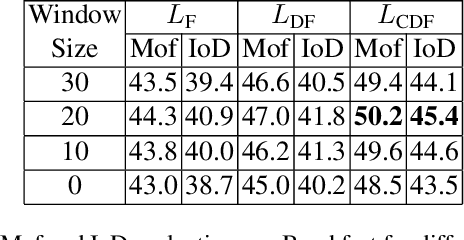

Weakly Supervised Energy-Based Learning for Action Segmentation

Sep 28, 2019

Abstract: This paper is about labeling video frames with action classes under weak supervision in training, where we have access to a temporal ordering of actions, but their start and end frames in training videos are unknown. Following prior work, we use an HMM grounded on a Gated Recurrent Unit (GRU) for frame labeling. Our key contribution is a new constrained discriminative forward loss (CDFL) that we use for training the HMM and GRU under weak supervision. While prior work typically estimates the loss on a single, inferred video segmentation, our CDFL discriminates between the energy of all valid and invalid frame labelings of a training video. A valid frame labeling satisfies the ground-truth temporal ordering of actions, whereas an invalid one violates the ground truth. We specify an efficient recursive algorithm for computing the CDFL in terms of the logadd function of the segmentation energy. Our evaluation on action segmentation and alignment gives superior results to those of the state of the art on the benchmark Breakfast Action, Hollywood Extended, and 50Salads datasets.
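For readers unfamiliar with the logadd function mentioned in the abstract above, the following is a minimal sketch of a numerically stable version, logadd(a, b) = log(exp(a) + exp(b)). It shows only the primitive, not the paper's recursion over segmentations; the helper name `logadd_all` and the choice to accumulate plain log-domain values (rather than any particular sign convention for energies) are assumptions.

```python
import math

def logadd(a, b):
    """Return log(exp(a) + exp(b)) without overflowing for large inputs."""
    if a == -math.inf:
        return b
    if b == -math.inf:
        return a
    hi, lo = max(a, b), min(a, b)
    # Factor out the larger term so the exponent is never positive.
    return hi + math.log1p(math.exp(lo - hi))

def logadd_all(values):
    """Fold logadd over a sequence; an empty sequence gives -inf (log of 0)."""
    total = -math.inf
    for v in values:
        total = logadd(total, v)
    return total

# exp(1000) overflows a float, but the log-domain sum is still computable.
large_sum = logadd(1000.0, 1000.0)
```

Accumulating sums this way lets a recursion combine the scores of many candidate labelings without ever leaving the log domain.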

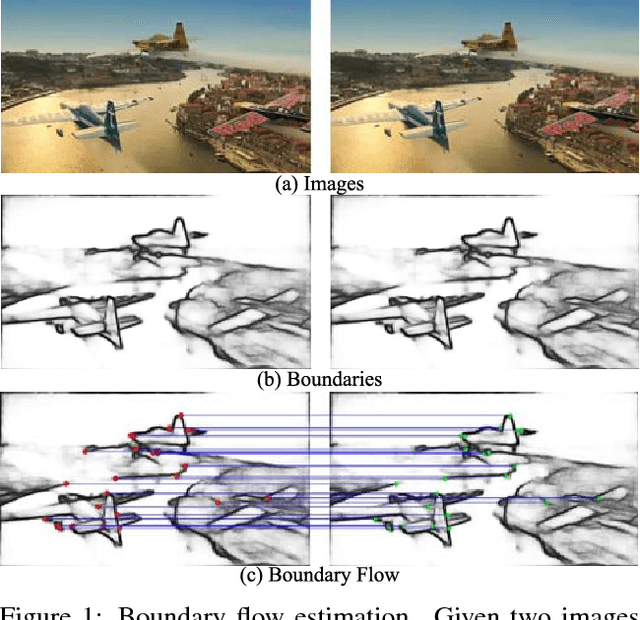

Boundary Flow: A Siamese Network that Predicts Boundary Motion without Training on Motion

Apr 08, 2018

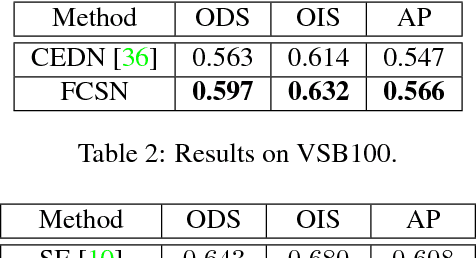

Abstract: Using deep learning, this paper addresses the problem of joint object boundary detection and boundary motion estimation in videos, which we name boundary flow estimation. Boundary flow is an important mid-level visual cue, as boundaries characterize objects' spatial extents, and the flow indicates objects' motions and interactions. Yet, most prior work on motion estimation has focused on dense object motion or feature points that may not necessarily reside on boundaries. For boundary flow estimation, we specify a new fully convolutional Siamese network (FCSN) that jointly estimates object-level boundaries in two consecutive frames. Boundary correspondences in the two frames are predicted by the same FCSN with a new, unconventional deconvolution approach. Finally, the boundary flow estimate is improved with edgelet-based filtering. Evaluation is conducted on three tasks: boundary detection in videos, boundary flow estimation, and optical flow estimation. On boundary detection, we achieve state-of-the-art performance on the benchmark VSB100 dataset. On boundary flow estimation, we present the first results on the Sintel training dataset. For optical flow estimation, we run the recent CPMFlow approach on input augmented with our boundary-flow matches, and achieve a significant performance improvement on the Sintel benchmark.
