Songyi Yang

BARS: Joint Search of Cell Topology and Layout for Accurate and Efficient Binary ARchitectures

Dec 21, 2020

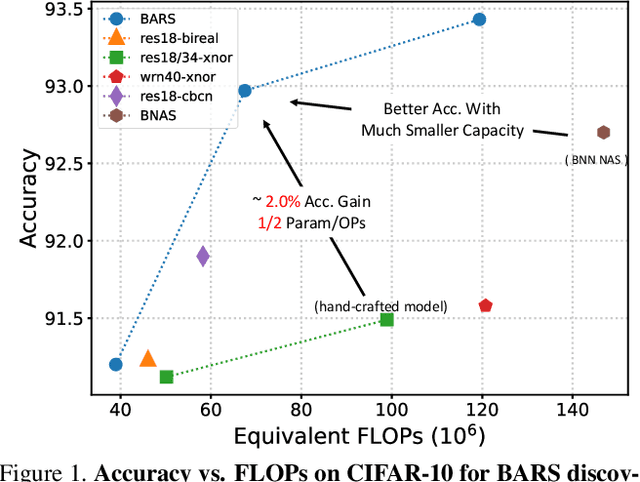

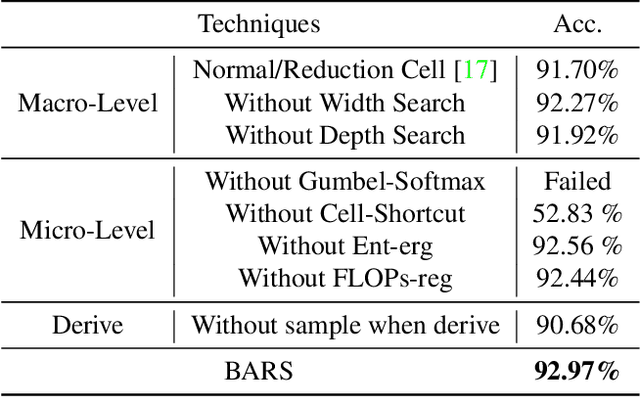

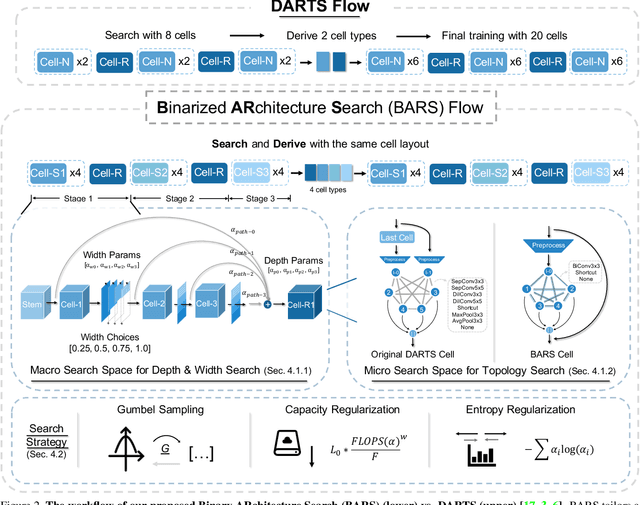

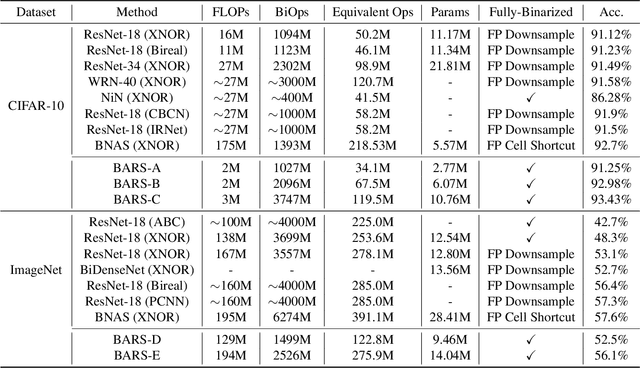

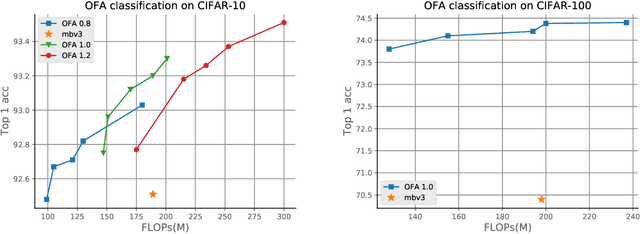

Abstract: Binary Neural Networks (BNNs) have received significant attention due to their promising efficiency. Currently, most BNN studies directly adopt widely used CNN architectures, which can be suboptimal for BNNs. This paper proposes a novel Binary ARchitecture Search (BARS) flow to discover superior binary architectures in a large design space. Specifically, we design a two-level (macro and micro) search space tailored for BNNs and apply differentiable neural architecture search (NAS) to explore this search space efficiently. The macro-level search space includes depth and width decisions, which are required to better balance model performance and capacity. We also modify the micro-level search space to strengthen the information flow in BNNs. A notable challenge of BNN architecture search is that binary operations exacerbate the "collapse" problem of differentiable NAS; we incorporate various search and derivation strategies to stabilize the search process. On CIFAR-10, BARS achieves 1.5% higher accuracy with $2/3$ of the binary Ops and $1/10$ of the floating-point Ops. On ImageNet, with similar resource consumption, the BARS-discovered architecture achieves 3% higher accuracy than hand-crafted architectures, while removing the full-precision downsample layer.

aw_nas: A Modularized and Extensible NAS framework

Nov 25, 2020

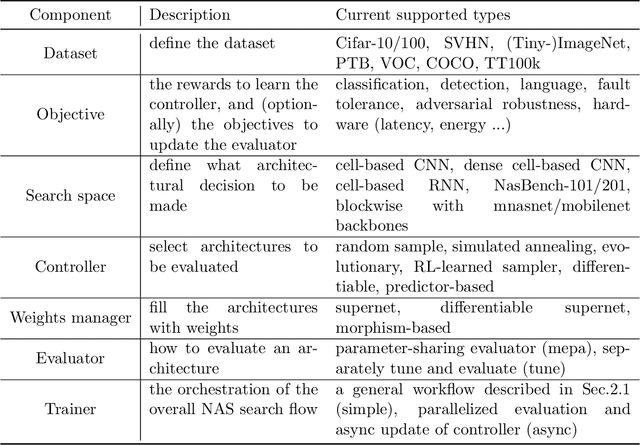

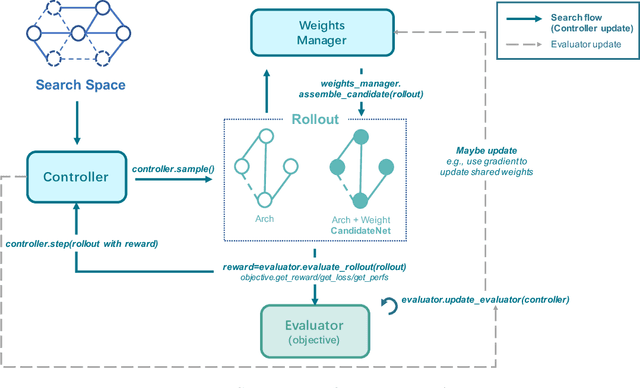

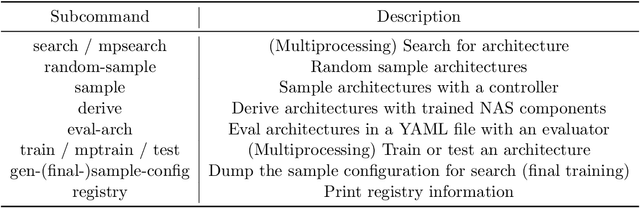

Abstract: Neural Architecture Search (NAS) has received extensive attention due to its capability to discover neural network architectures in an automated manner. aw_nas is an open-source Python framework that implements various NAS algorithms in a modularized manner. Currently, aw_nas can be used to reproduce the results of mainstream NAS algorithms of various types. Thanks to its modularized design, one can also easily experiment with different NAS algorithms for various applications with aw_nas (e.g., classification, detection, text modeling, fault tolerance, adversarial robustness, hardware efficiency, etc.). Code and documentation are available at https://github.com/walkerning/aw_nas.
