Patrick Helber

Vision Impulse GmbH and DFKI, Germany

Adaptive Fusion of Multi-view Remote Sensing data for Optimal Sub-field Crop Yield Prediction

Jan 22, 2024

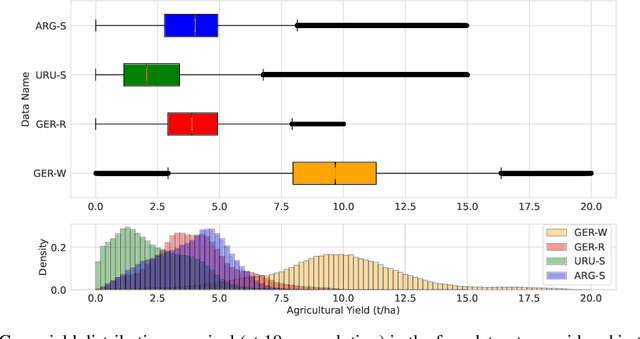

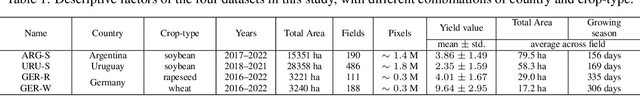

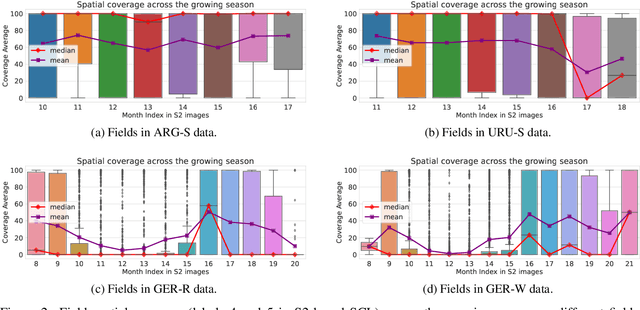

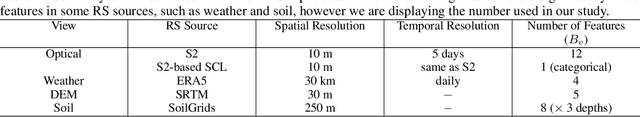

Abstract: Accurate crop yield prediction is of utmost importance for informed decision-making in agriculture, aiding farmers and industry stakeholders. However, this task is complex and depends on multiple factors, such as environmental conditions, soil properties, and management practices. Combining heterogeneous data views poses a fusion challenge, such as identifying the view-specific contribution to the predictive task. We present a novel multi-view learning approach to predict crop yield for different crops (soybean, wheat, rapeseed) and regions (Argentina, Uruguay, and Germany). Our multi-view input data includes multi-spectral optical images from Sentinel-2 satellites and weather data as dynamic features during the crop growing season, complemented by static features like soil properties and topographic information. To effectively fuse the data, we introduce a Multi-view Gated Fusion (MVGF) model, comprising dedicated view-encoders and a Gated Unit (GU) module. The view-encoders handle the heterogeneity of data sources with varying temporal resolutions by learning a view-specific representation. These representations are adaptively fused via a weighted sum. The fusion weights are computed for each sample by the GU using a concatenation of the view-representations. The MVGF model is trained at sub-field level with 10 m resolution pixels. Our evaluations show that the MVGF outperforms conventional models on the same task, achieving the best results by incorporating all the data sources, unlike the usual fusion results in the literature. For Argentina, the MVGF model achieves an R² value of 0.68 at sub-field yield prediction, while at field level evaluation (comparing field averages), it reaches around 0.80 across different countries. The GU module learned different weights based on the country and crop-type, aligning with the variable significance of each data source to the prediction task.
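The gated fusion step described in this abstract (per-sample weights computed from the concatenated view representations, then a weighted sum) can be illustrated with a minimal sketch. The abstract does not specify the gate's architecture or how the weights are normalized, so the single linear layer, the softmax normalization, and all names (`gated_fusion`, `gate_weights`, `gate_bias`) are assumptions made for illustration, not the authors' implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(view_reprs, gate_weights, gate_bias):
    """Fuse view representations with sample-specific weights.

    view_reprs:   list of V vectors of equal length D, one per view
                  (stand-ins for the outputs of the view-encoders).
    gate_weights: V x (V*D) matrix of a hypothetical linear gate.
    gate_bias:    vector of length V.
    Returns the fused vector (length D) and the V fusion weights.
    """
    # The gate sees the concatenation of all view representations.
    concat = [x for view in view_reprs for x in view]
    scores = [
        sum(w * x for w, x in zip(row, concat)) + b
        for row, b in zip(gate_weights, gate_bias)
    ]
    # Assumption: weights are normalized with a softmax so they sum to 1.
    alphas = softmax(scores)
    dim = len(view_reprs[0])
    # Weighted sum of the view representations, element by element.
    fused = [
        sum(a * view[d] for a, view in zip(alphas, view_reprs))
        for d in range(dim)
    ]
    return fused, alphas


if __name__ == "__main__":
    views = [[1.0, 2.0], [3.0, 4.0]]          # two views, D = 2
    weights = [[0.0] * 4, [0.0] * 4]          # untrained gate: all zeros
    fused, alphas = gated_fusion(views, weights, [0.0, 0.0])
    print(fused, alphas)
```

With an all-zero gate, every view receives the same weight and the fused vector is the plain average of the views; a trained gate would shift the weights per sample, which is what allows them to be inspected per country and crop type.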

Predicting Crop Yield With Machine Learning: An Extensive Analysis Of Input Modalities And Models On a Field and sub-field Level

Aug 17, 2023

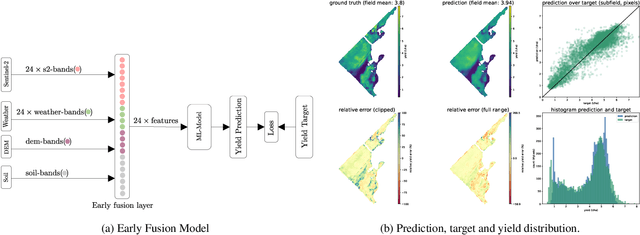

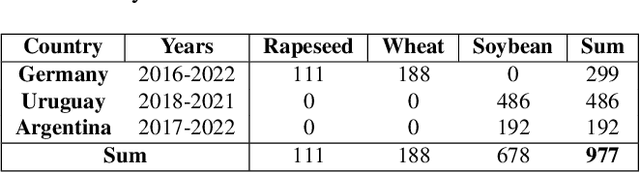

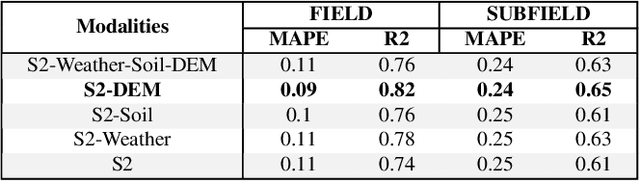

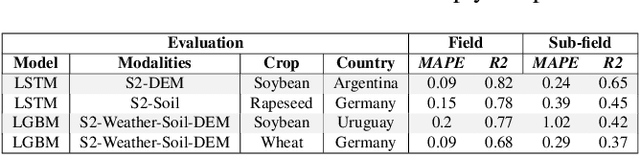

Abstract: We introduce a simple yet effective early fusion method for crop yield prediction that handles multiple input modalities with different temporal and spatial resolutions. We use high-resolution crop yield maps as ground truth data to train crop-agnostic and model-agnostic methods at the sub-field level. We use Sentinel-2 satellite imagery as the primary modality for input data with other complementary modalities, including weather, soil, and digital elevation model (DEM) data. The proposed method uses input modalities available with global coverage, making the framework globally scalable. We explicitly highlight the importance of input modalities for crop yield prediction and emphasize that the best-performing combination of input modalities depends on region, crop, and chosen model.
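Early fusion generally means combining modalities at the input level, before any model sees them. The abstract does not describe how the modalities are aligned, so the sketch below is only one plausible reading: it assumes the dynamic modalities have already been resampled to common time steps and that static features are repeated at every step. The function name `early_fuse` and its arguments are hypothetical.

```python
def early_fuse(s2_series, weather_series, static_features):
    """Concatenate modalities into one feature vector per time step.

    s2_series:       list of T band vectors for one pixel.
    weather_series:  list of T weather vectors, assumed to be already
                     resampled to the same T time steps.
    static_features: one vector (e.g. soil, elevation) that is repeated
                     at every time step.
    """
    if len(s2_series) != len(weather_series):
        raise ValueError("dynamic modalities must share the same time steps")
    return [
        list(s2) + list(weather) + list(static_features)
        for s2, weather in zip(s2_series, weather_series)
    ]


if __name__ == "__main__":
    fused = early_fuse(
        [[0.1, 0.2], [0.3, 0.4]],  # two time steps, two bands
        [[20.0], [22.0]],          # one weather variable per step
        [5.0],                     # one static feature
    )
    print(fused)
```

Because the output is a plain feature sequence, any downstream regressor can consume it, which is consistent with the model-agnostic framing in the abstract.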

RapidAI4EO: Mono- and Multi-temporal Deep Learning models for Updating the CORINE Land Cover Product

Oct 26, 2022

Abstract: In the remote sensing community, Land Use Land Cover (LULC) classification with satellite imagery is a main focus of current research activities. Accurate and appropriate LULC classification, however, continues to be a challenging task. In this paper, we evaluate the performance of multi-temporal (monthly time series) compared to mono-temporal (single time step) satellite images for multi-label classification using supervised learning on the RapidAI4EO dataset. As a first step, we trained our CNN model on images at a single time step for multi-label classification, i.e. mono-temporal. We incorporated time-series images using an LSTM model to assess whether or not multi-temporal signals from satellites improve CLC classification. The results demonstrate an improvement of approximately 0.89% in classifying satellite imagery on 15 classes using a multi-temporal approach on monthly time series images compared to the mono-temporal approach. Using features from multi-temporal or mono-temporal images, this work is a step towards an efficient change detection and land monitoring approach.

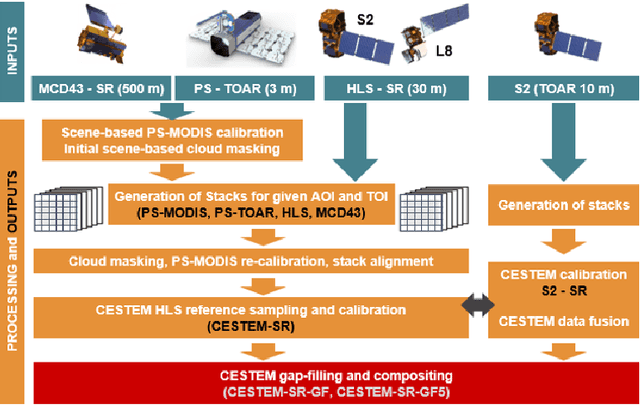

RapidAI4EO: A Corpus for Higher Spatial and Temporal Reasoning

Oct 05, 2021

Abstract: Under the sponsorship of the European Union Horizon 2020 program, RapidAI4EO will establish the foundations for the next generation of Copernicus Land Monitoring Service (CLMS) products. The project aims to provide intensified monitoring of Land Use (LU), Land Cover (LC), and LU change at a much higher level of detail and temporal cadence than is possible today. The focus is on disentangling phenology from structural change and on providing critical training data to drive advancement in the Copernicus community and ecosystem well beyond the lifetime of this project. To this end, we are creating the densest spatiotemporal training sets ever by fusing open satellite data with Planet imagery at as many as 500,000 patch locations over Europe and delivering high resolution daily time series at all locations. We plan to open source these datasets for the benefit of the entire remote sensing community.

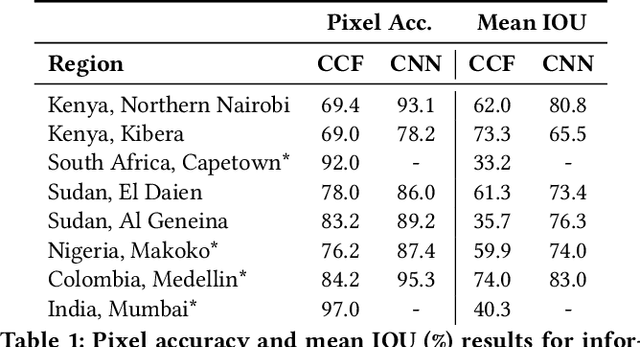

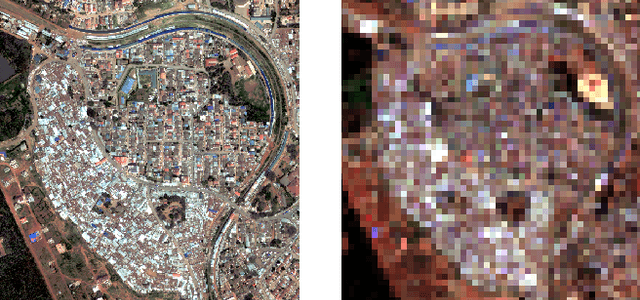

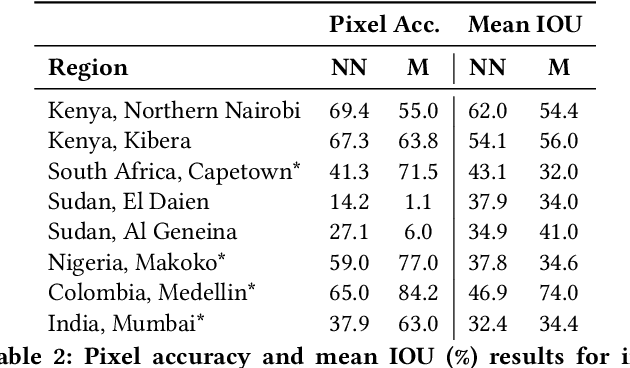

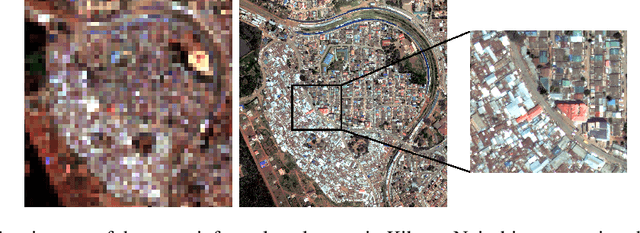

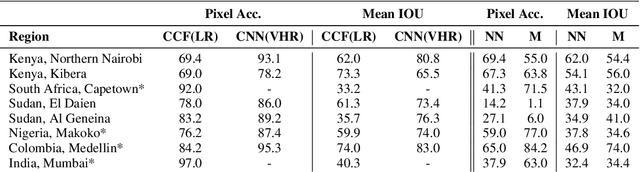

Mapping Informal Settlements in Developing Countries using Machine Learning and Low Resolution Multi-spectral Data

Jan 03, 2019

Abstract: Informal settlements are home to the most socially and economically vulnerable people on the planet. In order to deliver effective economic and social aid, non-government organizations (NGOs), such as the United Nations Children's Fund (UNICEF), require detailed maps of the locations of informal settlements. However, data regarding informal and formal settlements is primarily unavailable and, if available, is often incomplete. This is due, in part, to the cost and complexity of gathering data on a large scale. An additional complication is that the definition of an informal settlement is also very broad, which makes it a non-trivial task to collect data. This also makes it challenging to teach a machine what to look for. Due to these challenges, we provide three contributions in this work. 1) A brand new machine learning dataset, purposely developed for informal settlement detection, that contains a series of low and very-high resolution imagery, with accompanying ground truth annotations marking the locations of known informal settlements. 2) We demonstrate that it is possible to detect informal settlements using freely available low-resolution (LR) data, in contrast to previous studies that use very-high resolution (VHR) satellite and aerial imagery, which is typically cost-prohibitive for NGOs. 3) We demonstrate two effective classification schemes on our curated dataset, one that is cost-efficient for NGOs and another that is cost-prohibitive for NGOs, but has additional utility. We integrate these schemes into a semi-automated pipeline that converts either an LR or VHR satellite image into a binary map that encodes the locations of informal settlements. We evaluate and compare our methods.

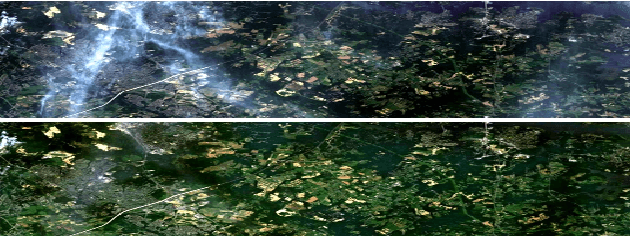

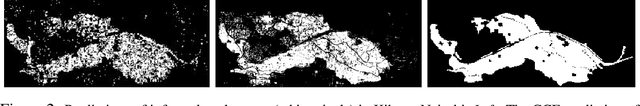

Mapping Informal Settlements in Developing Countries with Multi-resolution, Multi-spectral Data

Nov 30, 2018

Abstract: Detecting and mapping informal settlements encompasses several of the United Nations sustainable development goals. This is because informal settlements are home to the most socially and economically vulnerable people on the planet. Thus, understanding where these settlements are is of paramount importance to both government and non-government organizations (NGOs), such as the United Nations Children's Fund (UNICEF), who can use this information to deliver effective social and economic aid. We propose two effective methods for detecting and mapping the locations of informal settlements. One uses only low-resolution (LR), freely available, Sentinel-2 multispectral satellite imagery with noisy annotations, whilst the other is a deep learning approach that uses only costly very-high-resolution (VHR) satellite imagery. To our knowledge, we are the first to map informal settlements successfully with low-resolution satellite imagery. We extensively evaluate and compare the proposed methods. Please find additional material at https://frontierdevelopmentlab.github.io/informal-settlements/.

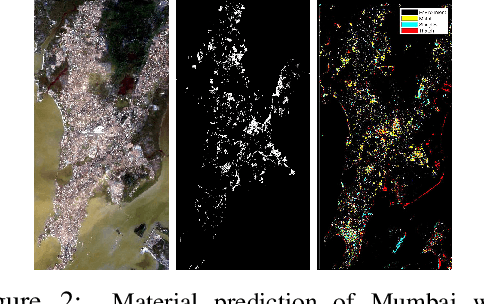

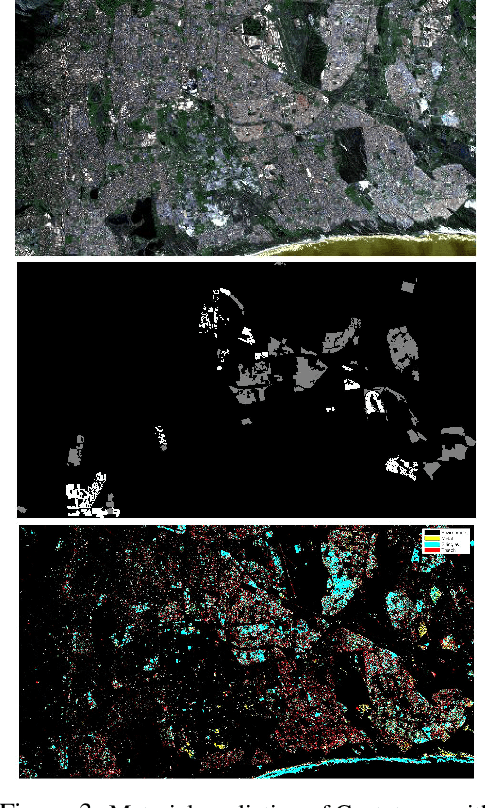

Generating Material Maps to Map Informal Settlements

Nov 30, 2018

Abstract: Detecting and mapping informal settlements encompasses several of the United Nations sustainable development goals. This is because informal settlements are home to the most socially and economically vulnerable people on the planet. Thus, understanding where these settlements are is of paramount importance to both government and non-government organizations (NGOs), such as the United Nations Children's Fund (UNICEF), who can use this information to deliver effective social and economic aid. We propose a method that detects and maps the locations of informal settlements using only freely available Sentinel-2 low-resolution satellite spectral data and socio-economic data. This is in contrast to previous studies that only use costly very-high resolution (VHR) satellite and aerial imagery. We show how we can detect informal settlements by combining both domain knowledge and machine learning techniques to build a classifier that looks for known roofing materials used in informal settlements. Please find additional material at https://frontierdevelopmentlab.github.io/informal-settlements/.

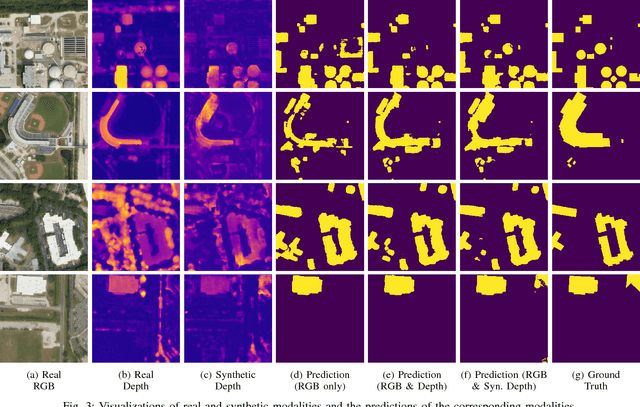

Overcoming Missing and Incomplete Modalities with Generative Adversarial Networks for Building Footprint Segmentation

Aug 09, 2018

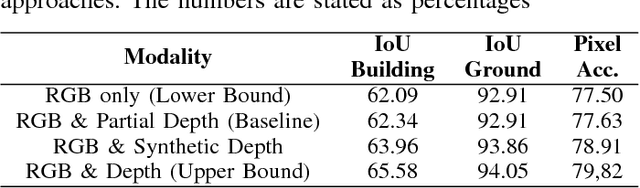

Abstract: The integration of information acquired with different modalities, spatial resolutions and spectral bands has been shown to improve predictive accuracies. Data fusion is therefore one of the key challenges in remote sensing. Most prior work focusing on multi-modal fusion assumes that modalities are always available during inference. This assumption limits the applications of multi-modal models since in practice the data collection process is likely to generate data with missing, incomplete or corrupted modalities. In this paper, we show that Generative Adversarial Networks can be effectively used to overcome the problems that arise when modalities are missing or incomplete. Focusing on semantic segmentation of building footprints with missing modalities, our approach achieves an improvement of about 2% on the Intersection over Union (IoU) against the same network that relies only on the available modality.
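For readers unfamiliar with the metric reported in this abstract, the following is a minimal sketch of Intersection over Union for binary segmentation masks. It shows the standard definition only; the paper's exact evaluation protocol (thresholding, averaging over images) is not stated in the abstract. The function name `iou` and the choice to return 1.0 when both masks are empty are assumptions.

```python
def iou(pred_mask, target_mask):
    """Intersection over Union for two binary masks of equal shape.

    Masks are nested lists of 0/1 values (rows of pixels).
    """
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred_mask, target_mask):
        for p, t in zip(pred_row, target_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention assumed here: two empty masks agree perfectly.
    if union == 0:
        return 1.0
    return intersection / union


if __name__ == "__main__":
    pred = [[1, 1], [0, 0]]
    target = [[1, 0], [1, 0]]
    print(iou(pred, target))  # one shared pixel out of three covered pixels
```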

Multi-Task Learning for Segmentation of Building Footprints with Deep Neural Networks

Sep 18, 2017

Abstract: The increased availability of high resolution satellite imagery allows us to sense very detailed structures on the surface of our planet. Access to such information opens up new directions in the analysis of remote sensing imagery. However, at the same time this raises a set of new challenges for existing pixel-based prediction methods, such as semantic segmentation approaches. While deep neural networks have achieved significant advances in the semantic segmentation of high resolution images in the past, most of the existing approaches tend to produce predictions with poor boundaries. In this paper, we address the problem of preserving semantic segmentation boundaries in high resolution satellite imagery by introducing a new cascaded multi-task loss. We evaluate our approach on the Inria Aerial Image Labeling Dataset, which contains large-scale and high resolution images. Our results show that we are able to outperform state-of-the-art methods by 8.3% without any additional post-processing step.
