Octavio Villarreal

ViTAL: Vision-Based Terrain-Aware Locomotion for Legged Robots

Dec 02, 2022

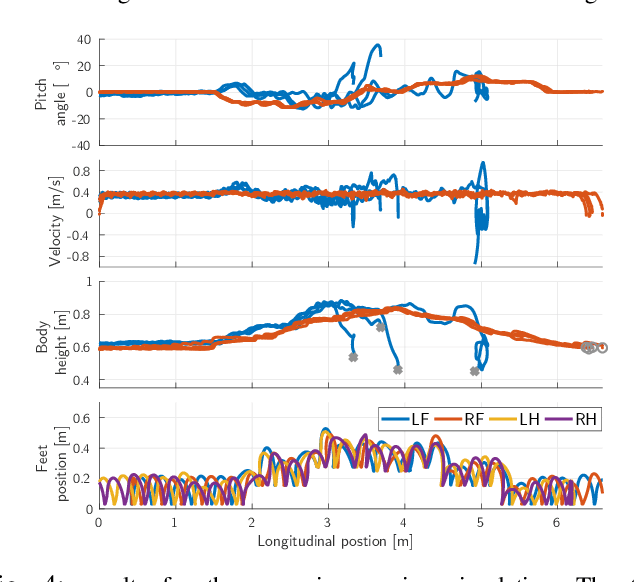

Abstract: This work is on vision-based planning strategies for legged robots that separate locomotion planning into foothold selection and pose adaptation. Current pose adaptation strategies optimize the robot's body pose relative to given footholds. If these footholds are not reached, the robot may end up in a state with no reachable safe footholds. Therefore, we present a Vision-Based Terrain-Aware Locomotion (ViTAL) strategy that consists of novel pose adaptation and foothold selection algorithms. ViTAL introduces a different paradigm in pose adaptation: rather than optimizing the body pose relative to given footholds, it finds the body pose that maximizes the chances of the legs reaching safe footholds. ViTAL plans footholds and poses based on skills that characterize the robot's capabilities and its terrain-awareness. We use the 90 kg HyQ and 140 kg HyQReal quadruped robots to validate ViTAL, and show that they are able to climb various obstacles including stairs, gaps, and rough terrains at different speeds and gaits. We compare ViTAL with a baseline strategy that selects the robot pose based on given selected footholds, and show that ViTAL outperforms the baseline.

Foothold Evaluation Criterion for Dynamic Transition Feasibility for Quadruped Robots

Mar 08, 2022

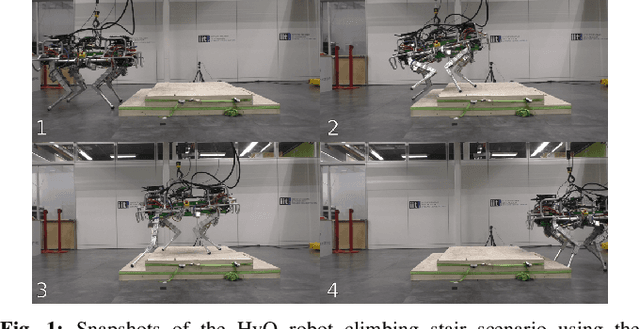

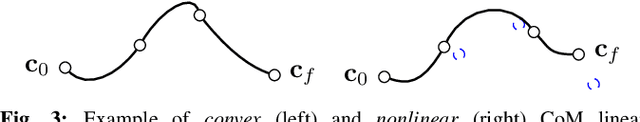

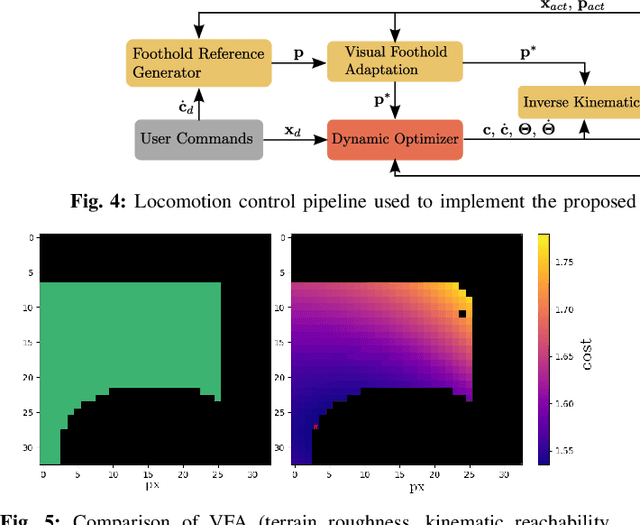

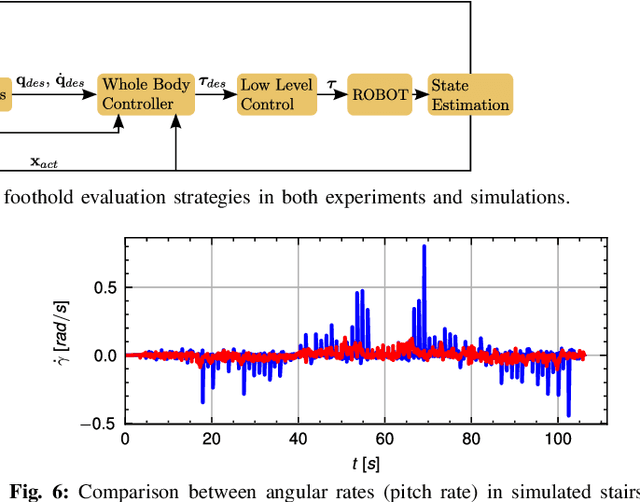

Abstract: To traverse complex scenarios reliably, a legged robot needs to move its base aided by the ground reaction forces, which can only be generated by the legs that are momentarily in contact with the ground. A proper selection of footholds is crucial for maintaining balance. In this paper, we propose a foothold evaluation criterion that considers the transition feasibility for both linear and angular dynamics to overcome complex scenarios. We devise convex and nonlinear formulations as a direct extension of the Continuous Convex Resolution of Centroidal Dynamic Trajectories (C-CROC) in a receding-horizon fashion to grant dynamic feasibility for future behaviours. The criterion is integrated with a Vision-based Foothold Adaptation (VFA) strategy that takes into account the robot kinematics, leg collisions and terrain morphology. We verify the validity of the selected footholds and the generated trajectories in simulation and experiments with the 90 kg quadruped robot HyQ.

Mobility-enhanced MPC for Legged Locomotion on Rough Terrain

May 12, 2021

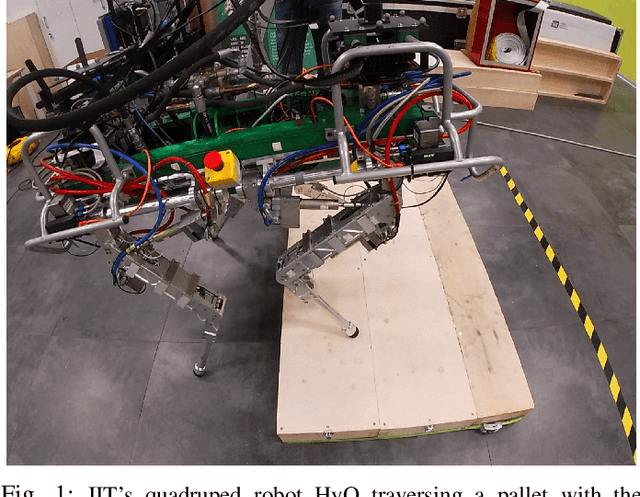

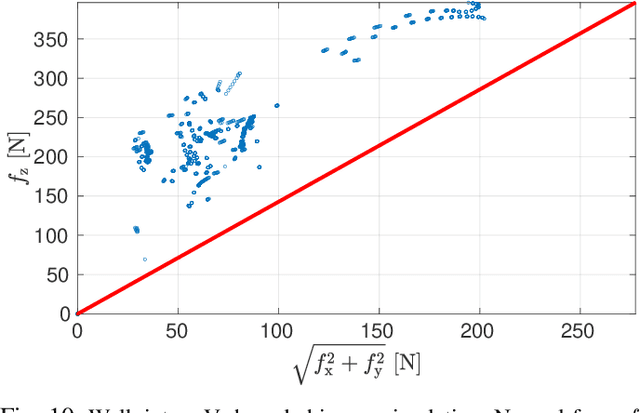

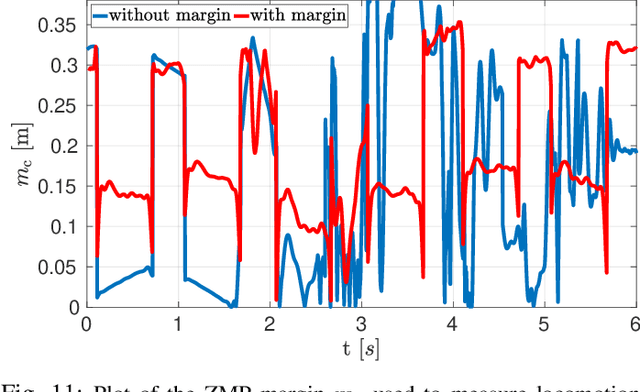

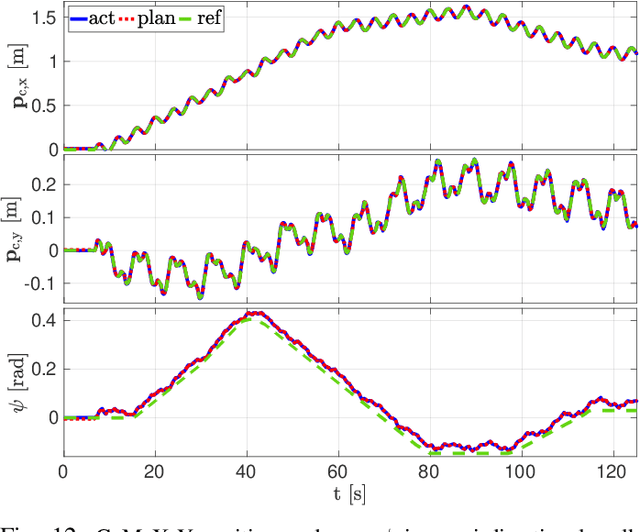

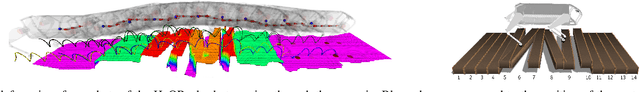

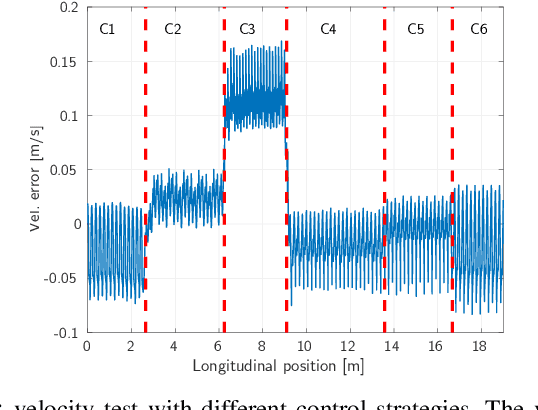

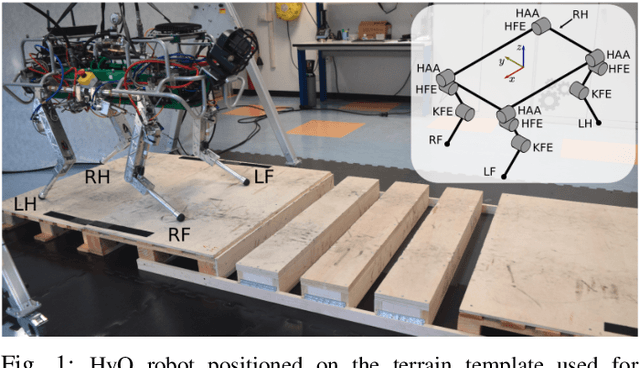

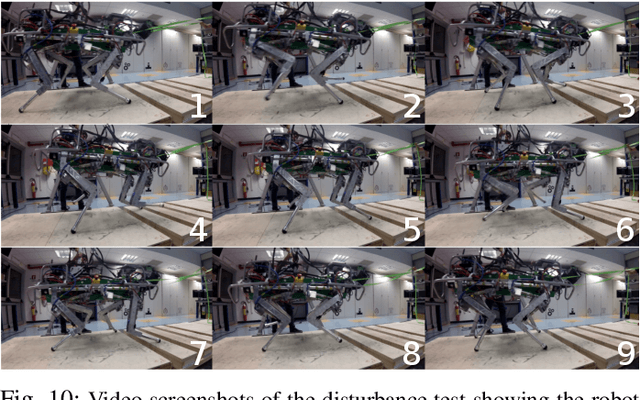

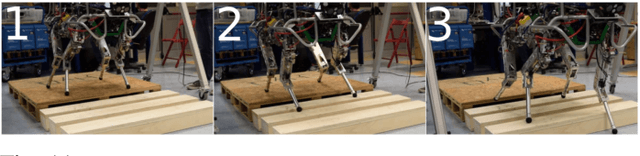

Abstract: Re-planning in legged locomotion is crucial to track a given set-point while adapting to the terrain and rejecting external disturbances. In this work, we propose a real-time Nonlinear Model Predictive Control (NMPC) tailored to a legged robot for achieving dynamic locomotion on a wide variety of terrains. We introduce a mobility-based criterion to define an NMPC cost that enhances the locomotion of quadruped robots while maximizing leg mobility and staying far from kinematic limits. Our NMPC is based on the real-time iteration scheme that allows us to re-plan online at $25 \, \mathrm{Hz}$ with a time horizon of $2$ seconds. We use the single rigid body dynamic model defined in the center of mass frame, which increases computational efficiency. In simulations, the NMPC is tested to traverse a set of pallets of different sizes, to walk into a V-shaped chimney, and to locomote over rough terrain. We demonstrate the effectiveness of our NMPC with the mobility feature, which allowed IIT's $87.4 \,\mathrm{kg}$ quadruped robot HyQ to achieve an omni-directional walk on flat terrain, to traverse a static pallet, and to adapt to a repositioned pallet during a walk in real experiments.

MPC-based Controller with Terrain Insight for Dynamic Legged Locomotion

Sep 30, 2019

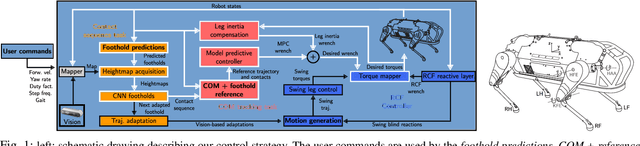

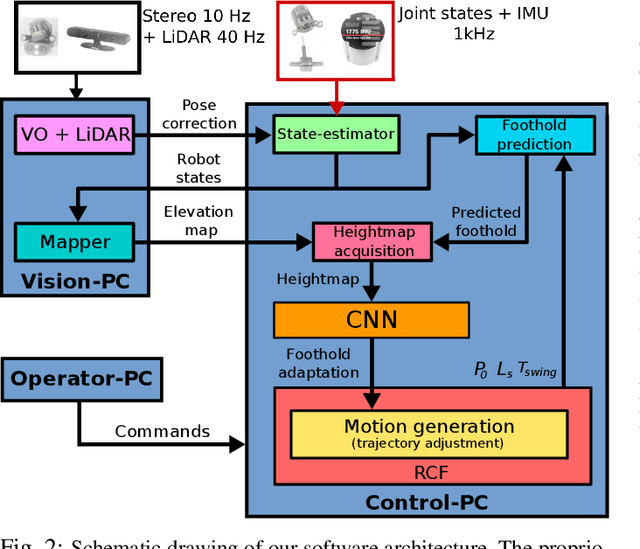

Abstract: We present a novel control strategy for dynamic legged locomotion in complex scenarios that considers information about the morphology of the terrain in contexts when only on-board mapping and computation are available. The strategy is built on top of two main elements: first, a contact sequence task that uses a convolutional neural network to evaluate the terrain quickly and continuously and provide safe foothold locations; second, a model predictive controller that considers the foothold locations given by the contact sequence task to optimize target ground reaction forces. We assess the performance of our strategy through simulations of the hydraulically actuated quadruped robot HyQReal traversing rough terrain under realistic on-board sensing and computing conditions.

Fast and Continuous Foothold Adaptation for Dynamic Locomotion through CNNs

Feb 15, 2019

Abstract: Legged robots can outperform wheeled machines for most navigation tasks across unknown and rough terrains. For such tasks, visual feedback is a fundamental asset to provide robots with terrain-awareness. However, robust dynamic locomotion on difficult terrains with real-time performance guarantees remains a challenge. We present here a real-time, dynamic foothold adaptation strategy based on visual feedback. Our method adjusts the landing position of the feet in a fully reactive manner, using only on-board computers and sensors. The correction is computed and executed continuously along the swing phase trajectory of each leg. To efficiently adapt the landing position, we implement a self-supervised foothold classifier based on a Convolutional Neural Network (CNN). Our method computes up to 200 times faster than the full-blown heuristics. Our goal is to react to visual stimuli from the environment, bridging the gap between blind reactive locomotion and purely vision-based planning strategies. We assess the performance of our method on the dynamic quadruped robot HyQ, executing static and dynamic gaits (at speeds up to 0.5 m/s) in both simulated and real scenarios; the benefit of safe foothold adaptation is clearly demonstrated by the overall robot behavior.
