Nicholas Asher

IRIT-MELODI, CNRS

Minimal Clips, Maximum Salience: Long Video Summarization via Key Moment Extraction

Dec 12, 2025

Abstract: Vision-Language Models (VLMs) can process increasingly long videos. Yet important visual information is easily lost across the full context and missed by VLMs. It is also important to design tools that enable cost-effective analysis of lengthy video content. In this paper, we propose a clip selection method that targets key video moments to be included in a multimodal summary. We divide the video into short clips and generate compact visual descriptions of each using a lightweight video captioning model. These are then passed to a large language model (LLM), which selects the K clips containing the most relevant visual information for a multimodal summary. We evaluate our approach on reference clips for the task, automatically derived from full human-annotated screenplays and summaries in the MovieSum dataset. We further show that these reference clips (less than 6% of the movie) are sufficient to build a complete multimodal summary of the movies in MovieSum. Using our clip selection method, we achieve a summarization performance close to that of these reference clips while capturing substantially more relevant video information than random clip selection. Importantly, we maintain low computational cost by relying on a lightweight captioning model.

TELL-TALE: Task Efficient LLMs with Task Aware Layer Elimination

Oct 26, 2025

Abstract: In this paper we introduce TALE (Task-Aware Layer Elimination), an inference-time algorithm that prunes entire transformer layers in an LLM by directly optimizing task-specific validation performance. We evaluate TALE on 9 tasks and 5 models, including LLaMA 3.1 8B, Qwen 2.5 7B, Qwen 2.5 0.5B, Mistral 7B, and Lucie 7B, under both zero-shot and few-shot settings. Unlike prior approaches, TALE requires no retraining and consistently improves accuracy while reducing computational cost across all benchmarks. Furthermore, applying TALE during fine-tuning leads to additional performance gains. Finally, TALE provides flexible user control over trade-offs between accuracy and efficiency. Mutual information analysis shows that certain layers act as bottlenecks, degrading task-relevant representations. TALE's selective layer removal remedies this problem, producing smaller, faster, and more accurate models that are also faster to fine-tune while offering new insights into transformer interpretability.

Improving in-context learning with a better scoring function

Aug 20, 2025

Abstract: Large language models (LLMs) exhibit a remarkable capacity to learn by analogy, known as in-context learning (ICL). However, recent studies have revealed limitations in this ability. In this paper, we examine these limitations on tasks involving first-order quantifiers such as "all" and "some", as well as on ICL with linear functions. We identify Softmax, the scoring function in the attention mechanism, as a contributing factor to these constraints. To address this, we propose scaled signed averaging (SSA), a novel alternative to Softmax. Empirical results show that SSA dramatically improves performance on our target tasks. Furthermore, we evaluate both encoder-only and decoder-only transformer models with SSA, demonstrating that they match or exceed their Softmax-based counterparts across a variety of linguistic probing tasks.

KisMATH: Do LLMs Have Knowledge of Implicit Structures in Mathematical Reasoning?

Jul 15, 2025

Abstract: Chain-of-thought traces have been shown to improve performance of large language models in a plethora of reasoning tasks, yet there is no consensus on the mechanism through which this performance boost is achieved. To shed more light on this, we introduce Causal CoT Graphs (CCGs), which are directed acyclic graphs automatically extracted from reasoning traces that model fine-grained causal dependencies in the language model output. A collection of 1671 mathematical reasoning problems from MATH500, GSM8K and AIME, and their associated CCGs are compiled into our dataset, KisMATH. Our detailed empirical analysis with 15 open-weight LLMs shows that (i) reasoning nodes in the CCG are mediators for the final answer, a condition necessary for reasoning; and (ii) LLMs emphasise reasoning paths given by the CCG, indicating that models internally realise structures akin to our graphs. KisMATH enables controlled, graph-aligned interventions and opens up avenues for further investigation into the role of chain-of-thought in LLM reasoning.

Integrating Video and Text: A Balanced Approach to Multimodal Summary Generation and Evaluation

May 10, 2025

Abstract: Vision-Language Models (VLMs) often struggle to balance visual and textual information when summarizing complex multimodal inputs, such as entire TV show episodes. In this paper, we propose a zero-shot video-to-text summarization approach that builds its own screenplay representation of an episode, effectively integrating key video moments, dialogue, and character information into a unified document. Unlike previous approaches, we simultaneously generate screenplays and name the characters in a zero-shot setting, using only the audio, video, and transcripts as input. Additionally, we highlight that existing summarization metrics can fail to assess the multimodal content in summaries. To address this, we introduce MFactSum, a multimodal metric that evaluates summaries with respect to both vision and text modalities. Using MFactSum, we evaluate our screenplay summaries on the SummScreen3D dataset, demonstrating superiority over state-of-the-art VLMs such as Gemini 1.5 by generating summaries containing 20% more relevant visual information while requiring 75% less of the video as input.

DIMSUM: Discourse in Mathematical Reasoning as a Supervision Module

Mar 07, 2025

Abstract: We look at reasoning on GSM8k, a dataset of short texts presenting primary school math problems. We find, with Mirzadeh et al. (2024), that current LLM progress on the dataset may not be explained by better reasoning but by exposure to a broader pretraining data distribution. We then introduce a novel information source for helping models with less data or inferior training reason better: discourse structure. We show that discourse structure improves performance for models like Llama2 13b by up to 160%. Even for models that have most likely memorized the dataset, adding discourse structural information to the model still improves predictions and dramatically improves large model performance on out-of-distribution examples.

Two in context learning tasks with complex functions

Feb 05, 2025

Abstract: We examine two in-context learning (ICL) tasks with mathematical functions in several train and test settings for transformer models. Our study generalizes work on linear functions by showing that small transformers, even models with attention layers only, can approximate arbitrary polynomial functions and hence continuous functions under certain conditions. Our models can also approximate previously unseen classes of polynomial functions, as well as the zeros of complex functions. Our models perform far better on this task than LLMs like GPT4 and involve complex reasoning when provided with suitable training data and methods. Our models also have important limitations; they fail to generalize outside of training distributions and so don't learn class forms of functions. We explain why this is so.

Re-examining learning linear functions in context

Nov 18, 2024

Abstract: In-context learning (ICL) is an attractive method of solving a wide range of problems. Inspired by Garg et al. (2022), we look closely at ICL in a variety of train and test settings for several transformer models of different sizes trained from scratch. Our study complements prior work by pointing out several systematic failures of these models to generalize to data not in the training distribution, thereby showing some limitations of ICL. We find that models adopt a strategy for this task that is very different from standard solutions.

On Explaining with Attention Matrices

Oct 24, 2024

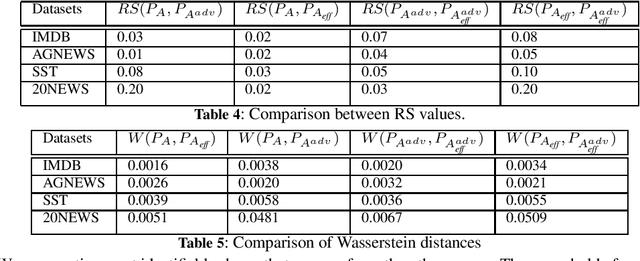

Abstract: This paper explores the much-discussed possible explanatory link between attention weights (AW) in transformer models and predicted output. Contrary to intuition and early research on attention, more recent research has provided formal arguments and empirical evidence that AW are not explanatorily relevant. We show that the formal arguments are incorrect. We introduce and effectively compute efficient attention, which isolates the effective components of attention matrices in tasks and models in which AW play an explanatory role. We show that efficient attention has a causal role (provides minimally necessary and sufficient conditions) for predicting model output in NLP tasks requiring contextual information, and we show, contrary to [7], that efficient attention matrices are probability distributions and are effectively calculable. Thus, they should play an important part in the explanation of attention-based model behavior. We offer empirical experiments in support of our method illustrating various properties of efficient attention with various metrics on four datasets.

Learning Semantic Structure through First-Order-Logic Translation

Oct 04, 2024

Abstract: In this paper, we study whether transformer-based language models can extract predicate argument structure from simple sentences. We first show that language models sometimes confuse which predicates apply to which objects. To mitigate this, we explore two tasks, question answering (Q/A) and first-order logic (FOL) translation, and two regimes, prompting and finetuning. In FOL translation, we finetune several large language models on synthetic datasets designed to gauge their generalization abilities. For Q/A, we finetune encoder models like BERT and RoBERTa and use prompting for LLMs. The results show that FOL translation for LLMs is better suited to learn predicate argument structure.
