Natasha Lepore

MRI-to-CT Synthesis With Cranial Suture Segmentations Using A Variational Autoencoder Framework

Dec 29, 2025

Abstract: Quantifying normative pediatric cranial development and suture ossification is crucial for diagnosing and treating growth-related cephalic disorders. Computed tomography (CT) is widely used to evaluate cranial and sutural deformities; however, its ionizing radiation is contraindicated in children without significant abnormalities. Magnetic resonance imaging (MRI) offers radiation-free scans with superior soft-tissue contrast, but unlike CT, MRI cannot elucidate cranial sutures, estimate skull bone density, or assess cranial vault growth. This study proposes a deep learning-driven pipeline for transforming T1-weighted MRIs of children aged 0.2 to 2 years into synthetic CTs (sCTs), predicting detailed cranial bone segmentation, generating suture probability heatmaps, and deriving direct suture segmentation from the heatmaps. With our in-house pediatric data, sCTs achieved 99% structural similarity and a Fréchet inception distance of 1.01 relative to real CTs. Skull segmentation attained an average Dice coefficient of 85% across seven cranial bones, and sutures achieved 80% Dice. Equivalence of skull and suture segmentation between sCTs and real CTs was confirmed using two one-sided tests (TOST, p < 0.05). To our knowledge, this is the first pediatric cranial CT synthesis framework to enable suture segmentation on sCTs derived from MRI, despite MRI's limited depiction of bone and sutures. By combining robust, domain-specific variational autoencoders, our method generates perceptually indistinguishable cranial sCTs from routine pediatric MRIs, bridging critical gaps in non-invasive cranial evaluation.
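The segmentation results above are reported as Dice coefficients averaged over several cranial bones. As a minimal sketch of how such a score is commonly computed (not the authors' evaluation code), the example below assumes label maps flattened into plain Python lists; the function names `dice_coefficient` and `mean_dice` are hypothetical.

```python
def dice_coefficient(pred, target):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(target):
        raise ValueError("masks must contain the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if total == 0:
        # Both masks are empty; treating this as perfect agreement is a convention.
        return 1.0
    return 2.0 * intersection / total


def mean_dice(pred_labels, target_labels, labels):
    """Average the per-label Dice over the given label ids (e.g. one id per bone)."""
    scores = []
    for label in labels:
        pred_mask = [int(v == label) for v in pred_labels]
        target_mask = [int(v == label) for v in target_labels]
        scores.append(dice_coefficient(pred_mask, target_mask))
    return sum(scores) / len(scores)
```

For example, `mean_dice(pred, truth, labels=range(1, 8))` would average over seven label ids, under the assumption that each bone is encoded as one integer label.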

RetinalGPT: A Retinal Clinical Preference Conversational Assistant Powered by Large Vision-Language Models

Mar 06, 2025

Abstract: Recently, Multimodal Large Language Models (MLLMs) have gained significant attention for their remarkable ability to process and analyze non-textual data, such as images, videos, and audio. Notably, several adaptations of general-domain MLLMs to the medical field have been explored, including LLaVA-Med. However, these medical adaptations remain insufficiently advanced in understanding and interpreting retinal images. In contrast, medical experts emphasize the importance of quantitative analyses for disease detection and interpretation. This underscores a gap between general-domain and medical-domain MLLMs: while general-domain MLLMs excel in broad applications, they lack the specialized knowledge necessary for precise diagnostic and interpretative tasks in the medical field. To address these challenges, we introduce RetinalGPT, a multimodal conversational assistant for clinically preferred quantitative analysis of retinal images. Specifically, we achieve this by compiling a large retinal image dataset, developing a novel data pipeline, and employing customized visual instruction tuning to both enhance retinal analysis and enrich medical knowledge. In particular, RetinalGPT outperforms general-domain MLLMs by a large margin in the diagnosis of retinal diseases on 8 benchmark retinal datasets. Beyond disease diagnosis, RetinalGPT features quantitative analyses and lesion localization, representing a pioneering step in leveraging LLMs for an interpretable and end-to-end clinical research framework. The code is available at https://github.com/Retinal-Research/RetinalGPT

NNMobile-Net: Rethinking CNN Design for Deep Learning-Based Retinopathy Research

Jun 02, 2023

Abstract: Retinal diseases (RD) are the leading cause of severe vision loss or blindness. Deep learning-based automated tools play an indispensable role in assisting clinicians in diagnosing and monitoring RD in modern medicine. Recently, an increasing number of works in this field have taken advantage of Vision Transformers to achieve state-of-the-art performance, at the cost of more parameters and higher model complexity than Convolutional Neural Networks (CNNs). Such sophisticated and task-specific model designs, however, are prone to overfitting, which hinders their generalizability. In this work, we argue that a channel-aware and well-calibrated CNN model may overcome these problems. To this end, we empirically studied CNN's macro and micro designs and its training strategies. Based on the investigation, we proposed a no-new-MobileNet (nn-MobileNet) developed for retinal diseases. In our experiments, our generic, simple and efficient model outperformed most current state-of-the-art methods on four public datasets for multiple tasks, including diabetic retinopathy grading, fundus multi-disease detection, and diabetic macular edema classification. Our work may provide novel insights into deep learning architecture design and advance retinopathy research.

Improved Prediction of Beta-Amyloid and Tau Burden Using Hippocampal Surface Multivariate Morphometry Statistics and Sparse Coding

Oct 28, 2022

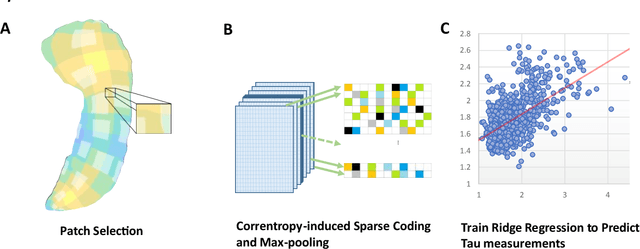

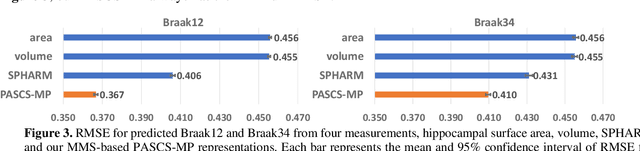

Abstract: Background: Beta-amyloid (A$\beta$) plaques and tau protein tangles in the brain are the defining 'A' and 'T' hallmarks of Alzheimer's disease (AD), and together with structural atrophy detectable on brain magnetic resonance imaging (MRI) scans as one of the neurodegenerative ('N') biomarkers comprise the "ATN framework" of AD. Current methods to detect A$\beta$/tau pathology include cerebrospinal fluid (CSF; invasive), positron emission tomography (PET; costly and not widely available), and blood-based biomarkers (BBBM; promising but mainly still in development). Objective: To develop a non-invasive and widely available structural MRI-based framework to quantitatively predict the amyloid and tau measurements. Methods: With MRI-based hippocampal multivariate morphometry statistics (MMS) features, we apply our Patch Analysis-based Surface Correntropy-induced Sparse coding and max-pooling (PASCS-MP) method combined with the ridge regression model to individual amyloid/tau measure prediction. Results: We evaluate our framework on amyloid PET/MRI and tau PET/MRI datasets from the Alzheimer's Disease Neuroimaging Initiative (ADNI). Each subject has one pair consisting of a PET image and MRI scan, collected at about the same time. Experimental results suggest that amyloid/tau measurements predicted with our PASCS-MP representations are closer to the real values than the measures derived from other approaches, such as hippocampal surface area, volume, and shape morphometry features based on spherical harmonics (SPHARM). Conclusion: The MMS-based PASCS-MP is an efficient tool that can bridge hippocampal atrophy with amyloid and tau pathology and thus help assess disease burden, progression, and treatment effects.
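The final prediction step above uses a ridge regression model on per-subject feature vectors. The sketch below illustrates only that generic step, solving the closed-form system (XᵀX + αI)w = Xᵀy in pure Python; it does not reproduce the PASCS-MP sparse coding or max-pooling, omits an intercept term, and assumes α > 0 so the system is well-conditioned. The names `ridge_fit` and `ridge_predict` are hypothetical.

```python
def ridge_fit(X, y, alpha):
    """Return weights w solving (X^T X + alpha * I) w = X^T y (no intercept).

    X is a list of feature rows, y a list of targets, alpha > 0 the penalty.
    """
    n = len(X[0])
    # Build the regularized normal equations as an augmented matrix [A | b].
    M = []
    for i in range(n):
        row = [sum(r[i] * r[j] for r in X) + (alpha if i == j else 0.0)
               for j in range(n)]
        row.append(sum(r[i] * t for r, t in zip(X, y)))
        M.append(row)
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        pv = M[col][col]
        M[col] = [v / pv for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [M[i][-1] for i in range(n)]


def ridge_predict(X, w):
    """Predict one value per feature row as the dot product with w."""
    return [sum(a * b for a, b in zip(row, w)) for row in X]
```

In practice the rows of `X` would be the pooled low-dimensional representations and `y` the measured burden values; the penalty `alpha` would typically be chosen by cross-validation.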

Self-Supervised Equivariant Regularization Reconciles Multiple Instance Learning: Joint Referable Diabetic Retinopathy Classification and Lesion Segmentation

Oct 12, 2022

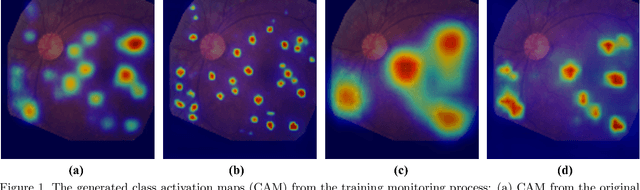

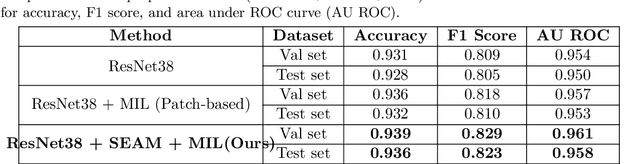

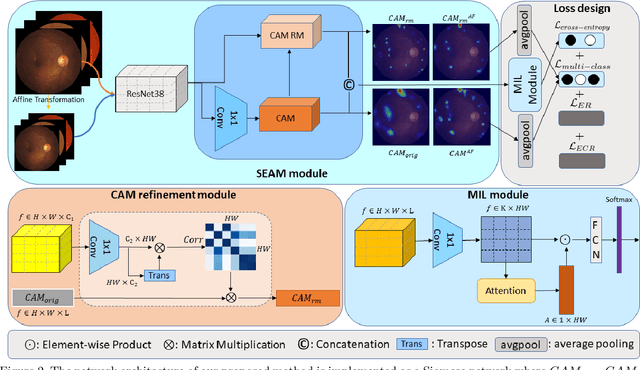

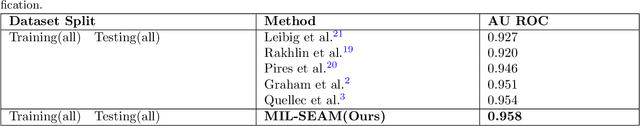

Abstract: Lesion appearance is a crucial clue for medical providers to distinguish referable diabetic retinopathy (rDR) from non-referable DR. Most existing large-scale DR datasets contain only image-level labels rather than pixel-based annotations. This motivates us to develop algorithms to classify rDR and segment lesions via image-level labels. This paper leverages self-supervised equivariant learning and attention-based multi-instance learning (MIL) to tackle this problem. MIL is an effective strategy to differentiate positive and negative instances, helping us discard background regions (negative instances) while localizing lesion regions (positive ones). However, MIL only provides coarse lesion localization and cannot distinguish lesions located across adjacent patches. Conversely, a self-supervised equivariant attention mechanism (SEAM) generates a segmentation-level class activation map (CAM) that can guide patch extraction of lesions more accurately. Our work aims at integrating both methods to improve rDR classification accuracy. We conduct extensive validation experiments on the EyePACS dataset, achieving an area under the receiver operating characteristic curve (AUROC) of 0.958, outperforming current state-of-the-art algorithms.
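Attention-based MIL, as mentioned above, weights each patch (instance) before pooling them into one image-level (bag) representation. The sketch below shows one common formulation, with attention scores wᵀtanh(V h) passed through a softmax; it is an illustration under that assumption rather than the paper's implementation, and the function name `attention_mil_pool` and the parameters `V` and `w` are hypothetical (in a real model they would be learned).

```python
import math


def attention_mil_pool(instances, V, w):
    """Pool K instance vectors into one bag vector using attention weights.

    instances: list of K feature vectors, each of length D
    V:         L x D projection matrix (list of L rows)
    w:         length-L scoring vector
    Returns (bag_vector, attention_weights).
    """
    scores = []
    for h in instances:
        hidden = [math.tanh(sum(v * x for v, x in zip(row, h))) for row in V]
        scores.append(sum(wi * ui for wi, ui in zip(w, hidden)))
    # Numerically stable softmax over the instance scores.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    norm = sum(exps)
    weights = [e / norm for e in exps]
    # The bag representation is the attention-weighted sum of instances.
    dim = len(instances[0])
    bag = [sum(a * h[d] for a, h in zip(weights, instances)) for d in range(dim)]
    return bag, weights
```

Instances with high attention weights can be read as candidate lesion patches, while low-weight instances behave like background; this is the coarse, patch-level localization the abstract describes.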

Predicting Tau Accumulation in Cerebral Cortex with Multivariate MRI Morphometry Measurements, Sparse Coding, and Correntropy

Oct 20, 2021

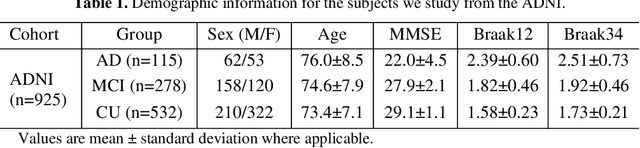

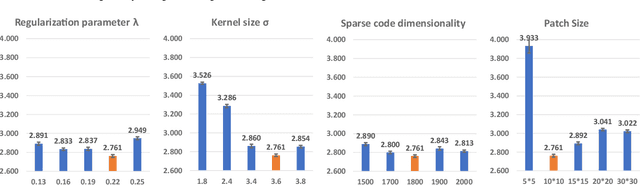

Abstract: Biomarker-assisted diagnosis and intervention in Alzheimer's disease (AD) may be the key to prevention breakthroughs. One of the hallmarks of AD is the accumulation of tau plaques in the human brain. However, current methods to detect tau pathology are either invasive (lumbar puncture) or quite costly and not widely available (tau PET). In our previous work, structural MRI-based hippocampal multivariate morphometry statistics (MMS) showed superior performance as an effective neurodegenerative biomarker for preclinical AD, and Patch Analysis-based Surface Correntropy-induced Sparse coding and max-pooling (PASCS-MP) showed an excellent ability to generate low-dimensional representations with strong statistical power for brain amyloid prediction. In this work, we apply this framework together with ridge regression models to predict tau deposition in Braak12 and Braak34 brain regions separately. We evaluate our framework on 925 subjects from the Alzheimer's Disease Neuroimaging Initiative (ADNI). Each subject has one pair consisting of a PET image and an MRI scan, which were collected at about the same time. Experimental results suggest that the representations from our MMS and PASCS-MP have stronger predictive power and that their predicted Braak12 and Braak34 measures are closer to the real values than the measures derived from other approaches, such as hippocampal surface area and volume, and shape morphometry features based on spherical harmonics (SPHARM).
